3 players chessboard. (source: Slip on Flickr)

Why is the world’s most advanced AI used for cat videos, but not to help us live longer and healthier lives? Here, I’ll provide a brief history of AI in medicine, and the factors that may help it succeed where it has failed before.

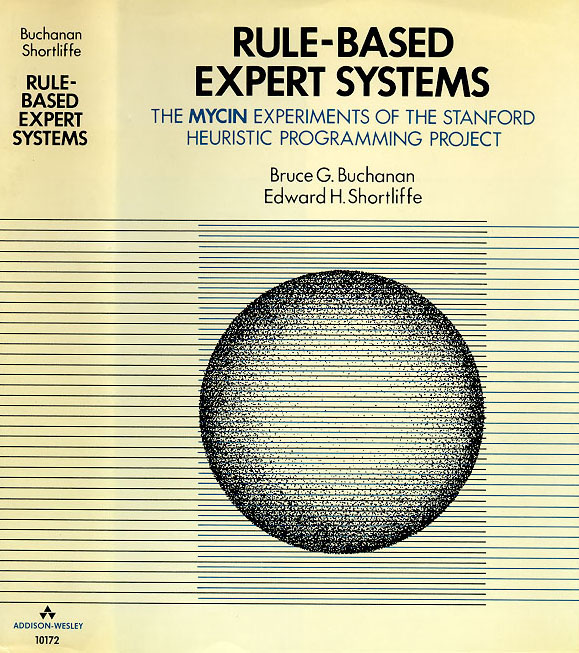

Imagine yourself as a young graduate student in Stanford’s Artificial Intelligence Lab, building a system to diagnose a common infectious disease. After years of sweat and toil, the day comes for the test: a head-to-head comparison with five of the top human experts in infectious disease. Your system squeezes a narrow victory over the first expert, winning by just 4%. It handily beats the second, third, and fourth doctors, and, against the fifth, it wins by an astounding 52%.

Would you believe such a system exists already? Would you believe it existed in 1979?

The system was the MYCIN project, and in spite of the excellent research results, it never made its way into clinical practice[1].

In fact, although we’re surrounded by fantastic applications of modern AI, particularly deep learning—self-driving cars, Siri, AlphaGo, Google Translate, computer vision—the effect on medicine has been nearly nonexistent. In the top cardiology journal, Circulation, the term “deep learning” appears only twice[2]. Deep learning has never been mentioned in the New England Journal of Medicine, The Lancet, BMJ, or even JAMA, where the work on MYCIN was published 37 years ago. What happened?

There are three central challenges that have plagued past efforts to use artificial intelligence in medicine: the label problem, the deployment problem, and fear around regulation. Before we get to those, let’s take a quick look at the state of medicine today.

The moral case for AI in health care

Medicine is about life and death. With such high stakes, one could ask: should we really be rocking the boat here? Why not just stick with existing, proven, clinical-grade algorithms?

Well, consider a few examples of the status quo:

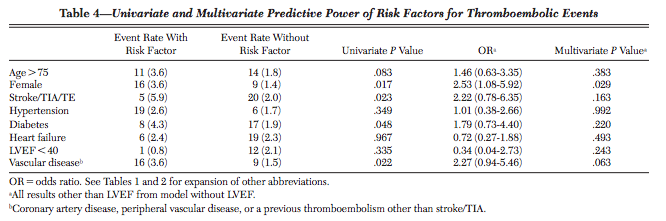

- The score that doctors use to prescribe your grandparents blood thinners, CHA2DS2-VASc, is accurate only 67% of the time. It was derived from a cohort that included only 25 people with strokes; out of eight tested predictor variables, only one was statistically significant[3].

- Google updates its search algorithm 550 times per year, but life-saving devices like ICDs are still programmed using simple thresholds—if heart rate exceeds X, SHOCK—and their accuracy is getting worse over time.

- Although cardiologists have invented a life-saving treatment for sudden cardiac death, our current algorithm to identify who needs that treatment will miss 250,000 of the 300,000 people who will die suddenly this year[4].

- Pressed for time, doctors can’t make sense of all the raw data being generated: “Most primary care doctors I know, if they get one more piece of data, they’re going to quit,” said Dr. Bob Wachter, a professor of medicine at the University of California, San Francisco.
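The gap in the sudden-cardiac-death example above can be restated as a sensitivity. This is only a back-of-the-envelope sketch using the two figures quoted in the list; the variable names are illustrative.

```python
# Figures quoted above: 300,000 sudden deaths per year, 250,000 of them missed.
sudden_deaths = 300_000
missed = 250_000

identified = sudden_deaths - missed
sensitivity = identified / sudden_deaths  # fraction of at-risk people flagged

print(f"identified: {identified:,} ({sensitivity:.1%} sensitivity)")
```

By these numbers, the current screening rule flags roughly one in six of the people who go on to die suddenly.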

To be clear, none of this means medical researchers are doing a bad job. Modern medicine is a host of miracles; it’s just unevenly distributed. Computer scientists can bring much to the table here. With tools like Apple’s ResearchKit and Google Fit, we can collect health data at scale; with deep learning, we can translate large volumes of raw data into insights that help both clinicians and patients take real actions.

To do that, we must solve three problems: one technical, one a matter of political economy, and one regulatory. The good news is that each category has new developments that may let AI succeed where it has failed before.

Problem #1: Health care is a label desert and the advent of one-shot learning

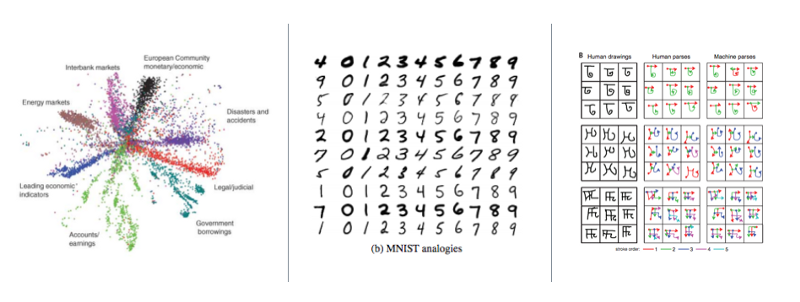

Modern artificial intelligence is data hungry. To make speech recognition on your Android phone accurate, Google trains a deep neural network on roughly 10,000 hours of annotated speech. In computer vision, ImageNet contains more than 1,034,908 hand-annotated images. These annotations, called labels, are essential to make techniques like deep learning work.

In medicine, each label represents a human life at risk.

For example, in our mRhythm Study with UCSF Cardiology, labeled examples come from people visiting the hospital for a procedure called cardioversion, a 400-joule electric shock to the chest that resets your heart rhythm. It can be a scary experience to go through. Many of these patients are gracious enough to wear a heart rate sensor (e.g., an Apple Watch) during the whole procedure in the hope of collecting data that may make life better for the next generation, but we know we’ll never get one million participants, and it would be unconscionable to ask.

Can AI work well in situations where we may have 1000x fewer labels than we’re used to? There’s already some promising work in this direction. First, it was unsupervised learning that sparked interest in deep learning from 2006 to 2009—namely, pre-training and autoencoders, which can find structure in data that’s completely unlabeled.

More recently, hybrid techniques such as semi-supervised sequence learning have established that you can make accurate predictions with less labeled data if you have a lot of unlabeled data.

The most extreme setting is one-shot learning, where the algorithm learns to recognize a new pattern after being given only one label. In Fei-Fei Li’s work on one-shot learning for computer vision, for example, she “factored” statistical models into separate probability distributions on appearance and position; could similar “factorings” of medical data let us save lives sooner? Recent work on Bayesian program learning (Toronto, MIT, NYU) and one-shot generalization in deep generative models (DeepMind) is promising in this regard.

Underlying many of these techniques is the idea that large amounts of unlabeled data may substitute for labeled data. Is that a real advance? Yes! With the proliferation of sensors, unlabeled data is now cheap. Some medical studies are making use of that already.
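To make the idea concrete, here is a minimal sketch of cluster-then-label, one of the simplest semi-supervised baselines. It is not the pre-training or sequence-learning methods mentioned above, and it runs on synthetic two-cluster data rather than anything medical. The helper names (`make_blobs`, `fit_centroids`, `predict`) and every number in it are assumptions chosen for illustration. The labeled set holds one deliberately unrepresentative example per class; the unlabeled data is then used to move the class centroids to where the data actually lives.

```python
import numpy as np

def make_blobs(n_per_class, rng):
    """Synthetic 2-D data: two Gaussian clusters standing in for two classes."""
    centers = np.array([[-3.0, 0.0], [3.0, 0.0]])
    x = np.concatenate([rng.normal(c, 1.0, size=(n_per_class, 2)) for c in centers])
    y = np.repeat([0, 1], n_per_class)
    return x, y

def predict(centroids, x):
    """Assign each row of x to the index of its nearest centroid."""
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

def fit_centroids(x_labeled, y_labeled, x_unlabeled, n_iter=20):
    """Seed one centroid per class from the labels, then refine with k-means
    updates computed on the unlabeled data only."""
    classes = np.unique(y_labeled)
    centroids = np.stack([x_labeled[y_labeled == c].mean(axis=0) for c in classes])
    for _ in range(n_iter):
        assign = predict(centroids, x_unlabeled)
        for k in range(len(classes)):
            if np.any(assign == k):
                centroids[k] = x_unlabeled[assign == k].mean(axis=0)
    return centroids

rng = np.random.default_rng(0)
x_unlabeled, _ = make_blobs(500, rng)   # labels discarded: cheap, sensor-style data
x_test, y_test = make_blobs(200, rng)

# One labeled example per class, placed far from the true cluster centers.
x_labeled = np.array([[-0.5, 2.0], [0.5, -2.0]])
y_labeled = np.array([0, 1])

# Baseline: classify by nearest labeled example, ignoring unlabeled data.
acc_labels_only = (predict(x_labeled, x_test) == y_test).mean()

# Semi-supervised: same two labels, plus 1,000 unlabeled points.
centroids = fit_centroids(x_labeled, y_labeled, x_unlabeled)
acc_semi = (predict(centroids, x_test) == y_test).mean()

print(f"labels only:         {acc_labels_only:.3f}")
print(f"labels + unlabeled:  {acc_semi:.3f}")
```

The two labels only name the clusters; the unlabeled points do the work of locating them. Real medical data will rarely separate this cleanly, so this should be read as an illustration of the principle rather than a recipe.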

For Cardiogram, that involved building an Apple Watch app to visualize heart rates, which has collected about 8.5 billion unlabeled data points from HealthKit. We then partnered with the UCSF Health eHeart project to collect medical-grade labels[5]. From within the app, users share digital biomarkers (like resting heart rate) with researchers at UCSF.

Researchers at USC are also working on this problem; using deep computational phenotyping, they have trained a neural network to embed EMR data. The American Sleep Apnea Association has partnered with IBM Watson to develop a ResearchKit app where anybody can contribute their sleep data.

Problem #2: Deployment and the outside-in principle

Let’s say you’ve built a breakthrough algorithm. What happens next? The experience of MYCIN showed that research results aren’t enough; you need a path to deployment.

Historically, deployment has been difficult in fields like health care, education, and government. Electronic Medical Records (EMRs) have no real equivalent to an “App Store” that would let an individual doctor install a new algorithm[6].

Since EMR software is generally installed on-premises and sold in multi-year cycles to a centralized procurement team within each hospital system, and since new features are driven by federally mandated checklists, you need a clear return on investment (ROI) to get an innovation through the heavyweight procurement process.

Unfortunately, hospitals tend to prioritize what they can bill for, which brings us to our ossified and dirigiste fee-for-service system. Under this payment system, the hospital bills for each individual activity; in the case of a misdiagnosis, for example, they may perform and bill for follow-up tests. Perversely, that means a better algorithm may actually reduce the revenue of the hospitals expected to adopt it. How on earth would that ever fly?

Even worse, since a fee is specified for each individual service, innovations are non-billable by default. While in the abstract, a life-saving algorithm such as MYCIN should be adopted broadly, when you map out the concrete financial incentives, there’s no realistic path to deployment.

Fortunately, there is a change we can believe in. First, we don’t ship software anymore; we deploy it instantly. Second, the Affordable Care Act (ACA) creates the ability for startups to own risk end-to-end: full-stack startups for health care and the unbundling of risk.

Instant deployment

Imagine yourself as an AI researcher building Microsoft Word’s spell checker in the 1990s. Your algorithm would be limited to whatever data you could collect in the lab; if you discovered a breakthrough, it would take years to ship. Now imagine yourself 10 years later, working on a spelling correction algorithm based on billions of search queries, clicks, page views, web pages, and links. Once it’s ready, you can deploy it instantly. That is a 100x faster feedback loop. More than any single algorithmic advance, systems like Google search perform so well because of this fast feedback loop.

The same thing is quietly becoming possible in medicine. Most of us have a supercomputer in our pocket. If you can find a way to package up artificial intelligence within an iOS or Android app, the deployment process shifts from an enterprise sales cycle to an app store update.

The unbundling of risk

The ACA has created alternatives to fee-for-service. For example, in bundled payments, Medicare pays a fixed fee for a surgery, and if the patient needs to be re-hospitalized within 90 days, the original provider is on the hook financially for the cost. That flips the incentives: if you invent a new AI algorithm that is 10% better at predicting risk (or better, preventing it), that will drive the bottom line for a hospital. There are many variants of fee-for-value being tested now: Accountable Care Organizations, risk-based contracting, full capitation, MACRA and MIPS, and more.
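A small worked example shows how the incentive flips. Every dollar amount and rate below is made up for illustration, and the sketch assumes that a "10% better" algorithm translates into a 10% relative drop in readmissions, which is a simplification. `expected_net` and `gain_from_algorithm` are hypothetical helpers, not part of any payment model's actual rules.

```python
def expected_net(base_payment, readmit_rate, readmit_payment, readmit_cost):
    """Expected payment minus readmission cost for one surgical episode."""
    return base_payment + readmit_rate * (readmit_payment - readmit_cost)

# All dollar amounts and rates are hypothetical.
SURGERY_PAYMENT = 30_000
READMIT_COST = 12_000         # what a readmission costs the hospital to deliver
READMIT_FFS_PAYMENT = 15_000  # what fee-for-service pays for that readmission

baseline_rate = 0.20
improved_rate = baseline_rate * 0.9  # assumed 10% relative drop in readmissions

def gain_from_algorithm(readmit_payment):
    """Change in expected net per episode when the readmission rate improves."""
    before = expected_net(SURGERY_PAYMENT, baseline_rate, readmit_payment, READMIT_COST)
    after = expected_net(SURGERY_PAYMENT, improved_rate, readmit_payment, READMIT_COST)
    return after - before

ffs_gain = gain_from_algorithm(READMIT_FFS_PAYMENT)  # readmissions are billable
bundled_gain = gain_from_algorithm(0)                # provider absorbs the cost

print(f"fee-for-service: {ffs_gain:+.0f} per episode")
print(f"bundled payment: {bundled_gain:+.0f} per episode")
```

With these invented numbers, the same improvement costs the hospital about 60 dollars per episode under fee-for-service and earns it about 240 under a bundled payment. The sign change, not the magnitude, is the point.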

These two things enable outside-in approaches to health care: build up a user base outside the core of the health care system (e.g., outside the EMR), but take on risk for core problems within the health care system, such as re-hospitalizations. Together, these two factors let startups solve problems end-to-end, much the same way Uber solved transportation end-to-end rather than trying to sell software to taxi companies.

Only partially a problem, #3: regulation and fear

Many entrepreneurs and researchers fear health care because it’s highly regulated. The perception is that many regulatory regimes are just an expensive way to say “no” to new ideas.

And that perception is sometimes true: Certificates of Need, risk-based capital requirements, over-burdensome reporting, fee-for-service; these things sometimes create major barriers to new entrants and innovations, largely to our collective detriment.

But regulations can also be your ally. Take HIPAA. If the authors of MYCIN wanted to make it possible to run their algorithm on your medical record in 1978, there was no way to do that because the medical record was owned by the hospital, not the patient. HIPAA, passed in 1996, flipped the ownership: if the patient gives consent, the hospital is required to send the record to the patient or a designee. Today those records are sometimes faxes of paper copies, but efforts like Blue Button, FHIR, and meaningful use are moving them toward machine-readable formats. As my friend Ryan Panchadsaram says, HIPAA often says you can.

Closing thoughts

If you’re a skilled AI practitioner currently sitting on the sidelines, now is your time to act. The problems that have kept AI out of health care for the last 40 years are now solvable. And your impact is large.

Modern research has become so specialized that our notion of impact is sometimes siloed. A world-class clinician may be rewarded for inventing a new surgery; an AI researcher may get credit for beating the world record on MNIST. When two fields cross, there can sometimes be fear, misunderstanding, or culture clashes.

We’re not unique in history. By 1944, the foundations of quantum physics had been laid, and their power would be demonstrated dramatically a year later by the detonation of the first atomic bomb. After the war, a generation of physicists turned their attention to biology. In the 1944 book What is Life?, Erwin Schrödinger referred to a sense of noblesse oblige that prevented researchers in disparate fields from collaborating deeply, and “beg[ged] to renounce the noblesse”:

A scientist is supposed to have a complete and thorough knowledge, at first hand, of some subjects and, therefore, is usually expected not to write on any topic of which he is not a master. This is regarded as a matter of noblesse oblige. For the present purpose, I beg to renounce the noblesse, if any, and to be freed of the ensuing obligation. My excuse is as follows:

We have inherited from our forefathers the keen longing for unified, all-embracing knowledge. The very name given to the highest institutions of learning reminds us, that from antiquity and throughout many centuries, the universal aspect has been the only one to be given full credit. But the spread, both in width and depth, of the multifarious branches of knowledge during the last hundred odd years has confronted us with a queer dilemma. We feel clearly that we are only now beginning to acquire reliable material for welding together the sum total of all that is known into a whole; but, on the other hand, it has become next to impossible for a single mind fully to command more than a small specialized portion of it.

Over the next 20 years, the field of molecular biology unfolded. Schrödinger himself used quantum mechanics to predict that our genetic material had the structure of an “aperiodic crystal.” Meanwhile, Luria and Delbrück (an M.D. and a physics Ph.D., respectively) discovered the genetic mechanism by which viruses replicate. In the next decade, Watson (a biologist) and Crick (a physicist) used X-ray diffraction images from Rosalind Franklin (a chemist) to discover the double-helix structure of DNA. Both pairs, Luria and Delbrück and Watson and Crick, would go on to win Nobel Prizes for those interdisciplinary collaborations. (Franklin herself had passed away by the time the latter prize was awarded.)

If AI in medicine were a hundredth as successful as physics was in biology, the impact would be astronomical. To return to the example of the blood-thinner score CHA2DS2-VASc, there are about 21 million people on blood thinners worldwide; if a third of those don’t need them, then we’re causing 266,000 extra brain hemorrhages[7]. And that’s just one score for one disease. But we can only solve these problems if we beg to renounce the noblesse, as generations of scientists have done before us. Lives are at stake.