A million rows isn’t cool. You know what’s cool? A billion rows.

Changing your frame of reference when starting with SQL on Hadoop.

If you’re just getting started doing analytic work with SQL on Hadoop, a table with a million rows might seem like a good starting point for experimentation. Isn’t that a lot of data? While you can exercise the features of a traditional database with a million rows, for Hadoop it’s not nearly enough. Think billions of rows instead.

Let’s look at the ways a million-row table falls short. Understanding the data volumes involved with big data can help you avoid going down unproductive pathways based on misleading assumptions.

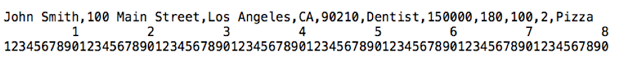

With a million-row table, every byte in each row represents a megabyte of total data volume. Let’s say your table represents people and has fields for name, address, occupation, salary, height, weight, number of children, and favorite food. Here’s what a sample field might look like, with a scale underneath to illustrate length:

This particular record takes up 78 characters, including the comma separators. A back-of-the-envelope calculation suggests that, if this is an average row, we’ll end up with about 78 megabytes of data in the table. (And don’t recycle that envelope just yet — doing analytics with Hadoop, you’ll do a lot of rough estimates like this to sanity-check your expectations about performance and scalability.)

Let’s do a thought experiment using a query that does a full-table scan, something like:

SELECT AVG(salary) FROM million_row_table;

This query considers every row in the table, so there are no shortcuts to avoid reading all 78 megabytes. Is 78 MB a lot of data to process with Hadoop on a parallel cluster of computers? Not at all — in fact, it doesn’t hit the minimum threshold to ensure any parallel execution at all for the query: the whole thing can fit into a single data block.

To see how our understanding of disk storage has evolved, let’s take a brief spin back at block sizes throughout history:

| Commodore 64 | Disk blocks were 256 bytes. (Am I dating myself by admitting that I used to edit them in hex?) |

| Oracle Database | Default block size has traditionally been 4 KB. |

| MySQL Database | Default block size for InnoDB storage engine is 16 KB. |

| Dedicated data warehousing appliances | 64 MB is a popular block size. (That’s a huge jump from 16 KB) |

| Hadoop | Typical block size for HDFS is 128 MB, for example in recent versions of the CDH distro from Cloudera. |

Even an experienced data wrangler might be surprised to realize that today on Hadoop, the million-row table we’re talking about doesn’t even fill up a single data block.

That fact is significant because when breaking down the work to execute SQL queries in parallel on Hadoop, the smallest unit of measure is the block. If you have a 5-node cluster, how many nodes are needed to process 78 MB? Just one. How about if you have a 10-node cluster? All the I/O can still be done by one node. Sure, it’s nice to have all that spare capacity in the cluster, but the actual work of your query doesn’t get done any faster.

Of course, you can split up the original data into more than one file. A file that’s smaller than 128 MB will just consist of one HDFS block — just a smaller-than-normal one. But keep in mind that a Hadoop cluster with fast disk drives can read a 128 MB block in the blink of an eye. If you split up the million-row table into multiple files, you can give the other nodes in the cluster something to do, but performance-wise, it’s still the blink of an eye. The overhead to send requests back and forth on the network roughly balances out the performance benefit from doing such a small query in parallel across multiple nodes.

Therefore, however you slice it, our hypothetical million-row table doesn’t pose a good challenge for interactive SQL on Hadoop. You won’t learn much about performance or scalability with it. You won’t take advantage of the capabilities of your Hadoop cluster. You won’t get useful performance numbers running benchmark queries against it.

The preceding discussion shows how even in the best and simplest case, this million-row table doesn’t come close to the volume we normally deal with for big data. Let’s continue the thought experiment by looking at how this volume of data would factor into more realistic scenarios. (Spoiler: it looks even less substantial when you start applying other best practices such as binary file formats and partitioning.)

In practice, you would store your data in an efficient binary format such as Parquet, instead of as a bulky text file with millions of commas and requiring six bytes to encode a six-figure salary. In Parquet, the numbers are compacted in binary format, repeated values are encoded to minimize repetition, columns with a modest number of different values are encoded using numeric IDs, and the result is compressed one final time at the end. The original 78 MB of text might be packed down to 20-30 MB, or even less. Now you would need tens of millions of rows to get close to filling up an HDFS block. Remember, the ultimate goal is for the data to span multiple blocks, to give the cluster a worthwhile amount of work to do in parallel. That goal would be even harder to achieve because, to take advantage of all this encoding and compression, Parquet data blocks are typically 256 MB, 512 MB, or even 1 GB.

For SQL queries in Hadoop, most people experiment with partitioned tables. Partitioning physically divides the data — for example: by year, month, day — resulting in smaller individual data files. For all the same reasons why a million rows isn’t very much data for a regular table, a million rows also isn’t very much for a partition in a partitioned table.

Now, I hope anyone with a million-row table is not feeling bad. You can still use them quite well as part of big data analytics, just in the appropriate context.

For example, maybe you are a librarian with a million items in your catalog, or maybe you operate a factory that has produced a million items, or maybe you are a successful business with a million customers. The key is to find some more granular data set (in data warehousing terms, a dimension table) to correlate against the million-row table.

The library analyzes every reservation, checkout, and book return to optimize their purchasing process and the inventory for each branch. The factory records information about every component and every step in the manufacturing process, to find more efficient manufacturing methods with fewer defects. The business analyzes every purchase or other interaction from each customer to design a more personalized experience. All of this fine-grained data goes into tables with billions of rows, and that’s where you really see the benefits of interactive SQL queries on a Hadoop cluster.

When you start working with SQL on Hadoop and dealing with performance and scalability aspects of join queries, you’ll hear tuning advice regarding “big tables” and “small tables” involved in the query. Just remember, the million-row table that once seemed so impressive is probably the small table in this scenario.

Now, go out and look for some tables with billions of rows. With your SQL skills and some Hadoop infrastructure backing you, you’ll be able to handle them with ease.