AI’s dueling definitions

Why my understanding of artificial intelligence is different from yours.

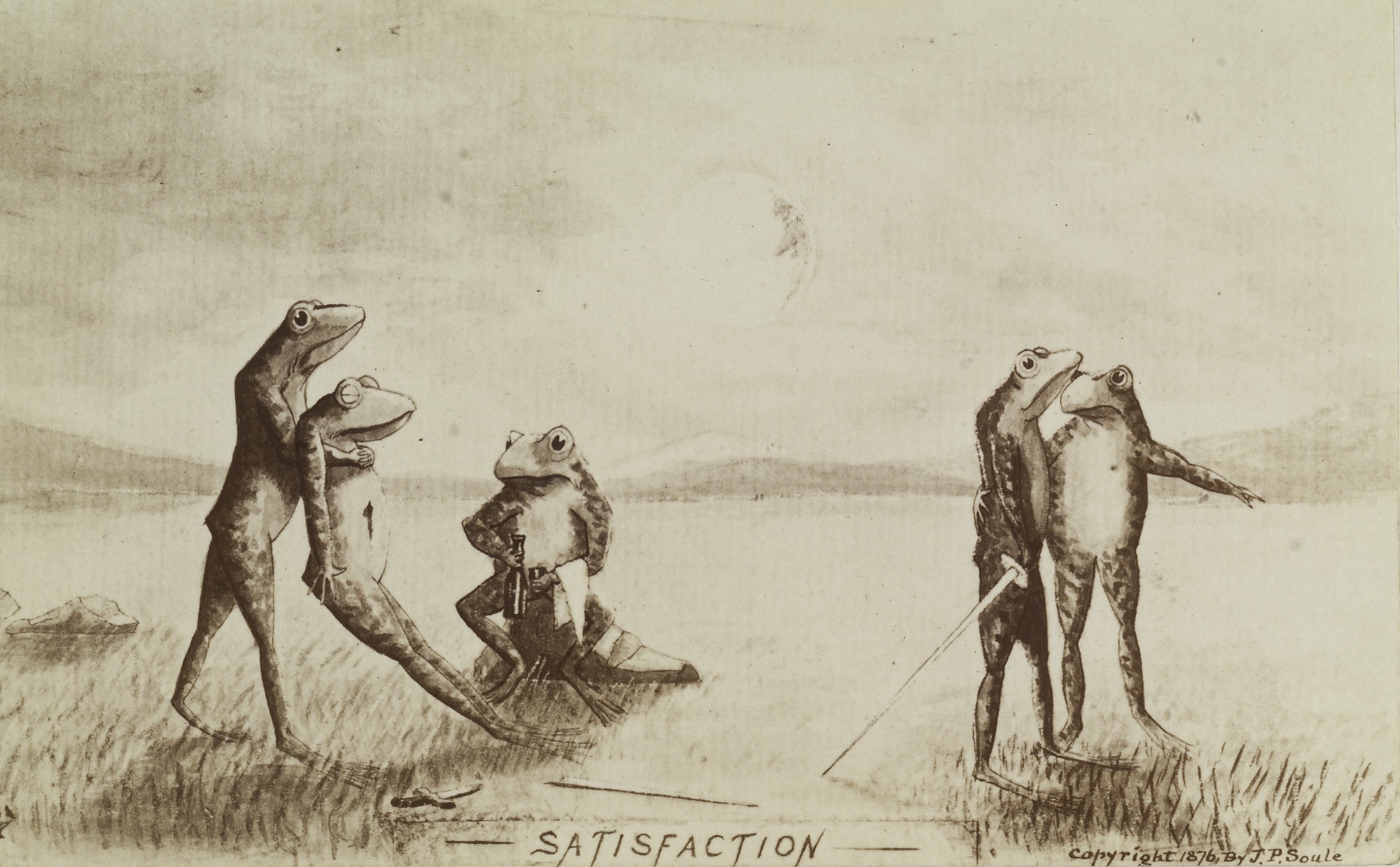

Frogs conducting a duel (source: John P. Soule)

Let me start with a secret: I feel self-conscious when I use the terms “AI” and “artificial intelligence.” Sometimes, I’m downright embarrassed by them.

Before I get into why, though, answer this question: what pops into your head when you hear the phrase artificial intelligence?

For the layperson, AI might still conjure HAL’s unblinking red eye, and all the misfortune that ensued when he became so tragically confused. Others jump to the replicants of Blade Runner or more recent movie robots. Those who have been around the field for some time, though, might instead remember the “old days” of AI — whether with nostalgia or a shudder — when intelligence was thought to primarily involve logical reasoning, and truly intelligent machines seemed just a summer’s work away. And for those steeped in today’s big-data-obsessed tech industry, “AI” can seem like nothing more than a high-falutin’ synonym for the machine-learning and predictive-analytics algorithms that are already hard at work optimizing and personalizing the ads we see and the offers we get — it’s the term that gets trotted out when we want to put a high sheen on things.

Like the Internet of Things, Web 2.0, and big data, AI is discussed and debated in many different contexts by people with all sorts of motives and backgrounds: academics, business types, journalists, and technologists. As with these other nebulous technologies, it’s no wonder the meaning of AI can be hard to pin down; everyone sees what they want to see. But AI also has serious historical baggage, layers of meaning and connotation that have accreted over generations of university and industrial research, media hype, fictional accounts, and funding cycles. It’s turned into a real problem: without a lot of context, it’s impossible to know what someone is talking about when they talk about AI.

Let’s look at one example. In his 2004 book On Intelligence, Jeff Hawkins confidently and categorically states that AI failed decades ago. Meanwhile, the data scientist John Foreman can casually discuss the “AI models” being deployed every day by data scientists, and Marc Andreessen can claim that enterprise software products have already achieved AI. It’s such an overloaded term that all of these viewpoints are valid; they’re just starting from different definitions.

Which gets back to the embarrassment factor: I know what I mean when I talk about AI (at least I think I do), but I’m also painfully aware of all the other interpretations and associations the term evokes. And I’ve learned over the years that the picture in my head is almost always radically different from that of the person I’m talking to. In other words, much of this confusion arises because different people rely on different primal archetypes of AI.

Let’s explore these archetypes, in the hope that making them explicit might provide the foundation for a more productive set of conversations in the future.

- AI as interlocutor. This is the concept behind both HAL and Siri: a computer we can talk to in plain language, and that answers back in our own lingo. Along with Apple’s personal assistant, systems like Cortana and Watson represent steps toward this ideal: they aim to meet us on our own ground, providing answers as good as — or better than — those we could get from human experts. Many of the most prominent AI research and product efforts today fall under this model, probably because it’s such a good fit for the search- and recommendation-centric business models of today’s Internet giants. This is also the version of AI enshrined in Alan Turing’s famous test for machine intelligence, though it’s worth noting that direct assaults on that test have succeeded only by gaming the metric.

- AI as android. Another prominent notion of AI views disembodied voices, however sophisticated their conversational repertoire, as inadequate: witness the androids from movies like Blade Runner, I, Robot, Alien, The Terminator, and many others. We routinely transfer our expectations from these fictional examples to real-world efforts like Boston Dynamics’ (now Google’s) Atlas, or SoftBank’s newly announced Pepper. For many practitioners and enthusiasts, AI simply must be mechanically embodied to fulfill the true ambitions of the field. While there is a body of theory to motivate this insistence, the attachment to mechanical form seems more visceral, based on a collective gut feeling that intelligences must move and act in the world to be worthy of our attention. It’s worth noting that, just as recent Turing test results have highlighted the degree to which people are willing to ascribe intelligence to conversation partners, we also place unrealistic expectations on machines with human form.

- AI as reasoner and problem-solver. While humanoid robots and disembodied voices have long captured the public’s imagination, whether empathic or psychopathic, early AI pioneers were drawn to more refined and high-minded pursuits: playing chess, solving logical proofs, and planning complex tasks. In a much-remarked collective error, they mistook the tasks that were hardest for smart humans to perform (those that seemed by introspection to require the most intellectual effort) for those that would be hardest for machines to replicate. As it turned out, computers excel at these kinds of highly abstract, well-defined jobs. But they struggle at the things we take for granted, things that children and many animals perform expertly, such as smoothly navigating the physical world. The systems and methods developed for games like chess are completely useless for real-world tasks in more varied environments. Taken to its logical conclusion, though, this is the scariest version of AI for those who warn about the dangers of artificial superintelligence. The fear stems from a definition of intelligence as “an agent’s ability to achieve goals in a wide range of environments.” What if an AI were as good at general problem-solving as Deep Blue is at chess? Wouldn’t that AI be likely to turn those abilities to its own improvement?

- AI as big-data learner. This is the ascendant archetype, with massive amounts of data being inhaled and crunched by Internet companies (and governments). Just as an earlier age equated machine intelligence with the ability to hold a passable conversation or play chess, many current practitioners see AI in the prediction, optimization, and recommendation systems that place ads, suggest products, and generally do their best to cater to our every need and commercial intent. This version of AI has done much to propel the field back into respectability after so many cycles of hype and relative failure, partly because machine learning on big data is so profitable. But I don’t think the predominant machine-learning paradigms of classification, regression, clustering, and dimensionality reduction are rich enough to express the problems that a sophisticated intelligence must solve. None of this has stopped AI from being used as a marketing label: despite the lingering stigma, the term is reclaiming its marketing mojo.

This list is not exhaustive. Other conceptualizations of AI include the superintelligence that might emerge (through mechanisms never made clear) from a sufficiently complex network like the Internet, and the intelligence that might result from whole-brain emulation (i.e., mind uploading).

Each archetype is embedded in a deep mesh of associations, assumptions, and historical and fictional narratives that work together to suggest the technologies most likely to succeed, the potential applications and risks, the timeline for development, and the “personality” of the resulting intelligence. I’d go so far as to say that it’s impossible to talk and reason about AI without reference to some underlying characterization. Unfortunately, even sophisticated folks who should know better are prone to switching mid-conversation from one version of AI to another, resulting in arguments that descend into contradiction or nonsense. This is one reason that much AI discussion is so muddled — we quite literally don’t know what we’re talking about.

For example, some of the confusion about deep learning stems from it being placed in multiple buckets: the technology has proven itself successful as a big-data learner, but this achievement leads many to assume that the same techniques can form the basis for a more complete interlocutor, or the basis of intelligent robotic behavior. This confusion is spurred by the Google mystique, including Larry Page’s stated drive for conversational search.

It’s also important to note that there are possible intelligences that fit none of the most widely held stereotypes: that are not linguistically sophisticated; that do not possess a traditional robot embodiment; that are not primarily goal driven; and that do not sort, learn, and optimize via traditional big data.

Which of these archetypes do I find most compelling? To be honest, I think they all fall short in one way or another. In my next post, I’ll put forth a new conception: AI as model-building. While you might find yourself disagreeing with what I have to say, I think we’ll at least benefit from having this debate explicitly, rather than talking past each other.