Analysis without boundaries

Apache Arrow makes it possible to use multiple languages and heterogeneous data infrastructure.

Passport. (source: hjl on Flickr)

We’re living in the golden era of data analytics. With data more available than ever, companies have endless opportunities to process and analyze data to extract tremendous business value. A wide range of open source big data technologies have been developed to support these use cases, and companies are increasingly leveraging heterogeneous environments that include a variety of data stores (Apache HDFS, Apache HBase, MongoDB, Elasticsearch, Apache Kudu, etc.), execution engines (Apache Spark, Apache Drill, Apache Impala, etc.), and languages (SQL, Python/Pandas, R, Java, etc.).

Heterogeneous data infrastructure requires that multiple processes be able to share data. Traditionally, that happens in one of two ways:

- APIs. Different systems can share data through APIs. For example, Drill uses the HBase API to read records from HBase, and Spark uses the Apache Kafka API to read records from Kafka.

- Files. Different systems can share data through common file formats optimized for analytics, such as Apache Parquet. For example, many companies use Spark to prepare data, and persist it for later analysis. Then, a SQL engine, such as Drill or Impala, is used to make that data available to business analysts using a BI tool.

While APIs enable a real-time exchange of data, they suffer from several problems. First, serialization and deserialization overheads are very high, and in many cases they become the main bottleneck in the end-to-end application or workflow. Second, because there is no standard data representation, the developers of each system must build an entirely custom integration with all the other systems.

Parquet, meanwhile, has emerged in recent years as the de-facto standard file format in the big data ecosystem. Parquet files are self-describing, and data is encoded in a columnar structure, resulting in excellent compression and performance for analytical workloads. In these workloads, the user often requires only a subset of the columns, or fields, in the data set, and Parquet allows the system to read only the necessary columns from disk.
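To make the column-pruning idea concrete, here is a minimal sketch using only the Python standard library. It is not the Parquet format or any Parquet API: the layout (column chunks followed by a JSON footer) and the names `write_columnar` and `read_columns` are hypothetical, chosen only to show why a self-describing columnar file lets a reader skip the columns it does not need.

```python
import io
import json
import struct
from array import array


def write_columnar(columns):
    """Serialize {name: list of floats} as column chunks plus a JSON footer.

    Hypothetical toy layout: [chunk 0][chunk 1]...[footer JSON][footer length].
    """
    buf = io.BytesIO()
    footer = {}
    for name, values in columns.items():
        chunk = array("d", values).tobytes()
        # The footer records where each column's bytes live in the file.
        footer[name] = {"offset": buf.tell(), "length": len(chunk)}
        buf.write(chunk)
    footer_bytes = json.dumps(footer).encode("utf-8")
    buf.write(footer_bytes)
    buf.write(struct.pack("<I", len(footer_bytes)))
    return buf.getvalue()


def read_columns(data, wanted):
    """Read only the requested columns; return (columns, bytes_touched)."""
    (footer_len,) = struct.unpack("<I", data[-4:])
    footer = json.loads(data[-4 - footer_len:-4].decode("utf-8"))
    out = {}
    touched = 4 + footer_len  # the footer is always read
    for name in wanted:
        meta = footer[name]
        chunk = data[meta["offset"]:meta["offset"] + meta["length"]]
        col = array("d")
        col.frombytes(chunk)
        out[name] = col.tolist()
        touched += meta["length"]
    return out, touched


table = {
    "price": [9.5, 12.0, 3.25],
    "quantity": [2.0, 1.0, 10.0],
    "discount": [0.0, 0.1, 0.0],
}
blob = write_columnar(table)

# Only the "price" chunk and the footer are touched; the other two
# columns are never decoded.
cols, touched = read_columns(blob, ["price"])
```

In a row-oriented layout the same query would have to scan every record, because the values of one column are interleaved with all the others.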

Building on the success of Parquet, maintainers of popular open source projects focused on data storage, processing, and analysis (Drill, Impala, Cassandra, Kudu, Spark, Parquet, etc.) recently announced Apache Arrow, a new project that enables columnar in-memory data representation and execution. From a technical standpoint, Arrow is a specification for storing data in memory in a columnar structure as well as a set of libraries that implement that specification. The Arrow format provides excellent CPU cache locality and the ability to leverage vectorized (i.e., SIMD) operations in Intel CPUs.
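As a rough illustration of what a columnar in-memory structure means, the sketch below pivots a few records into one contiguous buffer per field, with a validity bitmap marking nulls. It uses only the Python standard library and does not call the Arrow libraries; the class name `Int64Column` and the sample data are assumptions made for this example.

```python
from array import array


class Int64Column:
    """Toy columnar array: contiguous 64-bit values plus a validity bitmap."""

    def __init__(self, values):
        self.length = len(values)
        # Values sit back to back in one buffer; nulls get a placeholder 0.
        self.values = array("q", (0 if v is None else v for v in values))
        # One bit per slot, least-significant bit first: 1 = valid, 0 = null.
        self.validity = bytearray((self.length + 7) // 8)
        for i, v in enumerate(values):
            if v is not None:
                self.validity[i // 8] |= 1 << (i % 8)

    def is_valid(self, i):
        return bool(self.validity[i // 8] & (1 << (i % 8)))

    def sum(self):
        # A single pass over one contiguous buffer. A native implementation
        # could run this kind of loop with SIMD instructions.
        return sum(v for i, v in enumerate(self.values) if self.is_valid(i))

    def to_list(self):
        return [v if self.is_valid(i) else None
                for i, v in enumerate(self.values)]


# Row-oriented input: one dict per record.
rows = [
    {"user_id": 1, "clicks": 10},
    {"user_id": 2, "clicks": None},
    {"user_id": 3, "clicks": 32},
]

# Pivot into columns: one buffer per field instead of one object per row.
columns = {
    name: Int64Column([r[name] for r in rows])
    for name in ("user_id", "clicks")
}
total_clicks = columns["clicks"].sum()
```

Because all the values of a field are adjacent in memory, an aggregate over `clicks` never touches the bytes of `user_id`, which is where the cache-locality benefit comes from.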

Arrow is now being integrated into a variety of systems and programming languages, and will serve as the foundation for the next generation of heterogeneous data infrastructure. While it is impossible to cover all the use cases that will be enabled by Arrow, let’s explore three use cases that will be supported soon:

Fast import/export of data frames in R and Python. Wes McKinney, creator of the Pandas library and one of the initial Arrow committers, and Hadley Wickham, chief scientist at RStudio, have added Arrow support to Python and R by creating Python and R bindings on top of the C++ Arrow library. Currently, only flat schemas are supported, but nested schemas are planned along with an API that will make them easy to manipulate. Python and R developers can export a data frame to an Arrow-encoded file (also known as Feather) on disk. This is accomplished with simple calls to write_feather and read_feather in R, or to feather.write_dataframe and feather.read_dataframe in Python:

R:

    library(feather)
    path <- "file.feather"
    write_feather(df, path)
    df <- read_feather(path)

Python:

    import feather
    path = 'file.feather'
    feather.write_dataframe(df, path)
    df = feather.read_dataframe(path)

For more details on how to get started with Arrow in Python and R, see:

- https://blog.rstudio.org/2016/03/29/feather/

- http://blog.revolutionanalytics.com/2016/05/feather-package.html

- http://wesmckinney.com/blog/feather-its-the-metadata/

High-performance Parquet readers. Parquet is now the de-facto standard file format in the big data ecosystem. For example, Parquet is the default file format for Impala, Drill and Spark SQL, most Hadoop users maintain their data in the Parquet format, and many systems can consume Parquet files. The Parquet and Arrow communities have joined forces to develop canonical, high-performance Parquet-to-Arrow readers (in C++ and Java) that will enable systems to process Parquet files significantly faster than what was previously possible. In addition, high-level languages like Python and R will gain first-class Parquet support through this initiative. These Parquet readers will support the entire Parquet standard, including nested data. The current implementation supports vectorized reading of columnar data in the parquet-cpp library. Flat schemas are trivial to read from there, and Python bindings are also available. Full nested conversion to the Arrow format is in the works in both C++ and Java.

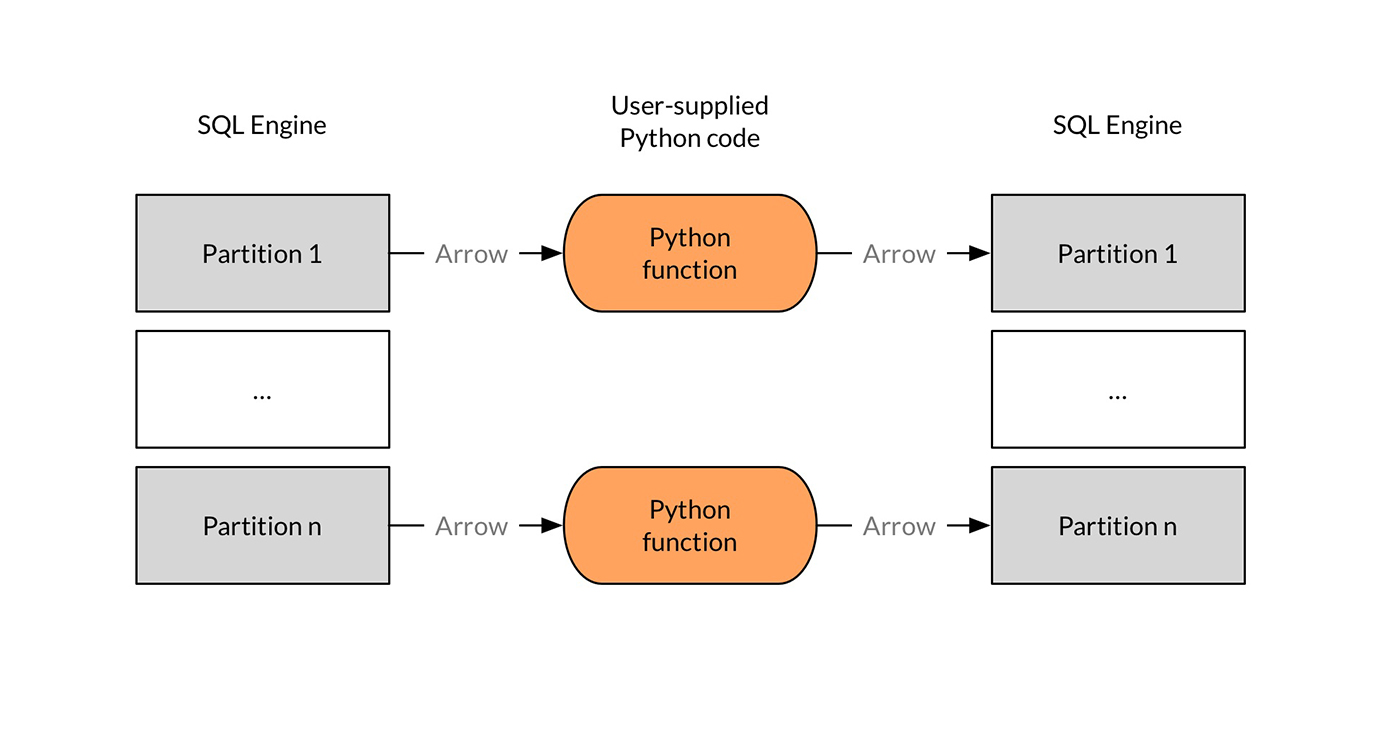

First-class support for Python, R and JavaScript in the big data ecosystem. High-level languages like Python, R, and JavaScript have gained tremendous popularity among developers and data scientists because they enable developers to be more productive. But when it comes to big data and distributed execution, these languages are currently second-class citizens. For example, Python-based Spark applications are significantly slower than Java/Scala-based Spark applications. Arrow is fixing that. For example, it will be possible to write Python UDFs in a SQL query with very little performance impact, and those UDFs will be portable across query engines. Architecturally, the user-defined Python code executes on batches of records, in Arrow format, as illustrated in the following diagram:
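The batch-at-a-time execution model can be sketched in plain Python. This is only an illustration under stated assumptions: `record_batches`, `apply_udf`, and `fahrenheit_udf` are hypothetical names, the "engine" is a simple loop, and the slices below copy data, whereas an Arrow-based engine would aim to hand the UDF a view of shared memory.

```python
from array import array


def record_batches(columns, batch_size):
    """Yield slices of the columns (a dict of equal-length arrays) as batches."""
    n = len(next(iter(columns.values())))
    for start in range(0, n, batch_size):
        # Note: slicing an array copies; this sketch is not zero-copy.
        yield {name: col[start:start + batch_size]
               for name, col in columns.items()}


def fahrenheit_udf(batch):
    """Hypothetical user-defined function: one call handles a whole batch."""
    return array("d", (c * 9.0 / 5.0 + 32.0 for c in batch["celsius"]))


def apply_udf(columns, udf, batch_size=2):
    """Engine-side loop: call the UDF once per batch, then concatenate."""
    out = array("d")
    calls = 0
    for batch in record_batches(columns, batch_size):
        out.extend(udf(batch))
        calls += 1
    return out, calls


table = {"celsius": array("d", [0.0, 100.0, 35.0, -40.0, 20.0])}
result, calls = apply_udf(table, fahrenheit_udf)
```

The point of the design is that the cost of crossing from the engine into user code is paid once per batch rather than once per record, so larger batches amortize it further.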

Work is currently underway to define the Arrow IPC mechanism, which will enable cross-language communication (Java, C++, Python, R). Shared memory and zero-copy data access remove virtually any overhead and make UDFs portable across systems.

These are, of course, just a few examples of what will soon be possible thanks to Apache Arrow. Having an industry-standard columnar in-memory data representation is the foundation for enabling heterogeneous data environments. With more than 15 of the leading big data technologies already on board, it’s just a matter of time until most of the world’s data is handled by Arrow. While you might not interact with Arrow directly, you will certainly feel the difference, whether that’s through embedding Python functions in your SQL statements, joining data across disparate sources at record speeds, or the many other use cases that Arrow unlocks.