Wildebeest Crossing (Kivuku) (source: By John Piekos, used with permission)

Modern applications are ingesting vast quantities of streaming event data from multiple sources in real time. The businesses behind those applications hope to use that data to benefit both the organization and its customers: better service, delightful user experiences, and more personalized interactions.

A common way to work toward that goal is streaming analytics, which captures data events from sources such as sensors, social feeds, billing systems, online gaming, digital advertising platforms, and connected devices. However, to create true business value, you have to integrate the streaming analytic results with applications, via an operational component. Organizations must act at the rate they gain insights, or they will miss business opportunities. Tying fast data streams to data analysis requires architectural choices that can affect the ability to meet technical and company goals.

In other words: analytics create insight, but thoughtful “That’s interesting!” results don’t improve the business process. You have to use the data gathered—with the help of analytics—to take action. Competition is fierce, with threats coming from both incumbents and non-traditional business models. For organizations using data for more than simple reporting or aggregation—isn’t that everyone?—streaming analytics integrated with transactions is an essential competency to acquire.

Integrating streaming analytics with transactions provides a number of significant benefits. For example, applications integrated into a data ingest pipeline can support the use of streaming analytics in user interactions. This can enable more interactive applications, which improve lifetime value, boost conversion rates, optimize resource consumption, and reduce waste. Developers can build software on simpler architectures, with fewer, more powerful components, when they integrate streaming analytics and transactions into the design. Finally, building in streaming analytics lets teams bring fully featured, robust applications to market faster than with build-your-own alternatives.

Macro trends in streaming analytics

Among the trends influencing this technology adoption are the shift from batch to streaming analytics; the increasing popularity and dominance of both public and private cloud computing; and the proliferation of data-producing edge devices such as wearables.

Each of those movements has been underway for a while, separately, but now they are coming together. Applications where they converge include personalization, real-time billing, and real-time monitoring, all based on fast data streams. However, there is little consensus on how these applications should be built and what technology is necessary to support them.

First, let’s be clear what we’re talking about. Fast data is shorthand for real-time data feeds from mobile, social networks, sensors, devices, interactions, observations, and large-scale software-as-a-service (SaaS) platforms.

Technology to support fast data is built on streaming analytics that are performed on live data in real time, with the analytic results used to inform actions—e.g., a transaction—running as a continuous process. While this isn’t a business or technical necessity for every use case, it’s a game-changer for some. Among the software requirements that can suggest the need for a fast data solution are:

- Technology feasibility via high-performance data management systems

- Availability of inexpensive cloud storage and compute resources

- User demand for better, faster, more accurate information

- Standard manufacturing process control that prefers continuous, pull-based automation, instead of large batch processing
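To make the "analyze live data, then act, continuously" idea from this section more concrete, here is a minimal Python sketch. It is an illustration only, not how any particular product works: the class `SlidingWindowCounter`, the function `process_stream`, the `"throttle"`/`"allow"` actions, and the threshold and window values are all hypothetical names and numbers chosen for the example. Each incoming event updates a per-key count over a sliding time window (the streaming analytic), and that count immediately drives a decision for the same event (the action).

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events per key over the last `window_seconds` seconds."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._events = {}  # key -> deque of event timestamps, oldest first

    def record(self, key: str, timestamp: float) -> int:
        """Add one event and return how many events are now in the window."""
        window = self._events.setdefault(key, deque())
        window.append(timestamp)
        # Evict timestamps that have fallen out of the window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window)


def process_stream(events, threshold=3, window_seconds=60.0):
    """Analyze each event as it arrives and decide on an action right away.

    `events` is an iterable of (key, timestamp) pairs in arrival order.
    """
    counter = SlidingWindowCounter(window_seconds)
    actions = []
    for key, timestamp in events:
        count = counter.record(key, timestamp)             # streaming analytic
        action = "throttle" if count > threshold else "allow"  # immediate action
        actions.append((key, timestamp, action))
    return actions
```

The point of the sketch is the shape of the loop: the decision is made per event, using an aggregate that is kept current as data arrives, rather than in a later batch report.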

Architectural choices

These trends in analytics create new business demands and opportunities, and also mean that the tech staff will need the tools to get the job done. There are architectural choices to be made to ensure applications can support streaming analytics and transactions on live data streams.

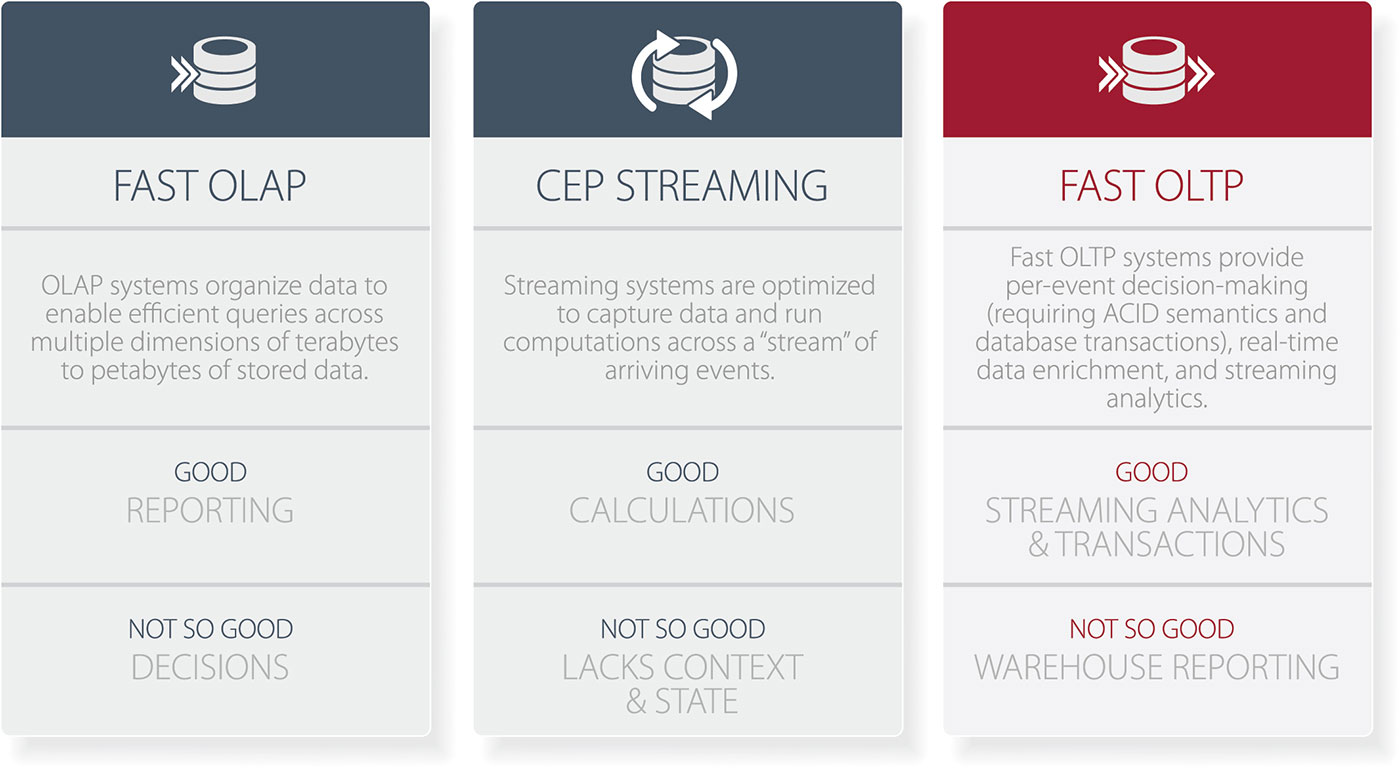

Online analytical processing (OLAP) systems focus on storing and reporting. They can provide real-time ingestion and fast reporting. However, these systems generally don’t support transactions, much less feed the results of reporting and streaming analytics immediately back into the application. If transactions are required, they are off-loaded to other database systems.

Streaming systems, which may include complex event processing (CEP) systems, focus on continuous reporting. A number of streaming products are distributed parallel query engines relying on a unified programming framework that can process both stored data (using batch processing) and streaming data (using stream processing). However, data persistence is off-loaded to other database systems, adding complexity and cost.

Operational database systems focus on application interaction. These online transaction processing (OLTP) products provide storage and query semantics for classic request-response-oriented applications that need to create, read, update, and delete records. However, analytics are off-loaded to other database systems, so OLTP systems on their own can’t automatically adapt on the basis of ongoing analysis.

Integrated solutions combine the best of all these options: they deliver data for long-term storage and analytics, enable streaming analysis for customization, and provide the low latency needed for applications with thousands of users. VoltDB, for example, provides a familiar relational SQL data model that supports interactive applications, streaming analytics, and actions on live data for applications that need to manage state and execute per-event transactions. It is an example of what Forrester calls a translytical database and Gartner dubs a hybrid transaction/analytical processing (HTAP) system. It supports applications that need to update, read, and write data in a per-event, record-oriented approach, not simply report against collected batches. With both streaming analytics and transactions baked in, it doesn’t require complex dependencies on other systems, such as the Apache ZKSC stack or ancillary databases.
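As a rough illustration of what a per-event transaction over a relational SQL model can look like, here is a small Python sketch. It uses the standard library's `sqlite3` module with an in-memory database purely as a stand-in; it is not VoltDB's API and says nothing about VoltDB's performance. The `usage` table, the `handle_event` function, and the 100 MB quota are hypothetical and exist only for this example. For each event, a single transaction updates the stored state, reads the running aggregate, and returns a decision.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create an in-memory database holding per-account running totals."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE usage ("
        " account TEXT PRIMARY KEY,"
        " events INTEGER NOT NULL,"
        " total_mb REAL NOT NULL)"
    )
    return conn


def handle_event(conn: sqlite3.Connection, account: str,
                 megabytes: float, quota_mb: float = 100.0) -> str:
    """Process one usage event: update state, read the aggregate, decide.

    All three steps run inside one transaction, so the decision is based
    on state that already includes this event.
    """
    with conn:  # commits on success, rolls back if an exception is raised
        # Make sure a row exists for the account, then fold in the event.
        conn.execute(
            "INSERT OR IGNORE INTO usage (account, events, total_mb)"
            " VALUES (?, 0, 0.0)",
            (account,),
        )
        conn.execute(
            "UPDATE usage SET events = events + 1, total_mb = total_mb + ?"
            " WHERE account = ?",
            (megabytes, account),
        )
        (total_mb,) = conn.execute(
            "SELECT total_mb FROM usage WHERE account = ?", (account,)
        ).fetchone()
    return "over_quota" if total_mb > quota_mb else "ok"
```

A caller would invoke `handle_event` once per incoming event and act on the returned string, for example by alerting the customer or changing a rate plan. The design choice worth noticing is that the analytic (the running total) and the action (the quota decision) live in the same transactional step instead of in separate systems.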

The bottom line: in fast data applications, analytics without actions have little value. If you’re building applications, make sure the analytics enable the business system to deliver the maximum value by using the data gathered, not just stuffing it away in a log file or other digital garage.

This post is a collaboration between O’Reilly Media and VoltDB. See our statement of independence.