Do no harm

In the software world, we’re often ignorant of the harms we do because we don’t understand what we’re working with.

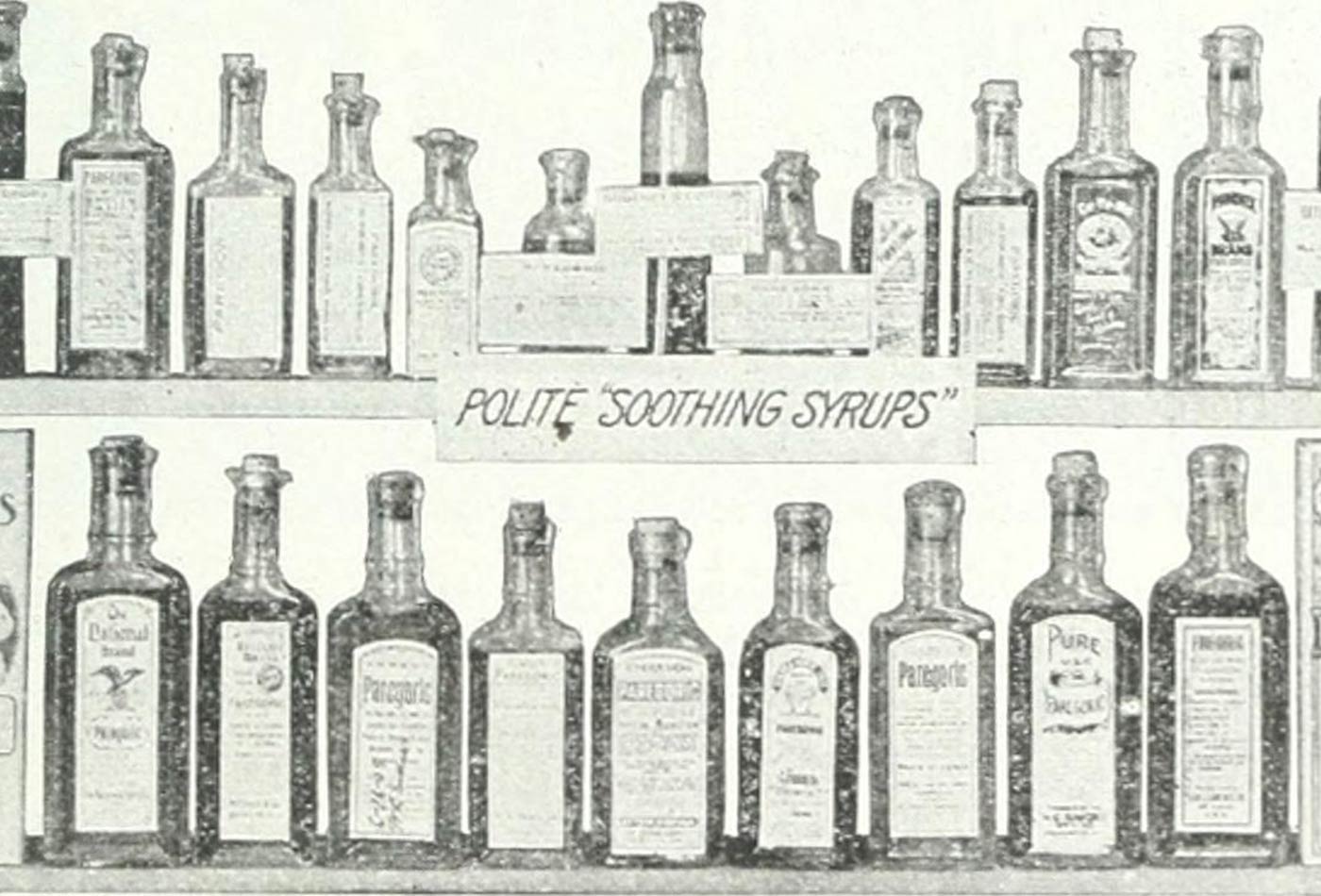

Image from "Nostrums and quackery," from "The Journal of the American Medical Association," 1914 (source: Internet Archive on Flickr)

“First, do no harm.” That phrase has become a touchstone in the many recent discussions of data ethics. And while it’s a nice old saying, usually traced back to the Hippocratic oath, we need to think about it a lot more carefully.

First, let’s take this statement at its word. “Do no harm.” But doctors did nothing but harm until 200 or so years ago. “You have a fever. That’s caused by bad blood. Let me take some of it out.” It was a race to see whether the patient died of blood loss from repeated bleedings before their immune system did its job. The immune system frequently lost. “You’ve got a broken leg. Here, let me cut it off. Without washing my hands first.” Most of the patients probably died from the ensuing infection. Mortality in childbirth had more than a little to do with doctors who just didn’t see the need to scrub down. “Syphilis? Let’s try mercury. Or arsenic. Or both.” A medical school professor I know tells me that the arsenic might have worked, if it didn’t kill you first. Mercury wasn’t good at anything but killing you slowly.

The problem wasn’t that the centuries of doctors before germ theory and antibiotics were uncaring, or in some way intended to do harm. On the whole, they were well-meaning and, for their time, fairly well educated. It’s that they didn’t understand “harm.” They were working with things (human bodies and their ailments) that they didn’t understand. Why would you wash your hands before surgery? What sort of idiot believes that invisible animals living on your skin will get into the patient and kill them?

That is the problem we face in the software world. Most of the people in our industry don’t want to do harm, but we’re often ignorant of the harms we do because we don’t understand what we’re working with: human psyches. So often, when we look back at the consequences of what we’ve done, we say “oh, that was obvious.” But at the time, it was no more obvious than bacteria.

I was reminded of this when I read about the connection between radicalization and engagement. When you see it spelled out like that, it’s a no-brainer. A generation of internet applications, certainly including YouTube and Facebook, were built to maximize engagement. How do you get people more engaged? Make them more passionate. Turn them into radicals. If you’re reading or watching something a little on the edge, what will make you come back for more? Certainly not a more moderate opinion. The voice of calm reason doesn’t count for much when you’re prowling the underside of the public internet. The DJs of talk radio have known that for years. After your first hit, you want a bigger fix.

But that’s all hindsight. Our foresight said, “Engagement makes healthy, strong communities. It nourishes well-informed, thoughtful people. It builds strong bodies seven ways.” I don’t think anyone at YouTube intended to build a tool for radicalization. (I do think many people at YouTube, Twitter, Facebook, and elsewhere were unwilling to face up to what they were doing, but that’s a somewhat different problem.) Like the doctors, we dosed the patients with mercury until they lost their minds.

What eventually saved the day for medicine was the realization that there was a process of discovery that could lead to better practices. Doctors who didn’t bleed their patients, and who washed their hands, had better outcomes. You can call this hindsight, or you can call it the scientific process, but whatever you call it, doctors learned how to learn from their mistakes. We have hindsight, and as the saying goes, it’s 20/20. The question that we in the technology industry face is: have we learned how to learn? Can we prevent the current generation of mistakes before they happen? We have a lot of data; we need to use that data to teach us how to do better.

Assigning blame isn’t a way forward. Instead, we need what’s been discussed in DevOps circles for years: an industry-wide blameless post-mortem, in which we study what happened and learn how to do better. That isn’t easy, but it will leave us in good shape for fixing our social media. To that end, here are some observations.

First, it’s easy to pretend surprise about the presence of hate groups on social media. But the writing was clearly on that wall. The comment stream for even the most innocuous posts on YouTube and other media quickly degenerated into a cesspool of hate speech. That hate speech wasn’t necessarily about race or religion or politics; it could just as easily be about your favorite band, sports team, or JavaScript framework. That symptom was there, and we didn’t read it. We just turned off comments on our blogs, and started ignoring the comments on the content we read. One important lesson: be aware of the symptoms. Don’t ignore them or write them off.

Second, think carefully about the possible consequences of new technology. There are plenty of unforeseen consequences that could have been foreseen, if anyone had bothered to look. Technologists are frequently poor at understanding how humans work—but most of us have real-world friends, and most of us know how to interact with those friends in healthy ways. Building more diverse teams helps; I’ve noticed that women are often much more aware of technology’s downside than men. This goes back to the 1960s: Bell Labs spent hundreds of millions of dollars developing the picturephone, only for it to flop when they tried to roll it out. Why? They didn’t listen to the women who were telling them that video gave obscene phone calls an entirely new dimension, and that was the last thing they wanted. Many “unknown unknowns” aren’t really unknown, if your eyes are open.

It’s very easy to get caught up in the hype around new technologies, like social media, and miss the things that could go wrong. We have all done that, myself included. Don’t just think about the “happy path”; think about how the technology could be abused, and what you can do to prevent the abuse. I’ve often said that every startup needs at least one skeptic, someone who doesn’t drink the Kool-Aid, someone who questions the assumptions. Be that person. It’s not an easy role, but it’s essential. It’s hard to believe that everyone at YouTube, Twitter, and Facebook missed the connection between the rise of malicious comments and hate speech. Unfortunately, it’s easier to believe that those who saw the connection were ignored, or remained silent because they knew they’d be ignored.

Build in off-switches, and be willing to turn a feature “off” when it is going wrong. Software developers have learned a lot from Toyota’s production system, including Kanban, but we haven’t adopted its andon cord, which lets anyone on the line stop production when they see something going wrong. We’ve gotten really good at rapid deployment, but we only roll back changes if they are technically harmful or if they hurt business metrics. We also need to roll back changes that are socially harmful. And to do so, we need the freedom to push that big red button without fear of repercussions.

Do no harm? Yes. But let’s see if we can do a better job of doing no harm than the doctors. Medicine took centuries, if not millennia, to learn from its mistakes. But it did learn. We need to do the same.