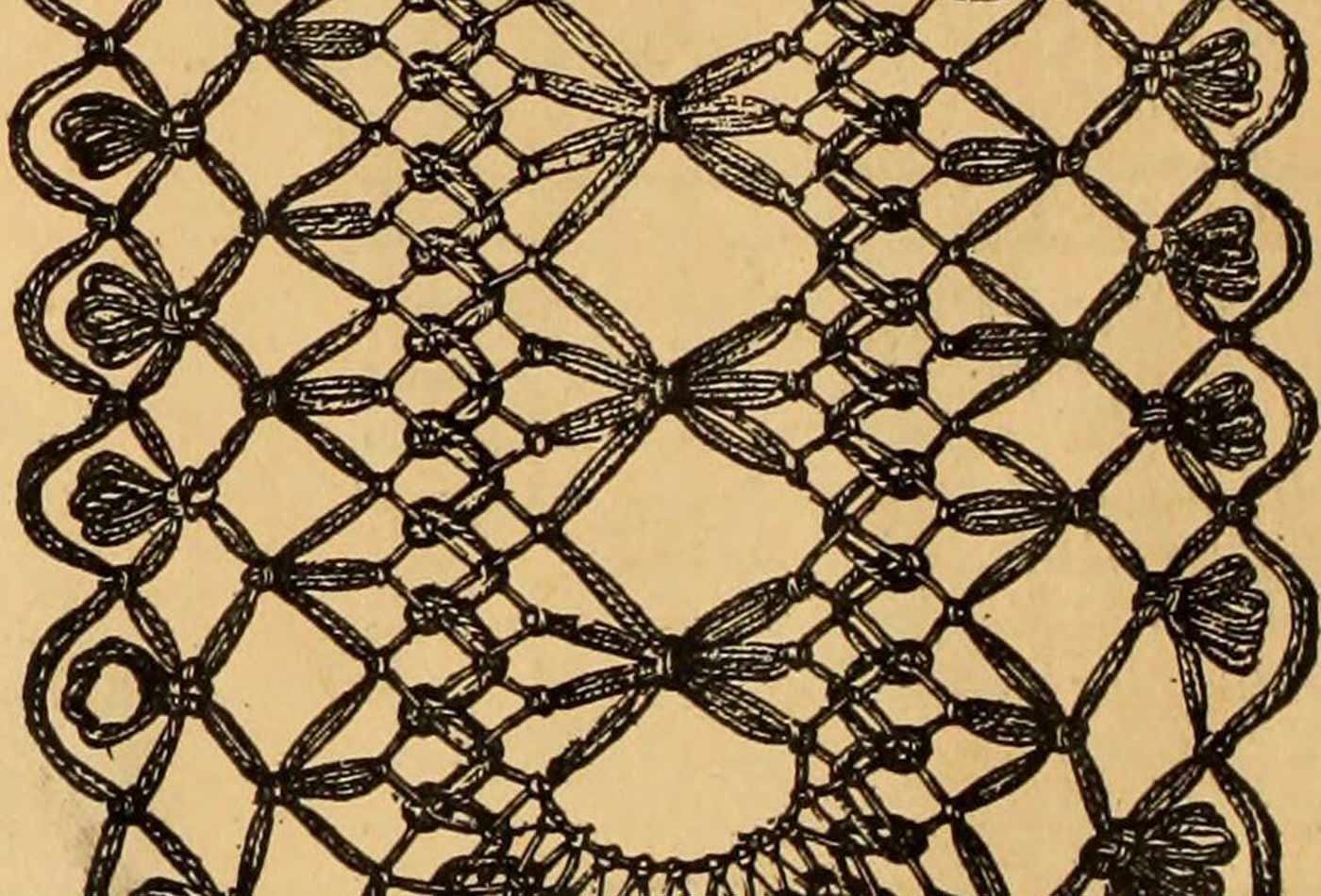

From page 149 of "Tatting and netting" (1895) (source: Internet Archive on Flickr)

There’s been a lot of recent buzz around the service mesh as a necessary infrastructure solution for cloud-native applications. Despite its surge in popularity, there’s still some confusion about the precise value of adoption. Because the service mesh has proven itself a necessary building block when architecting robust microservice-based applications, it has received a lot of accolades, and the momentum behind its adoption has been wild. Beyond the hype, it’s necessary to understand what a service mesh is and what concrete problems it solves so you can decide whether you might need one.

A brief introduction to the service mesh

The service mesh is a dedicated infrastructure layer for handling service-to-service communication in order to make it visible, manageable, and controlled. The exact details of its architecture vary between implementations, but generally speaking, every service mesh is implemented as a series (or a “mesh”) of interconnected network proxies designed to better manage service traffic.

This type of solution has gained recent popularity with the rise of microservice-based architectures, which introduce a new breed of communication traffic. Unfortunately, that traffic is often introduced without much forethought by its adopters. The distinction is sometimes described as north-south versus east-west traffic. Put simply, north-south traffic is server-to-client traffic, whereas east-west is server-to-server traffic. The naming convention comes from diagrams that “map” network traffic, which typically draw vertical lines for server-to-client traffic and horizontal lines for server-to-server traffic. In the world of server-to-server traffic, aside from considerations at the network and transport layers (L3/L4), there’s a critical difference in the session layer to account for.

In that new world, service-to-service communication becomes the fundamental determining factor for how your applications behave at runtime. Application functions that used to occur locally as part of the same runtime instead occur as remote procedure calls being transported over an unreliable network. That means that the success or failure of complex decision trees reflecting the needs of your business now requires you to account for the reality of programming for distributed systems. For most, that’s a new realm of expertise that requires creating and then baking a lot of custom-built tooling right into your application code. The service mesh relieves app developers from that burden, decouples that tooling from your apps, and pushes that responsibility down into the infrastructure layer.

With a service mesh, each application endpoint (whether a container, a pod, or a host, and however these are set up in your deployments) is configured to route traffic to a local proxy (installed as a sidecar container, for example). That local proxy exposes primitives that can be used to manage things like retry logic, encryption mechanisms, custom routing rules, service discovery, and more. A collection of those proxies forms a “mesh” of services that now share common network traffic management properties. Those proxies can be controlled from a centralized control plane where operators can compose policy that affects the behavior of the entire mesh.
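To make that division of labor concrete, here is a minimal sketch of a local proxy that applies retry and routing policy pushed from a central control plane. It is a toy model rather than the API of any real service mesh: `Policy`, `SidecarProxy`, `ControlPlane`, and the `transport` callable are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical mesh-wide policy composed by an operator."""
    max_retries: int = 0
    routes: dict = field(default_factory=dict)  # logical service name -> address


class SidecarProxy:
    """Hypothetical local proxy that sits next to one application endpoint."""

    def __init__(self, transport):
        self.transport = transport  # callable(address, request) -> response
        self.policy = Policy()

    def send(self, service, request):
        # Routing and discovery: resolve the logical name using mesh policy.
        address = self.policy.routes[service]
        last_error = None
        # Retry logic lives here, not in the application code.
        for _ in range(self.policy.max_retries + 1):
            try:
                return self.transport(address, request)
            except ConnectionError as err:
                last_error = err
        raise last_error


class ControlPlane:
    """Hypothetical central point that pushes one policy to every proxy."""

    def __init__(self):
        self.proxies = []

    def register(self, proxy):
        self.proxies.append(proxy)

    def apply(self, policy):
        for proxy in self.proxies:
            proxy.policy = policy
```

In this sketch the application only ever calls `proxy.send("billing", request)`. Changing the retry count or the route for every endpoint is a single `ControlPlane.apply(...)` call, with no change to application code.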

Because service-to-service communication is the fundamental determining factor for the runtime behavior of microservice-based applications, the most obvious place to derive value from the service mesh is management of messages used for remote procedure calls (or API calls). Inevitably, comparisons are then made between the service mesh and other message management solutions like messaging-oriented middleware, an enterprise service bus (ESB), enterprise application integration patterns (EAI), or API gateways. The service mesh may have minor feature overlap with some of those, but as a whole, it’s oriented around a larger problem set.

The service mesh is different because it’s implemented as infrastructure that lives outside of your applications. Your applications don’t require any code changes to use a service mesh. The value of a service mesh is primarily realized when examining management of RPCs (or messages), but its value extends to management of all inbound and outbound traffic. Rather than coding that remote communication management directly into your apps, the service mesh allows you to manage that logic across your entire distributed infrastructure more easily.

The problem space

At its core, the service mesh exists to solve the challenges inherent to managing distributed systems. This isn’t a new problem, but it is a problem that many more users now face because of the proliferation of microservices. Programmers who are accustomed to dealing with distributed systems will recognize the fallacies of distributed computing:

- The network is reliable

- Latency is zero

- Bandwidth is infinite

- The network is secure

- Topology doesn’t change

- There is one administrator

- Transport cost is zero

- The network is homogeneous

These mistaken assumptions present themselves when running at scale. By that point, it’s typically too late to turn back, and developers often find themselves scrambling to build solutions to these newly discovered landmines. But these are actually well-understood problems with several proven (if not entirely reusable) solutions that have been built over the years.

In the past, application developers have solved these problems by creating custom tools directly within their applications: open a socket, transmit data, retry for some specified period if it fails, close the socket when the transaction reaches some inevitable conclusion, and so on. The burden of programming distributed applications was placed directly on the shoulders of each developer, and the logic to do so was tightly coupled into every distributed application as a result.
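The hand-rolled approach described above might look something like the following sketch. The function name `send_with_retry`, its parameters, and the injectable `connect` argument are assumptions made for illustration; real applications typically layered far more on top (backoff, logging, protocol handling).

```python
import socket
import time


def send_with_retry(host, port, payload, retry_window=2.0, pause=0.1,
                    connect=socket.create_connection):
    """Send payload, retrying failed attempts until retry_window seconds pass.

    Returns a (reply, attempts) tuple. `connect` is injectable so the logic
    can be exercised without a real network.
    """
    deadline = time.monotonic() + retry_window
    attempts = 0
    while True:
        attempts += 1
        try:
            # Open a socket, transmit the data, and read a reply; the
            # `with` block closes the socket however the attempt ends.
            with connect((host, port), timeout=1.0) as conn:
                conn.sendall(payload)
                return conn.recv(4096), attempts
        except OSError:
            # Give up once the retry window has passed; otherwise pause
            # briefly and try again.
            if time.monotonic() >= deadline:
                raise
            time.sleep(pause)
```

Every service that talks over the network needs some variant of this, which is how the logic ends up duplicated and tightly coupled to each application.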

As an incremental step toward a reusable solution, network resiliency libraries (e.g., Netflix’s Hystrix or Twitter’s Finagle) emerged. Include these libraries in your application code and you now have a set of pre-developed tools ready to go. While these solutions made incredible leaps forward, they were also of limited value for polyglot applications. Different programming languages require different libraries, and the challenge then shifts to managing integration between them. Consistent management between different application endpoints is an inherent challenge in this model.
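As a rough illustration of the kind of tool such a library packages up, here is a minimal circuit breaker, a pattern Hystrix is well known for. This is not Hystrix’s or Finagle’s API: `CircuitBreaker`, `CircuitOpenError`, and every parameter below are hypothetical names, and the half-open handling is deliberately simplified.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    """Hypothetical breaker: fail fast after repeated failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Still cooling down: reject without touching the network.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call. A single failure
            # from here reopens the breaker immediately.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Any success resets the failure count.
        self.failures = 0
        return result
```

A Python service can wrap its remote calls in `breaker.call(...)`, but a service written in another language needs its own equivalent, which is the polyglot problem described above.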

Enter the service mesh.

The service mesh is meant to solve the challenges of programming for distributed systems. In today’s world, that means the question you should first be asking yourself is, “Do I have a lot of services communicating with each other in my application infrastructure?”

If you primarily manage traditional monolithic applications (even if they’re inside a container for some odd reason), you might still realize some benefit from a service mesh, but its value for you is significantly smaller.

If you do manage a number of smaller (née, micro) services, then the reckoning that is dealing with the fallacies of distributed computing is coming for you (if you haven’t slammed into that wall already). As microservice applications evolve, new features are typically introduced as additional external services. As the distribution of your applications continues to grow, so will your need for a solution like the service mesh.

The service mesh exists to provide solutions to the challenges of ensuring reliability (retries, timeouts, mitigating cascading failures), troubleshooting (observability, monitoring, tracing, diagnostics), performance (throughput, latency, load balancing), security (managing secrets, ensuring encryption), dynamic topology (service discovery, custom routing), and other issues commonly encountered when managing microservices in production.

If you currently face these problems, or if you’ve adopted cloud-native and microservice-architecture design patterns, then the service mesh is a tool you should explore to determine if it will work for your environment. By focusing on why this type of tool exists and the specific types of problems it solves, you can avoid the hype and jump right into quantifying its value for you.

This post is a collaboration between O’Reilly and Buoyant. See our statement of editorial independence.