In the age of connected devices, will our privacy regulations be good enough?

Controversial ideas for privacy protection.

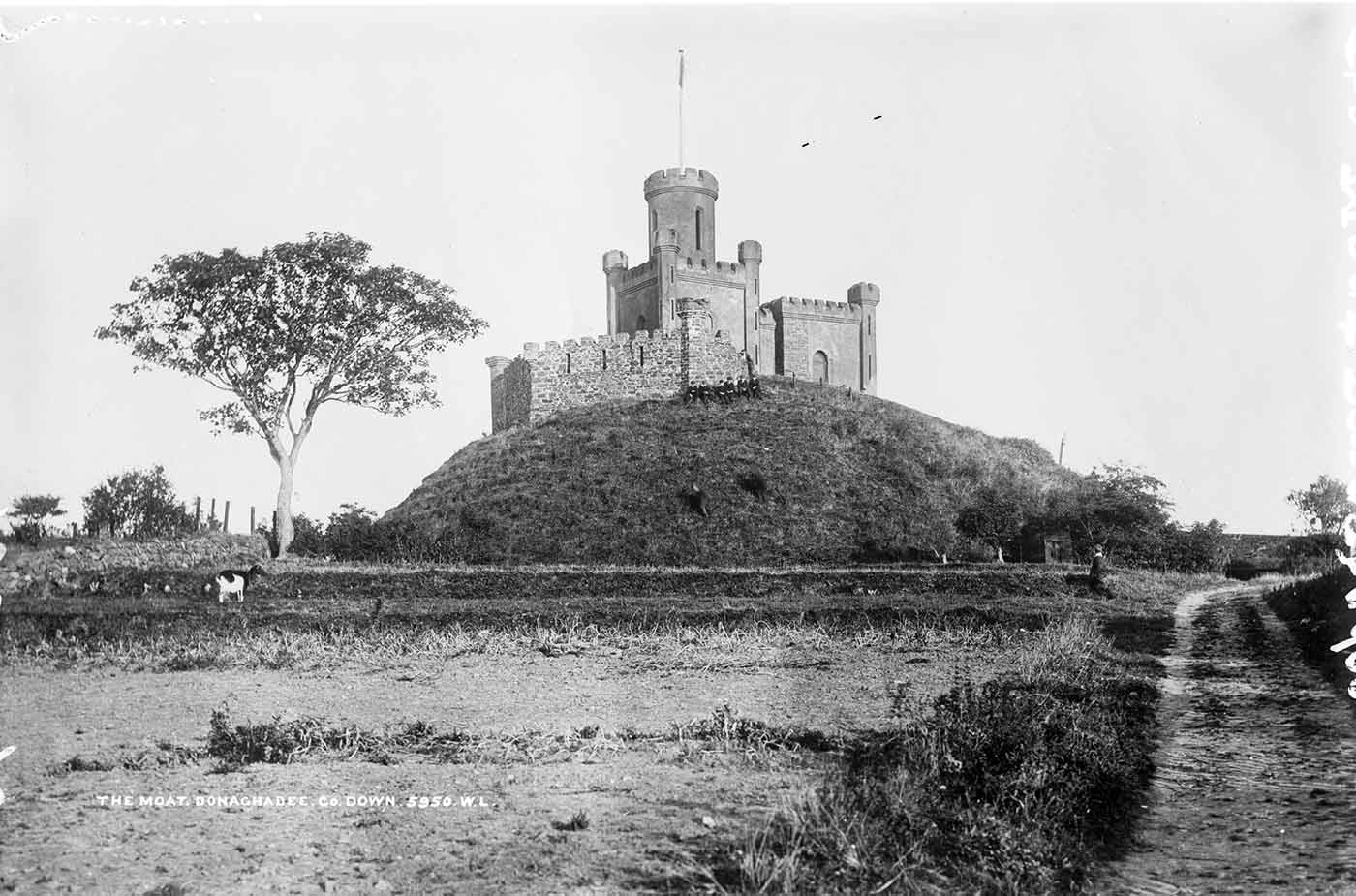

Moat at Donaghadee. (source: National Library of Ireland on Flickr)

Did you read a privacy policy in full today? According to 2008 research from Carnegie Mellon, it would take you an average of 76 work days to read all the privacy policies you'll encounter this year. What about clicking "I Agree to the Terms and Conditions blah blah blah first born ZZZzzzzzz…"? Did you read what you were agreeing to? If so, congratulations! You are a rare bird indeed. With Ts & Cs and privacy policies running longer than some of Shakespeare's plays and Einstein's Theory of General Relativity, it should come as no surprise that there is wide consensus that most people do not read them. As a 2014 report to President Obama observed: "Only in some fantasy world do users actually read these notices and understand their implications before clicking to indicate their consent."

For years, academics, members of industry, regulators, journalists, and the public have been keenly aware of the failure of two pillars of data protection: Notice & Choice, and Consent.

Privacy policies and their ilk are “Notice”—to support and respect your autonomy, you need to be told what information is being gathered about you and how it will be used; i.e., gathering information about you secretly is bad. “Choice” is the ability to walk away after you’ve been told about the prospective data collection and use. “Consent” is taking an action to indicate that you assent—you’re knowingly signing a contract.

Since the 1970s, these principles have been essential to how states conceive of the fair treatment of people and their information. But the world today does not look like the 1970s and 80s. The intentions behind Notice, Choice, and Consent are still sound, but the Modern Digital Life moves with too much volume and velocity to implement them well. The proliferation of connected devices that see and hear in public and intimate spaces further challenges these intentions.

Another foundation stone of data protection is the idea that there should be limits on data collection: organizations should collect only the data necessary for a given task, product, or service. Over the last four decades, this idea has been captured by the headings "Collection Limitation," "Proportionality," and "Data Minimization." As these bundles of principles have been restated over the years, they have also been commingled with "Purpose Specification": state up front what you're going to do with the data.

A wide range of stakeholders in our Modern Digital Life see limiting data collection as infeasible. We are under observation by so many devices, in so many locations, in so many contexts, that a principle of limiting collection is out of step with the world we now live in. So, too, they say, is specifying the purpose of collection in advance: if "Big Data" means anything, it means discovering things you may not have known were there, so we need to leave ample room for the analytic value of not knowing, at first, what the data is good for. As Craig Mundie, senior advisor to the CEO of Microsoft, wrote in Foreign Affairs last year: "Many of the ways that personal data might be used have not even been imagined yet. Tightly restricting this information's collection and retention could rob individuals and society alike of a hugely valuable resource."

I raise this in the context of the “IoT Whatever It Is” because billions of connected devices imply two challenges: enhanced monitoring of people (i.e., collection) and the difficulty of providing Notices and Consent mechanisms given the absence of a screen or other meaningful interaction. In other words, the IoT amplifies the perceived problems of Notice & Choice, Collection Limitation, and Purpose Specification.

Since these problems are well known, the privacy community has not been idle in attacking them. Excellent work by Prof. Lorrie Cranor and her colleagues addresses the challenges around Notice, Dr. Ewa Luger and others are attempting to reframe Consent in a world of ubiquitous devices, and recent scholarship questions the assertion that people knowingly make tradeoffs when clicking “I Agree.” But there is momentum building around a much more radical approach to adapting these principles to the modern world: scrapping them.

What if we simply threw up our hands and said Consent and Notice don’t work anymore? Collection is ubiquitous, so why try to prevent it? Isn’t the social and commercial value of unknown data uses too great to worry about specifying the use up front? A broad group of professionals and experts have been coalescing around those very views. Some embrace all of them, some embrace them partially, but the conversation is happening and is likely to influence policy in the future.

So, if we throw out Consent, Notice, and Collection Limitation, then what? The proposed answer is regulating data based on its use.

“[D]ata users…would evaluate the appropriateness of an intended use of personal data not by focusing primarily on the terms under which the data were originally collected, but rather on the likely risks (of benefits and harms) associated with the proposed use of the data,” explained Fred Cate and Viktor Mayer-Schönberger, two senior policy and governance scholars, in a 2013 report.

Instead of a) declaring up front what is being collected, b) saying what will be done with it, and c) getting consent to do so, businesses would weigh the risks of using the data, taking into account the benefits and harms to the data subject (you and me) to determine if such use is appropriate. The method for this risk calculus is, at this stage, left a bit vague. As Cate and Mayer-Schönberger observed: “Measuring risks connected with data uses is especially challenging because of the intangible and subjective nature of many perceived harms.”

Proponents of use regulation envision risk assessments that include the likelihood of harms to people, social impact of the data use (positive and negative), public benefit, norms, common sense, and people’s expectations, among other factors. Businesses would carry out the assessments and then use personal data in ways that had low probabilities of harm and high probabilities of value and acceptability.

Those championing use regulation argue that it shifts the burden of privacy protection back onto the organizations that collect and use personal data, rather than relying on people to protect their own privacy by reading privacy policies and then choosing whether to consent, and that it makes "privacy relevant again."

A number of use-based regulatory frameworks already exist in U.S. law. HIPAA, which governs medical information privacy, specifies how patient data can and cannot be used (e.g., in the provision of care or for research). The Fair Credit Reporting Act limits the use of credit data to specified permissible purposes, such as creditworthiness, employment, and housing decisions. The Genetic Information Nondiscrimination Act prevents health insurers and employers from using genetic data when making coverage and employment decisions. These are all examples of "command and control" regulation, where the government specifies what can and cannot be done. Recent discussions of use regulation, by contrast, tend to focus on industry determining which uses are acceptable and what harms look like.

Use regulation is not without its detractors. Professor Chris Hoofnagle, a privacy law scholar at Berkeley, believes that eliminating limitations on collection and allowing businesses to determine which uses are harmful and which are not will simply result in businesses collecting everything under the sun and doing anything they want with it.

“There are deep problems with protecting privacy through regulating use,” Hoofnagle said in a 2014 Slate article. “When one takes into account the broader litigation and policy landscape of privacy, it becomes apparent that use-regulation advocates are actually arguing for broad deregulation of information privacy.”

Hoofnagle further notes that data collected without limit will be even more attractive to government and law enforcement. Moreover, he argues, businesses' violations of the letter and spirit of a use regulation would be undetectable.

In their paper "Why Collection Matters: Surveillance as a De Facto Privacy Harm," Justin Brookman and G.S. Hans of the Center for Democracy & Technology argue that eliminating collection limitation would expose consumers to five harms:

- Data breach—more data means more attractive targets and greater potential for personal data misuse

- Internal misuse—data voyeurism and other inappropriate accessing

- Unwanted secondary uses and changes in company practices

- Government access—echoing Prof. Hoofnagle and as the NSA revelations have shown, limitless collection could end up in government hands

- Chilling effects—“Citizens who fear that they are being constantly observed may be less likely to speak and act freely if they believe that their actions are being surveilled,” Brookman and Hans said. “People will feel constrained from experimenting with new ideas or adopting controversial positions.”

Given what we know about the upcoming changes to European data protection law, use regulations are unlikely to gain traction there. The debate in America is, however, quite healthy and necessary. No one is disputing the problem: our early ideas about how to regulate information flow and protect privacy need to be updated.

The Internet of Things heralds a qualitative shift in the amount of data collected about people. As we introduce more cameras, microphones, and biometric sensors into our personal and public spaces, we’ll need better rules and tools, institutions, norms, incentives, and dialogue to shape who gets to use what they see and hear. Use regulation is an attractive, flawed, contentious proposal, and ultimately a valuable discussion. Governments have enacted it with mixed results, and we must wonder how businesses would fare; both are the stewards of very intimate information about us.

[The author thanks Joe Jerome, Lachlan Urquhart and Anna Lauren Hoffmann for their help with this post.]