Interactive big data analysis using approximate answers

As data sizes continue to grow, interactive query systems may start adopting the sampling approach central to BlinkDB.

Fractal art. (source: Pixabay)

Fractal art. (source: Pixabay)

Interactive query analysis for (Hadoop scale data) has recently attracted the attention of many companies and open source developers – some examples include Cloudera’s Impala, Shark, Pivotal’s HAWQ, Hadapt, CitusDB, Phoenix, Sqrrl, Redshift, and BigQuery. These solutions use distributed computing, and a combination of other techniques including data co-partitioning, caching (into main memory), runtime code generation, and columnar storage.

One approach that hasn’t been exploited as much is sampling. By this I mean employing samples to generate approximate answers, and speed up execution. Database researchers have written papers on approximate answers, but few working (downloadable) systems are actually built on this approach.

Approximate query engine from U.C. Berkeley’s Amplab

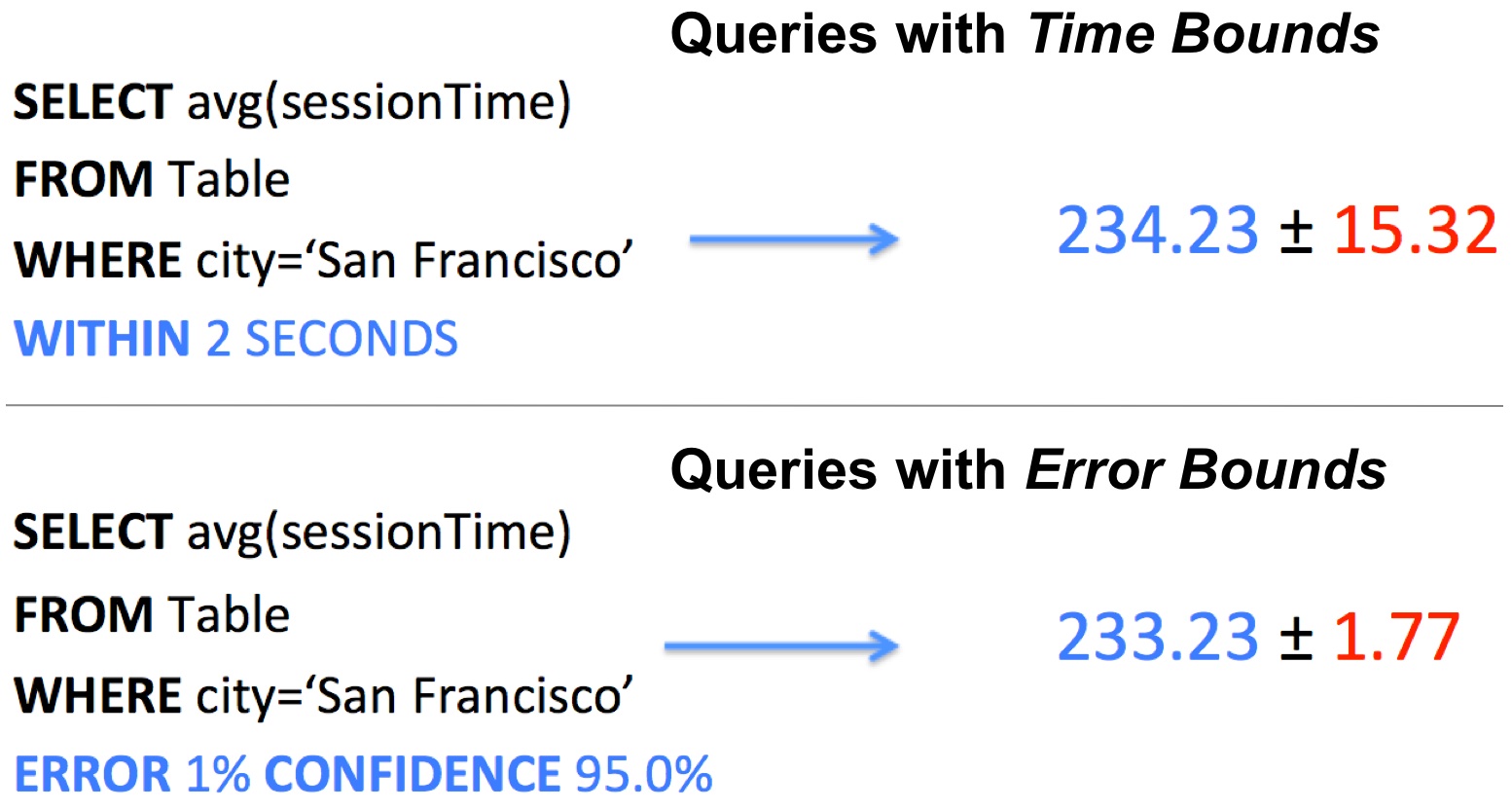

An interesting, open source database released yesterday0 uses sampling to scale to big data. BlinkDB is a massively-parallel, approximate query system from UC Berkeley’s Amplab. It uses a series of data samples to generate approximate answers. Users compose queries by specifying either error bounds or time constraints, BlinkDB uses sufficiently large random samples to produce answers. Because random samples are stored in memory1, BlinkDB is able to provide interactive response times:

Decision making and uncertainty

BlinkDB uses sampling to provide fast query response times. A side-effect is that query results are accompanied by error bars2. While it’s not the central goal of BlinkDB, approximate query systems change (in a good way) how decision makers think of business intelligence. Since answers in BlinkDB come with error bars, focus shifts from obsessing over the “one true answer”, to recognizing that variability and noise are present in data sets3. This requires a certain amount of reorientation as error representation lead to more nuanced discussions (imagine a scenario4 where an error bar spans a region between “fine” and “disaster”).

Sampling as an alternative

As data sets continue to grow, other interactive query systems may start adopting the sampling approach central to BlinkDB. Other areas of data science routinely use sampling: stats students learn early on the difference between a “statistic” & a “parameter”. Within machine-learning, a popular approach to scaling up algorithms is sampling5. When prototyping a data processing pipeline, I sometimes use small random samples to get a sense6 of the data. Sampling is also starting to appear in commercial big data systems. Users of Datameer build their analysis workflows using samples, and when they’re ready, run their MapReduce jobs against massive data sets.

To learn more about BlinkDB, Spark, Shark, Mesos, and other components of the Berkeley Data Analytics Stack, come to the third AMP Camp Big Data Bootcamp at the end of August.

Related posts: