Lessons on Hadoop application architectures

Choosing the right tools for the job.

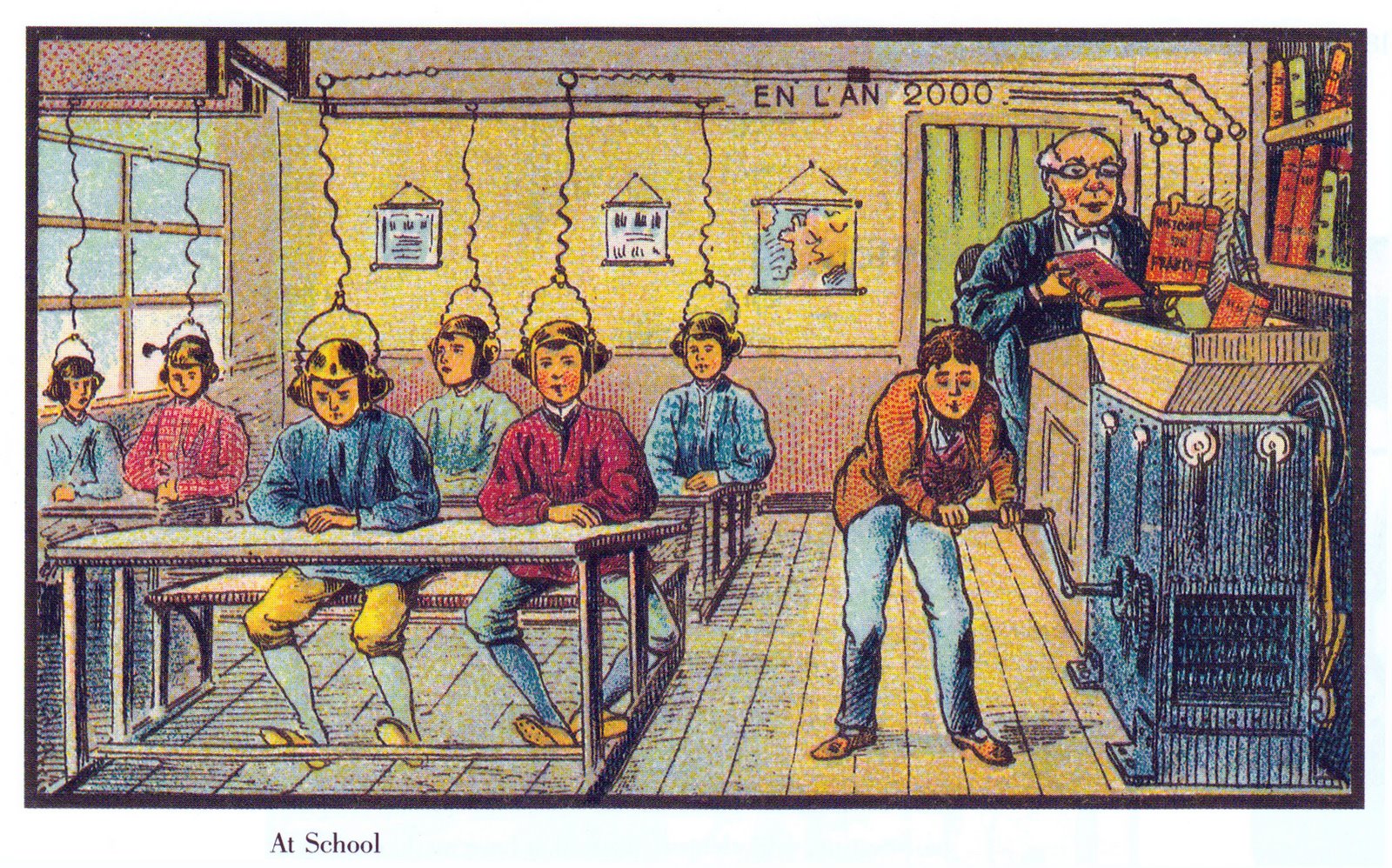

"Future School," circa 1901 or 1910. (source: Wikimedia Commons)

Editor’s note: “Ask Me Anything: Hadoop application architectures” will be held at Strata + Hadoop World San Jose on March 31, 2016.

In an open Q&A session at Strata + Hadoop World New York 2015, the authors of Hadoop Application Architectures answered a variety of questions related to architecture and the design of applications using Apache Hadoop. Like putting together the pieces of a puzzle, there are many factors to consider when choosing which projects to include in your stack.

Based on their extensive experience in the field, authors Mark Grover, Ted Malaska, Jonathan Seidman, and Gwen Shapira addressed several topics, including:

- Orchestrating a Hadoop system

- Running queries on large data sets

- Optimal file format for data

- Sequence files versus Avro

- Entropy and codec for file compression

Orchestrating a Hadoop system

The first question from the audience dealt with how to handle orchestration in a Hadoop system, and which orchestration system to use (e.g., Oozie, Storm, or Azkaban) when handling large-scale data ingestion, transformation, and testing. Hadoop Application Architectures devotes an entire chapter to orchestration, and the authors explained their reasoning for choosing Oozie over other systems: Oozie has a vibrant user community, is well integrated with the rest of the Hadoop ecosystem, and is able to perform advanced functions. Certain advanced functions within Oozie can be triggered on a schedule (a set date or time) or by events in the data, and can even be triggered by a directory becoming full.

The authors emphasized that the main point, though, is to use something you’re familiar with, regardless of the tool. They also explained that homegrown systems can be challenging to maintain, and you can often save yourself time and effort by using something packaged and geared toward solving scheduling problems.

Running queries on large data sets

In cases where you run aggregation queries on large data sets, returning small subsets of data, a typical setup is to use Impala with Parquet, on top of HDFS. Author Mark Grover explained:

“This saves you a lot of I/O. It’s very good for compression. … It’s pretty typical to use an MPP system that’s highly concurrent, like Impala, store your data on HDFS in a very optimized columnar format, like Parquet, so you have indexes stored with the metadata, stored with the data itself on HDFS. When you’re dealing with only a small subset of columns, you’re not dealing with any of the other columns, so you’ve reduced the I/O. But even within the columns, you can compress them really well into various row groups, and thereby reduce the I/O when you’re reading those columns.”
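
As a rough illustration of the I/O savings Grover describes, the following Python sketch lays out the same table row by row and column by column, then counts how many bytes an aggregation over a single column has to scan. It is a toy model, not how Parquet or Impala work internally; the table, column names, and function names are all hypothetical, and compression and row groups are left out.

```python
import struct

# Hypothetical table: 4 columns of 8-byte integers, 1,000 rows.
NUM_ROWS = 1000
COLUMNS = ["trade_id", "price", "volume", "timestamp"]
rows = [(i, 100 + i % 7, 10 * (i % 5), 1_600_000_000 + i) for i in range(NUM_ROWS)]

# Row-oriented layout: all fields of a row are stored together.
row_layout = b"".join(struct.pack("<4q", *row) for row in rows)

# Column-oriented layout: each column's values are stored contiguously.
column_layout = {
    name: struct.pack(f"<{NUM_ROWS}q", *(row[idx] for row in rows))
    for idx, name in enumerate(COLUMNS)
}


def sum_volume_row_oriented(data):
    """Sum the volume column; every byte of every row has to be scanned."""
    total = 0
    for _trade_id, _price, volume, _timestamp in struct.iter_unpack("<4q", data):
        total += volume
    return total, len(data)


def sum_volume_column_oriented(columns):
    """Sum the volume column; only that column's bytes are scanned."""
    data = columns["volume"]
    total = sum(value for (value,) in struct.iter_unpack("<q", data))
    return total, len(data)


row_total, row_bytes_scanned = sum_volume_row_oriented(row_layout)
col_total, col_bytes_scanned = sum_volume_column_oriented(column_layout)
print(row_total == col_total, row_bytes_scanned, col_bytes_scanned)
```

Both functions return the same sum, but the row-oriented scan touches 32,000 bytes while the columnar scan touches 8,000, because three of the four columns are never read.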

The recently launched open source project Apache Kudu is also intended to achieve the same scan performance results with its integrated, Parquet-like files. Kudu also has an indexing feature that allows you to isolate scans rather than having to scan an entire data set to find what you need.

Selecting optimal file format for data

When dealing with unstructured data, the authors recommended using Avro, Parquet, or sequence files (the flat files of key-value pairs used extensively in MapReduce). With these container formats, they explained, you can use any compression codec, such as Snappy or LZO. It doesn’t matter whether the codec itself is splittable, because the file format provides splittability at a higher level: data is compressed in blocks inside the file, so even non-splittable codecs effectively become splittable. Compression saves costs, they said, because it reduces file size. In addition, compressing intermediate data with a good codec like Snappy will likely increase the overall job speed, because the intermediate data being spilled is much smaller in volume.
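
To see why the container format, rather than the codec, can provide splittability, consider this minimal Python sketch. It is not the actual on-disk layout of sequence files, Avro, or Parquet; the function names `write_blocks` and `read_block` are hypothetical, and zlib from the standard library stands in for Snappy or LZO.

```python
import zlib


def write_blocks(records, records_per_block=100):
    """Compress records in independent blocks and return the compressed blocks."""
    blocks = []
    for start in range(0, len(records), records_per_block):
        chunk = "\n".join(records[start:start + records_per_block])
        blocks.append(zlib.compress(chunk.encode("utf-8")))
    return blocks


def read_block(block):
    """Decompress a single block without touching any other block."""
    return zlib.decompress(block).decode("utf-8").split("\n")


# Hypothetical input: 1,000 small text records.
records = [f"event-{i},host-{i % 4}" for i in range(1000)]
blocks = write_blocks(records)

# A reader assigned only the fourth block can start there directly.
fourth_block_records = read_block(blocks[3])

raw_size = len("\n".join(records).encode("utf-8"))
compressed_size = sum(len(block) for block in blocks)
print(len(blocks), fourth_block_records[0], compressed_size < raw_size)
```

Because each block is compressed on its own, a task can be handed any block and decompress it without reading the ones before it, even though a zlib stream by itself cannot be entered in the middle.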

It’s also important, when you stream data, to record certain metadata (e.g., a timestamp) on every event. It’s also useful to record which application or service, and which host, created the event. Author Gwen Shapira explained:

“The service and host is important because if events are lost or there’s any issue with them, you want to go back and figure out where they came from in order to do the accounting, and so on, so you already have kind of a standard schema. But the problem is that you also have large byte arrays that you want to store with it. Luckily for us, Avro records can have a timestamp, string, and a large byte array. And that’s a very valid Avro schema. … Usually, you also have your own application-specific metadata, so it’s not just a byte array. You also might have some other metadata fields about lineage or whatever. … So, Avro gives you the flexibility to add more than just one key and just a value.”
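
A schema along the lines Shapira describes might look like the sketch below, written as a Python dictionary and serialized to the JSON form that Avro schemas use. The record name, namespace, and field names are assumptions for illustration, not taken from the session; `lineage` stands in for whatever application-specific metadata you carry.

```python
import json

# Hypothetical event schema: standard metadata plus an opaque payload.
event_schema = {
    "type": "record",
    "name": "Event",
    "namespace": "example.ingest",
    "fields": [
        {"name": "timestamp", "type": "long"},    # event time, e.g. epoch millis
        {"name": "service", "type": "string"},    # application or service that created the event
        {"name": "host", "type": "string"},       # host that created the event
        {"name": "lineage", "type": ["null", "string"], "default": None},  # optional metadata
        {"name": "payload", "type": "bytes"},     # the large byte array
    ],
}

# Avro schemas are plain JSON, so the dictionary serializes directly.
schema_json = json.dumps(event_schema, indent=2)
field_names = [field["name"] for field in event_schema["fields"]]
print(schema_json)
```

The point of the design is that the byte array travels with typed, named metadata fields instead of being squeezed into a single key and value.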

Using sequence files versus Avro

Making a confident decision about file format clearly requires taking a lot into consideration; at the same time, the authors were clear on their preference. Author Ted Malaska explained:

“The sequence file is as raw as you can get. It just has a key and a value. And, essentially, you can put whatever you want in the key and value. It has no schema. Avro allows you to have a schema. And you can also put byte arrays inside Parquet/Avro, where you could have some columns that said source and time, and then you actually have the record. The advantage to that is you have a schema. The disadvantage of that is there’s an extra cost that’s an overhead in writing Parquet/Avro. It’s not tremendous, but it’s there. The other advantage is that with sequence files you can cat it—you can just cat it and see it, and that’s sometimes helpful.

There are mechanisms to cat with Parquet/Avro. You can definitely do it. It’s just not a command straight off the command prompt, but they’re both extremely valid. … And one thing to add to that—when you store data in Avro, it allows for schema evolution. Gwen wrote a full blog post on this topic.

So, if your schema evolves, and you always are worried about, ‘oh, I have one more column to report that got added in the middle, it’s going to break the entire workflow, how do I deal with it,’ and you spend days and hours working on this, really think about using Parquet/Avro to store your data formats.”
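
The schema evolution mentioned above can be sketched in a few lines of Python. This is a simplified imitation of one Avro resolution rule (a field present in the reader’s schema but absent from the written record takes the reader’s default, and fields the reader does not know are ignored), not the Avro library itself. The `resolve` function and the `source`, `time`, and `region` fields are hypothetical.

```python
_MISSING = object()  # marks a reader field that has no default


def resolve(record, reader_fields):
    """Project a record written with an older schema onto the reader's fields.

    reader_fields is a list of (name, default) pairs; a default of _MISSING
    means the field is required and must be present in the record.
    """
    resolved = {}
    for name, default in reader_fields:
        if name in record:
            resolved[name] = record[name]
        elif default is not _MISSING:
            resolved[name] = default
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return resolved


# Record written before the "region" column was added.
old_record = {"source": "web-01", "time": 1443657600}

# Newer reader schema: "region" was added later, with a default.
reader_fields = [("source", _MISSING), ("time", _MISSING), ("region", "unknown")]

evolved = resolve(old_record, reader_fields)
print(evolved)
```

Old files stay readable after the column is added, so the workflow does not break and nothing has to be rewritten.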

Dealing with file compression: entropy and codec

One of the final questions from the audience had to do with Avro inside Kafka. While Avro inside Kafka handles single records well, when creating larger files, optimized columnar formats like Parquet may compress more efficiently when using HDFS. Author Ted Malaska clarified:

“There are two things you always have to consider when you’re dealing with compression. One is entropy and one is the codec. So, codecs: I’ll talk about three real quick. Codecs are Snappy, GZIP, and BZIP—these are the most common. Some people might use LZO. It’s equivalent to Snappy. Some people might use Deflate. It’s equivalent to GZIP. … Snappy is faster than writing and reading raw. GZIP is a little bit slower on write but about as fast on read. And BZIP is just…slow.

… So, the difference between Avro and Parquet or ORC is—well, what Avro does is, if this is your big file, and this is your Hadoop block, what they actually do is take a sub-block of that. And then they compress that in whatever codec you chose, which means it’s the data organized in groups that’s compressed.

And then with a Parquet or ORC file, what they actually do is they compress down as columns. The difference there is the entropy within a column is less than the entropy within a row. So, for an example: if I had stock trades ordered by ticker and then time, that column for stock trades is essentially going to be all Facebook, and it’s going to disappear, and then the time is going to be sequential. I think ORC and Parquet both do difference encoding. So, essentially it’s just going to be like 1s and 0s here. And it’s going to disappear again. So, that’s why they get so much smaller, but they should have a slight performance-write penalty. And they normally require a little bit more memory. But nowadays, memory is cheap, so it’s not a big deal.”
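
Malaska’s stock-trade example can be approximated with the Python standard library. The sketch below compresses the same trades once as rows and once as columns, with the timestamps difference-encoded. The tickers and timestamps are made up, zlib stands in for whichever codec you would actually use, and real Parquet or ORC encodings are considerably more sophisticated than this text-based toy.

```python
import zlib
from itertools import accumulate

# Hypothetical trades, already ordered by ticker and then by time.
tickers = ["AAPL", "FB", "GOOG"]
trades = [(ticker, 1_443_657_600 + i) for ticker in tickers for i in range(1000)]

# Row-oriented: ticker and timestamp are interleaved on every line.
row_bytes = "\n".join(f"{ticker},{ts}" for ticker, ts in trades).encode("utf-8")

# Column-oriented: one run of tickers, one run of timestamps.
ticker_column = "\n".join(ticker for ticker, _ in trades).encode("utf-8")
timestamps = [ts for _, ts in trades]

# Difference encoding: keep the first value, then only the change from the previous one.
deltas = [timestamps[0]] + [b - a for a, b in zip(timestamps, timestamps[1:])]
time_column = "\n".join(str(delta) for delta in deltas).encode("utf-8")

row_size = len(zlib.compress(row_bytes))
column_size = len(zlib.compress(ticker_column)) + len(zlib.compress(time_column))

# The encoding is lossless: a running sum restores the original timestamps.
restored = list(accumulate(deltas))
print(row_size, column_size, restored == timestamps)
```

The ticker column is a long run of repeated values and the delta column is almost entirely 1s, so both have far lower entropy than the interleaved rows and compress to a fraction of the row-oriented size.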

To learn more from the authors of “Hadoop Application Architectures,” join them in the next “Ask Me Anything” session at Strata + Hadoop World San Jose on Thursday, March 31st, 2016.