Modeling your big data enterprise architecture after the human body

How decoupling, optimization, and specialization resemble connective systems in our bodies.

Human architecture. (source: Pixabay)

On the day before I flew out to Hadoop World London, I had the great pleasure of debating streaming solutions and patterns with Gwen Shapira. We had co-authored a book about Hadoop application architecture a while back, and we spoke together at conferences sometimes. This post is the result of one of those discussions.

Our discussion started with which tools should be used for ingestion, then quickly expanded to include concepts like SOA, storage, processing, and microservices. The result was the idea of architecting systems like the human body, with all its components and connective systems.

Now, if we dig deeper into what makes up the human body, we will find there are some great architectural patterns that are also found in today’s leading big data platforms. In this short post, I will call out three of these patterns and compare them to patterns and tools we see in big data enterprise architecture: decoupling, consolidation and optimization, and specialization. But first, let’s break down the major parts of the body we will be referencing.

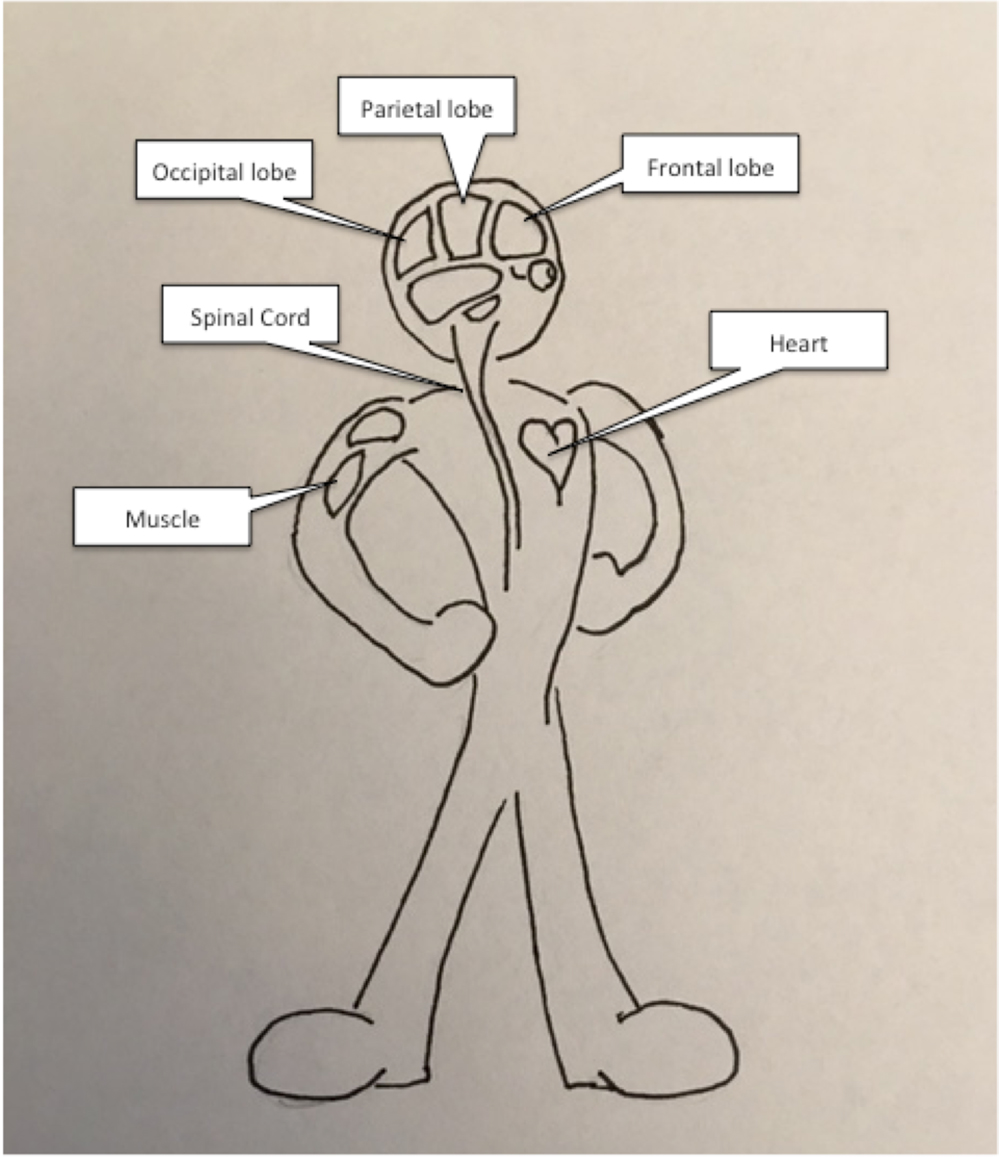

Some information systems in the human body

So, if we break down a human body in terms of its information systems, we can come up with a bunch of different high-level parts, including:

- Peripheral nervous system: The nerves that link all the components of your body together, allowing these components to send data to and receive data from each other. Think of this as the information superhighway of the body.

- Central nervous system: More purposeful sub-components of your nervous system, like your brain and your spinal cord, that have more complex jobs than just transporting communication messages. These components are normally optimized for different use cases, processing, and/or access patterns. Some examples of the functions of the central nervous system are: short-term memory, long-term memory, muscle memory, vision interpretation, creative thought, speech, and unconscious system maintenance.

- Special senses: These are the sensory input systems, like eyes, skin, ears, and so on. These are the systems that help us gather information about the outside world. The perception of the world is done in the central nervous system. The special senses can be thought of as decoupled systems focused only on information gathering. A sensor doesn’t distribute the information it gathers, but simply sends it to the peripheral nervous system, which does the distribution.

- Controllable systems: These are the systems that can be controlled by communications sent through the peripheral nervous system. Some of these systems are controlled through conscious thoughts, and some are controlled through unconscious thoughts. These systems include the muscular system, the cardiovascular system, and the digestive system. Think of these systems as stateless services, or microservices.

Let’s now connect these body systems to some patterns and tools in big data enterprise architecture.

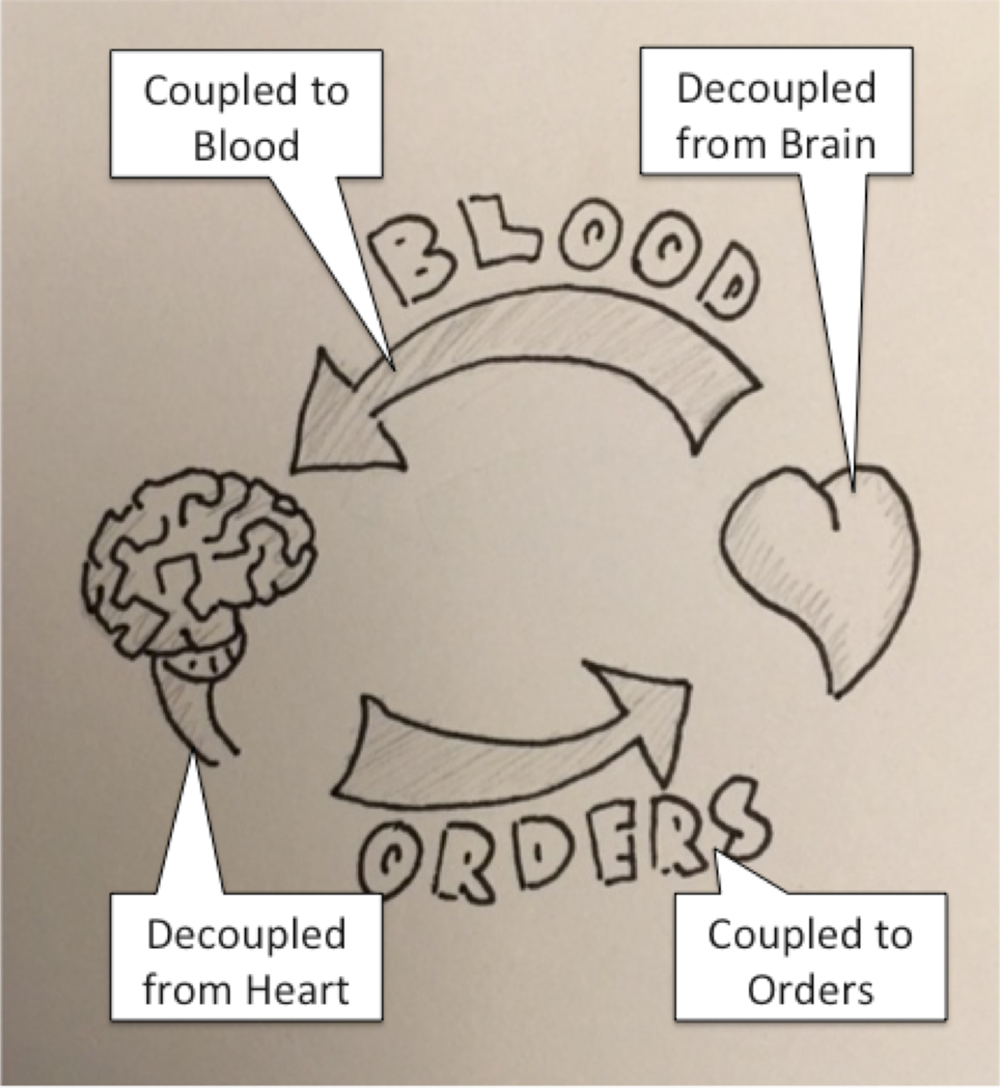

Decoupling (heart and brain)

Decoupling is the idea in multi-system development that two systems separated by an interface are each independent of the other’s implementation. So, let’s take the example of the heart and the brain. The brain is coupled to (dependent on) the blood supplied by the heart, but not to the heart itself. The heart can be replaced by another heart or even an artificial heart. So, the brain is coupled to the blood being pumped, but not to the system pumping the blood.

Generally speaking, decoupling shows up all over our bodies, which are made up of trillions of cells and many components, each being atomic with limited or no knowledge of the other components, how they work, or what they are doing at any given moment. Your heart knows nothing of your bladder, and your bladder is not concerned with the balancing sensors in the ear. Now, the brain has a window of visibility into other systems, but only through the protocol of messages that go through the peripheral nervous system. The brain itself is made up of parts that are decoupled from each other, each having its own regions and responsibilities.

In modern medicine, we are learning more and more about the reality of decoupled components and systems. For example, we are using technology to bypass broken spinal cords by sending messages straight from implanted chips in the brain to robotic limbs or even real limbs re-animated through controlled electric stimulation (The nerve bypass: how to move a paralysed hand). The reverse is also shown through experiments that have given blind people limited sight by having a camera send signals back into the brain through an implanted chip (Man gets bionic eye, sees wife for first time in decade).

In the example of the blind person given sight, the brain doesn’t know that the vision input system of the eyes has been replaced with a camera. The visual section of your brain is interpreting the bits of information from the camera as if it had come from the eye.

In software architectures, this idea of decoupling systems through interface design is hugely important as systems get bigger and more complex. Good architecture will allow for parts of our system to be added, removed, and mutated without affecting the integrity of the system as a whole. When systems fail, we can bridge the gap and spin up new systems to replace them. Without decoupling, evolution would be very hard for both living systems and software architecture systems.
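The bionic-eye example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `VisionSource`, `Eye`, `CameraImplant`, and `interpret` are hypothetical names invented here, and the "frames" are made-up numbers. The point is that the consumer depends on the interface alone, so the sensor behind it can be swapped without the consumer changing.

```python
from typing import Protocol


class VisionSource(Protocol):
    """Hypothetical interface: anything that can emit one frame of signal."""

    def read_frame(self) -> list[int]: ...


class Eye:
    """The original sensor (values are illustrative only)."""

    def read_frame(self) -> list[int]:
        return [3, 7, 5]


class CameraImplant:
    """A replacement sensor that honors the same interface."""

    def read_frame(self) -> list[int]:
        return [2, 9, 4]


def interpret(source: VisionSource) -> str:
    """The 'visual cortex': it knows the interface, not the sensor behind it."""
    frame = source.read_frame()
    return f"brightest signal: {max(frame)}"


print(interpret(Eye()))            # brightest signal: 7
print(interpret(CameraImplant()))  # brightest signal: 9
```

Nothing in `interpret` had to change when the eye was replaced by the camera, which is the property that lets parts of a system be added, removed, or replaced safely.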

This interface design can be done in a number of ways: with a distributed messaging system like Kafka, with interface styles like REST and public APIs, and with message formats like JSON, Avro, and Protobuf. Kafka stands out as the transportation medium, while the formats define what the messages themselves look like. So, Kafka can be thought of as the nerves, while XML, JSON, Avro, and Protobuf messages are the signals flowing through those nerves.
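To make the split between transport and message format concrete, here is a minimal sketch. It does not use Kafka; `NerveBus` is a hypothetical in-memory stand-in for a topic-based messaging system, and the topic name and message fields are assumptions made for illustration. The bus only moves opaque bytes, while the JSON encoding is a separate agreement between publisher and subscriber.

```python
import json
from collections import defaultdict
from typing import Callable


class NerveBus:
    """Hypothetical in-memory stand-in for a topic-based messaging system."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[bytes], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[bytes], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: bytes) -> None:
        # The transport never inspects the payload; the format is not its concern.
        for handler in self._subscribers[topic]:
            handler(payload)


bus = NerveBus()
received: list[int] = []


def visual_cortex(payload: bytes) -> None:
    # The subscriber decodes the agreed format (JSON in this sketch).
    message = json.loads(payload)
    received.append(message["brightness"])


bus.subscribe("vision", visual_cortex)

# Two different producers publish to the same topic in the same format.
bus.publish("vision", json.dumps({"sensor": "eye", "brightness": 7}).encode("utf-8"))
bus.publish("vision", json.dumps({"sensor": "camera", "brightness": 9}).encode("utf-8"))

print(received)  # [7, 9]
```

Switching the format to Avro or Protobuf would change only the encode and decode steps, and switching the transport would change only the bus.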

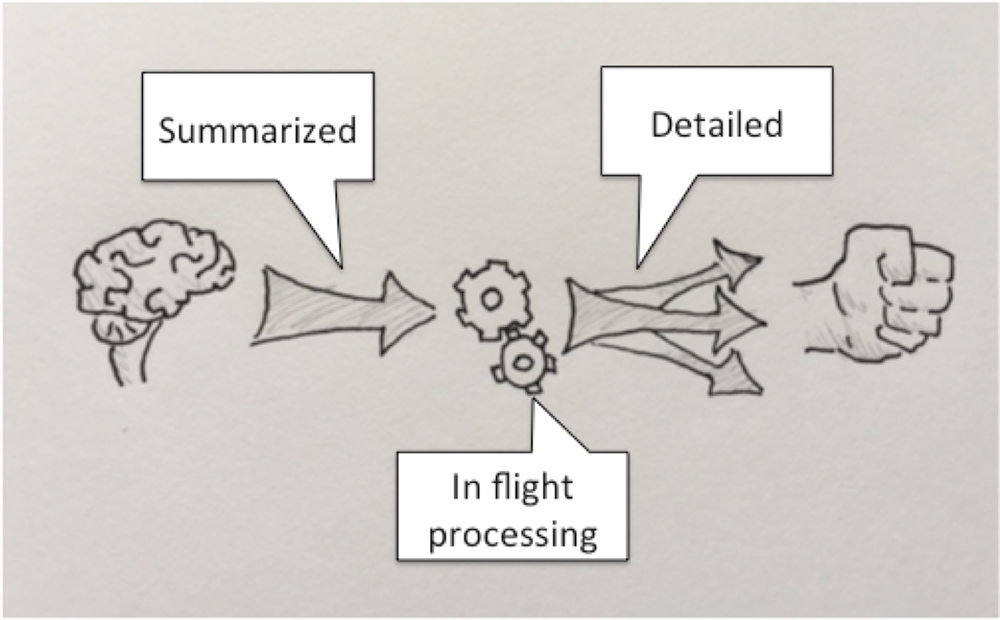

Consolidation and optimization (muscle memory)

In the age of building artificial limbs, there have been a lot of studies on how the brain sends a command to other systems, for example, telling a hand to open or close. Before that research was done, one idea was that the brain sent detailed instructions to each muscle to command its actions. But as we have learned more, we now know that the commands sent from the brain are much simpler. Shortened commands are decoded near the affected systems, then detailed actions are processed and executed. In the end, this process of summarizing relieves the brain of having to conduct complex actions like finger-muscle movement so it can focus on more important problems.

One can associate this concept of consolidation with muscle memory. You will notice as you practice a sport or musical instrument that practice leads to better performance and execution. Behind the scenes, you are optimizing operations and communications for certain instructions, and building in sub-routines or models that execute the actions.

In our world of big data architecture, we can see optimization and consolidation as the migration from ad-hoc and batch processing to more real-time adaptive processing. Ad-hoc and batch will always be there, but they should be there to get you closer to optimization. Just like in the body, where optimization allows you to execute tasks with less effort, in the software architecture world, optimization may reduce the resources needed to meet tighter SLAs, consolidate message complexity, or further decouple requests from their implementation.
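The idea of a short command being decoded near the affected system can be sketched as follows. All names here (`FINGERS`, `ROUTINES`, `HandController`, and the command strings) are hypothetical and chosen only for illustration; this is a toy model of the pattern, not a description of how any prosthetic or platform actually works.

```python
FINGERS = ("thumb", "index", "middle", "ring", "little")

# Hypothetical "muscle memory": learned sub-routines stored next to the hand.
ROUTINES = {
    "open_hand": [f"extend_{finger}" for finger in FINGERS],
    "close_hand": [f"flex_{finger}" for finger in FINGERS],
}


class HandController:
    """Decodes a short command locally into detailed muscle actions."""

    def __init__(self, routines: dict[str, list[str]]) -> None:
        self._routines = routines

    def handle(self, command: str) -> list[str]:
        # The sender only names the intent; the expansion happens here.
        return list(self._routines[command])


hand = HandController(ROUTINES)
steps = hand.handle("close_hand")  # one short message from the "brain"
print(len(steps), steps[0])        # 5 flex_thumb
```

The sender transmits one small message instead of five detailed ones, and the details can be retuned inside the controller without the sender knowing.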

Specialization (brain)

The third architectural pattern that we can associate with the human body is the idea of specialization. Let’s just look at the central nervous system. The brain is made up of many components, each with its unique region and each with its own responsibility. Some components are responsible for different types of storage, while some are responsible for specific processing and access patterns.

Think about the storage systems in our brain. We have short-term, sensory, long-term, implicit, and explicit memory. Why do we have so many? The answer is that each system provided an evolutionary benefit over a generalized system. These systems most likely have different indexing strategies, flushing mechanisms, and aging-out/archiving processes.

We find a parallel in our world of software architecture, with storage systems like RDBMS, Lucene-based search engines, NoSQL stores, file systems, block stores, distributed logs, and more. The same goes for our processing systems. Vision interpretation is very different from complex decision-making. Just like the brain, in software architecture, there are different execution patterns and optimizations that serve different use cases, supported by tools like SQL, Spark, SPARQL, NoSQL APIs, search queries, and many more. There is a reason for the different approaches to processing, and there will be more approaches in the future as we find different ways to address our problems.

Which systems should we use, and should we restrict our choices to a limited few? The answer really is to choose the system that works best for you and your use case. Limiting system selection just for the sake of limiting is unwise. Because we have decoupled interfaces, we should have the freedom to use the storage system that best meets our needs for a specific use case, and a specific developer preference, while keeping in mind the very real possibility that we will store the data more than once for different use cases.
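As a rough illustration of storing the same data more than once for different use cases, the sketch below writes each record to two specialized stores. `KeyValueStore` and `SearchIndex` are hypothetical toy classes standing in for a NoSQL store and a search engine; the records are invented for the example.

```python
from collections import defaultdict


class KeyValueStore:
    """Toy store optimized for lookup by id."""

    def __init__(self) -> None:
        self._rows: dict[int, dict] = {}

    def put(self, record: dict) -> None:
        self._rows[record["id"]] = record

    def get(self, record_id: int) -> dict:
        return self._rows[record_id]


class SearchIndex:
    """Toy inverted index optimized for lookup by word."""

    def __init__(self) -> None:
        self._postings: dict[str, set[int]] = defaultdict(set)

    def put(self, record: dict) -> None:
        for word in record["text"].lower().split():
            self._postings[word].add(record["id"])

    def search(self, word: str) -> list[int]:
        return sorted(self._postings.get(word.lower(), set()))


records = [
    {"id": 1, "text": "heart pumps blood"},
    {"id": 2, "text": "brain needs blood"},
]

kv, index = KeyValueStore(), SearchIndex()
for record in records:
    # The same record is written to every specialized store.
    kv.put(record)
    index.put(record)

print(kv.get(2)["text"])      # brain needs blood
print(index.search("blood"))  # [1, 2]
```

Each store answers one kind of question cheaply, at the cost of keeping a second copy of the data.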

Summary

The human body is a beautiful example for us as system architects to use as a reference, as it demonstrates principles such as:

- Decoupling and interface design

- Common scalable message distribution system

- Plug-and-play stateful and stateless components

- Specialization and optimization (versus monolithic)

- Iterative development (only the strongest survive at a component level)

Living systems have had great success with these patterns and principles, so we should consider their architecture to be part of a proven design that warrants reflection and allows innovative bottom-up development while still providing limited but resilient top-down guidance.