Reliability with Kafka

Five questions for Gwen Shapira about how Kafka can enable business agility.

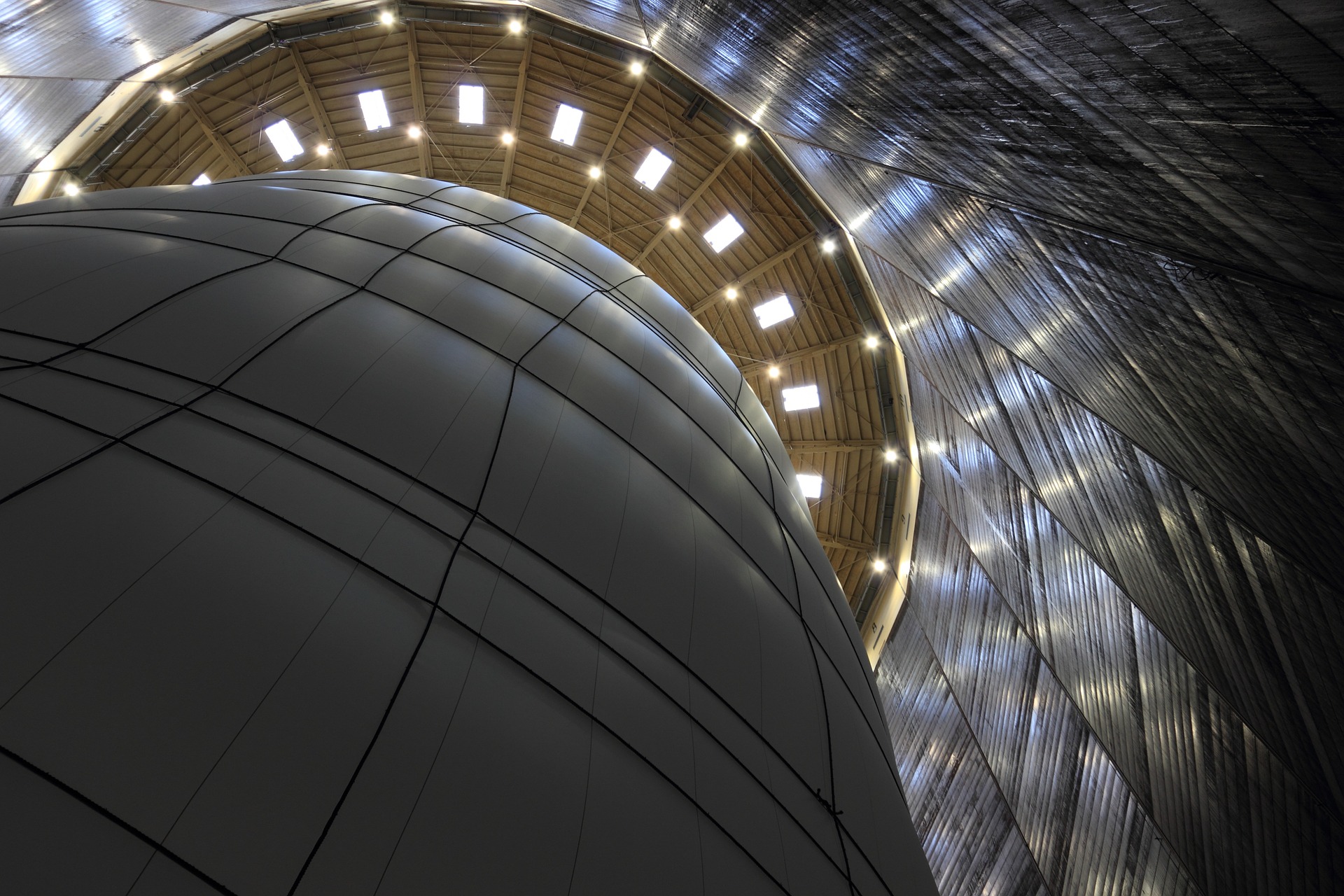

Oberhausen (source: olafpictures)

I recently sat down with Gwen Shapira, system architect at Confluent, to talk about Kafka—why it’s becoming popular (particularly with financial organizations), the benefits it can have for your organization, and how to adopt it safely and reliably. Here are some highlights from our talk.

What are some of the more exciting use cases for Kafka?

I’d say it’s most exciting to see how Apache Kafka use cases have evolved over the last few years.

I started working with Kafka four or five years ago, and back then it was all about the Hadoop ecosystem: Kafka was mostly seen as a pipeline for getting data from elsewhere into Hadoop. Then, maybe two or three years ago, stream processing became a huge deal and people started using Kafka as its foundation. Batch processing was HDFS+MapReduce or HDFS+Spark, and stream processing was Kafka+Storm or Kafka+Spark Streaming. That is obviously still going on, but now people have discovered the value of Kafka as a message bus between microservices. They're using Kafka to communicate between all of their mission-critical services, and in effect rebuilding the way they run their business around it.

I was in NYC earlier this week and talked to a bunch of financial companies: large, conservative companies that suddenly need to adapt to a world full of mobile apps, agile development, and software engineers who won't wear suits. They're rebuilding everything on top of Kafka, from the application that opens new accounts to fraud detection and loan risk analysis. Helping banks reinvent the way they work with customers is an exciting and humbling challenge.

Why are financial firms adopting Kafka?

Many financial firms are under intense pressure to adapt to the expectations of modern consumers. Most of us don't want to go to a branch, fill out a form, and then wait two weeks to hear whether a loan is approved. I mean, when was the last time you physically visited a branch? We want to tap an app and get an answer right away. So these companies really need to change: they need both faster infrastructure and a development process agile enough to keep up.

Kafka really fits the bill as the underlying infrastructure for companies that want to make their apps faster and their development process more agile, because:

- Kafka is built to be fast and to scale well, and it has been proven in production in many places for years.

- Kafka is similar enough to a traditional message bus that, when a firm adopts Kafka, it doesn’t feel like a huge change. They often view it as a familiar pattern, only faster.

- Kafka supports multiple reliability models, which is a huge part of its appeal. I always tell my customers: you send website clicks to Kafka and you send financial transactions to Kafka, but you really want to treat them in completely different ways. Clicks arrive in far higher volume but aren't critical; financial transactions are critical, but there are far fewer of them. And you can use the same Kafka cluster for both just by turning a few knobs. Not many systems can make that claim.

- Kafka was built to decouple services. The services that send messages don't need to care about the services that read them, and vice versa. There is no direct communication, so a service that is slow or being upgraded doesn't affect the others. This reduces the cost of coordination between teams and lets each team develop its own services as fast as it can.
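
To make "turning a few knobs" concrete, here is a minimal sketch in Python, assuming the confluent-kafka client, whose `Producer` takes a dict of settings. The helper `producer_config` and the "clicks"/"transactions" labels are hypothetical; the keys (`acks`, `linger.ms`, `compression.type`, `enable.idempotence`, `max.in.flight.requests.per.connection`) are standard Kafka producer settings, but the values are illustrative, not recommendations from the interview.

```python
def producer_config(kind: str, bootstrap: str = "localhost:9092") -> dict:
    """Return illustrative producer settings for 'clicks' or 'transactions' (hypothetical labels)."""
    base = {"bootstrap.servers": bootstrap}
    if kind == "clicks":
        # High volume, loss-tolerant: wait only for the partition leader,
        # and batch and compress to favor throughput over latency.
        return {**base, "acks": "1", "linger.ms": 50, "compression.type": "lz4"}
    if kind == "transactions":
        # Low volume, critical: wait for all in-sync replicas, and let the
        # idempotent producer retry without writing duplicates.
        return {
            **base,
            "acks": "all",
            "enable.idempotence": True,
            "max.in.flight.requests.per.connection": 5,
        }
    raise ValueError(f"unknown traffic kind: {kind!r}")


print(producer_config("transactions")["acks"])
# → all
```

With confluent-kafka installed, either dict could be passed to `confluent_kafka.Producer(...)`. Both streams share one cluster; what differs is how long each producer waits for acknowledgment. With `acks=1`, a click acknowledged by the leader can still be lost if that leader fails before followers copy it, which is an acceptable trade for clicks but not for payments.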

How do you ensure reliability with Kafka?

Generally speaking, Kafka has quite a few knobs, both in the clients and in the server, that control the different reliability tradeoffs (e.g., the number of retries, tracking of events, failover, fault detection). It can get rather complex, especially when you start thinking about how those knobs interact with each other.

In our Velocity talk on reliability guarantees in Kafka, we start by explaining some of Kafka's internals, especially the replication and leader-election protocols. Understanding these mechanisms gives users the mental model they need to reason through Kafka's expected behavior in different scenarios. Once you understand how Kafka behaves, figuring out the knobs is easy.
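
The knobs interact most visibly around replication. As a hedged illustration, the sketch below encodes a few well-known interactions between the producer's `acks` setting and the topic-level `replication.factor`, `min.insync.replicas`, and `unclean.leader.election.enable` settings. The function `review_durability` is hypothetical and covers only these rules; it is not a model of Kafka's full replication protocol.

```python
def review_durability(replication_factor: int, min_insync_replicas: int,
                      acks: str, unclean_leader_election: bool) -> tuple[int, list[str]]:
    """Hypothetical checker: (replica failures tolerated for acks=all writes, warnings)."""
    warnings: list[str] = []
    if min_insync_replicas > replication_factor:
        warnings.append("min.insync.replicas exceeds replication factor: acks=all writes always fail")
    if acks != "all":
        # The broker enforces min.insync.replicas only for acks=all producers.
        warnings.append("min.insync.replicas is only enforced for producers using acks=all")
    elif min_insync_replicas < 2:
        warnings.append("with min.insync.replicas=1 an acks=all write may be stored on the leader alone")
    if unclean_leader_election:
        warnings.append("unclean leader election can elect an out-of-sync replica and lose acknowledged writes")
    # How many replicas of a partition can be lost before acks=all writes are rejected.
    tolerated = max(replication_factor - min_insync_replicas, 0)
    return tolerated, warnings


print(review_durability(3, 2, "all", False))
# → (1, [])
```

The common pattern of three replicas, `min.insync.replicas=2`, and `acks=all` keeps accepting writes with one replica down, and each acknowledged write exists on at least two brokers. Raising `min.insync.replicas` to 3 would make writes fail as soon as any replica is unavailable: more durability per write, less availability.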

What are some tips for adopting Kafka for your data pipeline?

I was talking with a new Kafka user this morning. She was doing the first proof-of-concept for using Kafka at her company, and I was really impressed by all the experiments she was doing. She wanted to understand Kafka behavior, so she used a test framework that just sent sequences of numbers to Kafka—you know, “1, 2, 3, 4…”—and then read those sequences back. And she tried all kinds of configurations to see what would happen when she changed them, and then she used Linux tools like iptables to cause network faults to see how Kafka would behave. She put the entire system through its paces and always checked her assumptions about Kafka against the actual behavior. I have tons of confidence that by the time her company uses Kafka in production, it will be an incredibly reliable system because it will be configured and maintained by someone who experimented with Kafka’s behavior under several failure scenarios and now really understands the system.
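
Her approach is easy to reproduce. Below is a minimal sketch of the checking half, assuming the numbers 1 to N were produced to a single-partition topic (Kafka guarantees ordering only within a partition) and the consumed values have already been collected into a list. The produce and consume code is omitted, and `check_sequence` is a hypothetical helper.

```python
def check_sequence(sent: int, received: list[int]) -> dict:
    """Compare the numbers 1..sent that were produced with what a consumer read back."""
    seen: set[int] = set()
    duplicates: list[int] = []
    for n in received:
        if n in seen:
            duplicates.append(n)  # e.g. a retried send that was written twice
        seen.add(n)
    # Numbers that were sent but never read back point to possible data loss.
    missing = [n for n in range(1, sent + 1) if n not in seen]
    # Adjacent pairs where the number went down; only meaningful within one partition.
    out_of_order = sum(1 for a, b in zip(received, received[1:]) if b < a)
    return {"missing": missing, "duplicates": duplicates, "out_of_order": out_of_order}


# A consumer read 1, 2, 2, 4, 3 after five numbers were sent.
print(check_sequence(5, [1, 2, 2, 4, 3]))
# → {'missing': [5], 'duplicates': [2], 'out_of_order': 1}
```

Running this after each experiment (a changed `acks` setting, a broker killed, a network partition injected with iptables) turns "what happened?" into three numbers you can compare across configurations.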

Another tip: never take Kafka to production without monitoring. I was working with a really large financial company, and they told me they use Kafka to process some transactions. When I looked, two of their five Kafka brokers were unavailable. They didn't even know! Kafka is reliable enough that this didn't cause issues for their users, but I don't think you should let critical services go down without anyone in the company being aware of it.
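
A basic check would have caught that situation. Here is a minimal sketch, assuming you can obtain the live broker IDs and each partition's replica and in-sync replica lists from cluster metadata (for example, the metadata returned by confluent-kafka's `AdminClient.list_topics()` exposes brokers and per-partition `replicas` and `isrs`). The function `cluster_health` and its input shapes are hypothetical; in practice you would more likely alert on broker metrics such as under-replicated partitions through your existing monitoring system.

```python
def cluster_health(expected_brokers: set[int], live_brokers: set[int],
                   partitions: dict[str, tuple[list[int], list[int]]]) -> list[str]:
    """Hypothetical check: partitions maps 'topic-partition' -> (replicas, in-sync replicas)."""
    alerts: list[str] = []
    missing = sorted(expected_brokers - live_brokers)
    if missing:
        alerts.append(f"brokers not available: {missing}")
    for name, (replicas, isr) in sorted(partitions.items()):
        # A partition is under-replicated when some replicas have fallen out of sync.
        if len(isr) < len(replicas):
            alerts.append(f"{name} under-replicated: isr={isr} replicas={replicas}")
    return alerts


print(cluster_health(
    {1, 2, 3, 4, 5}, {1, 2, 4},
    {"payments-0": ([1, 3, 5], [1]), "payments-1": ([2, 4, 1], [2, 4, 1])},
))
# → ['brokers not available: [3, 5]', 'payments-0 under-replicated: isr=[1] replicas=[1, 3, 5]']
```

Note that `payments-0` still serves reads and writes from its leader, which is how a cluster can look healthy to users while one more failure away from an outage.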

You’re speaking at the Velocity Conference in San Jose this June. What presentations are you looking forward to attending while there?

That is not an easy choice! I go to conferences to get inspired, and Velocity has a great mix of topics. I try to attend some talks that directly affect my day-to-day work and some that teach me something completely new. For example:

- Microservices and governance are huge on my radar because this is exactly what my financial customers are struggling with, so I’m looking forward to this talk by Michael Benedict from Pinterest.

- Lyft’s Envoy is one of the more exciting open source projects I’ve seen, so even though I’m not using Envoy I really want to attend this session by Matt Klein from Lyft.