Risto Miikkulainen on evolutionary computation and making robots think for themselves

The O'Reilly Radar Podcast: Evolutionary computation, its applications in deep learning, and how it's inspired by biology.

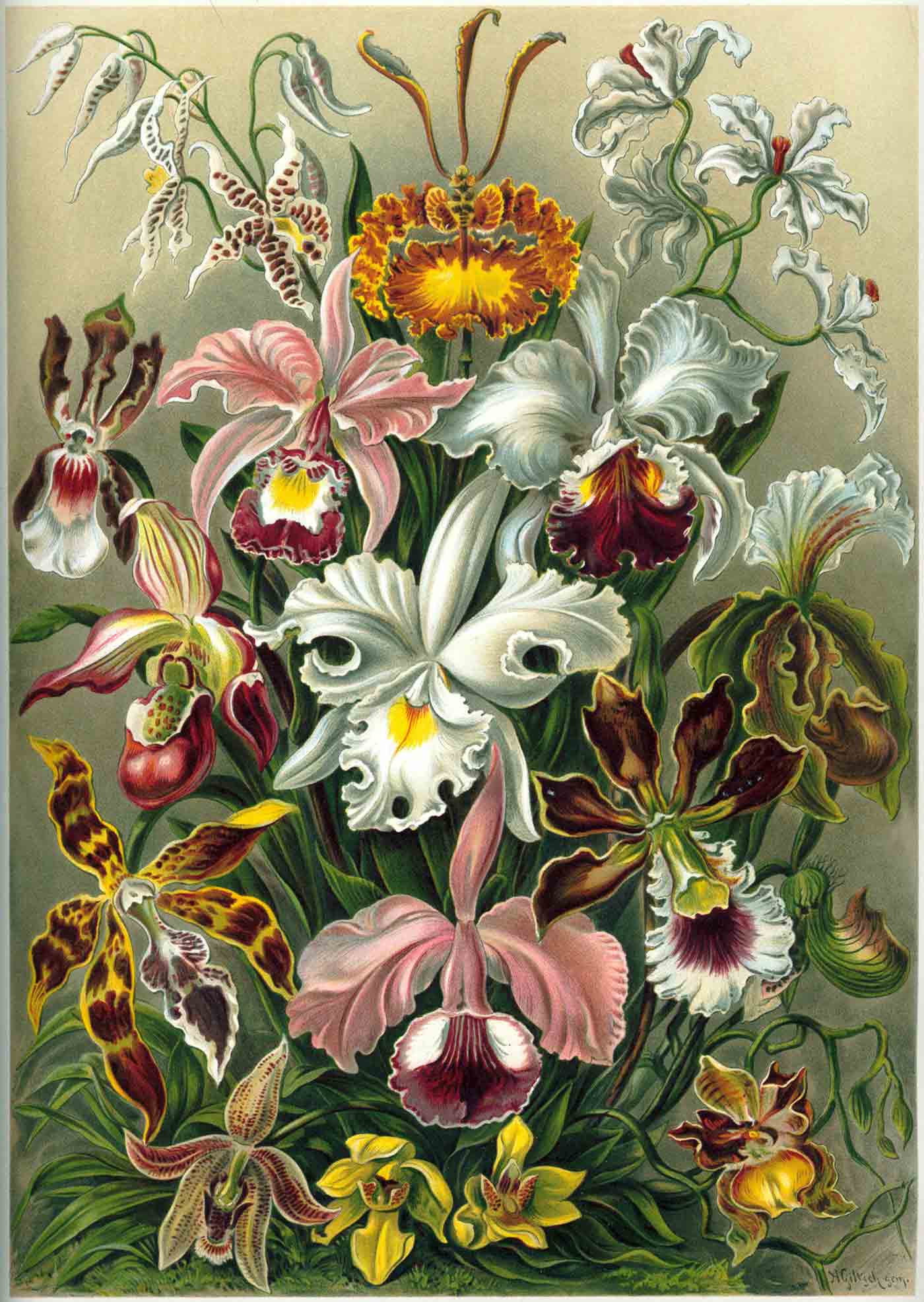

From Ernst Haeckel's "Kunstformen der Natur" of 1899. Darwin noted that orchids exhibit a variety of complex adaptations to ensure pollination. (source: Wikimedia Commons)

In this week’s episode, David Beyer, principal at Amplify Partners, co-founder of Chart.io, and part of the founding team at Patients Know Best, chats with Risto Miikkulainen, professor of computer science and neuroscience at the University of Texas at Austin. They discuss evolutionary computation, its applications in deep learning, and how it’s inspired by biology. Also note that David Beyer’s new free report, “The Future of Machine Intelligence,” is now available for download.

Here are some highlights from their conversation:

Finding optimal solutions

We talk about evolutionary computation as a way of solving problems, discovering solutions that are optimal or as good as possible. In these complex domains like, maybe, simulated multi-legged robots that are walking in challenging conditions—a slippery slope or a field with obstacles—there are probably many different solutions that will work. If you run the evolution multiple times, you probably will discover some different solutions. There are many paths of constructing that same solution. You have a population and you have some solution components discovered here and there, so there are many different ways for evolution to run and discover roughly the same kind of a walk, where you may be using three legs to move forward and one to push you up the slope if it’s a slippery slope.

You do (relatively) reliably discover the same solutions, but also, if you run it multiple times, you will discover others. This is also a new direction or recent direction in evolutionary computation—that the standard formulation is that you are running a single run of evolution and you try to, in the end, get the optimum. Everything in the population supports finding that optimum.

Biological inspiration

Some machine learning is simply statistics. It’s not simple, obviously, but it is really based on statistics and it’s mathematics-based, but some of the inspiration in evolutionary computation and neural networks and reinforcement learning really comes from biology. It doesn’t mean that we are trying to systematically replicate what we see in biology.

We take the components we understand, or maybe even misunderstand, but we take the components that make sense and put them together into a computational structure. That’s what’s happening in evolution, too. Some of the core ideas at the very high level of abstraction are the same. In particular, there’s selection acting on variation. That’s the main principle of evolution in biology, and it’s also in computation. If you take a slightly more detailed view, we have a population, and everyone is evaluated, and then we select the best ones, and those are the ones that reproduce the most, and we get a new population that’s more likely to be better than the previous population.
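The loop described above (evaluate everyone, select the best, let them reproduce with variation) can be sketched in a few lines of Python. This is only an illustrative sketch, not the method used in Miikkulainen's work: the bit-string genome, the `one_max` fitness function, the parameter values, and all function names are assumptions chosen to keep the example small. Selection here simply keeps the top `keep` individuals and copies them with random bit flips, which is one of many possible selection schemes.

```python
import random


def one_max(genome):
    """Toy fitness (an assumption for illustration): the number of 1 bits."""
    return sum(genome)


def mutate(genome, rate, rng):
    """Variation: flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(fitness, genome_len=20, pop_size=30, generations=40,
           keep=10, rate=0.05, seed=0):
    """Run a minimal evolutionary loop; return the best genome and the
    best fitness seen at the start of each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Evaluate everyone and rank the population, best first.
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        # Selection: only the top `keep` individuals get to reproduce.
        parents = ranked[:keep]
        # Parents survive unchanged; the rest of the new population
        # is made of mutated copies of randomly chosen parents.
        children = [mutate(rng.choice(parents), rate, rng)
                    for _ in range(pop_size - keep)]
        population = parents + children
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve(one_max)
    print("best fitness per generation:", history)
    print("final best genome:", best)
```

Because the selected parents are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next. Calling `evolve` with a different `seed` starts from a different random population and may follow a different path through the search space.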

Modeling biology? Not quite yet.

There are also developmental processes: most biological systems adapt and learn during their lifetime as well. In humans, the genes specify, really, a very weak starting point. When a baby is born, there’s very little behavior that they can perform, but over time, they interact with the environment and that neural network gets set into a system that actually deals with the world. Yes, there’s actually some work in trying to incorporate some of these ideas, but that is very difficult. We are very far from actually saying that we really model biology.

OSCAR-6 innovates

What got us really hooked in this area was that there are these demonstrations where evolution not only optimizes something that you know pretty well, but also comes up with something that’s truly novel, something that you don’t anticipate. For us, it was this one application where we were evolving a controller for a robot arm, OSCAR-6. It was six degrees of freedom, but you only needed three to really control it. One of the dimensions is that the robot can turn around its vertical axis, the main axis.

The goal is to get the fingers of the robot to a particular location in 3D space that’s reachable. It’s pretty easy to do. We were working on putting obstacles in the way and accidentally disabled the main motor, the one that turns the robot around its main axis. We didn’t know it. We ran evolution anyway, and evolution found a solution that would get the fingers to the goal, but it took five times longer. We only understood what was going on when we put it on screen and looked at the visualization.

What the robot was able to do was that when the target was, say, all the way to the left and it needed to turn around the main axis to get the arm close to it, it couldn’t do it because it couldn’t turn. Instead, it turned the arm from the elbow or shoulder, the other direction, away from the goal, then swung it back real hard; because of inertia, the whole robot would turn around its main axis, even when there was no motor.

This was a big surprise. We caused big problems for the robot. We disabled a big, important component of it, but it still found a way of dealing with it: utilizing inertia, utilizing the physical simulation to get where it needed to go. This is exactly what you would like in a machine learning system. It innovates. It finds things that you did not think about. If you have a robot stuck on a rock on Mars or it loses a wheel, you’d still like it to complete its mission. Using these techniques, we can figure out ways for it to do so.