This is how we do it: Behind the curtain of the O’Reilly Security Conference CFP

Insider information on the O'Reilly Security Conference proposal process, including acceptance and rejection stats.

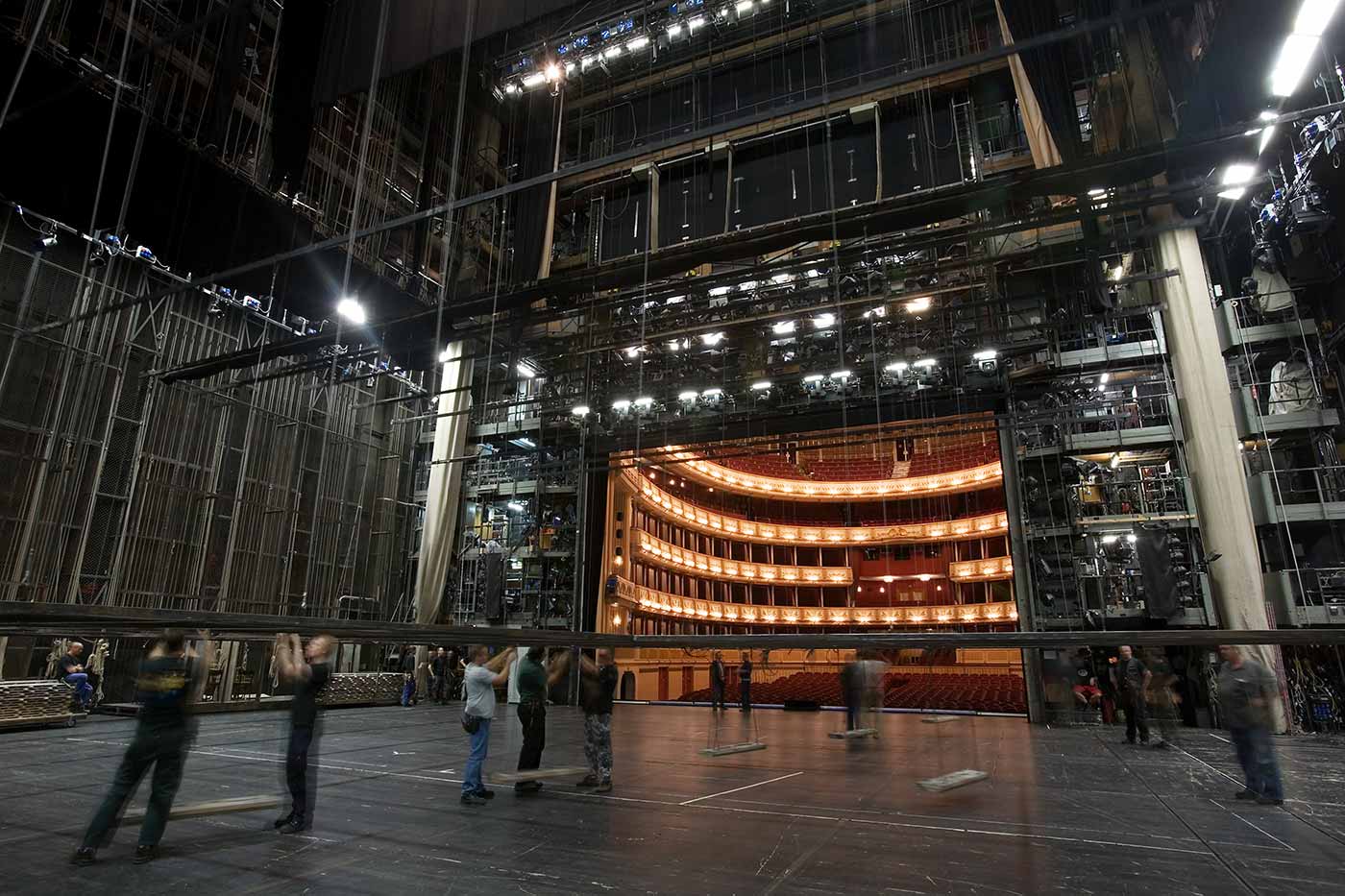

Back stage at the Vienna Opera. (source: Jorge Royan via Wikimedia Commons)

Since announcing the O’Reilly Security Conference, we’ve had great interest from folks in the security industry, especially defenders who are looking forward to stepping out of the shadows and discussing what works with other like-minded professionals. We’ve also received many queries about our process for handling the call for proposals (CFP). In the spirit of sharing (a theme we’ll be seeing quite a bit of at the event), we want to present some data and feedback from the CFP process, our own version of “Behind the Music.” This first post shares our process and some basic stats around total proposals, acceptances, and rejections. Tune in next week, when we’ll follow up with a post on hacks for increasing your chances of getting your talk accepted next year.

Bittersweet CFP

Between the NYC event and Amsterdam, the program committees reviewed more than 400 proposals, with some committee members, such as your trusty conference co-chairs, reviewing each and every one. We could only accept 40 talks for each conference (80 in total), meaning that fewer than 20% of the proposed talks were accepted. As you can imagine, that made for some very difficult decisions about which talks to accept.

Say my name

The first round of review was blind, meaning names and affiliations were excluded from consideration for the initial review. We chose this approach to reduce biases and level the playing field. Reviewers had only the title, topic/track, abstract, and key takeaways to consider in their grading. Blind reviews are common in academia, but less so in commercial/industry-driven events. Because reviewers didn’t know who wrote a proposal, they couldn’t weigh an individual’s standing in the community, gender, race, or affiliations, and instead focused on the proposal itself.[1] Feedback from the program committee on conducting blind reviews was positive, so we will continue blind reviews in future CFPs.

Everybody wants to tool the world

Nearly 40% of submissions received for the NYC event and 60% of submissions for the Amsterdam event were for the Tools track, whereas only 10-20% of proposals for each event were part of the Human Element track. Some topics within a single track were so popular that we had to break the proposals out into their own mini-list for consideration. For example, for the Data Science track, there were so many machine-learning talks that we split those proposals out to review separately from the rest of the track.

Stats, stats, baby

The program committee scored each talk on a scale from 1 to 5, with every talk receiving at least 5 reviews and some proposals receiving significantly more than that. Across all reviews, here’s a rough distribution of the scores:

- Avg: 2.9

- Min: 1.5

- Max: 4.6

Then we (the program chairs) further reviewed proposals in the top tier to ensure that coverage was appropriate and that we didn’t, for example, accept six talks on the exact same topic. We prioritized which talks in the top tier were chosen in order to provide a balance across topics and ideally establish a narrative across the talks as well. Then we accepted those top-scoring talks, waitlisted the next tier, and sent regrets to the lowest-ranking tier. Here’s the score distribution of the regrets:

- Avg: 2.375

- Min: 1.5

- Max: 2.9
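For the curious, the scoring and tiering steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tooling we actually used: the proposal IDs, review scores, slot counts, and the function names `tier_proposals` and `summarize` are all made up, and the sketch leaves out the manual rebalancing the chairs did across topics.

```python
from statistics import mean

def tier_proposals(reviews, accept_slots, waitlist_slots):
    """Rank proposals by mean review score and split them into three tiers.

    `reviews` maps a proposal ID to its list of 1-5 review scores.
    The slot counts are hypothetical parameters for this sketch.
    """
    averages = {pid: mean(scores) for pid, scores in reviews.items()}
    # Highest average first; ties keep their original insertion order.
    ranked = sorted(averages, key=averages.get, reverse=True)
    accepted = ranked[:accept_slots]
    waitlisted = ranked[accept_slots:accept_slots + waitlist_slots]
    regrets = ranked[accept_slots + waitlist_slots:]
    return averages, accepted, waitlisted, regrets

def summarize(averages, ids):
    """Return the avg/min/max of the mean scores for the given proposals."""
    scores = [averages[i] for i in ids]
    return {"avg": round(mean(scores), 3), "min": min(scores), "max": max(scores)}

# Made-up review data: four proposals, five reviews each.
reviews = {
    "talk-a": [5, 4, 5, 4, 5],
    "talk-b": [3, 3, 4, 3, 3],
    "talk-c": [2, 3, 3, 3, 3],
    "talk-d": [1, 2, 1, 2, 2],
}

averages, accepted, waitlisted, regrets = tier_proposals(
    reviews, accept_slots=1, waitlist_slots=1
)
regret_summary = summarize(averages, regrets)
print(accepted, waitlisted, regrets)
print(regret_summary)
```

In practice the cut between tiers was a judgment call informed by topic coverage rather than a fixed slot count, so treat the slicing here as a simplification.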

While we don’t collect detailed demographic information from proposers, we did allow people to note whether their presentation would have the participation of a woman, person of color, person with disabilities, or member of another group often underrepresented at tech conferences. We did not ask people to choose which of those they represented, but we can say that in total, 36% of the talks at the NYC event will include diverse speakers; Amsterdam falls slightly below that at 31%. While these percentages are not as high as we’d like, they’re actually quite high for the industry. We believe part of this is due to the program committee being intentional and persistent about finding and following up with speakers who might not otherwise have submitted. Most importantly, we expect to see those percentages rise next year.

Thrilling me softly with keynotes

Keynotes are solicited talks, meaning we don’t find these through an open CFP. As chairs, there’s a larger set of ideas we want to convey at the conference, and the keynotes are, well, key to telling those stories. We also collected nominations from the program committee (and at least one of the co-chairs is very opinionated[2] and provided a long list of candidates). We’re tremendously excited about the individuals who will be speaking in both New York and Amsterdam.

Whoomp! Sponsored talks

We believe strongly in complete transparency with conference attendees. None of the solicited keynotes, trainings, or general sessions on the agenda are “purchased.” They were all selected based on editorial and program committee feedback. That said, there are many sponsorship opportunities, including booths, events (for example, sponsoring networking), keynote remarks, and one sponsored track. Every paid speaking opportunity is labeled clearly in the program notes as “Sponsored.”

In a subsequent post, we’ll provide some tips and tricks to hack the CFP process for next year. Because everything we do, we do it for you.