Upcoming live training: Data

O'Reilly's live training events offer instructor-led, hands-on courses, with an emphasis on the social aspects of learning.

Relief found in Neumagen near Trier, a teacher with three discipuli. Around 180–185 CE. Photo of casting in Pushkin museum, Moscow. (source: Shakko on Wikimedia Commons)

We’re thrilled to present several live training courses at O’Reilly Media covering important data-related topics and practices, including popular open source frameworks, SQL fundamentals, data ethics, big data strategy for your organization, developing enterprise workflows, geospatial analytics, and machine learning.

First, two key points distinguish these courses from most other online training:

- Whether online or in person, O’Reilly courses are live, instructor-led, and hands-on, in contrast to the typical approach of offering only prerecorded classes.

- The format emphasizes the social aspects of learning; we’ve found that most people attend in groups with colleagues from work.

Those benefits may seem subtle, so let’s consider them. Each course is led by an expert practitioner in the subject, someone working in industry. They’ve seen what works and what doesn’t. They know the gotchas of delivering a product, rather than having just memorized a syllabus and slide deck. These instructors guide you through hands-on course materials, and they’re available to help answer your questions at any point.

Also, social reinforcement among one’s peers is extremely important for getting up to speed on new material. Learning new content as part of a team puts the spotlight on what matters: teams ask questions that are relevant to the problems they must solve together. For more details, check out Collective Intelligence in Human Groups, by Anita Williams Woolley at Carnegie Mellon University, which covers the dynamics of how people learn in team contexts.

Another key point from our research on training courses: many participants say they attend live courses to hear what other teams ask. For example, another group attending the course may be further along in their journey toward deploying streaming analytics; they may already be encountering issues your team won’t hit for another six months. What might you learn from that?

Next, let’s look through the upcoming courses, topics, and instructors.

Building Distributed Pipelines

On September 19–23, Andy Petrella and Xavier Tordoir from Data Fellas will present Building Distributed Pipelines for Data Science using Kafka, Spark, and Cassandra. The combination of these three open source projects represents a leading design pattern in industry for robust enterprise workflows with big data: Kafka for scalable plumbing, Spark for scalable compute, and Cassandra for scalable storage.

This course is aimed at practitioners whose roles are in transition:

- Data scientists with experience in data modeling or BI, who now must define robust pipelines to handle volume and velocity

- Software engineers with a background in Scala, Java, or Python, who now must integrate scalable technologies into their organization’s architecture

A year ago I sat down with Petrella and Tordoir at a little beer garden in Amsterdam. We’d just finished teaching a full day of related material (Spark) to a packed room at Scala Days EU. Petrella and Tordoir come from intense backgrounds in advanced math and physics, which they leverage to build machine learning apps at scale. They also bring expert insights into the full implementation stack, ranging from networks, to containers, to notebooks, to UX. Prepare for a virtual reality coaster ride through the pragmatics of scalable data pipelines.

Andy Petrella’s “(Geo) Twitter Stream in Spark Notebook” video.

SQL Fundamentals

On October 4–5, Thomas Nield from Southwest Airlines will present SQL Fundamentals for Data. This is a hands-on course for beginners who want to get up to speed in the lingua franca of data: SQL. The course covers basic data analysis and how to write SQL queries, in ways that let you practice designing and working with databases at home, without a database server environment. The course also explores principles for creating resilient database designs and understanding the “where,” “how,” and “why” of relational databases in the IT landscape.
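One common way to practice SQL without a database server is an embedded database that lives in a single file or in memory. The sketch below assumes SQLite through Python’s built-in `sqlite3` module; the course may use different tooling, and the `orders` table and its rows are hypothetical sample data. It touches both halves of the description: a design step (constraints that reject bad rows) and a basic analysis query.

```python
import sqlite3

# ":memory:" creates an in-memory SQLite database: no server to install or run.
conn = sqlite3.connect(":memory:")

# Design step: constraints let the database itself reject bad rows.
# The orders table and its rows are hypothetical sample data.
conn.execute("""
    CREATE TABLE orders (
        order_id   INTEGER PRIMARY KEY,
        region     TEXT    NOT NULL,
        amount_usd INTEGER NOT NULL CHECK (amount_usd >= 0)
    )
""")
conn.executemany(
    "INSERT INTO orders (order_id, region, amount_usd) VALUES (?, ?, ?)",
    [(1, "East", 120), (2, "West", 80), (3, "East", 30), (4, "North", 200)],
)
conn.commit()

# The CHECK constraint refuses a negative amount.
rejected = None
try:
    conn.execute("INSERT INTO orders VALUES (5, 'West', -10)")
except sqlite3.IntegrityError as exc:
    rejected = str(exc)

# Analysis step: order count, total, and average amount per region.
rows = conn.execute("""
    SELECT region,
           COUNT(*)        AS n_orders,
           SUM(amount_usd) AS total,
           AVG(amount_usd) AS average
    FROM orders
    GROUP BY region
    ORDER BY total DESC
""").fetchall()

for region, n_orders, total, average in rows:
    print(f"{region}: {n_orders} orders, total {total}, average {average:.1f}")

conn.close()
```

Passing a file path instead of `":memory:"` to `sqlite3.connect` keeps the practice database on disk between sessions.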

This course is useful for a range of professionals, including:

- Business analysts with some data analysis background—who now must access and make sense of larger data sets.

- IT professionals—to expand your skills base by understanding the core principles of database design.

- Software developers—who need to build solutions based on data.

- Project managers, to manage teams of data analysts and engineers, and understand project feasibility, quality control, etc.

For additional info about this course, Nield has a free webcast, “A quick lesson on SQL querying basics,” coming up on Wednesday, September 14, 2016, at 2:00 p.m. PT.

Data Ethics

Also on October 4–5, Anna Lauren Hoffmann from UC Berkeley will present Data Ethics: Designing for Fairness in the Age of Algorithms. Learn how to build fair and ethical data projects from the ground up. The topic has drawn considerable attention recently, notably from the White House. Important public issues include how bias and discrimination get re-encoded into algorithmic systems, which in turn fundamentally shape how the public uses technology. Questions surrounding the ethical use of data become especially urgent as public awareness of biased software and algorithms continues to grow.

This course is especially relevant if:

- Your organization collects, stores, or analyzes personal data, and you must recognize and address ethical issues regarding uses of that data.

- You’re working to use data science for social good and must ensure that your means are as ethical as your ends.

- You’re a policymaker and therefore must ensure that your words and practices build toward positive outcomes.

Distributed Computing with Spark for Beginners

On October 10–14, Tianhui Michael Li and Ariel M’ndange-Pfupfu from The Data Incubator will present Distributed Computing with Spark for Beginners.

Apache Spark has become the most popular and active open source project hosted by the Apache Software Foundation. That’s no surprise: Spark helps make complex distributed computing tasks easy to conceptualize, succinct to program, and reliable to run. However, it takes practice, a lot of hands-on practice, to become fluent in Spark.

In this hands-on course, you’ll dive into how Spark works under the hood, using the core Spark API to build apps end to end. For example, you’ll learn how to perform typical ETL, queries, joins, and aggregations on data sets using Spark and SQL. You’ll also cover critical tooling, how to deploy jobs in the cloud with large, real-world data sets, and how to monitor and tune for performance.

This course is important for software developers who need to…

- use parallelism to write efficient big data applications

- take advantage of Spark’s execution model to realize better performance in complex operations

- understand how Spark applications are run on a cluster, and broken down into jobs, stages, and tasks
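Spark itself isn’t needed to see the rough shape of that execution model. The plain-Python sketch below loosely mimics how a word count using a `reduceByKey`-style aggregation splits into a map stage (one task per input partition, each pre-aggregating locally), a shuffle that routes keys by hash (similar in spirit to Spark’s default hash partitioning), and a reduce stage. It is not Spark’s API or scheduler; the function names, input lines, and partition counts are hypothetical.

```python
from collections import Counter, defaultdict

def map_task(partition):
    """Stage 1 task: tokenize one partition and pre-aggregate counts locally,
    the map-side combine a reduceByKey-style aggregation does before shuffling."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts

def shuffle(partial_counts, num_reducers):
    """Route each (word, count) pair to a reduce bucket by hashing the key,
    so every partial count for a given word lands in the same bucket."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for counts in partial_counts:
        for word, n in counts.items():
            buckets[hash(word) % num_reducers][word].append(n)
    return buckets

def reduce_task(bucket):
    """Stage 2 task: merge the partial counts for the keys in one bucket."""
    return {word: sum(ns) for word, ns in bucket.items()}

# Hypothetical input, already split into three partitions.
partitions = [
    ["spark runs jobs", "jobs have stages"],
    ["stages have tasks"],
    ["tasks run on executors", "spark schedules tasks"],
]

partials = [map_task(p) for p in partitions]   # map stage: 3 tasks
buckets = shuffle(partials, num_reducers=2)    # shuffle: the stage boundary
word_counts = {}
for bucket in buckets:                         # reduce stage: 2 tasks
    word_counts.update(reduce_task(bucket))

print(sorted(word_counts.items()))
```

In Spark, a shuffle like this is where one stage ends and the next begins, which is why the local pre-aggregation in the map tasks matters: it shrinks the data that has to cross the network.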

A programming background in either Python or Scala is a prerequisite. The instructors will provide cloud computing resources and Jupyter notebooks for the course materials, based on version 1.5.1 of Apache Spark. You’ll also need a Google account associated with an email address to access those resources.

Managing Enterprise Data Strategies

On October 18–19, Jesse Anderson from Smoking Hand will present Managing Enterprise Data Strategies with Hadoop, Spark, and Kafka. Big data projects can turn into large investments, where even small implementation failures become time-consuming and expensive. Drawing on his big data experience at Cloudera and his consulting work with many large enterprise teams, Anderson emphasizes that a course is a great place to let yourself make mistakes during the planning phase, rather than in the more expensive development phases that come later.

You don’t need deep technical knowledge to manage successful data strategies; however, you do need an understanding of both the pitfalls and potentials that data solutions can bring. This course is aimed at:

- CxOs, VPs, or technical managers who have some familiarity with big data terminology, and who now must solve specific data problems at scale.

- Anyone with business experience who is moving into a career in big data.

I’ve had the privilege of teaching alongside Anderson and, moreover, of many conversations comparing notes, from which I’ve learned so much about how the industry works and how large projects succeed or fail in practice. I’m indebted to Anderson for many insights, and I hope that you and your team can similarly benefit from what he teaches.

For additional info about this course, Anderson has a free webcast, “Engineering big data solutions,” coming up on Tuesday, October 4, 2016, at 2:00 p.m. PT.

Geo-Located Data

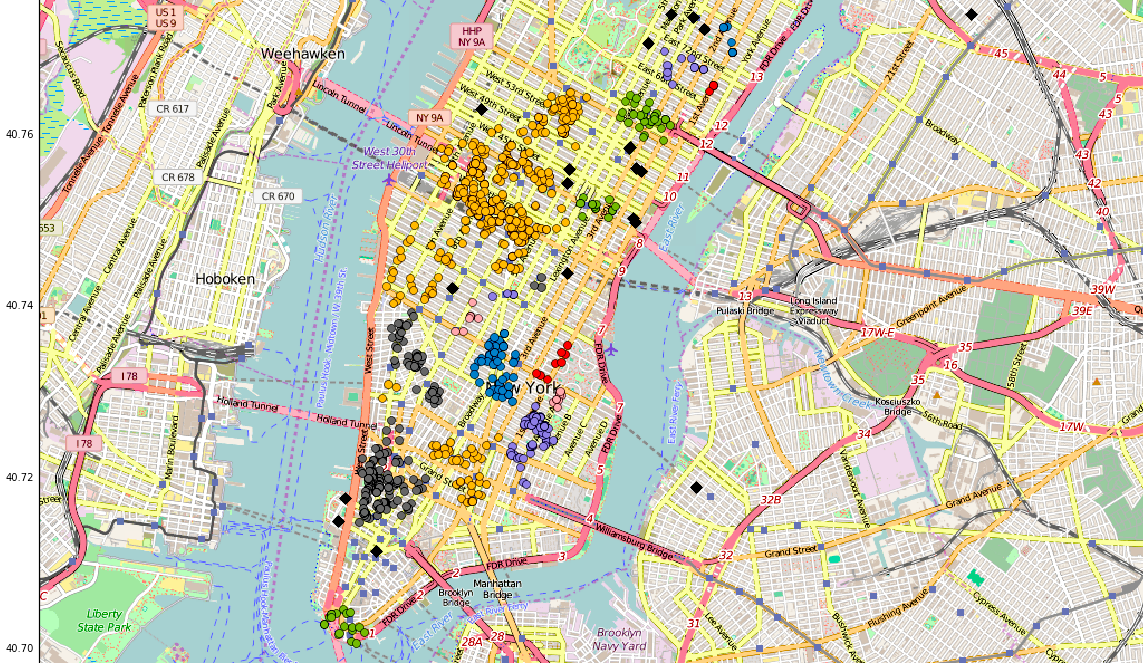

On November 1–2, Natalino Busa from Teradata will present Geo-Located Data: Extracting Patterns from Mobile Data using Scikit-Learn and Cassandra. Learn how to extract patterns and detect anomalies within geo-located data using machine learning algorithms such as K-Means and DBSCAN. Get hands-on experience with a powerful set of complementary tools: use scikit-learn and Jupyter notebooks to prepare and cluster geo-located data, load and extract data and models with Apache Cassandra, then build data-driven microservices in Python to prototype a venue recommender and a geo-fencing alerting engine.
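To give a feel for what DBSCAN does with geo-located points, here is a minimal sketch. The course uses scikit-learn; to keep this example free of dependencies, it instead implements DBSCAN by hand in plain Python, using the haversine (great-circle) distance, a reasonable choice for latitude/longitude pairs. The coordinates, `eps_km`, and `min_samples` values are hypothetical, and the brute-force neighbor search is only suitable for small inputs.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def dbscan(points, eps_km, min_samples):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Brute-force O(n) scan; the result includes point i itself,
        # the same convention scikit-learn uses for min_samples.
        return [j for j in range(n) if haversine_km(points[i], points[j]) <= eps_km]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may later be claimed as a border point
            continue
        cluster += 1  # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster, not expanded
                continue
            if labels[j] is not None:
                continue  # already assigned
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is also core: keep growing the cluster
    return labels

# Hypothetical check-in coordinates: two tight groups plus one far-away point.
check_ins = [
    (52.3700, 4.8900), (52.3705, 4.8910), (52.3710, 4.8895),
    (52.3600, 4.9200), (52.3604, 4.9206), (52.3598, 4.9195),
    (52.4000, 4.8000),
]
labels = dbscan(check_ins, eps_km=0.2, min_samples=3)
print(labels)  # → [0, 0, 0, 1, 1, 1, -1]
```

The isolated point comes back as noise (`-1`), which is what makes DBSCAN useful for the anomaly-detection side of the course description. With scikit-learn, a comparable setup is `DBSCAN(eps=0.2 / 6371.0, min_samples=3, metric="haversine")` fitted on coordinates converted to radians, because its haversine metric works in radians on a unit sphere.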

Here’s a key point: most data has a geo component. Leveraging geospatial analytics can be a sophisticated step, but it is hugely important for gaining insights, useful outcomes, and competitive advantage. This course provides a great way to learn how geographical analyses enable a wide range of services, from location-based recommenders to advanced security systems. You’ll also gain hands-on experience packaging data-driven apps as microservices. I’ve been working with Busa on related projects (other upcoming publications about geospatial machine learning), and I’m fascinated by how he combines multiple sources of open data to augment analysis and visualization. He makes it look easy, and with that hands-on experience, I hope this important set of skills becomes easier for you to leverage as well.

Natalino Busa’s video on real-time anomaly detection with Cassandra, Spark, and Akka.

We have many more live training events under development, and we’re continuing to add more each week. For group tickets and enterprise licensing, please contact onlinetraining@oreilly.com.