Using technology to design across the senses

The O'Reilly Radar Podcast: Designing a framework to shape how humans experience technology in the physical world.

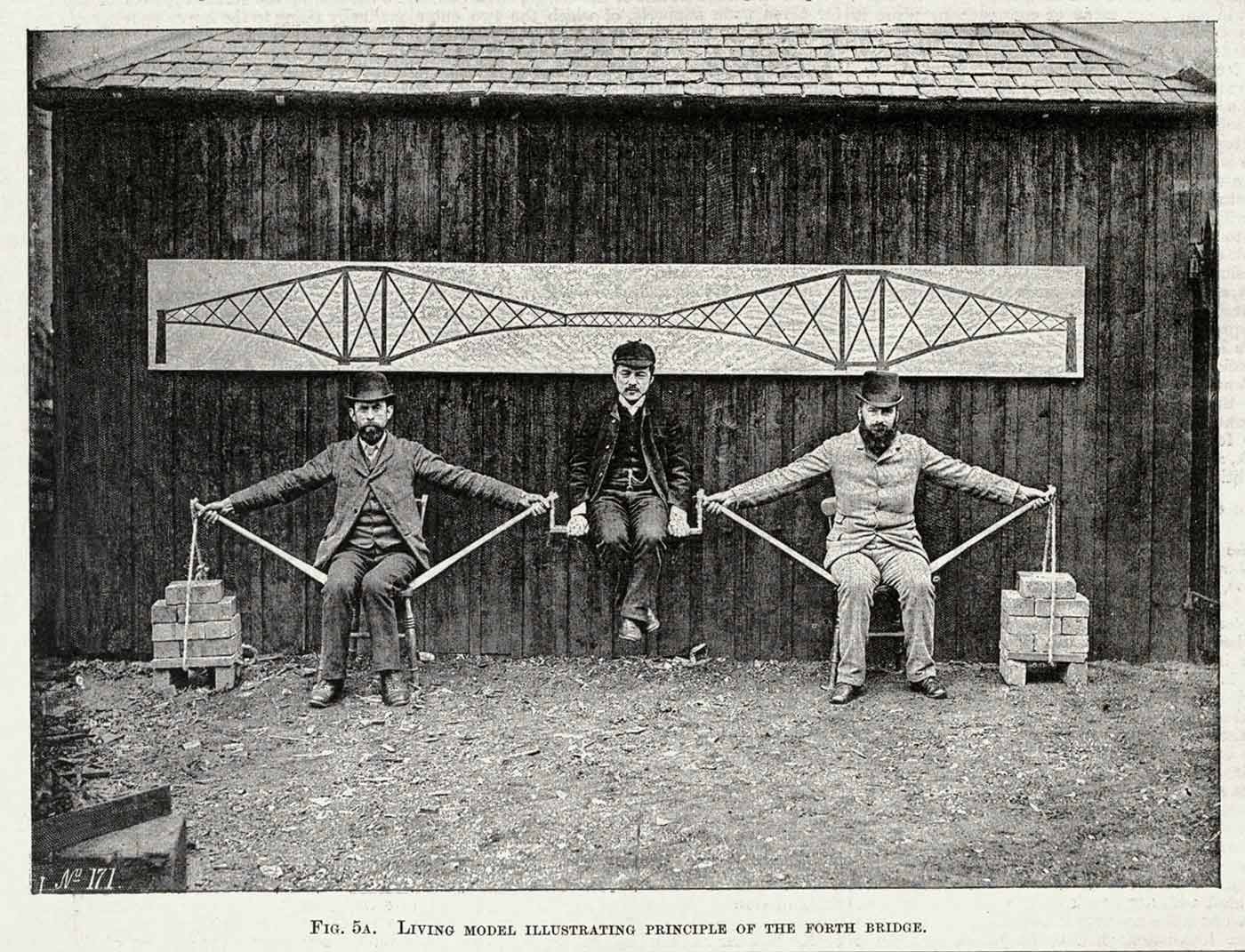

Postcard of Benjamin Baker's human cantilever bridge model. (source: Wikimedia Commons)

In this week’s episode of the Radar Podcast, O’Reilly’s Mac Slocum chats with Christine Park, senior product designer at Basis, and John Alderman, director of Supereverywhere. They talk about multi-modal design, an approach that considers the physical senses and the role they play in the user experience, and about how multi-modal design applies to the Web.

Here are a few highlights:

Christine: A lot of the focus on human factors in design that influences technology is really based on human factors that were developed for industrial design, how we use physical objects in environments. We’re entering a new stage in design where we have to think about how we actually use physical information. Multi-modal design is asking the questions, “How do we experience information in our environment? What does it take? What are the limitations? What are our strengths at observing and experiencing physical information? How should that and could that shape the way we experience technology?”

John: The Web, like almost every other medium, is multi-modal. It’s just that it’s multi-modal within a very restricted set of senses and modes. Someone sitting butt on a chair facing a computer screen only has a few modes that they’re really engaging with, so design has reflected that. It’s stayed pretty static. One of the things that we’re trying to do is expand that set of modes, because as people move out into the world, their senses operate in very different ways. … The Web is a part of a lot of different experiences already; it’s just not recognizable as being a browser-based experience. I think that’s one of the interesting things: what will the new frameworks for Internet usage become?

John: When photography came along, it freed painters to be non-pictorial. It opened it up. Painting didn’t go away. It focused on its core competency, to use modern language. Similarly, with these other mediums, they’ll be freed to an extent to focus on what they are best at. That said, there’s just so much interesting stuff going on in all the others that designers will have to be fluent in all of them. There are edges where they start operating together that will be really fertile.

Christine: At the end of the day, it comes back to human experience—what we want to do, what we need to do. There are different levels of problems that need to be solved. All of the disciplines have different ways of addressing those problems, and we come together creating these products. We have many different bags of tools to choose from. They get all thrown out on the table in this exploratory period, in this new phase of technology development, the IoT. With all these new kinds of products that are emerging, everything’s up for grabs. Whoever can solve the problem will get to solve the problem.