It’s time for a web page diet

Site speed is essential to business success, yet many pages are getting bigger and slower.

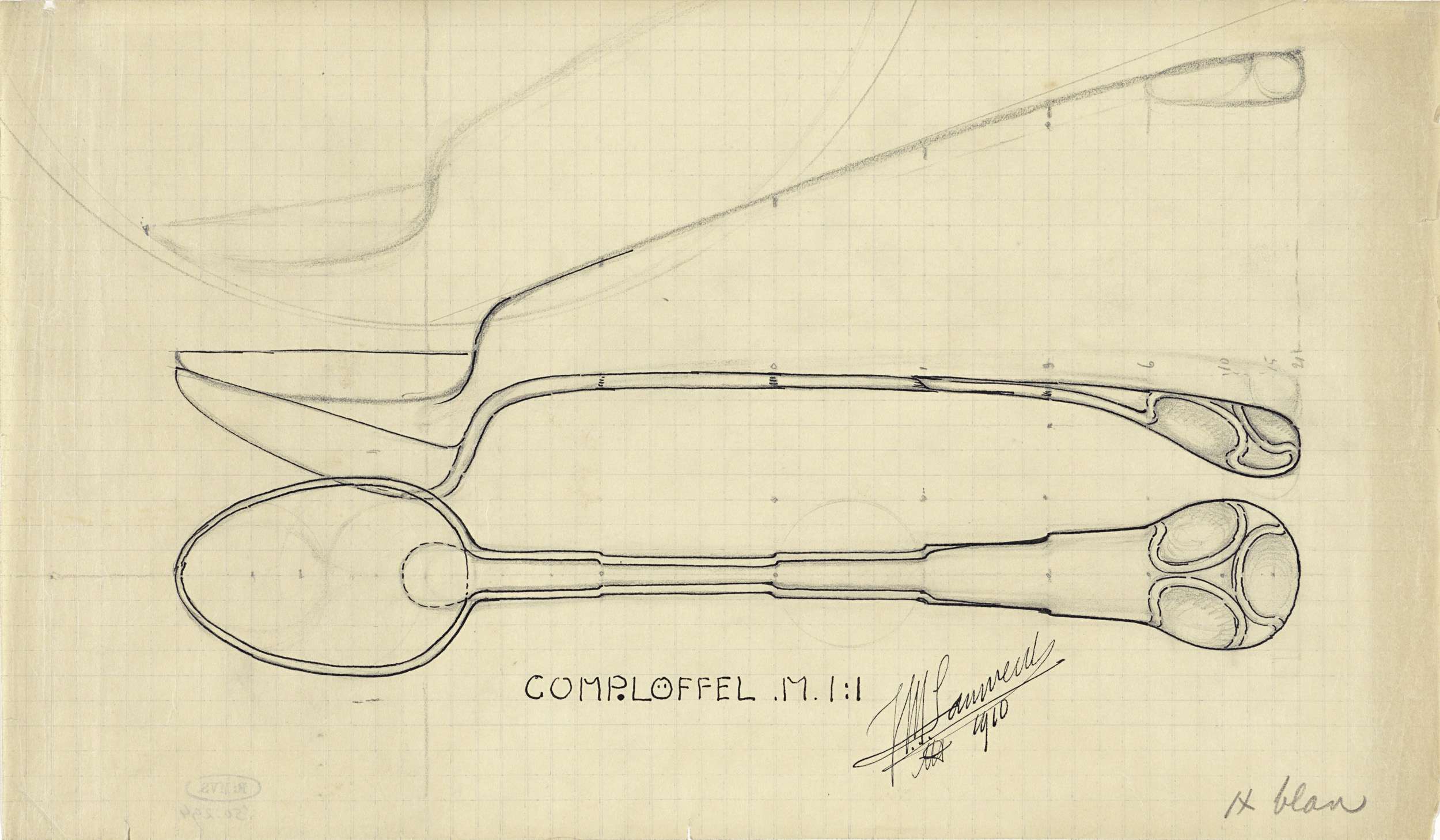

Ontwerp voor een compotelepel, Mathieu Lauweriks, 1910 (source: Rijksmuseum)

Earlier this year, I was researching online consumer preferences for a client and discovered, somewhat unsurprisingly, that people expect web sites to be fast and responsive, particularly when they’re shopping. What did surprise me, however, were the findings in Radware’s “State of the Union Report Spring 2014” (registration required), which showed that web sites were, on average, getting bigger in bytes and slower in response time every year. In fact, the average Alexa 1000 web page grew from around 780KB and 86 resources in 2011 to more than 1.4MB and 99 resources by the time of the earlier “2014 State of the Union Winter Report.”

As an experiment, I measured the resources loaded for Amazon.com on my own computer: 2.6MB loaded with 252 requests!

This seemed so odd. Faster is more profitable, yet companies were actually building fatter and slower web sites. What was behind all these bytes? Had web development become so sophisticated that all the technology would bust the seams of the browser window?

The main problem is performance. Time to first interaction (the point at which users can read your content or start some interaction on your page) has a direct impact on customer experience. A good experience can make the difference between converting a visit into a purchase and having the visitor close the tab before your page has even rendered. Even if you’re not selling anything, the same rules apply: If your site is slow or unresponsive, visitors will ignore it.

To illustrate just one aspect of the performance problem, let’s go back to the beginning of this post: the average site this year measured over 1.4MB and 99 resources.

The 1.4MB size seems alarming, but those 99 resource requests raise further warning flags. Externally referenced images, CSS and script files each require a separate request, and the round-trip cost of each request is a primary cause of latency and slow rendering. Resources can often be fetched from the cache on repeat visits, which is faster, but you only get one chance at a first impression. Make sure that initial experience is a good one.
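To get a feel for how round trips add up, here is a back-of-the-envelope sketch in Python. It is a deliberately simplified model, not a measurement: the function name, the 100 ms round-trip time, and the limit of six parallel connections are all assumptions I picked for illustration, and it ignores download time, caching, multiple hosts and protocol differences.

```python
import math


def estimate_round_trip_delay(requests: int, rtt_seconds: float,
                              parallel_connections: int = 6) -> float:
    """Rough lower bound on time spent waiting on round trips alone.

    Assumes requests are issued in "waves" of `parallel_connections`
    at a time, and that each wave costs one full round trip.
    """
    if requests < 0 or rtt_seconds < 0 or parallel_connections < 1:
        raise ValueError("requests and rtt must be >= 0, connections >= 1")
    waves = math.ceil(requests / parallel_connections)
    return waves * rtt_seconds


# 99 requests (the average cited above), with an assumed 100 ms round trip
delay = estimate_round_trip_delay(requests=99, rtt_seconds=0.1)
print(f"{delay:.1f} s")  # prints "1.7 s"
```

Even under these generous assumptions, the waiting alone approaches two seconds before a single byte of payload is counted, which is why cutting the number of requests often pays off more than shaving kilobytes.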

In addition, if those external references include scripts, each one can block parsing and rendering of the rest of the page until it has downloaded and executed. Every script you reference in a page is a potential source of latency, slowing down rendering and interaction.
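One way to spot candidates is to scan the markup for external scripts that carry neither the `async` nor the `defer` attribute. The sketch below uses Python's standard `html.parser` module; the class name, function name and sample file names are hypothetical, and it only inspects static HTML, so scripts injected at runtime will not show up.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects the src of external scripts that block HTML parsing."""

    def __init__(self):
        super().__init__()
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        if not src:
            return  # inline script: no extra network request to wait on
        if "async" in attributes or "defer" in attributes:
            return  # fetched without holding up the parser
        if (attributes.get("type") or "").strip().lower() == "module":
            return  # module scripts are deferred by default
        self.blocking.append(src)


def find_blocking_scripts(html: str) -> list:
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking


sample = """
<head>
  <script src="analytics.js"></script>
  <script src="widgets.js" async></script>
  <script src="app.js" defer></script>
  <script>console.log("inline");</script>
</head>
"""
print(find_blocking_scripts(sample))  # ['analytics.js']
```

Anything this reports is worth a second look: can it move to the end of the page, or load with `async` or `defer` instead?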

The solutions to this particular problem are fairly straightforward. Count all of the components that have to be requested to render your page. Can you reduce this number? Combine and minify your CSS files. Take a close look at where you reference scripts and when they execute. Are scripts blocking page rendering or interaction?
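As a starting point for that count, here is a minimal sketch that tallies the external images, scripts and stylesheets referenced directly in a page's HTML. The names are my own, and the tally is only a floor: it will miss anything requested from inside CSS or by scripts at runtime (background images, fonts, Ajax calls), so treat your browser's network panel as the authoritative number.

```python
from collections import Counter
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Tallies external resources referenced directly in the markup."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and attributes.get("src"):
            self.counts["images"] += 1
        elif tag == "script" and attributes.get("src"):
            self.counts["scripts"] += 1
        elif tag == "link" and attributes.get("href"):
            # rel may hold several space-separated values
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self.counts["stylesheets"] += 1


def count_external_resources(html: str) -> dict:
    counter = ResourceCounter()
    counter.feed(html)
    counter.close()
    return dict(counter.counts)


page = """
<link rel="stylesheet" href="reset.css">
<link rel="stylesheet" href="site.css">
<script src="app.js"></script>
<img src="logo.png" alt="Logo">
<img src="hero.jpg" alt="Hero">
"""
counts = count_external_resources(page)
print(counts)                # {'stylesheets': 2, 'scripts': 1, 'images': 2}
print(sum(counts.values()))  # 5
```

In this sample, merging the two stylesheets into one would already cut the request count from five to four.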

Slimming down web sites is not necessarily a matter of learning new and sophisticated programming techniques. Rather, getting back to basics and focusing on correct implementation of web development essentials — HTML, CSS and JavaScript — may be all it takes to make sure your own web sites are slim, speedy and responsive.

In Web Page Size, Speed, and Performance, I illustrate some of the most common reasons why web pages have become fat and slow, and provide a primer on essential web development techniques that help make sure your own site stays slim and fast.