Chapter 4. Cloud Computing: Patterns for The What, The How, and The Why

A couple of years ago, one of the authors was walking with a group of kids during a volunteer elementary school event. Like all kids of that age, they asked him nonstop questions about anything and everything. But one question was so intriguing (“What’s in the clouds?”) that he felt compelled to respond (“A bunch of Linux servers.”). While the response surely left bewildered the few kids who were actually listening, it did bring a smile to the author’s face. Of course, today the answer would expand to include almost any kind of server and operating system you could imagine! We’re sure it doesn’t need to be said (and we will explain all of this in this chapter), but being “in the cloud” doesn’t mean there aren’t hundreds of miles of networking cable lying around in some brick-and-mortar facility, and “serverless computing” doesn’t mean there aren’t servers running code; it just means they aren’t necessarily yours to run, so there’s less for you to worry about.

Cloud has incredible momentum, and while there is still a great deal of value to be gained from using it properly as a capability, conversations about it have moved well beyond the hype. The cloud, once used for one-off projects or testing workloads, has become a development hub, a place for transactions and analytics, a services procurement platform where things get done. In fact, it’s whatever you decide to make it. That’s the beauty of the cloud: all you need is an idea and the right mindset (cloud the capability, not the destination), and you can work magic.

In this chapter we want to introduce you to different cloud computing usage patterns and the different ways to use those “servers in the sky” for your business: from an infrastructure perspective, to ready-to-build software for rolling your own apps, all the way down to a function’s execution time measured to the millisecond. And of course, since we already know that cloud is a capability and not a destination, we can apply these patterns on-premises or in any vendor’s cloud computing environment. By the end of this chapter, you’ll understand acronyms like IaaS and PaaS, and you’ll also know that the answer to “What is serverless computing?” isn’t “A computer that is serverless”; rather, you’ll recognize it as a pattern.

Note

Some of the concepts covered in this chapter have been around for a while. Since the first part of this book is mostly geared toward business users, we felt it important to include these topics before we delve deeper into cloud discussions. If you know IaaS, PaaS, and SaaS, you might want to jump to “The Cloud Bazaar: SaaS and the API Economy”, where we get into the details of APIs, REST services, and some of the newer cloud patterns: serverless and function as a service. And if you already understand that serverless doesn’t mean the absence of a physical server, or that PaaS isn’t a play option in American football, you could consider skipping this entire chapter.

Patterns of Cloud Computing: A Working Framework for Discussion

A journey toward the adoption of the “cloud” in many ways mimics the transition from traditional data “systems of record,” to “systems of engagement,” to “systems of people.” Consider the emergence of social media—which has its very beginnings in the cloud. In the history of the world, society has never shared so much about so little. You simply can’t deny how selfies, tweets, and TikTok dances have (for better or worse) become part of our vocabulary.

Just like how the web evolved from being a rigid set of pages to a space that’s much more organic, interactive, and integrated, the last decade has borne witness to a continuing transformation in computing, from hardened silos to flexible as-a-service models that operate in a public cloud to those that operate in a hybrid cloud. Why? Three words: social-mobile-cloud.

Social-mobile-cloud has dramatically accelerated social change in unanticipated ways; in fact, it has completely altered the flow of information for the entire planet. Information used to flow from a few centralized sources out to the masses. Major media outlets like the BBC, CNN, NY Times, and Der Spiegel were dominant voices in society, able to control conversations about current events. Social-mobile-cloud has obliterated the dominance of mass media voices (for better or worse, as “Fake News” is a hot topic) and changed the flow of information to a many-to-many model. In short, the spread of mobile technology and social networks, as well as unprecedented access to data, has changed humanity: disconnected individuals have become connected groups, technology has changed how we organize and how we engage, and more. We call this mode of engagement The Why.

Of course, all this new data has become the new basis for competitive advantage. We call this data The What. All that’s left is The How. And this is where the cloud comes in. This is where you deploy infrastructure, software, services, and even monetized APIs that deliver analytics and insights in an agile way.

If you’re a startup today, only in rare cases would you go to a venture capital firm with a business plan that includes the purchase of a bunch of hardware and an army of DBAs to get started. Who really does that anymore? You’d get shown the door, if you even made it that far. Instead, what you do is go to a cloud company. But what if you’re not a startup? What if you’re an enterprise with established assets and apps? Then you want the cloud (the capability) at your destination of choice.

The transformational effects of The How (cloud), The Why (engagement), and The What (data) can be seen across all industries and their associated IT landscapes. Consider your run-of-the-mill application developer and what life was like before the cloud era and as-a-service models became a reality. Developers spent as much time navigating roadblocks as they did writing code! The list of IT barriers is endless: contending with delays for weeks or months caused by ever-changing backend persistence (database) requirements, siloed processes, database schema change synchronization cycles, cost models heavily influenced by the number of staff that had to be kept in-house to manage the solution, and other processes longer than this sentence. And we didn’t even mention the approval and wait times just to get a server in the door so that you can experience all the friction we just detailed. Make no mistake about it, this goes on in every large company—horror stories are ubiquitous. To be honest, looking back, we think it’s a wonder that any code got written at all.

Development is a great example of this shift. Its move toward agile is encapsulated by the catchphrase continuous integration and continuous delivery, or CI/CD for short. In the development operations (DevOps) model, development cycles get measured in days; environment stand-up times are on the order of minutes (at most hours); the data persistence layer (where the data is stored, like in a database) is likely to be a hosted (at least partially) or even a fully managed service (which means that someone else administers it for you); the platform architecture is loosely coupled and based on an API economy combined with open standards; and the cost model is variable and expensed operationally, as opposed to a fixed capital cost depreciated over time.

As is true with so many aspects of IT, the premise of this book is our assertion that this wave of change has yet to reach its apex. Indeed, there was much hype around cloud, as we discussed in Chapter 1, but many practitioners are now “hitting the wall” of the hype barrier. There is no question that cloud delivers real value and agility today, but for larger enterprises that value has so far been limited.

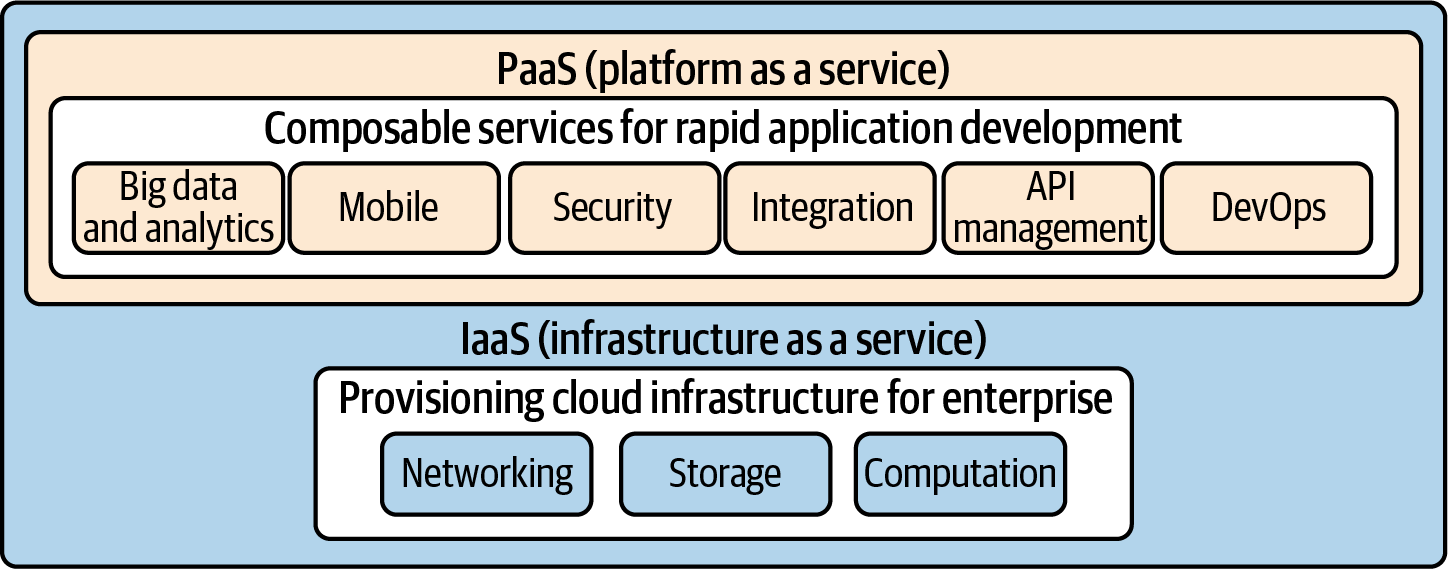

“As-a-service” generally means a cloud computing service that is provided for you (for a fee) so that you can focus on what’s important to your business: your code, iterative improvements to custom apps, the relationships you have with your customers, and so on. Each type of cloud pattern leaves you less and less “stuff” to worry about. We broadly (and coarsely) categorize these cloud patterns (provisioning models and concepts) into what you see in Figure 4-1: infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS)—we left the serverless computing pattern out for now.

Figure 4-1. A high-level comparison of responsibilities at each cloud pattern level

These cloud patterns all stand in contrast to traditional IT, where individual groups and people had to manage the entire technology stack themselves. This is the way many of us “grew up” in our careers. Cloud (the capability) has blurred the lines of where these services are hosted, which is perfect, because now we can talk about them as patterns without a footnote for where they reside, rather than leading the discussion with the destination. Your cloud resides where you want (or better yet, need) it to reside. You no longer have to differentiate between on-premises and off-premises to get access to cloud capabilities.

The changing shading in the second, third, and fourth columns of Figure 4-1 is intended to show where responsibility falls (with you or with the provider) for the various layers of any compute solution.

Note

This terminology gets a little blurry in a cloud (the capability) discussion because your own company could be managing and hosting the app dev frameworks (with instrumented chargeback accounting) for a good portion of your app, but you’re also stitching in serverless APIs (like Twilio) for some of the workflow. When you’re talking about cloud as a capability, if the environment is hosted, administrative responsibilities fall on you, and if managed, someone else has to worry about all the things you typically worry about. It’s the difference between sleeping over at mom’s house (where you are expected to still do a lot of work—you are hosted) or sleeping at a resort (managed—it’s all done for you in a luxurious manner that you’ve paid for).

Order Up: Pizza as a Service

Before we delve into the descriptions associated with the cloud patterns shown in Figure 4-1, we thought it’d be a fun exercise to imagine these cloud patterns in the context of pizza—because we’re confident the world over loves a good “wheel.”

Note

Note that this is a simplistic scenario: there are permutations and combinations to the story that could break it down or make it better—we’re intentionally trying to keep things simple…and fun.

Let’s assume you’ve got a child going into their sophomore year at university who’s living at home for the summer. Your kid mentions that they found a gourmet “Roast Pumpkin & Chorizo” pizza recipe on the internet (pizza traditionalists know this...we’re just as aghast) and wants to use it to impress a date. You laugh and remind your offspring that you just taught them how to boil an egg last week. After some thought, your kid concludes that making a gourmet pizza is too much work (getting the ingredients, cooking, cleaning, preparation, and so on) and that their core competencies are nowhere to be found in the kitchen. They announce this conclusion and share plans to take their date to a downtown gourmet pizza restaurant. You begin to ask, “How can you afford that?” but you stop because you know the answer and the question coming to you next (it has the word “borrow” in it, which really means “give”). Think of this date as software as a service (SaaS). The restaurant manages everything to do with the meal: the building and kitchen appliances, the electricity, the cooking skills, the ingredients, right up to the presentation of the food itself. Your financially indebted child just has to get to the restaurant (think of that as a connection to the internet), and once the romantic duo arrives, all they have to do is consume the pizza and pay.

Junior year is rolling around, and as you look forward to your empty-nest house again, the COVID-19 pandemic assures you that the company of your loved one will remain constant for months to come. Your kid is still single (apparently chorizo pizza doesn’t guarantee love), another budding romance is announced, and this time dinner is in a more intimate setting: your house. Convinced the perfect mate will love a pumpkin and cured sausage pizza, your kid scurries off to the grocery store to get all the same ingredients but begs you for help preparing and baking the meal before you and your spouse are asked to go for a three-hour walk once the date arrives. As a dedicated parent, you want your offspring to find love (it might speed up the move-out process), so you go along with the whole plan. While you’re out of the house and your child serves up the meal, you stop, turn to your significant other with warmth in your heart, and say, “Darling, we are platform as a service (PaaS) to our child.” Your partner looks at you and says, “You’re the most unromantic person I know.” So how is this a PaaS cloud pattern? Think about it: you provided the cooking “platform.” The house, the kitchen appliances, the cutlery and plates, the electricity: it was all there for any chef (developer) to come on in and cook (create). Your child brought in the ingredients (coding an app) and cooked the recipe. (There’s a variation of this junior year PaaS story called serverless computing, and it sits between PaaS and SaaS. We’ll get into the details later in this chapter.)

It’s senior year, your part of the world has found a way past the pandemic, and your beloved kid now lives on their own somewhere else (insert heavenly sounding rejoice music). This time they’re convinced they’ve found their pumpkin and chorizo soulmate. Your love-stricken offspring has found their own place to rent (the server), and rent includes appliances and utilities (storage and networking). Your child has invited their potential soulmate for a date and wants the place to be as classy as possible. They take a trip to IKEA for some tasteful place settings, a kitchen table, and chairs (your 20-year-old couch wouldn’t be suitable for such an important guest to sit and eat on); call it the operating system. Next stop, the grocery store for the ingredients (the runtime and middleware), and finally a cooked meal on date night. It’s hard to believe your kid is finally this independent, but they essentially owned the entire process from start to finish, outside of the core infrastructure they rented (the server, storage, and so on). Your child has become an infrastructure as a service (IaaS) pizza maestro.

Notice what’s common in every one of these scenarios: at the end of the evening, your child eats a pumpkin and chorizo pizza (albeit with different people, but that’s beside the point). In the IaaS variation of the story, your kid did all of the work. In the PaaS and SaaS scenarios, other people took varying amounts of responsibility for the meal. Today, even though your child is independent and has the luxury of their own infrastructure (the apartment), they still enjoy occasionally eating at restaurants; it’s definitely easier to go out for sushi than make it. This is the beauty of the as-a-service cloud patterns—you can consume services whenever the need arises to offload the management of infrastructure, the platform, or the software itself.

Note

It goes without saying that, since cloud is a capability, the very scenarios we just articulated can be wholly contained within an enterprise on-premises. For example, Human Resources can own an app for an employee learning platform, while the CIO’s office provides the IaaS platform where it runs. The CIO’s office can offer up development or data science stacks that can be provisioned in minutes and charged back in a utility-like manner to different teams (perhaps one team is made up of R aficionados, while another prefers Python).

As your understanding of these service models deepens, you will come to realize that the distinctions between them begin to blur. From small businesses to enterprises, organizations that want to modernize will quickly need to embrace the transition from low-agility development strategies to integrated, end-to-end DevOps. The shift toward cloud ushers in a new paradigm of “consumable IT”; the question is, what is your organization going to do? Are you going to have a conversation about how to take part in this revolutionary reinvention of IT? Are you content with capturing only 20% of its value? Are you willing to risk competitors taking the leap first, embracing cloud capability sooner to deliver innovation to their customers more quickly, and winning greater market share? This is an inflection point: the cloudification of IT is only now about to really happen, because cloud will be approached as a capability and not solely a destination.

Do (Almost All of) It Yourself: Infrastructure as a Service

In our “Roast Pumpkin and Chorizo” pizza example, when you finally got your offspring out of the house and they pretty much did all the work (found a place to live, shopped for ingredients, bought their own dishes), that was infrastructure as a service (IaaS)—what many refer to as “the original cloud.” In a nutshell, IaaS is a level removed from the traditional way that computing is provisioned. It’s a pay-as-you-go service where you are provided infrastructure services (storage, compute, virtualization, networking, and so on) as you need them via the cloud.

IaaS delivers the foundational computing resources shown in Figure 4-1 that you use over an internet connection on a pay-as-you-use basis. The basic building blocks needed to run apps and workloads in the cloud typically include:

- Physical datacenters

IaaS utilizes large datacenters. For public IaaS providers, those datacenters are typically distributed around the world. The various layers of abstraction needed by a public IaaS sit on top of that physical infrastructure and are made available to end users over the internet. In most IaaS models, end users do not interact directly with the physical infrastructure (there are some special patterns, called bare metal, that do) but rather “talk” to the abstraction (virtualization) layer.

- Compute

IaaS is typically understood as virtualized compute resources. Providers manage the hypervisors and end users can then programmatically provision virtual “instances” with desired amounts of compute, memory, and storage. Most providers offer both CPUs and GPUs for different types of workloads. Cloud compute also typically comes paired with supporting services like auto-scaling and load balancing that provide the scale and performance characteristics that make cloud desirable in the first place.

- Network

Networking in the cloud is software-defined: the functions of traditional networking hardware (routers and switches) are made available programmatically through APIs as well as through graphical interfaces. Although we don’t cover it deeply in this book, companies with sensitive data or strict compliance requirements often require additional network security and privacy within a public cloud. A virtual private cloud (VPC) can be a way of creating additional isolation of cloud infrastructure resources without sacrificing speed, scale, or functionality. VPCs enable end users to create a private network for a single tenant in a public cloud. They give users control of subnet creation, IP address range selection, virtual firewalls, security groups, network access control lists, site-to-site virtual private networks (VPNs), application firewalls, and load balancing. (We should stress that there is a lot more you need to be concerned about when it comes to security; this is a statement about networking only.)

- Storage

You need a place to store your data, right? Storage experts classify storage into three main types: block, file, and object storage. We’ll delve more into storage later in this book.
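To make the VPC controls in the “Network” item above a little more concrete, here is a minimal Python sketch of two of them: selecting an IP address range and carving it into subnets. It uses only the standard `ipaddress` module and does not call any cloud provider’s API; the `10.0.0.0/16` range, the `/24` subnet size, and the subnet names are hypothetical choices for illustration. Many providers also reserve a few addresses in each subnet, which this sketch ignores.

```python
import ipaddress

def plan_subnets(vpc_cidr: str, names: list[str], new_prefix: int = 24) -> dict[str, ipaddress.IPv4Network]:
    """Split a VPC's IPv4 address range into equal-sized subnets, one per name, in order."""
    vpc = ipaddress.IPv4Network(vpc_cidr)
    candidates = vpc.subnets(new_prefix=new_prefix)  # generator of non-overlapping subnets
    plan = {}
    for name in names:
        try:
            plan[name] = next(candidates)
        except StopIteration:
            raise ValueError(f"{vpc_cidr} has no room left for subnet {name!r}") from None
    return plan

# Hypothetical layout: web and database tiers, each split across two zones.
plan = plan_subnets("10.0.0.0/16", ["web-zone-a", "web-zone-b", "db-zone-a", "db-zone-b"])

for name, net in plan.items():
    print(f"{name:<12} {net}  ({net.num_addresses} addresses)")

# Sanity check: subnets carved from one range this way never overlap.
nets = list(plan.values())
assert not any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])
```

Each tier gets its own /24 (256 addresses), and firewall rules or security groups can then be written per subnet rather than per machine.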

If you contrast IaaS with the other cloud patterns shown back in Figure 4-1, it’s safe to conclude that IaaS hands off the lowest level of resource control to the cloud. This means that you don’t have to maintain or update your own on-site datacenter because the provider does it for you. Again, you access and control the infrastructure via an API or dashboard.

IaaS enables end users to scale and shrink resources on an as-needed basis, reducing the need for high, up-front expenditures or unnecessary overprovisioned infrastructure; this is especially well suited for spiky workloads.

Note

Spiky workloads are those that peak at certain times (or unexpectedly) based on certain events. A great analogy would be your heart rate and exercise. When you’re at work (yes, we know the jokes you’re thinking to yourself right now), your heart isn’t racing to deliver oxygen to your body. Now go for a run. That run is a spiky workload: it raises the demand on your heart, which starts pumping at 130 beats per minute (bpm) when the usual demand is around 60 bpm. When you’ve finished your run, you don’t need your heart beating at 130 bpm because your workload only demands 60 bpm. Apply this to compute. If you were livestreaming a famous movie star enjoying your product launch, or you were an online toilet paper seller at the onset of COVID-19 during the panic-buying phase, you needed far more capacity at those moments (130 bpm) than you do on average or once those moments pass (60 bpm).
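The heart-rate analogy translates directly into instance-hours. The following minimal Python sketch compares a fixed fleet sized for the peak hour against scaling each hour to that hour’s demand. The hourly demand figures and the per-instance throughput are invented for illustration, and the sketch assumes demand is known in advance, whereas real auto-scalers react to metrics with some lag.

```python
import math

# Hypothetical hourly demand, in requests per second, for one day with a launch spike.
demand_rps = [200] * 9 + [1500, 1800, 1200] + [300] * 12  # 24 hourly samples
PER_INSTANCE_RPS = 250  # assumed throughput of a single instance

def instances_needed(rps: float, per_instance: float = PER_INSTANCE_RPS) -> int:
    """Smallest number of instances that covers the demand (never fewer than one)."""
    return max(1, math.ceil(rps / per_instance))

# Fixed provisioning: size the fleet for the peak hour and run it all day.
peak = instances_needed(max(demand_rps))
fixed_instance_hours = peak * len(demand_rps)

# Elastic provisioning: each hour, run only as many instances as that hour needs.
elastic_instance_hours = sum(instances_needed(r) for r in demand_rps)

print(f"fixed (peak-sized): {fixed_instance_hours} instance-hours")  # 192
print(f"elastic:            {elastic_instance_hours} instance-hours")  # 52
```

With these made-up numbers, the elastic approach uses roughly a quarter of the instance-hours (52 versus 192): the “60 bpm most of the time, 130 bpm only when needed” idea expressed in compute terms.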

Quite simply, IaaS lets you build your own remote datacenter in the cloud instead of building or acquiring datacenter components yourself. While this cloud computing pattern is a staple offering from all public cloud providers, we’re beginning to see many large organizations offer this pattern internally as part of their hybrid cloud approach, for obvious reasons: to extract maximum value from cross-company hardware investments and to flatten the time-to-value curve associated with delivering what business constituents need.

Think about all the idle compute capacity sitting across your company or even in a single siloed department—Forbes once noted that “30 percent of servers are sitting comatose,” and we think it’s higher than that in practice. For example, our authoring team has our own server that we set up for this book, but the five of us aren’t using it all the time. In fact, this server is so powerful we could easily share half of it with someone else. As we wrote this chapter, we did just that—we partitioned up the server and offered it to another department via a cloud pattern with a chargeback on usage. As it turned out, the first group wasn’t using it much either, so we did it again, and again. By the time we finished our book, we were making money! (We’re kidding…or we were told to say so.)
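Joking aside, the chargeback arrangement described above can be sketched as simple utility-style metering: record how many cores each team used and for how long, then bill at an internal rate. This is a minimal Python sketch under assumed values; the team names, the usage records, and the rate of 5 cents per core-hour are hypothetical, and real chargeback systems often meter more dimensions (memory, storage, GPUs).

```python
from collections import defaultdict
from dataclasses import dataclass

RATE_CENTS_PER_CORE_HOUR = 5  # hypothetical internal rate; integer cents avoid float rounding

@dataclass(frozen=True)
class UsageRecord:
    team: str
    cores: int  # cores allocated to the team from the shared server
    hours: int  # how long that allocation ran

def chargeback(records: list[UsageRecord], rate_cents: int = RATE_CENTS_PER_CORE_HOUR) -> dict[str, int]:
    """Total charge per team, in cents, based on metered core-hours."""
    totals: dict[str, int] = defaultdict(int)
    for r in records:
        totals[r.team] += r.cores * r.hours * rate_cents
    return dict(totals)

usage = [
    UsageRecord("book-authors", cores=8, hours=120),
    UsageRecord("hr-analytics", cores=16, hours=40),
    UsageRecord("book-authors", cores=4, hours=10),
]

for team, cents in chargeback(usage).items():
    print(f"{team}: ${cents / 100:.2f}")  # book-authors: $50.00, hr-analytics: $32.00
```

Billing per core-hour rather than splitting the server’s fixed cost means a team that stops using its share stops paying for it, which is the utility-like behavior the Note earlier in this chapter describes.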

When organizations can quickly provision an infrastructure layer, the time-to-anything that depends on the hardware is dramatically reduced. Talk to any team in your organization about the effort required to order a server; we alluded to it before, and it’s brutal. From approvals to facilities requests, the hours and costs spent just to be able to plug the computer in are horrendous (this is why we jokingly said we made money with our server: our “customers” are happy to avoid all the things we just mentioned). Bottom line: be it from a public cloud or an on-premises strategy within your organization, IaaS gets raw compute resources quickly to those who need them. Think of it this way: if you could snap your fingers and have immediate access to a four-GPU server that you could use for five hours, at less than the price of a meal at your favorite restaurant, how cool would that be? Even cooler, imagine you could then snap your fingers and have those server costs go away, like eating a fast food combo meal and not having to deal with the indigestion or calories after you savor the flavor. Think about how agile and productive you would be. Again, we want to note that if you’re running a workload on a public cloud service 24x7, 365 days a year, it may not yield the cost savings you think; depending on the workload, it could be more expensive, but agility will always reign supreme with cloud (the capability) compared to the alternatives.
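The caveat about 24x7 workloads is easy to check with back-of-the-envelope arithmetic. This minimal Python sketch compares a pay-as-you-go hourly rate with the all-in monthly cost of owning an equivalent server and finds the break-even usage. Both prices are hypothetical placeholders rather than real quotes, and the comparison deliberately ignores committed-use discounts as well as the agility benefits described above.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year divided by 12

def monthly_on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: you pay only for the hours the server actually runs."""
    return hourly_rate * hours_used

def break_even_hours(owned_monthly_cost: float, hourly_rate: float) -> float:
    """Monthly hours of use at which renting costs the same as owning."""
    return owned_monthly_cost / hourly_rate

# Hypothetical figures for a four-GPU server.
HOURLY_RATE = 10.0           # assumed on-demand price per hour
OWNED_MONTHLY_COST = 3000.0  # assumed amortized purchase, power, space, and admin per month

print("5-hour job:", monthly_on_demand_cost(HOURLY_RATE, 5))
print("24x7 month:", monthly_on_demand_cost(HOURLY_RATE, HOURS_PER_MONTH))
hours = break_even_hours(OWNED_MONTHLY_COST, HOURLY_RATE)
print(f"break-even: {hours:.0f} hours/month ({hours / HOURS_PER_MONTH:.0%} utilization)")
```

With these placeholder numbers, a five-hour job costs $50, a full month of 24x7 use costs $7,300, and renting beats owning only below about 300 hours a month (roughly 41% utilization).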

Moving to the cloud gives you increased agility and an opportunity for capacity planning that’s similar to the concept of using electricity: it’s “metered” based on the usage that you pay for (yes, you’ll yell at developers who leave their services running when not in use the same way you do your kids for leaving the lights on in an empty room). In other words, IaaS enables you to consider compute resources as if they are a utility like electricity. Similar to utilities, it’s likely the case that your cloud service has tiered pricing. For example, is the provisioned compute capacity multitenant or bare metal? Are you paying for fast CPUs or extra memory? Never lose sight of what we think is the number-one reason for the cloud and IaaS: it’s all about how fast you can provision the computing capacity—and this is why we keep urging you to think of cloud as a capability, rather than a destination.

We titled this section with a do-it-yourself theme because with IaaS you still have a heck of a lot of work to do in order to get going. Just like when your kid had an apartment with a stove and a fridge, your team is as far away from writing code or training their neural networks with GPU acceleration using IaaS as your kid was from sitting down with their date and eating homemade pizza.

IaaS emerged as a popular computing model in the early 2010s, and since that time, it has become the standard abstraction model for many types of workloads. Despite the relentless evolution of cloud technologies (containers and serverless are great examples) and the related rise of the microservices application pattern (a cornerstone of the modernization work we talk about throughout this book), IaaS remains foundational to the industry, but it’s a more crowded field than ever.

IaaS Has a Twin Sibling: Bare Metal

Bare metal is not a 1980s big-hair band that streaked at their concerts for a memorable finale, but rather a term that describes infrastructure in its rawest form provisioned over the cloud: bare metal as a service (BMaaS). Think of BMaaS as IaaS’s twin: very similar in so many ways, but with some things that make each unique. In a BMaaS environment, resources are still provisioned on demand, made available over the internet, and billed on a pay-as-you-go basis. Billing used to come in monthly or hourly increments, but pricing models for anything as a service are becoming more granular, with by-the-minute (and in some cases by-the-second or per-service-unit) pricing schemes.
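To see why billing granularity matters, here is a minimal Python sketch that rounds a job’s runtime up to a whole number of billing increments before applying an hourly rate. The rate, the runtime, and the optional minimum charge are hypothetical parameters; actual increments and minimums vary by provider and service.

```python
def billable_seconds(runtime_s: int, increment_s: int, minimum_s: int = 0) -> int:
    """Round runtime up to a whole number of billing increments, with an optional minimum."""
    charged = max(runtime_s, minimum_s)
    increments = -(-charged // increment_s)  # integer ceiling division
    return increments * increment_s

def cost(runtime_s: int, rate_per_hour: float, increment_s: int, minimum_s: int = 0) -> float:
    """Price the billable seconds at an hourly rate."""
    return billable_seconds(runtime_s, increment_s, minimum_s) * rate_per_hour / 3600

RUNTIME_S = 61 * 60 + 30  # a job that ran for 61.5 minutes
RATE = 3.60               # hypothetical price per hour

for label, increment in [("hourly", 3600), ("per-minute", 60), ("per-second", 1)]:
    print(f"{label:>10}: billed {billable_seconds(RUNTIME_S, increment)} s, "
          f"cost ${cost(RUNTIME_S, RATE, increment):.2f}")
```

For the same 61.5-minute job, hourly billing charges for two full hours ($7.20), per-minute billing for 62 minutes ($3.72), and per-second billing for exactly what ran ($3.69).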

Unlike traditional IaaS, BMaaS does not provide end users with already virtualized compute, network, and storage (and sometimes not even an operating system, for that matter). Instead, it gives direct access to the underlying hardware. This level of access offers end users almost total control over their hardware specs. Because the hardware is neither virtualized nor shared by multiple virtual machines, it offers end users the greatest potential performance, something of significant value for use cases like high-performance computing (HPC).

Note

It’s a well-known fact that whenever you virtualize anything, you lose some performance. A bare-metal server will always outperform an equivalent amount of virtualized infrastructure. The very essence of the as-a-service model relies on virtualizing away the underlying compute resources so they can be shared and easily scaled. We chose not to go into these nuances here, but if you want the absolute most performance possible, right down to single-digit percentage points, you’ll want to explore the potential benefits BMaaS can offer.

If you’re experienced in operating traditional, noncloudified datacenters, BMaaS environments are likely to feel the most familiar and may best map to the architecture patterns of your existing workloads. However, it’s important to note that these advantages can come at the expense of traditional IaaS benefits, namely the ability to rapidly provision and horizontally scale resources by simply making copies of instances and load balancing across them. When it comes to BMaaS versus IaaS, one model is not superior to the other; it’s all about which model best supports the specific use case or workload.
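Horizontal scaling by making copies of instances and load balancing across them can be illustrated with the simplest load-balancing policy, round-robin. This is a minimal Python sketch of the idea only; the instance names are hypothetical, and production load balancers add health checks, weighting, and connection tracking that are omitted here.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Sends each request to the next instance in a fixed rotation."""

    def __init__(self, instances: list[str]) -> None:
        if not instances:
            raise ValueError("need at least one instance")
        self._instances = list(instances)
        self._rotation = cycle(tuple(self._instances))

    def route(self) -> str:
        """Return the instance that should handle the next request."""
        return next(self._rotation)

    def scale_out(self, instance: str) -> None:
        """Add another copy of the instance and restart the rotation to include it."""
        self._instances.append(instance)
        self._rotation = cycle(tuple(self._instances))

balancer = RoundRobinBalancer(["vm-1", "vm-2"])
print([balancer.route() for _ in range(4)])  # ['vm-1', 'vm-2', 'vm-1', 'vm-2']
balancer.scale_out("vm-3")                   # demand spikes: add a copy
print([balancer.route() for _ in range(3)])  # ['vm-1', 'vm-2', 'vm-3']
```

Because every instance is an identical copy, scaling out amounts to adding one more entry to the pool, which is the flexibility the paragraph above says BMaaS can trade away.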

Noisy Neighbors Can Be Bad Neighbors: The Multitenant Cloud

Before we cover the other as-a-service models, it’s worth taking a moment to further examine a fundamental aspect of cloud provider deployment options: single-tenant or multitenant cloud environments.

One of the main drawbacks to IaaS is the possibility of security vulnerabilities with the vendor you partner with, particularly on multitenant systems where the provider shares infrastructure resources with multiple clients.

Provisioning multitenant resources also means having to contend with the reality of shared resources. Performance is never consistent and might not necessarily live up to the full potential that was promised on paper. We attribute this unpredictability to the “noisy neighbors” effect.

In a shared cloud service, the noisy neighbor is exactly what you might imagine: other users and applications sharing the same provisioned infrastructure in a multitenant environment, which can bog down performance and potentially ruin the experience for everyone else (think back to our example of your child hosting a date at a shared residence). Perhaps those neighbors are generating a disproportionate amount of network traffic or are heavily taxing the infrastructure to run their apps. The end result of this effect is that all other users of the shared environment suffer degraded performance. In this kind of setting, you can’t be assured of consistent performance, which means you can’t guarantee predictable behavior to your customers. Just as inconsistent query response times foreshadow the death of a data warehouse, inconsistent performance can doom a service in the cloud.

In a provisioned multitenant public cloud environment, you don’t know who your neighbors are. Imagine for a moment that you are unknowingly sharing your cloud space with some slick new gaming company that happened to hit the jackpot, and their app has “gone viral.” Who knew that an app for uploading pictures of your boss to use in a friendly game of “whack-a-mole” would catch on? Within days, millions join this virtual spin on a carnival classic. All this traffic creates a network-choking phenomenon. Why do you care? If this gaming studio and its customers happen to be sharing the same multitenant environment as you, then the neighborhood just got a lot noisier, and your inventory control system could run a lot like the aforementioned game—up and down.

These drawbacks are avoidable in the public cloud and easier to manage or avoid in a hybrid cloud, but you should certainly press any IaaS provider (be they internal or external to your company) on what we’ve covered in this section.

Cloud Regions and Cloud Availability Zones for Any As-a-Service Offering

You might come across this terminology in cloud-speak: availability zones and regions. A cloud availability zone is a logically and physically isolated location within a cloud region that has independent power, cooling, and network infrastructures isolated from other zones—this strengthens fault tolerance by avoiding single points of failure between zones while also guaranteeing high bandwidth and low inter-zone latency within a region. A cloud region is a geographically and physically separate group of one or more availability zones with independent electrical and network infrastructures isolated from other regions. Regions are designed to remove shared single points of failure (SPOF) with other regions and guarantee low inter-zone latency within the region.
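Why zones matter is easiest to see when placing replicas of a service. This minimal Python sketch spreads replicas across zones round-robin and then checks whether losing any single zone would still leave at least one replica running, which is exactly the single point of failure the zone design aims to remove. The zone names are hypothetical placeholders, not any provider’s actual identifiers.

```python
from collections import Counter

def place_replicas(replica_count: int, zones: list[str]) -> list[str]:
    """Assign each replica to a zone, round-robin, so replicas are spread evenly."""
    if not zones:
        raise ValueError("need at least one zone")
    return [zones[i % len(zones)] for i in range(replica_count)]

def survives_any_zone_outage(placement: list[str]) -> bool:
    """True if losing any one zone still leaves at least one replica running."""
    if not placement:
        return False
    per_zone = Counter(placement)
    return all(len(placement) - count > 0 for count in per_zone.values())

spread = place_replicas(4, ["zone-a", "zone-b", "zone-c"])
print(spread)                            # ['zone-a', 'zone-b', 'zone-c', 'zone-a']
print(survives_any_zone_outage(spread))  # True

single = place_replicas(4, ["zone-a"])
print(survives_any_zone_outage(single))  # False: one zone is a single point of failure
```

The same check applies one level up: replicating across regions protects against the loss of an entire region, at the cost of higher latency between copies.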

Note

Different companies use different terminology for this stuff, so we’re giving you the basics. For example, some talk about Metros, or have a hierarchy that goes Geography (think North America), Country (like USA), Metro (Dallas, San Jose, Washington DC), Zones (datacenters within the Metros).
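To make the “no single point of failure” idea concrete, here is a minimal Python sketch. It assumes one region with three zones given the hypothetical names zone-a, zone-b, and zone-c (real providers use their own naming, as the Note says), spreads an app’s replicas round-robin across those zones, and counts how many replicas survive if an entire zone goes dark.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Zone:
    region: str
    name: str

# Hypothetical topology: one region with three availability zones.
ZONES = [Zone("region-1", "zone-a"), Zone("region-1", "zone-b"), Zone("region-1", "zone-c")]

def place_replicas(count: int, zones: list[Zone]) -> list[Zone]:
    """Spread replicas round-robin so no single zone holds all of them."""
    if not zones:
        raise ValueError("need at least one zone")
    return [zones[i % len(zones)] for i in range(count)]

def survivors(placement: list[Zone], failed: Zone) -> int:
    """Count replicas still running if one whole zone goes dark."""
    return sum(1 for zone in placement if zone != failed)

placement = place_replicas(5, ZONES)
print(dict(Counter(zone.name for zone in placement)))  # {'zone-a': 2, 'zone-b': 2, 'zone-c': 1}
print(survivors(placement, ZONES[0]))                   # 3
```

Had all five replicas landed in zone-a, the same outage would leave none running; that single point of failure is exactly what zones (and, one level up, regions) are designed to remove.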

We spent a lot of time on IaaS in this chapter despite the fact that the other as-a-service models are getting more attention as of late. This was by design. Each cloud pattern builds upon the one beneath it and delivers more value higher up the stack. Our cloud pattern stack starts with the foundation of IaaS (as shown in Figure 4-2) and will grow as we add more patterns.

Figure 4-2. IaaS provides a foundational level for any service or application that you want to deploy on the cloud—other as-a-service models build (and inherit) these benefits from here

It might be best to think of IaaS as you would a set of Russian nesting dolls: each level of beauty carries over to the next, and the attention to detail (the capabilities and services provided from a cloud perspective) increases. In other words, the value of IaaS gives way to even more value, flexibility, and automation in PaaS, which gives way to even more of these attributes in SaaS (depending on what it is you are trying to do). Now that you have a solid foundation in IaaS, the benefits of PaaS will be easier to see, and so will how to adopt it within your business strategies.

Building the Developer’s Sandbox with Platform as a Service

Platform as a service (PaaS) is primarily used by developers to play around (hence the name sandbox), compose (modernization-speak for build), and deploy applications. This pattern is even further removed from the traditional manner in which IT architectures are provisioned, because the required hardware and software are delivered over the cloud as an integrated solution stack.

Quite simply, PaaS is really useful for developers. It allows users to develop, run, and manage their own apps without having to build and maintain the infrastructure or platform usually associated with the process.

This means countless hours saved compared to traditional app dev preparation steps such as installation, configuration, troubleshooting, and the seemingly endless time spent reacting to ever-changing open source cross dependencies. Instead, PaaS frees you to innovate relentlessly, letting developers go from zero to productive in less time than it typically takes to reboot a laptop.

PaaS is all about writing, composing, and managing apps without the headaches of software updates or hardware maintenance. PaaS takes the work out of standing up a dev environment, with all of its configuration intricacies, and spares developers from dealing with virtual machine images or hardware to get stuff done (you don’t have to prepare the pizza, thinking back to our university student analogy). With a few swipes and keystrokes, you can provision instances of your app dev environment (or apps) along with the development services needed to support them. This streamlining ties together development backbones such as Node.js, Java, mobile backend services, application monitoring, analytics services, database services, and more.

The PaaS provider hosts everything—servers, networks, storage, the operating system, software, databases, and more. Development teams can use all of it for a monthly billed fee (which could be billed to a credit card if it’s a hobby, a third-party contract for your company, or a chargeback if your company is providing its employees the PaaS platform) based on usage, and users can quickly and without friction purchase more resources on demand, as needed.

PaaS is one of the fastest-growing cloud patterns today. Gartner forecasts the total market for PaaS to exceed $34 billion by 2022, doubling its 2018 size.

Digging Deeper into PaaS

What are the key business drivers behind the market demand for PaaS? Consider an app developer named Jane. PaaS provides and tailors the environment that Jane needs to compose and run her apps, thanks to the libraries and middleware services that are put in her development arsenal through a services catalog. Jane and other developers don’t need to concern themselves with how those services are managed or organized under the hood. That complexity is the responsibility of the PaaS provider to manage and coordinate. More than anything, this development paradigm decreases the time to market by accelerating productivity and easing deployment of new apps over the cloud. Are you a mobile game developer? If so, you can provision a robust in-memory key-value store (like Redis) to back your app’s JSON data in seconds, attach a visualization engine to it, and perform some analytics.
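How does Jane’s code actually find that Redis instance? On many PaaS platforms, binding a service from the catalog injects its connection details into the app’s environment, so the code never hardcodes infrastructure. The following Python sketch is modeled loosely on the VCAP_SERVICES environment variable used by Cloud Foundry-based platforms; the service label, instance name, host, and credential fields are hypothetical placeholders, and other platforms use different conventions.

```python
import json
import os

def find_credentials(label: str, env_var: str = "VCAP_SERVICES") -> dict:
    """Return the credentials of the first bound service with the given label.

    Assumes the platform injects JSON shaped like
    {"<label>": [{"name": ..., "credentials": {...}}, ...]}.
    """
    raw = os.environ.get(env_var)
    if raw is None:
        raise LookupError(f"{env_var} is not set; is a service bound to this app?")
    instances = json.loads(raw).get(label, [])
    if not instances:
        raise LookupError(f"no bound service with label {label!r}")
    return instances[0]["credentials"]

# Simulate what the platform would inject after Jane binds a Redis instance.
# The host, port, and password are placeholders, not real values.
os.environ["VCAP_SERVICES"] = json.dumps({
    "redis": [{"name": "game-state",
               "credentials": {"host": "redis.example.internal", "port": 6379, "password": "s3cret"}}]
})

creds = find_credentials("redis")
print(creds["host"], creds["port"])  # redis.example.internal 6379
```

The design choice is the point: the app asks for “whatever Redis is bound to me,” and the platform decides where that Redis lives.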

The PaaS pattern enables new business services to be built without friction, thanks to a platform that runs on top of a managed infrastructure and integrates cleanly between services. That integration matters: getting the components you’re using to talk to each other is where a lot of development work happens, even after you’ve got an environment set up. Every developer needs the traditional components of a development stack: the operating system, the integrated development environment (IDE), the change management catalog, and the bookkeeping and tooling. All of these can be provisioned with ease through a PaaS architecture. If you are a developer of cloud native apps, PaaS is your playground. CI/CD becomes a reality when your apps and services can be implemented on a platform that supports the full DevOps process from beginning to end.

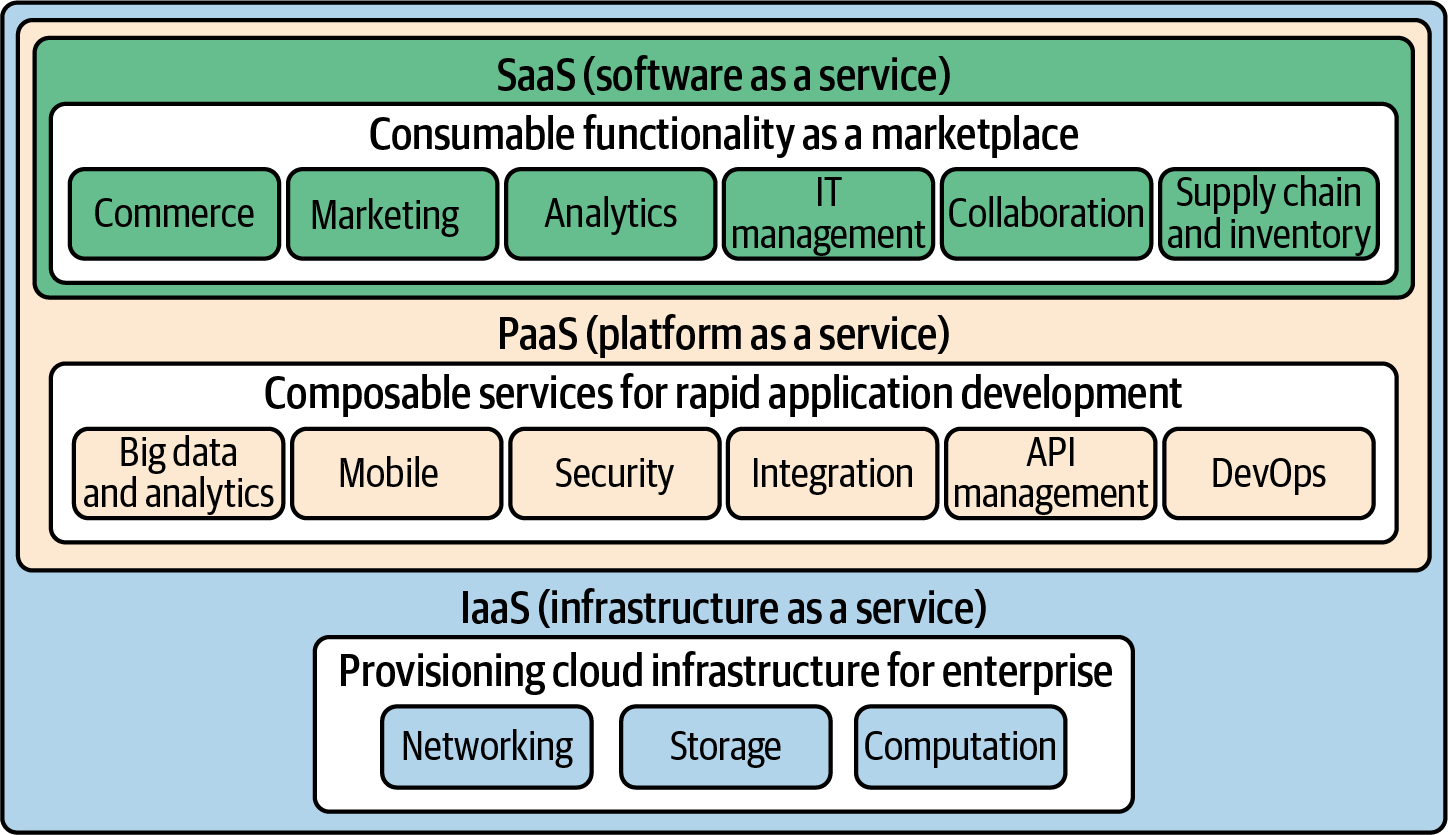

PaaS offers such tremendous potential and value to developers like Jane because there are more efficiencies to be gained than just provisioning some hardware. Take a moment to consider Figure 4-3. On-demand access to databases, messaging, workflow, connectivity, web portals, and so on: this is the depth of customization that developers crave from their environment. It is also the kind of tailored experience that’s missing from the IaaS pattern. The layered approach shown in Figure 4-3 demonstrates how PaaS is, in many respects, a radical departure from the way in which enterprises and businesses traditionally provision and link services over distributed systems. Read that sentence again and ask yourself, “Why should the only way to take advantage of this stuff be to put my application on a public cloud?” (It isn’t. Cloud the capability!) Now you can see just how limiting it is to box your cloud mindset into thinking of cloud as a destination rather than as a capability.

Figure 4-3. PaaS provides an integrated development environment for building apps that are powered by managed services

In the traditional development approach, classes of subsystems are deployed independently of the app that they are supporting, and their lifecycles are managed independently of the primary app as well. If Jane’s app is developed out of step with her supporting network of services, she will need to spend time (time that would be better spent building innovative new features for her app) ensuring that each of these subsystems has the correct versioning, functionality mapping for dependencies, and so on. All of this translates into greater risk, steeper costs, higher complexity, and a longer development cycle for Jane.

With a PaaS delivery model, these obstacles are removed: the platform assumes responsibility for managing subservices (and their lifecycles) for you. Once the minutiae of micromanaging subservices are no longer part of the equation, unobstructed end-to-end DevOps becomes possible. With a complete DevOps framework, a developer can move from concept to full production in a matter of minutes. Jane can be more agile in her development, which leads to faster code iteration and a more polished product or service for her customers. We would describe this as the PaaS approach to process-oriented design and development.

Composing in the Fabric of Cloud Services

The culture around modern apps and tools for delivering content is constantly changing and evolving. Just a short time ago, there was no such thing as Kickstarter, the global crowdfunding platform. Today you’ll find things as niche as a Kickstarter campaign for a kid’s school trip! (When we grew up, our version of this kind of fundraising campaign was mowing our neighbor’s lawn.) The penetration of social sharing platforms (Instagram, TikTok, and so on) that only run on (or are purely designed for) mobile platforms is astonishing. Why? There are more mobile devices today than there are people on the planet: Statista estimated that there would be two for each person to start this year (2021), and that’s not including edge devices. We’re in the “app now,” “want it now” era of technology. This shift isn’t a generational phenomenon, as older generations like to think (many young’uns don’t care about Facebook, but senior citizens are dominating its signups). Ironically, our impatience with technology today stems from the general perception that the burdens of IT infrastructure are gone. (Two of the authors shared a flight to Spain before the pandemic and, while writing this book, recounted how they had complained about the speed of the internet. Over the ocean!)

To be successful in this new culture, modern app developers and enterprises must adopt four fundamental concepts:

First is deep and broad integration. What people use apps for today encompasses more than one task. For example, today’s shopper expects a seamless experience with a storefront across multiple devices with different modalities (some will require gesture interactions, whereas others will use taps and clicks).

The second concept is mobile. Applications need a consistent layer of functionality to guarantee that no matter how you interact with the storefront (on your phone, a tablet, or a laptop), the experience feels the same but is still tailored to the device. Here we are talking about functionality that has to be omni-channel and consistent (we’ve all been frustrated by apps where the mobile version can’t do what the web version does), and for that frictionless experience to happen, the customer-facing endpoints need to be deeply integrated across all devices.

Third, developers of these apps need to be agile and iterative in their approach to design. Delivery cycles are no longer set at widely spaced points in time (a yearly release of your software, for example). Users expect continuous improvement and refinement of their services and apps, and code drops need to be frequent enough to keep up with this pace of change; hence the allure of CI/CD. Continuous delivery has moved from initiative to imperative. Why? Subscription pricing means you must keep earning your client’s business, because it’s easier than ever for them to go elsewhere.

Finally, the ecosystem that supports these apps must appeal to customer and developer communities. Business services and users want to be able to use an app without needing to build it and own it. Likewise, developers demand an environment that provides them with the necessary tools and infrastructure to get their apps off the ground and often have next-to-zero tolerance for roadblocks (like budget approvals, getting database permissions, and so on).

Consuming Functionality Without the Stress: Software as a Service

If PaaS is oriented toward the developer who wants to code and build modern apps in a frictionless environment, then software as a service (SaaS) is geared much more toward lines of business that want to consume the services that are already built and ready to deploy.

In a nutshell, SaaS (some people might refer to it as cloud application services, but in our experience those people are likely billing you for hourly advice) delivers software that typically runs fully managed and hosted on the cloud. This allows you to consume functionality without having to manage software installation, general software maintenance, the sourcing and sizing of compute, backups, and more. All you have to do to use it is open a web browser and go to the service’s dashboard or access the service via an API.

Note

Nearly everyone is trying to become a SaaS business these days because it’s usually tied to a subscription license, which means recurring revenue. There is a lot of confusion these days about how subscription licensing represents cloud revenue. For example, we wrote this book using Office 365 (some of us love it, but we won’t get into our drama here). We pay a subscription fee for it, but we’re not running it in a browser on the cloud (we could, but the functionality isn’t the same); we run it locally, which in some ways makes it a hybrid product.

What’s more, vendors love the SaaS business model because it’s a model that can scale incredibly well from a sales perspective, and once you have paying customers, it’s easier to keep them—just keep delivering value.

Even if you’re brand new to the cloud, we think SaaS is the most widely recognized type of cloud service because you’re likely using it today in your personal life. Apple Music is a SaaS service: it provides music (obviously) and the management of that music via a rich interface for searching, a recommendation engine, AI-personalized radio stations, and of course your own playlists. Updates to Apple Music are automatic, and your playlists are always backed up; in fact, it was Apple that helped to mainstream music as a service. (Never lose your contacts again; thank you, SaaS!) Google’s Gmail is another example: you can log in to your email account from any device, anywhere in the world, so long as you have an internet connection. But enterprises are using all sorts of SaaS products too: Monday.com, Salesforce.com, Zendesk, Mentimeter, Prezi, and IBM all have offerings, as do so many others. Don’t get boxed into the ubiquitous SaaS vendors we’ve mentioned here; there are thousands that encompass all kinds of services, from simple backups to email to project management, to editing a GIF, to tracking a golf shot live on course, and everything in between.

Note

Many well-known SaaS vendors actually buy IaaS from cloud providers like IBM, Amazon, or Microsoft. Why? For the same reason enterprises do! They don’t want to concern themselves with things like load balancing, firewalls, and storage—they want to focus on new features to offer their clients (so that they continue paying those subscription fees). Packaging up a SaaS offering on Red Hat OpenShift really opens the aperture of a vendor’s offering because they’re thinking with cloud capabilities, which allows them to offer up their product on any public cloud or on-premises (which some clients might need because of data sovereignty or performance issues).

SaaS consumers span the breadth of multiple domains and interest groups: human resources, procurement officers, legal departments, city operations, marketing campaign support, demand-generation leads, political or business campaign analysis, agency collaboration, sales, customer care, technical support, and more. The range of ways that business users can exploit SaaS is extraordinarily broad: it can be specific (to the extent that it requires tailored software to address the problem at hand), or it can require a broad and adaptable solution to address the needs of an entire industry (like software that runs the end-to-end operations for a dental office).

Figure 4-4 highlights the fact that SaaS can apply to anything, as long as it is provisioned and maintained as a service over cloud technology (and now that you see cloud as a capability, you can see how powerful it might be to mix public cloud SaaS offerings with internal ones too).

Figure 4-4. SaaS can be pretty much anything—as long as it is provisioned and maintained as a completely frictionless service over the cloud

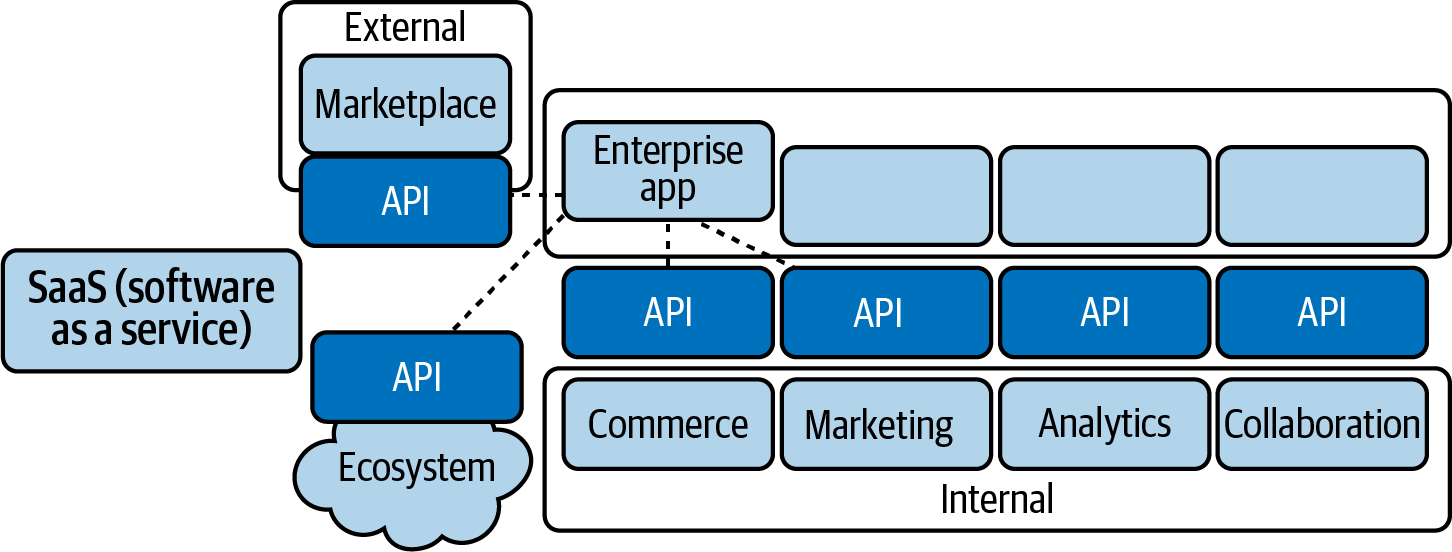

The Cloud Bazaar: SaaS and the API Economy

We’ve mostly talked about SaaS as software, but it’s important to note that SaaS can be (and, with each passing day, increasingly is) delivered via an API. Forget about Planet of the Apes; we’re watching Planet of the Apps, and it’s all because of today’s marketplace trend: the API economy. The API economy is the ability to programmatically access anything through well-defined communication protocols (like the RESTful services we talked about earlier in this book). Indeed, today’s software delivery platforms have three facets: rentable (like in the public cloud), installable (traditional software), and API composable.

API economies enable developers to easily and seamlessly integrate services within apps they compose (or, in the case of an end user, services they consume). This is quite a departure from the traditional ways of app delivery, or from what you may be used to if you’re from a line of business trying to understand how your company fully embraces the digital renaissance thrust upon it amid a “mobile everywhere,” “tech years are like dog years,” pandemic-hit economy.

For most people, SaaS experiences are driven by the user experience (UX). Take, for example, The Weather Company. Many of you might know it as the handy app on your phone that tells you if your family picnic is going to be pleasant (at least from a weather perspective; we have no idea who is going to be there and if you want them there or not). This app is installed on more mobile devices than we’re allowed to tell you about (basically every mobile device in the world has a weather app preinstalled on it), but that isn’t the surprising part. This app gets almost 30 billion API requests a day, and the bulk of them come not from users checking it hourly but from applications pulling weather data. In one of our keynote demos, we built a cool app that scrapes Twitter for monetizable intents and compares what people are saying, calling a Watson API to classify the image if the tweet has a picture; it also uses the location of the tweet to pull a weather forecast closely associated with the person who is tweeting. Why? Real-time promotions. This application uses one API to get the Twitter stream, another to tell us what’s in the image, and yet another to get localized weather. That’s why we keep saying today’s apps are composed, and it’s a perfect example of the API economy. Quite simply, more and more SaaS providers are going to rely on API integration of their works into third-party services to drive revenue and traffic far more than they rely on a UX-driven strategy for attracting users.
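The shape of that keynote app can be sketched in a few lines of Python. This is not our demo code: the three functions below are hypothetical stubs standing in for a social stream API, an image classification API, and a weather API (so the example runs without network access or API keys), and the promotion text is invented for illustration. The point is the structure: the app owns almost no logic of its own; it composes three services that each do one job.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Post:
    text: str
    image_url: Optional[str]
    lat: float
    lon: float

# Hypothetical stubs: in a real app each would be an HTTP call to a different provider.
def fetch_posts(keyword: str) -> list[Post]:             # social stream API (stub)
    return [Post("Need new boots before the weekend", "https://img.example/1.jpg", 43.6, -79.4),
            Post("Boots or sneakers for the city?", None, 40.7, -74.0)]

def classify_image(url: str) -> str:                     # image classification API (stub)
    return "hiking boots"

def get_forecast(lat: float, lon: float) -> str:          # weather API (stub)
    return "snow" if lat > 42 else "clear skies"

def promotions(keyword: str) -> list[str]:
    """Compose three single-purpose APIs into one real-time promotion feed."""
    offers = []
    for post in fetch_posts(keyword):
        # Only call the image API when the post actually has a picture.
        item = classify_image(post.image_url) if post.image_url else keyword
        weather = get_forecast(post.lat, post.lon)
        offers.append(f"{weather.capitalize()} ahead: 10% off {item}")
    return offers

print(promotions("boots"))
# ['Snow ahead: 10% off hiking boots', 'Clear skies ahead: 10% off boots']
```

Swapping in a different weather or vision provider changes one function, not the app; that is the practical payoff of composing rather than building.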

At their most basic level, SaaS apps are software that is hosted and managed in the cloud whose backend stacks mostly look the same: software components are preinstalled (SaaS); the database and application server that support the app are already in place (PaaS); and this all lives on top of the infrastructure layer (IaaS). As a consumer of the service, you just interact with the app or API; there’s no need to install or configure the software yourself. When SaaS is exposed as a typical offering, you see it as a discrete business app. However (this is important to remember), under the covers the SaaS offering is most likely a collection of dozens (even hundreds or thousands) of APIs working in concert to provide the logic that powers the app.

Having a well-thought-out and architected API is critical for developing an ecosystem around the functionality that you want to deliver through an as-a-service property. We think this point highlights something amazing: not only is the as-a-service model a better way to monetize your intellectual property, but having the pieces of business logic that form your app be consumable in very granular ways (this API call gets us a zip code, that one does a currency conversion) makes you more agile for feature delivery and code resolution.

Remember, the key is designing an API for services and software that plays well with others—whether you’re selling them or creating them for internal use. Don’t get boxed into thinking your API audience has to be just for people in your company; potentially, it could be useful to anyone on the value chain. Consumers of the SaaS API economy require instant access to that SaaS property (these services need to be always available and accessible), instant access to the API’s documentation, and instant access to the API itself (so that developers can program and code against it).

Furthermore, it is critical that the API architecture be extensible, with hooks and integration endpoints across multiple domains to promote cooperation and connectivity with new services as they emerge. A robust API economy is made possible by a hybrid cloud architecture of well-defined, open integration points between external systems and cloud marketplaces from any vendor, regardless of location. That architecture enables service consumers to focus purely on cloud capabilities instead of destinations (Figure 4-5).

Figure 4-5. A composed enterprise app that on the backend leverages APIs to “round out” its functionality and in turn exposes its line-of-business stakeholders’ APIs to support their applications

All You Need Is a Little Bit of REST and Some Microservices

We’ve talked a lot about RESTful APIs throughout this book, giving you the gist of them to help you understand the discussion in whatever section or chapter you were reading. With that said, since they are so fundamental to the rest (get it?) of this book, we thought we’d spend a little time giving you some analogies and a little more information, because microservices are a key tenet toward modernizing your digital estates.

Let’s pretend you’re at a superstore like Costco. A REST API consists of various endpoints that you access based on the things you need. At Costco, most of the things you’ll check out and pay for will be processed in the main cashier’s line; however, Pharmacy, Optical, and Auto are separate endpoints, and those items can’t be purchased through the main cashiers. Pharmacy, Optical, Auto, and Cashier are all endpoints of Costco’s “API”: at each one, someone is waiting to take your money and service your request. (Unlike Costco, when you consume a RESTful API, you get exactly what you came for and leave with just that; you don’t walk out having spent hundreds of unbudgeted dollars when you came for one or two items.)

You know how to talk to each of these endpoints, because there’s a well-defined standard for doing so; it’s akin to human language (well, perhaps today’s language isn’t so standard, but you get the point). RESTful services talk to applications via their endpoints using their own language, called methods. Just like we use verbs to describe actions (“Do you have any more ski helmets?”), there are distinct verbs that perform actions on a service’s data too: CREATE, READ, UPDATE, and DELETE (tech heads call these CRUD operations). REST APIs express those verbs in the dialect of HTTP methods, so you’ll hear them called POST, GET, PUT, and DELETE, respectively. It doesn’t matter what dialect your dev team speaks with one another, so long as they also know the language (methods) of the service they’re programming for.
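Here is a minimal Python sketch of that CRUD-to-HTTP mapping, assuming a hypothetical /helmets endpoint backed by an in-memory dictionary rather than a real web framework. Each HTTP method is routed to the matching CRUD operation and returns a conventional HTTP status code alongside the result.

```python
from typing import Optional

# Conventional mapping of CRUD operations onto HTTP methods.
CRUD_TO_HTTP = {"create": "POST", "read": "GET", "update": "PUT", "delete": "DELETE"}

class HelmetEndpoint:
    """Toy in-memory stand-in for a hypothetical /helmets REST endpoint."""

    def __init__(self) -> None:
        self._items: dict[int, dict] = {}
        self._next_id = 1

    def handle(self, method: str, item_id: Optional[int] = None,
               body: Optional[dict] = None) -> tuple[int, object]:
        if method == "POST":                                  # CREATE
            new_id, self._next_id = self._next_id, self._next_id + 1
            self._items[new_id] = dict(body or {})
            return 201, {"id": new_id, **self._items[new_id]}
        if method == "GET":                                   # READ
            if item_id is None:                               # list the collection
                return 200, [{"id": i, **v} for i, v in self._items.items()]
            if item_id not in self._items:
                return 404, None
            return 200, {"id": item_id, **self._items[item_id]}
        if method == "PUT":                                   # UPDATE (full replacement)
            if item_id not in self._items:
                return 404, None
            self._items[item_id] = dict(body or {})
            return 200, {"id": item_id, **self._items[item_id]}
        if method == "DELETE":                                # DELETE
            if self._items.pop(item_id, None) is None:
                return 404, None
            return 204, None
        return 405, None                                      # method not allowed

api = HelmetEndpoint()
print(api.handle("POST", body={"size": "M", "stock": 3}))  # (201, {'id': 1, 'size': 'M', 'stock': 3})
print(api.handle("GET", 1))                                # (200, {'id': 1, 'size': 'M', 'stock': 3})
print(api.handle("DELETE", 1))                             # (204, None)
print(api.handle("GET", 1))                                # (404, None)
```

This sketch treats PUT as a full replacement; many APIs also offer PATCH for partial updates.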

Each REST API has one job (when implemented as a microservice, which is a best practice), an idea often referred to as the “single responsibility principle.” The REST part of that one job is the communication between these separate processes. While REST is how you talk to the API, the execution scope is running the component code that brings back to the end user what they were looking for. Hopefully you can see how deeply this framework is rooted in CI/CD: the architecture allows each part to evolve at its own rate (when COVID first started, Costco’s Pharmacy service had to evolve much differently than its other endpoints). Building microservices as discrete pieces of logic allows you to evolve different parts of the app granularly, on their own schedules (for example, look at how many times Uber updates its apps and the reasons for those updates).

With microservices, it’s not a single layer that handles an application’s data and business logic. You are literally grabbing lots of narrow-minded “bots” that do simple small tasks and wrangling them together for some larger purpose. Microservices are the killers of monolithic apps because you don’t develop an application as one monolithic piece; rather, you compose it by pulling together discrete pieces of logic.

We think that’s enough to understand what REST APIs are and the microservices approach, so we’ll finish this section with a list of their advantages, summarized in one place for easy reference. The microservices architecture is tremendously valuable because each component:

Is developed independently of the other components: if a service has dependencies on other services (chaining), they are limited and explicit.

Is developed by a single, small team (another best practice) in which all team members can understand the entire code base: this specialization creates economies of scale, reduces bugs, and builds the team’s eminence as the go-to experts on the service.

Is developed on its own timetable, so new versions are delivered independently of other services: the team that updates the location logic of the app doesn’t have to rely on the team that does discounting calculations in order to effect the change.

Scales and fails independently: this not only makes it easier to isolate any problems, but also simplifies how the app scales. For example, if you’re getting more refund requests because of cancelled flights, you can scale the refund logic independently of the services that compose the loyalty redemption component.

Can be developed in a different language: the protocol that services use to talk to and invoke each other (REST) abstracts away each service’s backend logic and programming language.
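The “scales and fails independently” point can be illustrated with a small Python sketch. The service names and per-replica capacities are hypothetical (real numbers come from load testing); the idea is that each service’s replica count is computed from its own traffic alone, so a spike in refund requests grows only the refund service.

```python
import math

# Hypothetical requests/second one replica of each service can absorb.
CAPACITY_PER_REPLICA = {"refunds": 50, "loyalty-redemption": 80, "flight-search": 200}

def desired_replicas(request_rate: float, capacity: float, minimum: int = 1) -> int:
    """Replicas needed to absorb the current rate, never dropping below a floor."""
    return max(minimum, math.ceil(request_rate / capacity))

def plan(rates: dict[str, float]) -> dict[str, int]:
    # Each service is sized from its own traffic only; there is no shared scaling decision.
    return {svc: desired_replicas(rates.get(svc, 0.0), cap)
            for svc, cap in CAPACITY_PER_REPLICA.items()}

# A wave of cancelled flights spikes refund traffic; the other services are unaffected.
normal = plan({"refunds": 40, "loyalty-redemption": 100, "flight-search": 900})
storm = plan({"refunds": 1200, "loyalty-redemption": 100, "flight-search": 900})
print(normal)  # {'refunds': 1, 'loyalty-redemption': 2, 'flight-search': 5}
print(storm)   # {'refunds': 24, 'loyalty-redemption': 2, 'flight-search': 5}
```

In a monolith, the same refund spike would force you to scale every component at once, because they all ship as one unit.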

It’s Not Magic, But It’s Cool: The Server in Serverless?

Finally, we get to this mystery called serverless. Let’s ensure we’re clear right off the bat: serverless does not mean there isn’t a server involved, just like how cloud computing doesn’t mean your server is suspended by ice crystals in the sky. If there is one thing we want you to remember about serverless computing, it’s that serverless is yet another gear that lets developers focus on writing code.

To understand serverless computing, let’s start with the notion that no matter how you’re building and hosting apps (traditionally or via REST or GraphQL APIs), there’s always been the concept of a dedicated server that’s constantly available to service requests. In contrast, the increasingly popular serverless architecture doesn’t use a live running server dedicated to all of the API calls you’re making for your app. And where do you think these serverless servers (it even sounds odd to write that) run? They need a distributed, open environment that can easily bring together discrete pieces of logic from anywhere, so naturally, in a cloud of course!

Serverless computing is a new and powerful paradigm, one that naturally has been pounced on by nearly every major cloud vendor in the marketplace today—so you certainly have your pick of providers. The core concern that serverless computing is trying to get away from is the timeless struggle of procuring (ahead of time) correctly sized infrastructure for the workloads you intend to run. With the serverless computing approach, you no longer rent server capacity ahead of time or have to procure a server outright; instead, you pay per computation—without having to worry about the underlying infrastructure at all.

The serverless part means that the serverless framework focuses on scaling automatically to handle each individual request. In short, developers don’t have to worry about managing infrastructure capacity to support their service’s logic and their associated underlying resources. While IaaS requires that you think about how to provision resources (to support the application and the operating system for that matter), a serverless architecture just needs to know, “How many resources are required to execute your intent?”

To fully appreciate this, let’s take a moment to think about today’s discussions around cloud (the capability): workloads are becoming increasingly dominated by containers, orchestration of those containers, and serverless. Looking back, the IaaS pattern we discussed earlier in this chapter was a step in the journey to cloud utopia. While IaaS offers companies more granularity in how they pay for what they use and shortens the time it takes to start using it, as it turns out they rarely pay only for what they use. That’s why we often say cost savings shouldn’t be the primary driver for adopting cloud: they aren’t a certainty. Even virtual servers often involve long-running processes and less than perfect capacity utilization.

Don’t get us wrong. IaaS can be more compute and cost efficient than traditional compute, but spinning up a virtual machine (VM) can still be somewhat time-consuming and each VM brings with it overhead in the form of an operating system. The IaaS model of IT is capable of supporting almost anything from a workload perspective but has room for evolution when it comes to certain underlying philosophies and values that make cloud, well, cloud. In many cases, the container has begun replacing VMs as the standard unit of process or service deployment, with orchestration tools like Kubernetes governing the entire ecosystem of clusters.

The crux behind serverless is that you write code that will execute under certain conditions (or code that will run a desired job). When that code is executed, you are only billed for the computational cost of doing the job. You, as a developer, focus on the code and only the code. Savvy developers have always endeavored to make their code as efficient as possible. Serverless computing gives those developers an additional incentive to tighten up their code’s performance even further, since more efficient code means fewer CPU cycles and therefore (in the case of serverless) a smaller bill for executing it.
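To see why efficient code pays off, here is some back-of-the-envelope arithmetic in Python. Many FaaS platforms bill by memory allocated multiplied by execution time (GB-seconds) plus a small per-request fee; the prices below are hypothetical placeholders, and the sketch ignores free tiers and any rounding of duration a provider might apply.

```python
# Hypothetical prices for illustration only; real providers publish their own rates.
PRICE_PER_GB_SECOND = 0.000017
PRICE_PER_MILLION_REQUESTS = 0.20

def faas_monthly_cost(invocations: int, avg_ms: float, memory_mb: int) -> float:
    """Estimated bill: compute (GB-seconds) plus per-request charges."""
    gb_seconds = invocations * (avg_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + requests

before = faas_monthly_cost(3_000_000, avg_ms=200, memory_mb=512)
after = faas_monthly_cost(3_000_000, avg_ms=120, memory_mb=512)  # same work, tighter code
idle = faas_monthly_cost(0, avg_ms=200, memory_mb=512)           # nobody called it

print(f"before: ${before:.2f}  after: ${after:.2f}  idle: ${idle:.2f}")
# before: $5.70  after: $3.66  idle: $0.00
```

Trimming the average run time from 200 ms to 120 ms cuts the compute portion of this bill by 40%, and a month with no invocations costs nothing, which is what makes the rarely used, resource-hungry service described next such a good fit.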

Think about this from another angle: imagine that you have a rarely used service that, when run, is potentially very demanding on local resources. Your system administrator warns you that, should the need to execute this service ever arise (in an emergency), it might adversely affect or even cripple other day-to-day operations running in your environment, simply because of the vast quantities of resources it would require to meet the moment. With serverless computing, your business could host that dust-laden code on a cloud provider to accomplish the job (when necessary), without wasting or risking your own IT infrastructure resources. The burst in resource consumption could be met by the serverless computing environment, allowing your business to address the crisis (should it arrive) without crippling other local services in the fallout.

Serverless is a newer cloud model that is challenging traditional models around certain classes of cloud native applications and workloads. It isn’t for everyone, and it has its limitations (which are outside the scope of this book), but if the fit is right—it can be nothing short of a home run. Serverless goes the furthest of any cloud pattern in terms of abstracting away nearly everything but very granular encapsulated business logic, scaling perfectly with demand, and really delivering on the promise of paying only for what you use.

Serverless Has a Kid! Function as a Service

We’ve grossly (perhaps unfairly) simplified it here, but we’ve been alluding to yet another as-a-service model throughout this chapter: function as a service (FaaS). FaaS is a type of cloud computing service that allows you to execute code in response to events without the complex infrastructure (dedicated servers, whether traditionally dedicated or provisioned via IaaS) typically associated with building and launching microservices applications.

Serverless and FaaS are often conflated, but FaaS is actually a subset of serverless. Serverless covers any service category (be it compute, storage, database, messaging, or API gateways) where the configuration, management, and billing of servers are invisible to the end user. FaaS, on the other hand, is focused on the event-driven computing paradigm, where application code runs only in response to events or requests.
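The event-driven idea behind FaaS can be sketched in a few lines of Python. The `FunctionRuntime` class, its `register` and `dispatch` methods, and the `object.uploaded` event name are all hypothetical: this is a toy stand-in, not any provider's API. The developer supplies only the handler function, and the "platform" invokes it solely when a matching event arrives.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Event:
    type: str                                   # hypothetical event name
    payload: Dict[str, Any] = field(default_factory=dict)


Handler = Callable[[Event], Any]


class FunctionRuntime:
    """Toy stand-in for a FaaS platform (hypothetical): a function runs
    only when a matching event arrives; nothing executes while idle."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self.invocations: List[str] = []        # record of what actually ran

    def register(self, event_type: str) -> Callable[[Handler], Handler]:
        """Decorator that binds a handler function to an event type."""
        def decorator(fn: Handler) -> Handler:
            self._handlers[event_type] = fn
            return fn
        return decorator

    def dispatch(self, event: Event) -> Any:
        """Invoke the bound handler, if any, in response to an event."""
        handler = self._handlers.get(event.type)
        if handler is None:
            return None                         # no function bound: nothing runs
        self.invocations.append(handler.__name__)
        return handler(event)


runtime = FunctionRuntime()


@runtime.register("object.uploaded")
def make_thumbnail(event: Event) -> str:
    # Hypothetical business logic: the only code the developer writes.
    return f"thumbnail for {event.payload['key']}"


if __name__ == "__main__":
    print(runtime.dispatch(Event("object.uploaded", {"key": "cat.png"})))
    print(runtime.dispatch(Event("user.deleted")))
```

In a real FaaS service, the platform (not your code) also provisions the servers, scales concurrent invocations, and meters each run; the sketch captures only the "code runs in response to events" part of the pattern.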

Hosting a software application on the internet typically requires provisioning a virtual or physical server and managing an operating system and web server hosting processes. With FaaS, the physical hardware, the virtual machine's operating system, and the web server software are all managed automatically by the hybrid cloud, as we've been describing it.

The Takeaway

Today, traditional IaaS is by far the most mature cloud pattern and holds the vast majority of market share in this space. But containers and serverless are technologies to watch and to begin employing opportunistically where it makes sense. As the world moves toward microservices architectures (where applications are decomposed into small parts that are deployed independently, manage their own data, and communicate via APIs), containers and serverless approaches will only become more common.

We noted earlier that serverless is great for the right types of applications. From a business perspective, the FaaS model lets you pay for compute in millisecond increments. Businesses love the agility, and because serverless reduces the need to hire backend infrastructure staff (you still need those people for IaaS), it reduces overall operational costs. That said, businesses have to accept reduced control over their apps at the most granular levels; furthermore, these apps can be more susceptible to vendor lock-in, and disaster recovery can be more complex.

What about developers? Well, they benefit too, starting with the obvious: zero system administration. Someone (or something) else handles scalability, which leaves more time to focus on code, which fosters innovation. That said, developers need to balance this "Zen" dev zone against additional architectural complexity: local testing becomes more challenging; the length of time a function can run is capped (we didn't cover that here because this book isn't about the details of technology); operational tooling is limited; and there are other issues not worth getting into here.

Wrapping It Up

Now that you've finished this chapter, we're confident you have a solid foundation in the different cloud patterns: what they are all about and where to use them. You have a solid understanding of how APIs are reshaping app dev in a massive way, and of how newer patterns (such as serverless and its offspring, FaaS) are areas you're likely to need to explore in the coming months, once you're done reading this book.

At this point we know you appreciate cloud as a capability, and understand how getting yourself (or the people you influence) out of the "cloud as a destination" mindset opens the aperture on all that cloud can deliver to your business. We hope you agree with us that hybrid cloud is the path forward, and that you understand how it can capture the 2.5x value that's been left on the table by the approach most organizations currently take with cloud technology (treating it like a destination).

Before we jump further into details around security, containers, and more, we want to give you a solid foundation in those application development epochs we talked about in Chapter 3. So get ready to "shift left," and we'll see you in Chapter 5.
