Chapter 6. High availability 327

3. Identify the nodes on which to deploy resources.

Identify the nodes where each resource group could run. The list of those nodes is a candidate value for the NodeList attribute of each resource in the group. Check that all the resources in a group can be deployed on the same set of nodes. If they cannot, the grouping may be incorrect; reconsider it.
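The node-set check in step 3 can be scripted once you have listed, for each resource in a group, the nodes it could run on. The following is a minimal sketch that assumes a hypothetical input format of one line per resource (the resource name followed by its candidate nodes). The resource names in the example line are illustrative only.

```shell
#!/bin/sh
# same_node_set: reads lines of the form "<resource> <node> <node> ..."
# (a hypothetical format) and succeeds only if every resource lists the
# same set of nodes, ignoring order and duplicates.
same_node_set() {
    first=""
    while read -r res nodes; do
        [ -n "$res" ] || continue              # skip blank lines
        # $nodes is left unquoted on purpose so it splits into words;
        # the list is then normalized: sorted, deduplicated, one line.
        norm=$(printf '%s\n' $nodes | sort -u | tr '\n' ' ')
        if [ -z "$first" ]; then
            first=$norm                        # reference set from the first resource
        elif [ "$norm" != "$first" ]; then
            echo "mismatch: $res can run on: $nodes" >&2
            return 1
        fi
    done
    return 0
}

# Example: a partition resource and its service IP share both nodes.
printf 'db2_partition0 db2rb01 db2rb02\nservice_ip db2rb02 db2rb01\n' | same_node_set && echo "grouping OK"
```

A nonzero exit status means at least one resource cannot follow the others to every node, which is the signal to revisit the grouping.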

4. Define relationships among resource groups.

Find the dependencies among resource groups. Begin by applying the following constraints:

– Partitions must be started one by one, in sequential order. Use StartAfter relationships among the partitions.

– Each partition depends on an NFS server running somewhere in the cluster. Use DependsOnAny relationships between the partitions and the NFS server.

To designate which partitions can be collocated, use the following relationships:

– The Collocated relationship between two resources ensures that they are located on the same node.

– The AntiCollocated relationship between two resources ensures that they are located on different nodes.

– The Affinity relationship between resources A and B encourages locating A on the same node where B is already running.

– The AntiAffinity relationship between resources A and B encourages locating A on a different node from the one where B is already running.
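The two start-up constraints in step 4 amount to one DependsOnAny relationship per partition plus a StartAfter chain between consecutive partitions. The sketch below only prints mkrel command lines for review and runs nothing. It assumes the System Automation form `mkrel -p <type> -S <source> -G <target> <name>`, where the source starts after, or depends on, the target. The resource names (db2_db2inst1_N_rs, nfsserver_rs) are hypothetical placeholders, not the names that regdb2salin generates. Check the syntax against the mkrel documentation for your release before running any printed line.

```shell
#!/bin/sh
# Dry-run sketch: prints (does not execute) mkrel commands for the
# partition start-up constraints. All resource names are hypothetical.

INSTANCE=db2inst1
NFS_RES=nfsserver_rs          # hypothetical NFS server resource name

# part_res N: hypothetical resource name for partition N
part_res() {
    echo "db2_${INSTANCE}_$1_rs"
}

# print_relationships N: relationships for partitions 0 .. N-1
print_relationships() {
    nparts=$1
    i=0
    while [ "$i" -lt "$nparts" ]; do
        src=$(part_res "$i")
        # Each partition needs the NFS server online on some node.
        echo "mkrel -p DependsOnAny -S IBM.Application:$src -G IBM.Application:$NFS_RES ${src}_dependsonany_nfs"
        if [ "$i" -gt 0 ]; then
            prev=$(part_res $((i - 1)))
            # Partition i may start only after partition i-1.
            echo "mkrel -p StartAfter -S IBM.Application:$src -G IBM.Application:$prev ${src}_startafter_${prev}"
        fi
        i=$((i + 1))
    done
}

# Two partitions, as in the sample configuration.
print_relationships 2
```

Generating the lines instead of running them keeps the chain easy to inspect. For N partitions you get N DependsOnAny lines and N-1 StartAfter lines.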

6.2.4 Basic implementation with the regdb2salin script

This section describes the basic implementation steps using the regdb2salin script, which are summarized as follows:

Creating a peer domain

Setting up a highly available NFS server

Checking the mount points for file systems

Creating an equivalency for network interfaces

Running the regdb2salin script

Sample configuration

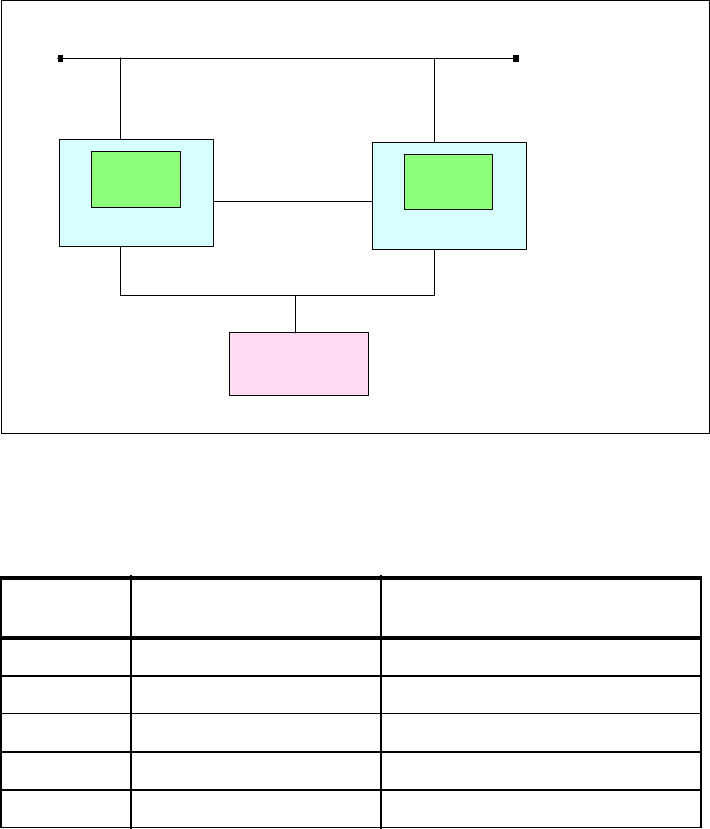

We use the configuration in Figure 6-6 on page 328 as an example throughout the rest of this section.

328 DB2 Integrated Cluster Environment Deployment Guide

Figure 6-6 A sample configuration for basic implementation

This example is a variant of our lab configuration, tailored for use with the regdb2salin script. The file systems listed in Table 6-1 reside on the DS4400.

Table 6-1 File systems used in the sample configuration

| Block device | Mount point | Usage |
|---|---|---|
| /dev/sdb1 | /db2sharedhome | The instance owner’s home directory |
| /dev/sdb2 | /varlibnfs | The NFS-related files |
| /dev/sdc1 | /db2db/db2inst1/NODE0000 | The database directory for partition 0 |
| /dev/sdc2 | /db2db/db2inst1/NODE0001 | The database directory for partition 1 |
| /dev/sde | N/A | The tie-breaker disk |

In this example, we also assume that:

The instance name is db2inst1.

The service IP address for database clients is 9.26.162.123.

LVM is not used.

/db2sharedhome is shared via NFS and mounted on /home/db2inst1.

One SCSI disk (/dev/sde) is used as a tie breaker.

[Figure 6-6 labels: nodes db2rb01 (db2inst1, Partition0) and db2rb02 (db2inst1, Partition1) on the public LAN, attached through a SAN to the DS4400; service IPs 9.26.162.123 and 9.26.162.124; other addresses 9.26.162.46, 9.26.162.61, 172.21.0.1, and 172.21.0.2.]


Creating a peer domain

Before defining resources, you must prepare the peer domain in which they will be deployed. Follow these steps:

1. Prepare participating nodes for secure communication.

For nodes to join the cluster, they must be prepared for secure communication within the cluster. Use the preprpnode command to enable secure communication on the nodes:

preprpnode hostname1 hostname2 ...

where hostname1, hostname2, ... are the host names of the nodes to be enabled. Run the command on each node that will join the domain. For our sample configuration, we enable two nodes, db2rb01 and db2rb02:

# preprpnode db2rb01 db2rb02

2. Create a peer domain.

To create a peer domain, use the mkrpdomain command:

mkrpdomain domainname hostname1 hostname2 ...

where domainname is the name of the domain to create and hostname1, hostname2, ... are the host names of the participating nodes. For our sample configuration, we create a peer domain named SA_Domain:

# mkrpdomain SA_Domain db2rb01 db2rb02

3. Start the peer domain.

Once you have created the domain, start it with the startrpdomain command:

# startrpdomain SA_Domain

It takes a while for the domain to come online. Use the lsrpdomain command to check the operational state (OpState) of the domain:

# lsrpdomain

# lsrpdomain

Name OpState RSCTActiveVersion MixedVersions TSPort GSPort

SA_Domain Online 2.3.3.1 No 12347 12348
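Because the domain takes a while to come online, a script that goes on to define resources can wait for it first. This is a minimal sketch. It assumes that lsrpdomain prints the column layout shown above, with the domain name in the first column and OpState in the second. The 10-second polling interval and the retry count are arbitrary choices, not RSCT defaults.

```shell
#!/bin/sh
# domain_is_online NAME: reads lsrpdomain-style output on stdin and
# succeeds only if the row for NAME has OpState "Online". A row such as
# "SA_Domain Pending online ..." does not match, because its second
# field is "Pending".
domain_is_online() {
    awk -v d="$1" '$1 == d && $2 == "Online" { found = 1 } END { exit !found }'
}

# wait_for_domain NAME [TRIES]: polls lsrpdomain every 10 seconds
# (requires RSCT on the node) and gives up after TRIES attempts.
wait_for_domain() {
    name=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        if lsrpdomain | domain_is_online "$name"; then
            return 0
        fi
        sleep 10
        tries=$((tries - 1))
    done
    echo "domain $name is not online" >&2
    return 1
}
```

On a cluster node, `wait_for_domain SA_Domain && echo ready` blocks until SA_Domain is online or until roughly five minutes of polling have passed.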

4. Create a tie breaker (optional).

A tie breaker is required in a cluster of only two nodes. The tie breaker uses one SCSI disk exclusively for cluster quorum duties. The disk can be a very small logical disk on a disk array, but it must be dedicated to the tie breaker.
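You can see why two nodes need a tie breaker by using a simplified majority-quorum model. In this model, a side of a split cluster keeps quorum only if it holds more than half of the nodes, and an exact half is a tie that something else must resolve. The function below illustrates that rule under this assumption. It is not RSCT's actual quorum implementation.

```shell
#!/bin/sh
# quorum_state UP TOTAL: simplified majority-quorum model (illustration only).
# Prints "quorum" if UP is more than half of TOTAL, "tie" if it is exactly
# half, and "no-quorum" otherwise.
quorum_state() {
    up=$1
    total=$2
    if [ $((2 * up)) -gt "$total" ]; then
        echo quorum
    elif [ $((2 * up)) -eq "$total" ]; then
        echo tie
    else
        echo no-quorum
    fi
}

# Two-node cluster: both nodes up is a majority, but losing either node
# leaves exactly half, so a tie breaker has to decide which side survives.
quorum_state 2 2
quorum_state 1 2
```

With three or more nodes, a single failure still leaves a strict majority. With two nodes, every single-node failure is a tie.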

To create a tie breaker with a SCSI disk, you need four parameters that identify the disk: HOST, CHANNEL, ID, and LUN. With the block

Note: You cannot define resources until the domain is online. Make sure that the domain is online before you continue.
