Chapter 1. Proposed Implementation

The majority of model serving implementations today are based on representational state transfer (REST), which might not be appropriate for high-volume data processing or for use in streaming systems. Using REST requires streaming applications to go “outside” of their execution environment and make an over-the-network call for obtaining model serving results.

The “native” implementation of new streaming engines—for example, Flink TensorFlow or Flink JPPML—do not have this problem but require that you restart the implementation to update the model because the model itself is part of the overall code implementation.

Here we present an architecture for scoring models natively in a streaming system that allows you to update models without interruption of execution.

Overall Architecture

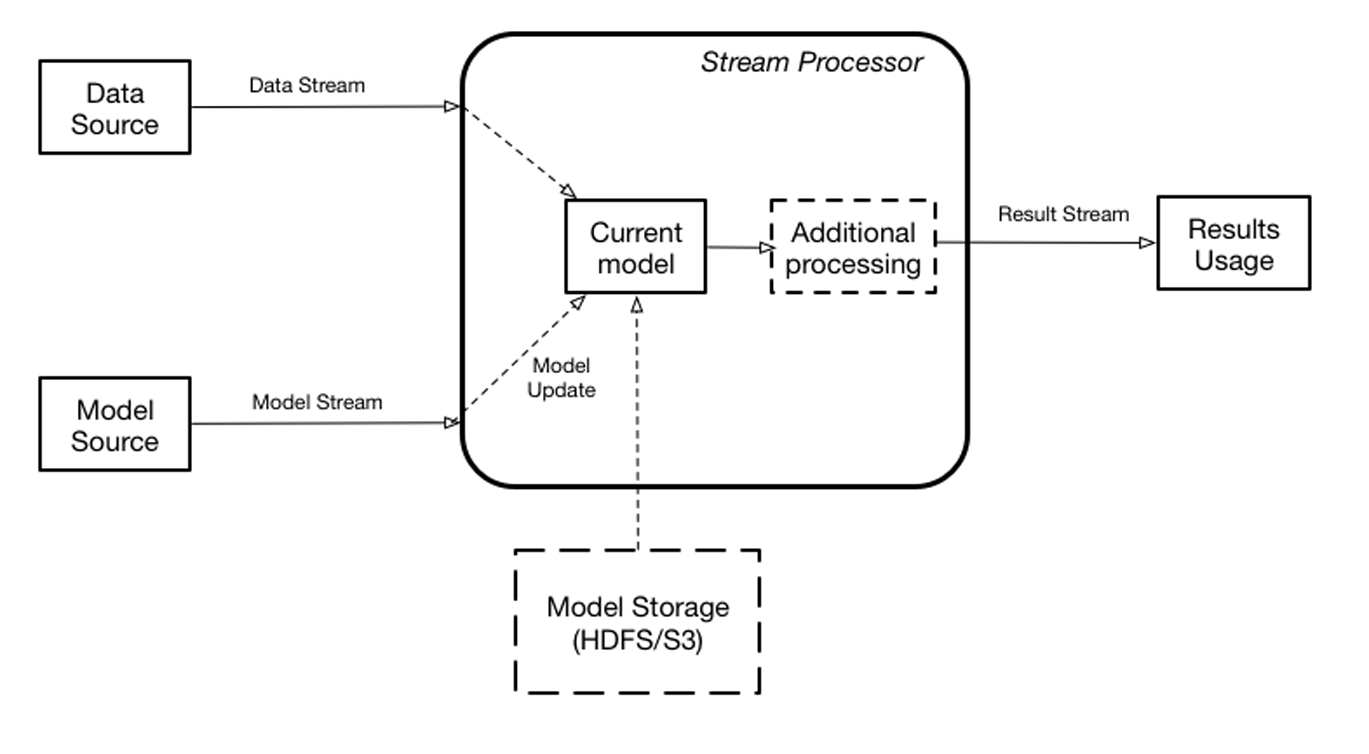

Figure 1-1 presents a high-level view of the proposed model serving architecture (similar to a dynamically controlled stream).

Figure 1-1. Overall architecture of model serving

This architecture assumes two data streams: one containing data that needs to be scored, and one containing the model updates. The streaming engine contains the current model used for the actual scoring in memory. The results of scoring can be either delivered to the customer or used by the streaming engine internally as a new stream—input for additional calculations. If there is no model currently defined, the input data is dropped. When the new model is received, it is instantiated in memory, and when instantiation is complete, scoring is switched to a new model. The model stream can either contain the binary blob of the data itself or the reference to the model data stored externally (pass by reference) in a database or a filesystem, like Hadoop Distributed File System (HDFS) or Amazon Web Services Simple Storage Service (S3).

Such approaches effectively using model scoring as a new type of functional transformation, which any other stream functional transformations can use.

Although the aforementioned overall architecture is showing a single model, a single streaming engine could score multiple models simultaneously.

Model Learning Pipeline

For the longest period of time model building implementation was ad hoc—people would transform source data any way they saw fit, do some feature extraction, and then train their models based on these features. The problem with this approach is that when someone wants to serve this model, he must discover all of those intermediate transformations and reimplement them in the serving application.

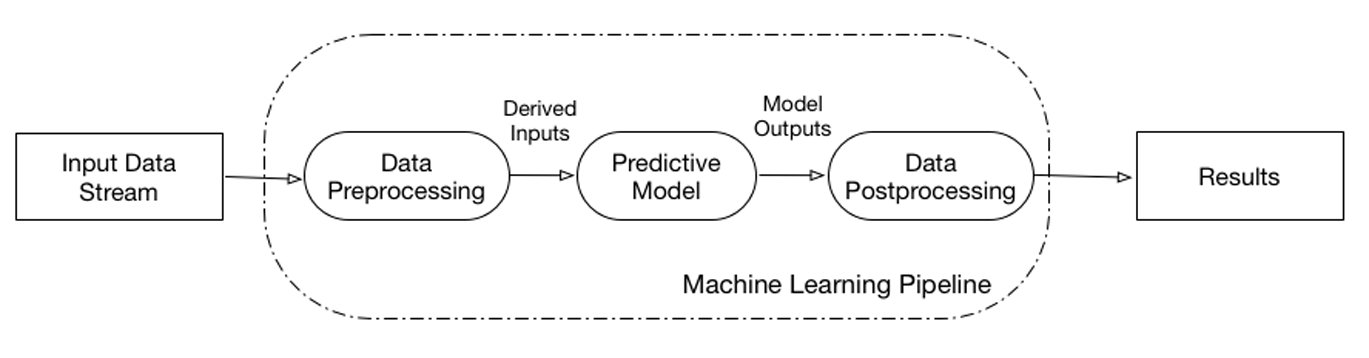

In an attempt to formalize this process, UC Berkeley AMPLab introduced the machine learning pipeline (Figure 1-2), which is a graph defining the complete chain of data transformation steps.

Figure 1-2. The machine learning pipeline

The advantage of this approach is twofold:

-

It captures the entire processing pipeline, including data preparation transformations, machine learning itself, and any required postprocessing of the machine learning results. This means that the pipeline defines the complete transformation from well-defined inputs to outputs, thus simplifying update of the model.

-

The definition of the complete pipeline allows for optimization of the processing.

A given pipeline can encapsulate more than one model (see, for example, PMML model composition). In this case, we consider such models internal—nonvisible for scoring. From a scoring point of view, a single pipeline always represents a single unit, regardless of how many models it encapsulates.

This notion of machine learning pipelines has been adopted by many applications including SparkML, TensorFlow, and PMML.

From this point forward in this book, when I refer to model serving, I mean serving the complete pipeline.

Get Serving Machine Learning Models now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.