Measuring model accuracy

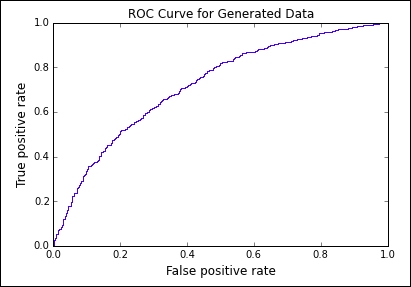

So, we know how to create a model that is "adequate", but what does this really mean? How can we differentiate whether one "adequate" model is better than another? A common approach is to compare the ROC curves. This one is generated from the simple model that we just created:

You're probably familiar with ROC curves. They plot the true positive rate we can achieve against the false positive rate we are willing to accept to get it. The basic takeaway is that we want the curve to get as close to the upper left corner as possible. In case you haven't used these visualizations before, the reason for this is that the more ...
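To make the trade-off concrete, here is a minimal sketch of how the points on an ROC curve can be computed, along with the area under the curve (AUC), a common single-number summary for comparing two curves. This is not the book's code: the function names `roc_points` and `auc` are hypothetical, and the sketch assumes binary labels (1 for positive, 0 for negative) and scores where a higher value means "more likely positive". Each threshold turns the scores into predictions, and each set of predictions yields one (false positive rate, true positive rate) point.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (false_positive_rate, true_positive_rate) pairs, one per threshold.

    Assumes labels are 1 (positive) or 0 (negative) and that a higher score
    means the model considers the example more likely to be positive.
    """
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative example")

    # Strictest possible threshold: nothing is predicted positive.
    points = [(0.0, 0.0)]
    # Lower the threshold through each distinct score, highest first, so the
    # false positive rate never decreases as we move along the curve.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area
```

For example, labels `[1, 1, 0, 1, 0, 0]` with scores `[0.9, 0.8, 0.7, 0.6, 0.4, 0.2]` give an area of 8/9 (about 0.889), while a model that scores every positive above every negative gets 1.0, the curve that runs straight up the left edge and along the top. Scoring two models on the same held-out labels and comparing their areas is one way to say which curve sits closer to the upper left corner, though the full curves still show where along the false positive range each model does better.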
