Building self-service tools to monitor high-volume time-series data

The O'Reilly Data Show Podcast: Phil Liu on the evolution of metric monitoring tools and cloud computing.

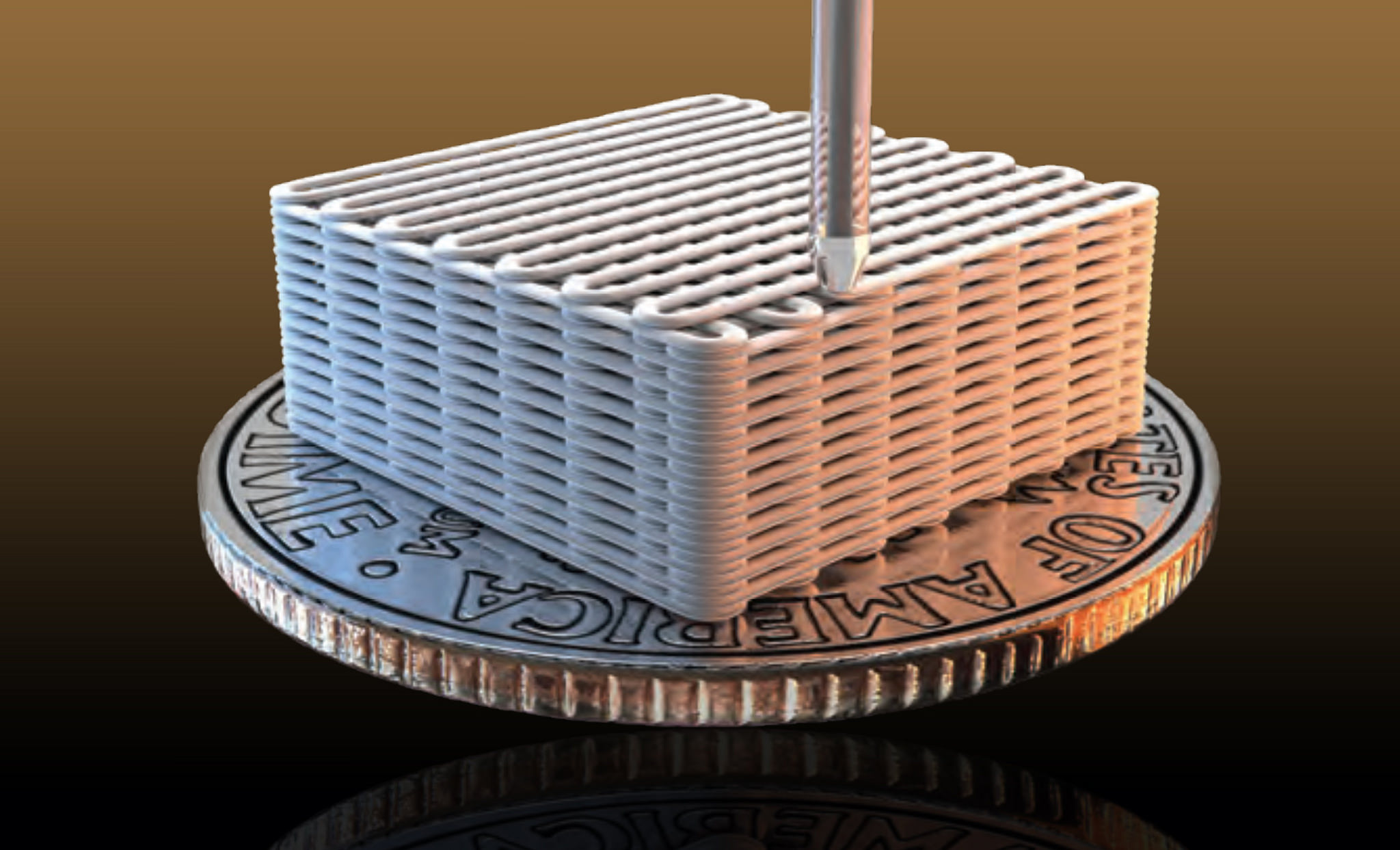

3D printing technique known as direct ink writing (source: Department of Energy/Flickr)

One of the main sources of real-time data processing tools is IT operations. In fact, a previous post I wrote on the re-emergence of real-time was, to a large extent, prompted by my discussions with engineers and entrepreneurs building monitoring tools for IT operations. In many ways, data centers are perfect laboratories: they are controlled environments managed by teams willing to instrument devices and software and to monitor fine-grained metrics.

During a recent episode of the O’Reilly Data Show Podcast, I caught up with Phil Liu, co-founder and CTO of SignalFx, a San Francisco Bay Area startup focused on building self-service monitoring tools for time series. We discussed hiring and building teams in the age of cloud computing, building tools for monitoring large numbers of time series, and lessons he’s learned from managing teams at leading technology companies.

Evolution of monitoring tools

Having worked at LoudCloud, Opsware, and Facebook, Liu has seen firsthand the evolution of real-time monitoring tools and platforms. He described how he watched the number of metrics grow to volumes that require large compute clusters:

One of the first services I worked on at LoudCloud was a service called MyLoudCloud. Essentially that was a monitoring portal for all LoudCloud customers. At the time, [the way] we thought about monitoring was still in a per-instance-oriented monitoring system. [Later], I was one of the first engineers on the operational side of Facebook and eventually became part of the infrastructure team at Facebook. When I joined, Facebook basically was using a collection of open source software for monitoring and configuration, so these are things that everybody knows — Nagios, Ganglia. It started out basically using just per-instance instant monitoring techniques, basically the same techniques that we used back at LoudCloud, but interestingly and very quickly as Facebook grew, this per-instance-oriented monitoring no longer worked because we went from tens or thousands of servers to hundreds of thousands of servers, from tens of services to hundreds and thousands of services internally.

Self-service analytics for developers

It’s impossible for users to track more than a handful of metrics (time series). Most real-time monitoring tools provide dashboards that a user can customize, and a few platforms leverage machine learning and analytics to provide efficient snapshots (e.g., network visualizations). As Liu explained, the mechanics of data collection and storage have evolved as the nature of (Web) applications has changed:

Companies like Twitter, Netflix, Google, Facebook — these companies that internally have a lot of engineering resources, or have been around for a while — have built their own analytics around metric time series … The common thing that we found, the things that worked best with Twitter and with Facebook is that you provide a self-service analytics, a time service analytic system so developers themselves can create their own dashboards to get insights about their own applications. There are a lot of advantages to this. One is that there is no centralized team that’s managing what should be monitored; individual teams could essentially expose things that are important for their applications — if my service goes down, is it my problem or did someone else downstream push a version that’s causing problems with my service? This type of information exposed is actually very helpful for the entire engineering team.

…

[At] SignalFx, we’re out to solve the monitoring problem for modern distributed applications, built within the last seven or eight years. A modern application, I would say, has some characteristics. One is that it is a microservice-based architecture. A little bit about microservices: traditionally, I’m going to make an analogy back to the LoudCloud days. Applications built in those days were running in monolithic application servers like WebLogic, where all the coordination of the system and the replication were all built into the app server, and then you have this one piece of software that’s running on a very large server that will self-host a lot of different business logic. In the past eight years or so, slowly people have migrated off of this idea and into sub components that run in a very fast network. Instead of having coordination be part of your application server, and people are using ZooKeeper for coordination, instead of having caching of your business objects, people are using memcache. Things are basically decomposed into individual network services. This really is what we think of as a modern application. In this environment, we think the best way to find out what’s happening is to collect fine-grain metrics data, open from the application at instances all the way down to the OS and send that information into a centralized data store for you to analyze the data in real time.
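To make the idea of a centralized store for fine-grain metrics a little more concrete, here is a minimal sketch in Python. It is not SignalFx's implementation or API; the `DataPoint` type, the `rollup` function, the service and host names, and the 10-second window are all assumptions chosen for illustration. It shows one simple way per-instance datapoints could be rolled up into per-service time series, so that a team looks at a service as a whole rather than at individual servers.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class DataPoint:
    """One measurement reported by one instance (hypothetical schema)."""
    service: str    # logical service the instance belongs to
    host: str       # instance that reported the value
    metric: str     # metric name, e.g. "latency_ms"
    timestamp: int  # seconds since epoch
    value: float


def rollup(points, window_seconds=10):
    """Aggregate datapoints across hosts per (service, metric, window start)."""
    buckets = defaultdict(list)
    for p in points:
        # Align each timestamp to the start of its fixed-size window.
        window_start = p.timestamp - (p.timestamp % window_seconds)
        buckets[(p.service, p.metric, window_start)].append(p.value)
    return {
        key: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for key, vals in buckets.items()
    }


# Illustrative data only: three instances across two services.
points = [
    DataPoint("cache", "host-1", "latency_ms", 100, 2.0),
    DataPoint("cache", "host-2", "latency_ms", 103, 4.0),
    DataPoint("cache", "host-1", "latency_ms", 112, 9.0),
    DataPoint("coordination", "host-3", "latency_ms", 101, 1.0),
]
summary = rollup(points)
for key in sorted(summary):
    print(key, summary[key])
```

A real system would ingest datapoints continuously and at far higher volume; the sketch only illustrates the shift from per-instance values to service-level aggregates that a dashboard could chart.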

“Cloud computing” engineering teams require different skills

It just so happens that many recent startups building metric monitoring tools are “cloud first” (SaaS) companies. As I noted in a recent post on Spark, enterprises seem to be more open about moving some tasks, particularly (real-time) analytics, to the cloud. As I’ve learned through numerous conversations, building an engineering team focused on the “cloud” requires members with different skills and mindsets. In recent conversations, I’ve jokingly noted that DevOps in such companies really connotes “our developers are skilled at IT operations.” Liu has built several engineering teams, and he described the approach at SignalFx:

We don’t have an operations team here at SignalFx; we have a notion of all the engineering teams providing what we call services. All [SignalFx] engineers have this mindset where, not only am I building software, but I’m also running the software because it’s end-to-end for me, so it’s not just designing and then giving it a QA, and then having it shrink wrapped, shipped and then rinse, repeat. I live with my software. I’m building software that people are using. If something is wrong and my customers are complaining, I need to figure out why that is; I need to get on it right away. That is a completely different mindset than distributed engineering [from] 15 or 20 years ago. All engineers coming up now have more operations background than they did before. They’re not experts of operations, but their building block is AWS VM right now instead of a physical server. Their building blocks are services like the ones that we’ve mentioned: ZooKeeper, Kafka, Cassandra — that’s their building block right now; they’re not libraries. It’s quite a different mindset just from what it was 10 years ago I would say.

You can listen to our entire interview in the SoundCloud player above, or subscribe through Stitcher, SoundCloud, TuneIn, or iTunes.

For more on event data and real-time analysis, check out “I Heart Logs,” by Jay Kreps, and “Introduction to Apache Kafka,” by Gwen Shapira.