Data architectures for streaming applications

The O’Reilly Data Show Podcast: Dean Wampler on streaming data applications, Scala and Spark, and cloud computing.

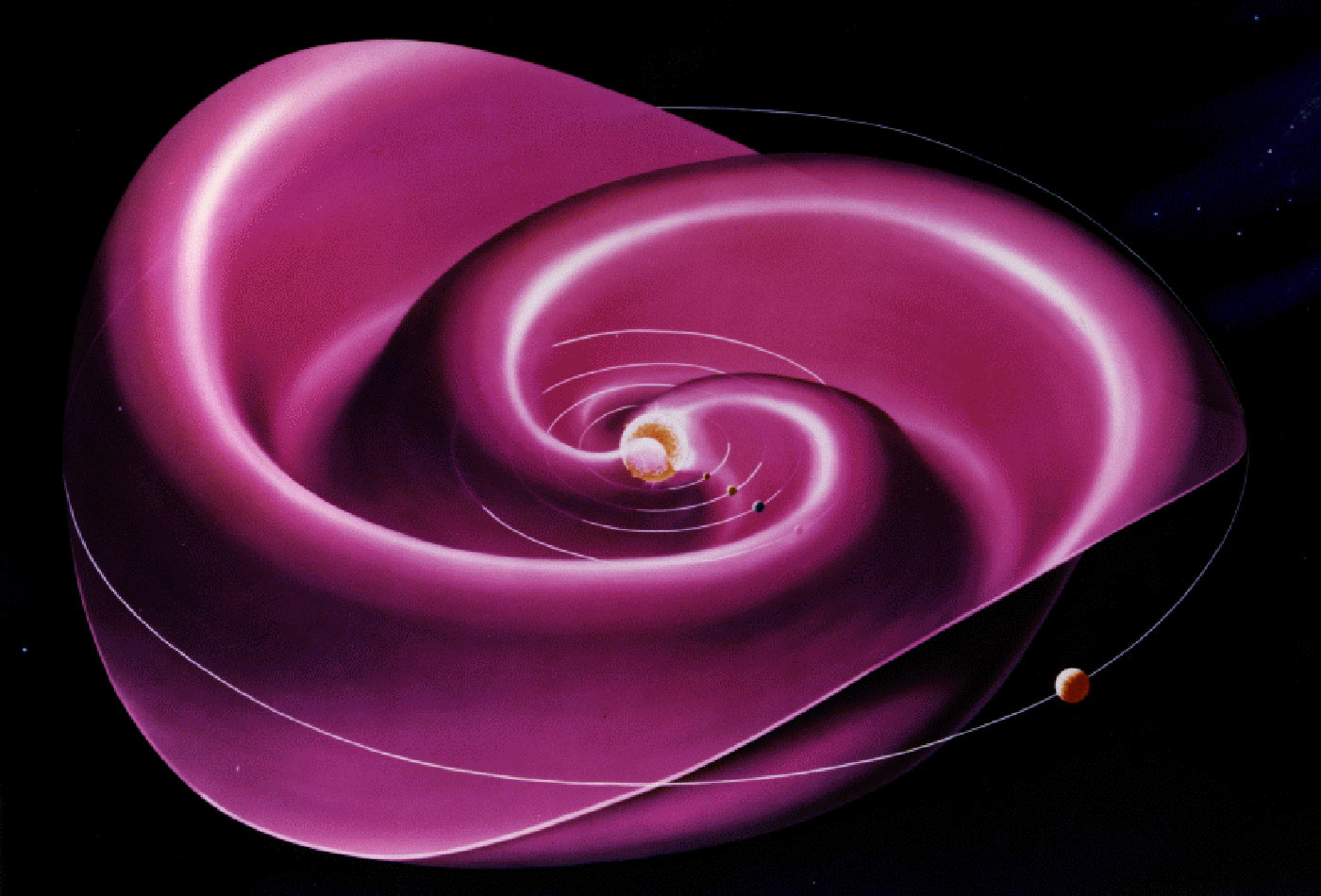

The heliospheric current sheet results from the influence of the Sun's rotating magnetic field on the plasma in the interplanetary medium (solar wind). (source: NASA on Flickr)


In this episode of the O’Reilly Data Show I sat down with O’Reilly author Dean Wampler, big data architect at Lightbend. We talked about new architectures for stream processing, Scala, and cloud computing.

Our interview dovetailed with conversations I’ve had lately, where I’ve been emphasizing the distinction between streaming and real time. Streaming connotes an unbounded data set, whereas real time is mainly about low latency. The distinction can be blurry, but it’s something that seasoned solution architects understand. While most companies deal with problems that fall under the realm of “near real time” (end-to-end pipelines that run somewhere between five minutes and an hour), they still need to deal with data that is continuously arriving. Part of what’s interesting about the new Structured Streaming API in Apache Spark is that it opens up streaming (or unbounded) data processing to a much wider group of users (namely data scientists and business analysts).
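To make the distinction concrete, here is a minimal sketch in plain Python (not Spark's Structured Streaming API). It assumes a hypothetical source, `event_stream`, that never ends, and a hypothetical helper, `tumbling_window_counts`, that emits a result each time a five-minute window closes. The data set is unbounded, yet the latency is only "near real time": a result appears once the first event of the next window arrives.

```python
from collections import Counter
from itertools import count, islice
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event key); hypothetical schema


def event_stream() -> Iterator[Event]:
    """Hypothetical unbounded source: yields one event every 100 seconds, forever."""
    keys = ["click", "view", "click", "purchase"]
    for i in count():
        yield (i * 100, keys[i % len(keys)])


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, counts) each time a window closes.

    Assumes events arrive in timestamp order; late or out-of-order
    data is deliberately ignored in this sketch.
    """
    current_start = None
    counts: Counter = Counter()
    for ts, key in events:
        start = ts - ts % window_seconds
        if current_start is None:
            current_start = start
        if start != current_start:
            # The previous window is complete: emit it and start a new one.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[key] += 1


# The stream never ends, so we take only the first two closed windows.
results = list(islice(tumbling_window_counts(event_stream(), 300), 2))
for window_start, window_counts in results:
    print(window_start, window_counts)
```

The processing loop never needs the whole data set in memory; it keeps only the state of the current window. Shrinking `window_seconds` lowers latency without changing the fact that the input is unbounded, which is the sense in which streaming and real time are separate concerns.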

Here are some highlights from our conversation:

The growing interest in streaming

There are two reasons. One is that it’s an emerging area, where people are struggling to figure out what to do and how to make sense of all these tools. It does also raise the bar in terms of production issues compared to a batch job that runs for a couple of hours. … But a streaming job is supposed to run reliably for months, or whatever. Suddenly, you’re now out of the realm of the back office and into the realm of the bleeding-edge, always-on internet that your distributed computing friends are fretting over all the time. The problems are harder. The last point I’ll make is that streaming fits the model of stuff that Lightbend has traditionally worked on, which has been more highly available, highly reliable systems and tools to support that; so, it’s a more natural fit than just the general data science and data engineering problems.

Stream processing frameworks

We’re seeing this same sort of Cambrian explosion of different options like we saw with the NoSQL databases in the 2000s. A lot of these will fall by the wayside, I think. … What I encourage people to do is, first, make sure you’re picking something that really has a vibrant community, like Spark, where it’s clear that it’s going to be around for a while, that people are going to keep moving forward. But then make sure you understand what the strengths and weaknesses of the system are.

Just enough Scala for Spark

In my view, no tool highlights the advantages of Scala and hides the disadvantages of Scala better than Spark does. I’m thinking more of the old RDD API than the newer Dataset API, but when you’re writing what are effectively data flows in Spark, it’s just a natural, very elegant way to express them when you use the Scala API. I think it’s even more expressive and clean than the Python API, which is historically a very concise and great way to write data science apps. … Certainly, at Lightbend, we’ve seen that big data and the fact that it’s being used a lot for tools like Spark and Kafka has driven interest in Scala in general.

There were a lot of people who were actually starting to use Scala for the first time because of Spark; they didn’t really want to become Scala experts, but needed to know enough to be productive, and also wanted to learn some of the cool tricks that make it so elegant. That’s really the genesis of the tutorial that I’m going to give at Strata + Hadoop World New York and Singapore, and we’re also working on video training for O’Reilly on the same material, designed to help you avoid the dark corners, just give you the key things that are really so useful, and then you can take it from there.

Related resources:

- Just Enough Scala for Spark: Dean Wampler is teaching this new three-hour tutorial at Strata + Hadoop World New York (Sept 27, 2016) and Singapore (Dec 6, 2016).

- Uber’s case for incremental processing on Hadoop

- Making sense of stream processing

- Analyzing data in the Internet of Things

This post and podcast are part of a collaboration between O’Reilly and Lightbend. See our statement of editorial independence.