Natural language analysis using Hierarchical Temporal Memory

The O’Reilly Data Show Podcast: Francisco Webber on building HTM-based enterprise applications.

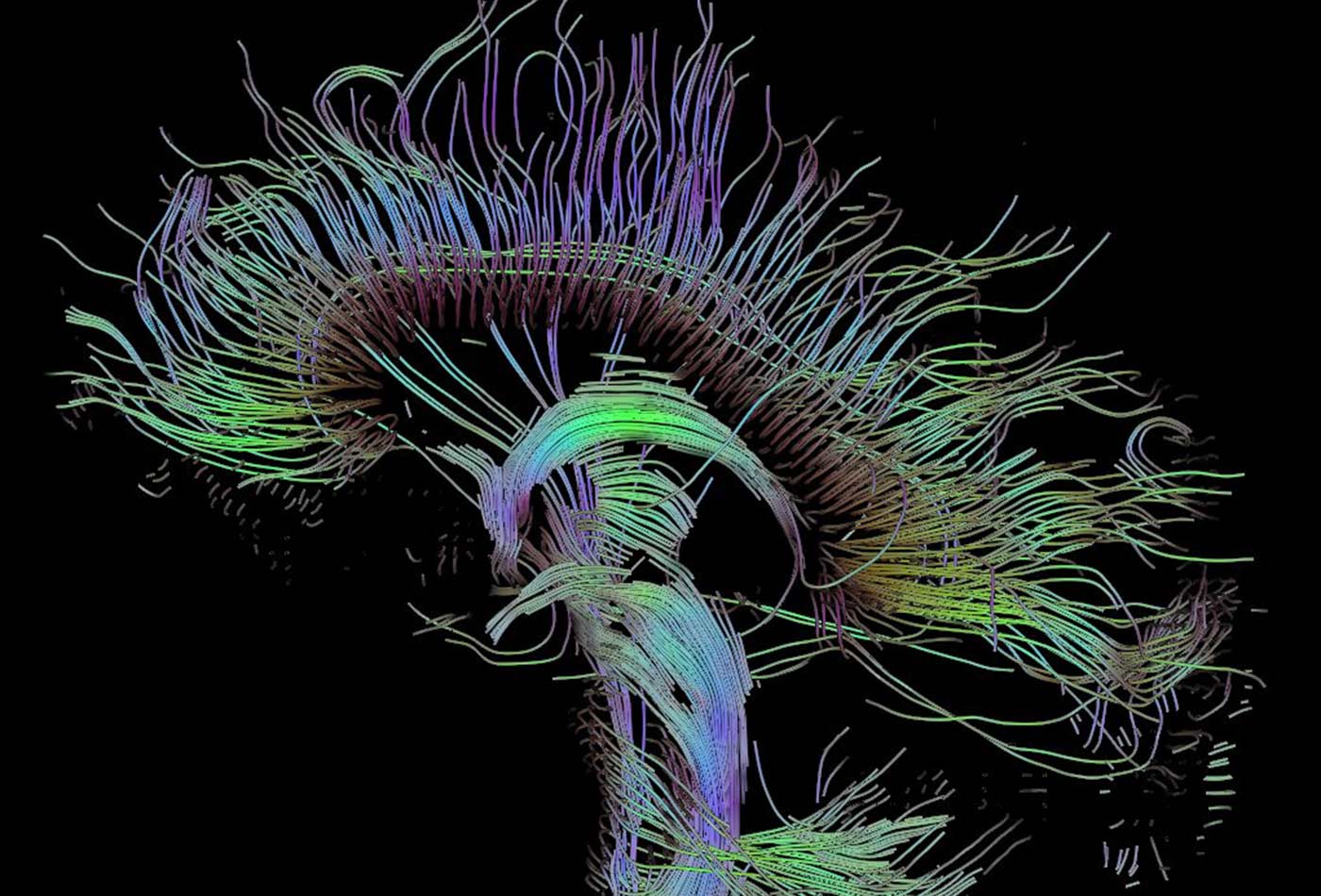

Visualization of a DTI measurement of a human brain. (source: Thomas Schultz on Wikimedia Commons)

In this episode of the Data Show, I spoke with Francisco Webber, founder of Cortical.io, a startup that is applying tools based on Hierarchical Temporal Memory (HTM) to natural language understanding. While HTM has been around for more than a decade, few companies have released products based on it (at least compared to other machine learning methods). Numenta, an organization developing open source machine intelligence based on the biology of the neocortex, maintains a community site featuring showcase applications. Webber's company has been building tools based on HTM and applying them to large volumes of text in a variety of industries; financial services has been a particularly strong vertical for Cortical.io.

Here are some highlights from our conversation:

Important aspects of HTM

Time is encoded in the stream of data itself; the system is simply connected to a stream of information. HTM algorithms operate online, working out what is actually hidden in the data, what the system might want to extract, and how to react to the incoming data. The other aspect is that, whatever source the data comes from, it is always encoded in the same manner: data is turned into sparse distributed representations, which are large, very sparse binary vectors. A crucial part of the algorithm depends on the data being properly sparsified, which allows certain mechanisms to run very efficiently.
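To make "large, very sparse binary vectors" concrete, here is a minimal Python sketch of sparse distributed representations (SDRs) stored as sets of active-bit indices. The sizes (2,048 bits, 40 active) and the helper names `random_sdr`, `overlap`, `matches`, and `add_noise` are illustrative assumptions, not Numenta's or Cortical.io's API. The sketch shows one mechanism that sparsity makes cheap: comparing two patterns is just counting shared active bits, and a noisy copy of a pattern still overlaps strongly with the original while unrelated patterns barely overlap at all.

```python
import random

# Illustrative sizes (assumptions, not values from the interview):
# a 2,048-bit vector with 40 active bits, i.e. about 2% sparsity.
N_BITS = 2048
N_ACTIVE = 40


def random_sdr(rng: random.Random, n: int = N_BITS, w: int = N_ACTIVE) -> frozenset:
    """Return an SDR stored as the set of indices of its active (1) bits."""
    return frozenset(rng.sample(range(n), w))


def sparsity(sdr: frozenset, n: int = N_BITS) -> float:
    """Fraction of bits that are active."""
    return len(sdr) / n


def overlap(a: frozenset, b: frozenset) -> int:
    """Bits active in both SDRs; equals the dot product of the binary vectors."""
    return len(a & b)


def matches(stored: frozenset, candidate: frozenset, threshold: int = 20) -> bool:
    """Treat two SDRs as the same pattern if they share at least `threshold` bits."""
    return overlap(stored, candidate) >= threshold


def add_noise(rng: random.Random, sdr: frozenset, flips: int, n: int = N_BITS) -> frozenset:
    """Move `flips` active bits to randomly chosen, previously inactive positions."""
    kept = set(rng.sample(sorted(sdr), len(sdr) - flips))
    inactive = [i for i in range(n) if i not in sdr]
    kept.update(rng.sample(inactive, flips))
    return frozenset(kept)


rng = random.Random(0)
a = random_sdr(rng)
b = random_sdr(rng)
noisy_a = add_noise(rng, a, flips=10)

print(sparsity(a))           # 0.01953125
print(overlap(a, noisy_a))   # 30
print(matches(a, noisy_a))   # True
print(overlap(a, b))         # unrelated random SDRs share very few bits
```

The set-of-indices representation is a design choice for the sketch: with only about 2% of bits active, storing and intersecting the active positions is far cheaper than working with the full dense vector.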

Another important aspect is that the brain is not a processor; it's actually a memory system. It's just a different kind of memory system, one in which storing information is literally processing. What the brain stores are sequences of patterns. If certain sequences of patterns recur, the system memorizes them even more strongly. Later, when a specific input comes along and the HTM has already seen part of a sequence like it, it can predict which pattern will come next. If the sequence was properly learned, and the system was sufficiently exposed to that kind of sequence, the prediction will be correct.
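The idea that "storing is processing" and that learned sequences enable prediction can be illustrated with a toy sequence memory. This is a hedged sketch, not HTM's temporal memory algorithm: real HTM represents high-order context using cells within columns, while this stand-in only remembers first-order transitions (which pattern followed which). The class `ToySequenceMemory`, its `match_threshold` parameter, and the tiny hand-made patterns are hypothetical names chosen for illustration.

```python
from collections import defaultdict


class ToySequenceMemory:
    """First-order sequence memory: storing a transition *is* the learning step."""

    def __init__(self, match_threshold: int = 2):
        # transitions[previous_pattern][next_pattern] -> number of times seen
        self.transitions = defaultdict(lambda: defaultdict(int))
        self.match_threshold = match_threshold
        self.previous = None

    def reset(self) -> None:
        """Mark the start of a new, unrelated sequence."""
        self.previous = None

    def observe(self, pattern: frozenset) -> None:
        """Consume one pattern from the stream (online; no separate training pass)."""
        if self.previous is not None:
            # Recurring transitions get higher counts, i.e. are remembered more strongly.
            self.transitions[self.previous][pattern] += 1
        self.previous = pattern

    def predict(self, pattern: frozenset):
        """Return the most frequent successor of the best-matching stored pattern, or None."""
        best, best_overlap = None, 0
        for context in self.transitions:
            shared = len(context & pattern)
            if shared > best_overlap:
                best, best_overlap = context, shared
        if best is None or best_overlap < self.match_threshold:
            return None  # nothing similar enough has been seen before
        successors = self.transitions[best]
        return max(successors, key=successors.get)


# Tiny hand-made "SDRs" (sets of active bit indices), for illustration only.
A, B, C, D = (frozenset(range(i, i + 3)) for i in (0, 10, 20, 30))

memory = ToySequenceMemory()
for sequence in [(A, B, C)] * 3 + [(A, B, D)]:
    memory.reset()
    for pattern in sequence:
        memory.observe(pattern)

print(memory.predict(B) == C)                        # True: B -> C seen 3 times, B -> D once
print(memory.predict(frozenset({10, 11, 99})) == C)  # True: a noisy B still matches B
print(memory.predict(frozenset({50, 51, 52})))       # None: unfamiliar input
```

Because this memory is first-order, it cannot tell "A B C" apart from "X B D" once it reaches B; distinguishing such contexts is exactly what HTM's cell-level sequence memory is designed for, and what this sketch leaves out.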

Natural language and HTM

We first convert text into a sparse distributed representation. What needs to be done is something very similar to what a human needs. For example, to become a medical doctor, you need to go to university, you need to read a certain number of books, you need to listen to a certain number of lectures until you have sufficient data to create a semantic map of understanding in your brain. You need to actually train your brain with input.

Now, the difference with training as you might see it in machine learning, for example, is that we humans don’t need to read five million books to become a doctor—we can read a couple of books about a couple of things, and from a learning perspective, we may not know every possible utterance, but we know how to understand utterances in the medical field. After having read my medical textbooks, I know about the medical language and I know about what this language represents. With that knowledge, I can now start to read a paper, and I can understand this paper or the work that has been done and described in the paper.

We train on a relatively small set of training material. For example, to train what we call general English, we took 400,000 pages from Wikipedia and extracted a semantic map from them. It's completely unsupervised. You can imagine this as creating a self-organizing map of the training material from the Wikipedia pages, where that material is first cut into little snippets.
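One way to picture how text could become a sparse binary representation in this setup is sketched below, under assumptions of our own rather than as Cortical.io's actual pipeline: training text is cut into snippets, each snippet gets a position on a map, and a word's "fingerprint" is the set of positions of the snippets that contain it. To stay short, the sketch skips the self-organizing map step and simply places snippet i at position i; in the approach Webber describes, the map is learned so that snippets with similar content end up near each other. The corpus is a made-up toy stand-in for Wikipedia, and all function names are hypothetical.

```python
import re
from collections import defaultdict


def cut_into_snippets(text: str) -> list:
    """Split text into sentence-sized snippets, each a list of lowercase words."""
    snippets = []
    for part in re.split(r"[.!?]", text):
        words = re.findall(r"[a-z]+", part.lower())
        if words:
            snippets.append(words)
    return snippets


def word_fingerprints(snippets: list) -> dict:
    """Map each word to the set of map positions of the snippets that contain it.

    Simplification: snippet i is simply placed at position i. A self-organizing
    map would instead place snippets with similar content near each other.
    """
    positions = defaultdict(set)
    for position, snippet in enumerate(snippets):
        for word in snippet:
            positions[word].add(position)
    return {word: frozenset(p) for word, p in positions.items()}


def to_binary_vector(fingerprint: frozenset, size: int) -> list:
    """Expand a fingerprint into an explicit binary vector."""
    return [1 if i in fingerprint else 0 for i in range(size)]


def similarity(a: frozenset, b: frozenset) -> float:
    """Shared positions, normalized by the smaller fingerprint."""
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


# Made-up toy corpus standing in for the Wikipedia training pages.
corpus = (
    "The doctor examined the patient. The patient thanked the doctor. "
    "The nurse helped the patient. The bank raised interest rates. "
    "Investors sold the stock. The stock fell after the bank report."
)
snippets = cut_into_snippets(corpus)
fp = word_fingerprints(snippets)

print(to_binary_vector(fp["doctor"], len(snippets)))  # [1, 1, 0, 0, 0, 0]
print(similarity(fp["doctor"], fp["patient"]))        # 1.0
print(similarity(fp["bank"], fp["stock"]))            # 0.5
print(similarity(fp["doctor"], fp["stock"]))          # 0.0
```

No labels are involved anywhere: words end up with overlapping fingerprints purely because they occur in the same snippets, which is the unsupervised property the interview emphasizes.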

Francisco Webber is giving a talk on AI-powered natural language understanding applications in the financial industry at the O’Reilly Artificial Intelligence Conference in New York, June 26-29, 2017.

Related resources:

- AI is not a matter of strength but of intelligence: Francisco Webber’s talk at the 2016 O’Reilly Artificial Intelligence conference.

- Introducing model-based thinking into A.I. systems (a Data Show episode on probabilistic machine learning and probabilistic programming)

- The Deep Learning video collection (Strata + Hadoop World 2016)

- How big compute is powering the deep learning rocket ship