Practical machine learning techniques for building intelligent applications

The O’Reilly Data Show Podcast: Mikio Braun on practical data science, deep neural networks, machine learning, and AI.

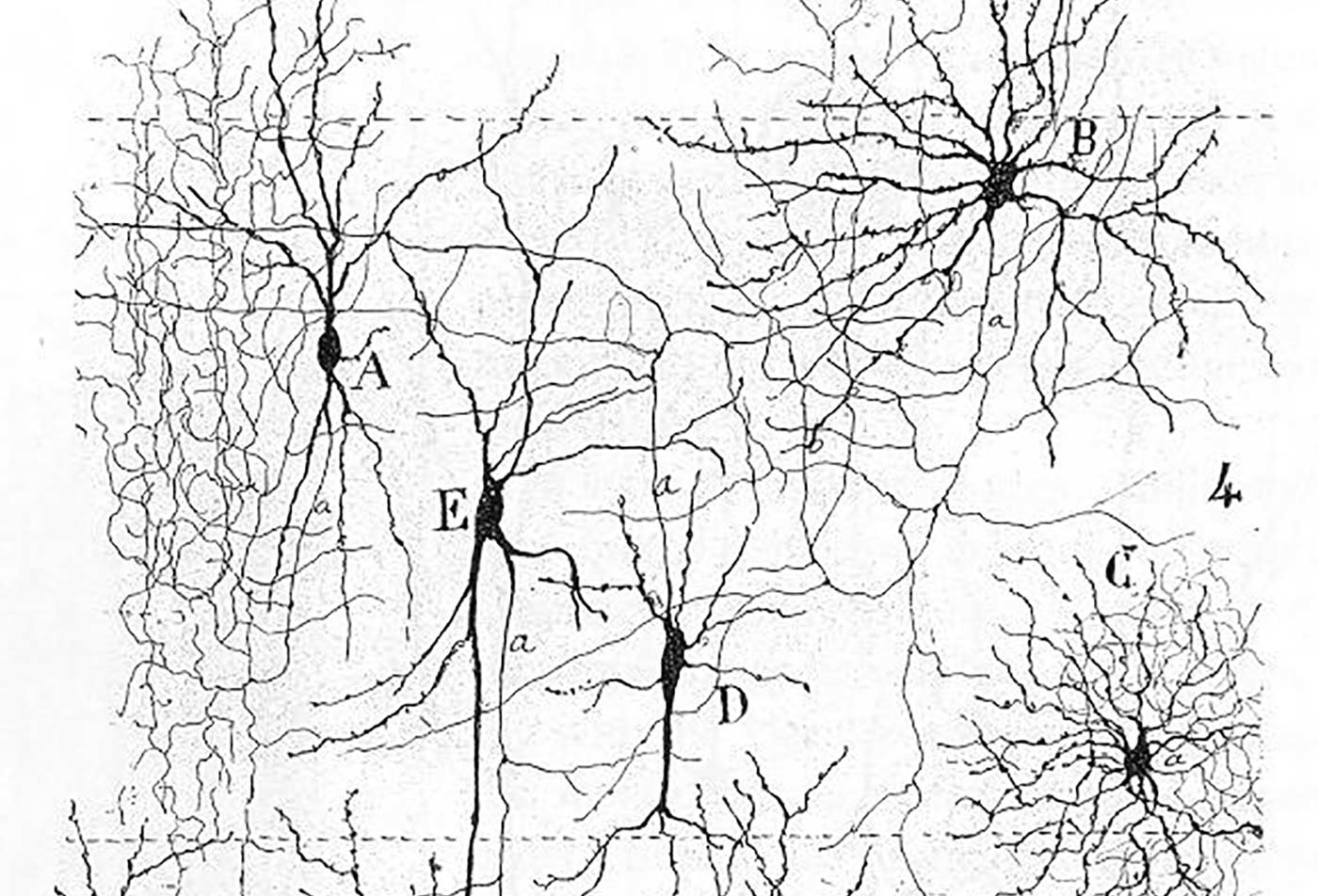

From "Texture of the Nervous System of Man and the Vertebrates" by Santiago Ramón y Cajal, 1898. (source: Wikimedia Commons)

In this episode of the O’Reilly Data Show, I spoke with Mikio Braun, delivery lead and data scientist at Zalando. After many years in academia, Braun recently decided to switch to industry. He shared some observations about building large-scale systems, particularly about deploying data applications in production. Given his longstanding background as a machine learning researcher and practitioner, I wanted to get his take on topics like deep learning, hybrid systems, feature engineering, and AI applications.

Here are some highlights from our conversation:

Data scientists and software engineers

One thing that I have found extremely interesting is the way that data scientists and engineers work together, which is something I really wasn’t aware of before … When I was still at university, many of the people who ended up in data science were not actually computer scientists. We had many physicists … or electrical engineers. Usually, they are quite good at putting some math formula into code, but they don’t really know about software engineering.

I learned that there are also some things that data scientists are really good at and that software engineers, on the other hand, are a bit lacking in. That’s specifically when you work on more open-ended problems where there’s some exploratory component … For a classical software engineer, it’s really about code quality, building something. They always code for something that they assume will run for many years, so they put a lot of effort into having clean code, good design, and everything. That also means that if you give them more open-ended problems, it’s often very hard. If the problem is underspecified, I found it’s very hard for them to work effectively.

On the other hand, with data scientists, the starting point is often something like: “Here’s a bit of data. This is roughly what we want to have. Now you have to go ahead and try a lot of things, figure something out, and do experiments in a way that is more or less objective.”

Deep learning and neural networks: Past and present

Neural networks in general were invented in the 1980s, but of course, computers were much slower back then, so you couldn’t train networks of the size we have right now. Then, around the mid-2000s, a few new methods appeared for training really large networks. … They managed to solve really relevant real-world problems. … Because of that, they started to make money. If there’s money, then suddenly there are jobs. Suddenly things look very, very interesting for everyone.

… Over the years, they were solving more and more problems using deep learning. … It’s not like they really solved problems that couldn’t be solved before; they just showed that they could also solve them with deep learning, which of course is also nice … one method that seems to fit many, many application areas.

… The third reason why I think it’s quite popular is that now you have really good open source libraries, which everyone can plug together.

… When I was a student studying the first neural networks, the first lecture was just about backprop, and the next lecture was an exercise where you had to derive the update rules yourself and then implement them. Then, at some point, people realized you can do this using the chain rule in a way that lets you simply compose different network layers and then compute the update rule automatically.
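
The idea described here, composing layers so that the update rule falls out of the chain rule, is roughly what the automatic differentiation in modern deep learning libraries does. Below is a minimal sketch under simplifying assumptions: scalar inputs and hand-rolled classes (`Linear`, `Tanh`, `Sequential` are illustrative names, not any particular library’s API). Each layer only knows its own local derivative, and `Sequential.backward` chains them together in reverse order.

```python
import math


class Linear:
    """Scalar affine layer y = w * x + b with trainable parameters w and b."""

    def __init__(self, w, b):
        self.w, self.b = w, b
        self.grad_w = self.grad_b = 0.0

    def forward(self, x):
        self.x = x  # cache the input; backward() needs it
        return self.w * x + self.b

    def backward(self, grad_out):
        # Chain rule: dL/dw = dL/dy * x and dL/db = dL/dy * 1
        self.grad_w = grad_out * self.x
        self.grad_b = grad_out
        # Pass dL/dx = dL/dy * w on to the previous layer
        return grad_out * self.w


class Tanh:
    """Parameter-free tanh nonlinearity."""

    def forward(self, x):
        self.y = math.tanh(x)  # cache the output, since tanh'(x) = 1 - tanh(x)^2
        return self.y

    def backward(self, grad_out):
        return grad_out * (1.0 - self.y ** 2)


class Sequential:
    """Composes layers: forward in order, backward in reverse order."""

    def __init__(self, *layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def backward(self, grad_out):
        for layer in reversed(self.layers):
            grad_out = layer.backward(grad_out)
        return grad_out


def squared_error(pred, target):
    """Return the loss L = (pred - target)^2 and its derivative dL/dpred."""
    return (pred - target) ** 2, 2.0 * (pred - target)


# A two-layer network assembled from reusable pieces (weights chosen arbitrarily)
net = Sequential(Linear(0.5, -0.1), Tanh(), Linear(1.5, 0.2))
first = net.layers[0]
x, target = 0.8, 1.0

loss, grad = squared_error(net.forward(x), target)
net.backward(grad)  # fills in grad_w / grad_b for every Linear layer

# Sanity check: central finite difference of L with respect to the first weight
eps = 1e-6
first.w += eps
loss_plus, _ = squared_error(net.forward(x), target)
first.w -= 2 * eps
loss_minus, _ = squared_error(net.forward(x), target)
first.w += eps  # restore the original weight
numeric = (loss_plus - loss_minus) / (2 * eps)
print(f"backprop: {first.grad_w:.6f}  finite difference: {numeric:.6f}")
```

Nobody had to derive the gradient of the whole network by hand: adding another layer only means writing its local `forward`/`backward` pair. Real libraries operate on tensors rather than scalars, but the bookkeeping is the same in spirit.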

… On the other hand, I think what people usually don’t admit easily is what you said before — the architecture of these networks is something that’s very, very complicated. … It also takes a really long time to find the right architecture for a problem.

Feature engineering and learning representations

This is actually the most interesting question about these neural networks: do they or do they not somehow learn reasonable internal representations of the data? One of the last PhD students I supervised was actually working on this. … We could at least show that from layer to layer, you get a representation that is better suited to the kind of prediction you want to make.
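
One simple way to make “a representation better suited to the prediction” concrete is a linear probe: train a linear classifier on a layer’s output and see how well it does. The sketch below is a toy illustration, not the method used in the research mentioned above. The XOR data, the hand-wired `hidden_layer` weights (chosen by hand, not learned), and the perceptron probe are all assumptions chosen for brevity.

```python
def perceptron_accuracy(points, labels, epochs=100):
    """Train a perceptron (a linear probe with bias) and return its training accuracy."""
    dim = len(points[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):  # labels are +1 / -1
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified or on the boundary: update
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
    correct = sum(
        1 for x, y in zip(points, labels)
        if y * (sum(wi * xi for wi, xi in zip(w, x)) + b) > 0
    )
    return correct / len(points)


def hidden_layer(x):
    """Hypothetical hand-wired ReLU layer: h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1)."""
    s = x[0] + x[1]
    return [max(0.0, s), max(0.0, s - 1.0)]


# XOR: not linearly separable in the raw input space
inputs = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
labels = [-1, 1, 1, -1]

raw_acc = perceptron_accuracy(inputs, labels)
hidden_acc = perceptron_accuracy([hidden_layer(x) for x in inputs], labels)
print(f"linear probe on raw inputs:   {raw_acc:.2f}")
print(f"linear probe on hidden layer: {hidden_acc:.2f}")
```

No linear classifier can get more than three of the four XOR points right from the raw inputs, so the first probe stays below 100%. In the hidden-layer space the classes become linearly separable, and the perceptron reaches 100%. Whether such a representation amounts to a real “abstraction” is exactly the open question raised here.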

… As humans, we think we know that we get these really good abstractions. From the visual input, we very quickly get to a point where we have representations of objects and can reason about what they do. I think right now, it’s still unclear whether deep learning really has this kind of thing too, or whether it just learns something that lets it make good predictions. … I’m still waiting for an algorithm that is able to build internal representations of the world, which then allow it to reason about the world in a way that is very similar to what humans do.

Editor’s note: Mikio Braun will present a talk entitled Hardcore Data Science in Practice at Strata + Hadoop World London 2016.

Related resources:

- Building big data systems in academia and industry: previous Data Show episode featuring Mikio Braun

- Machine Learning – an O’Reilly Learning Path

- Strata + Hadoop World’s Hardcore Data Science day makes its European debut this coming June in London.

- AI and deep learning sessions at Strata + Hadoop World London 2016.