Josh Corman on the challenges of securing safety-critical health care systems

The O’Reilly Security Podcast: Where bits and bytes meet flesh, misaligned incentives, and hacking the security industry itself.

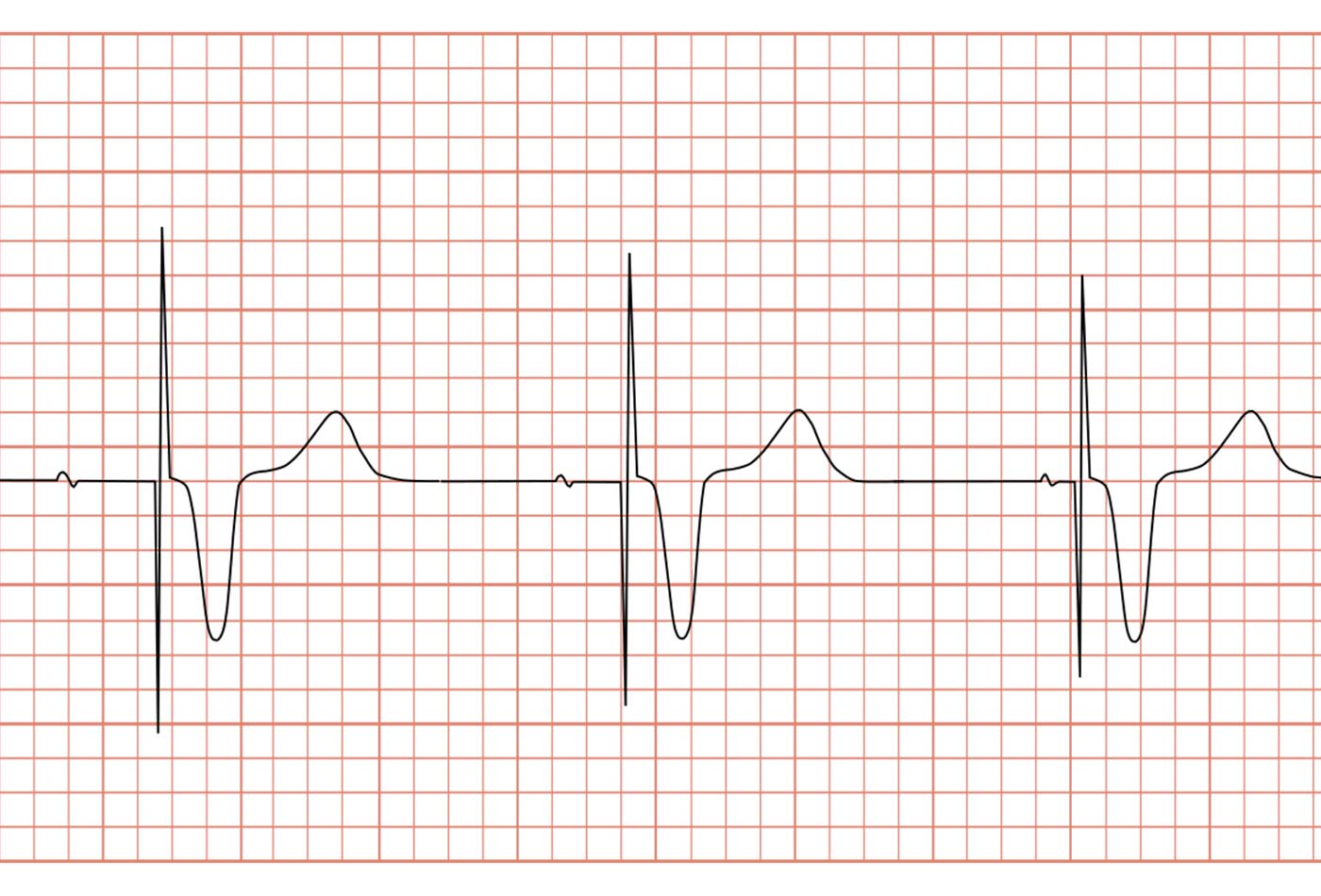

ECG pacemaker syndrome. (source: GiggsHammouri on Wikimedia Commons)

In this episode, I talk with Josh Corman, co-founder of I Am the Cavalry and director of the Cyber Statecraft Initiative at the non-profit Atlantic Council. We discuss his recent work advising the White House and Congress on the many issues lurking in safety-critical systems in the health care industry; the misaligned incentives across health care, regulatory bodies, and the software industry; and the recent incident between MedSec and St. Jude regarding St. Jude's medical devices.

Here are some highlights:

Where bits and bytes meet flesh

I asked Josh to comment on his advisory role with the White House for the Presidential Commission on Enhancing Cybersecurity:

Previous testimony from JPMorgan Chase said that they had over 2,000 full-time security people and they spend over $600 million a year securing things and they still get breached pretty routinely. About 100 of the Fortune 100 companies had had a material loss of intellectual property or trade secrets in the last couple years. If you take a step back strategically, one could argue that on a long enough time line our failure rate is 100%. If we can’t secure big banks with $600 million and 2,000 people, how do you secure a hospital with zero security staff and almost no security budget?

In many cases, we know what to secure, or even how to secure it, but we lack the incentives to do so—some of the commissioners are surprised by this, but it’s encouraging. They’re looking at really controversial ideas like software liability. One of the reasons we have such terrible software is there’s really no penalty for building and shipping terrible software. It’s controversial because if you introduce something like software liability in a casual or cavalier way, you could destroy the entire software industry.

Down the rabbit hole of legacy health care systems

When asked about his work on the HHS Cybersecurity Task Force for Congress, Josh laid bare the stark realities of health care security in a world of interconnected devices and legacy technology and systems:

There’s this thing called “meaningful use” in hospital environments. Reimbursement for medical investment was tied to meaningful use. [The health care industry] was encouraged to move rapidly from paper records to electronic records, and so they essentially took a whole bunch of medical devices that were never threat modeled, designed, architected, and implemented to be connected to anything and then forced them to be connected to everything. What that means is that even if a hospital has that 2,000 person security staff that is used to securing a bank or JPMorgan Chase, they can’t achieve the same level of network security possible in a banking environment because of the unintended consequences of meaningful use. We’re chasing rabbits down the rabbit hole and it goes a lot further than I think anybody has realized. There are some seemingly intractable problems in this long tail of Windows XP and legacy, outdated, unsupported operating systems being the overwhelming majority of the equipment in these hospitals, and they have scant security talent and budget and resources to even operate the old stuff. It’s pretty ugly.

Misaligned incentives

In my testimony to the White House, I said that for some of these things, we know what the fix is. We actually know how to completely eliminate SQL injection. We know how, but we don’t do it. I think in many cases we have technical solutions; we lack the incentives and the political will. And when you think about someone who has the means, motive, and opportunity to hurt the public through this irrational dependence on connected technology and safety critical spaces like hospitals, I don’t think we have to make perfect things. I think what we have to do is drain the low hanging fruit and the hygiene issues, because if you can raise the bar high enough, we get rid of the high intent, low capability adversaries.

You’re never going to stop Russia or China from being good enough, but at least they’re rational and we have norms and treaties and mutually assured destruction and economic sanctions and whatnot. I’m more concerned about the people that lay outside the control or the reach of deterrence. What we want to do is get to that 80/20 rule or the balance point where the really reasonable stuff, like no known vulnerabilities and make your goods patchable, at least equip us to shield ourselves against the whims and will of these more extreme adversaries. We don’t have to boil the ocean, just raise the tide line enough.
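As an editorial illustration of the SQL injection point above (not something discussed in the interview), one widely used fix is the parameterized query, where user input is bound as data instead of being spliced into the SQL text. The sketch below uses Python's standard `sqlite3` module; the `patients` table, the function names, and the payload are hypothetical and exist only for the demonstration.

```python
import sqlite3


def find_patient_unsafe(conn, name):
    # Vulnerable: the input is concatenated into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = "SELECT id, name FROM patients WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_patient(conn, name):
    # Parameterized: the "?" placeholder binds the input as a value,
    # so it is never interpreted as SQL.
    cur = conn.execute("SELECT id, name FROM patients WHERE name = ?", (name,))
    return cur.fetchall()


# Hypothetical in-memory table used only for this demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO patients (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
leaked = find_patient_unsafe(conn, payload)  # the injected OR clause matches every row
safe = find_patient(conn, payload)           # no patient has this literal name
```

With the concatenated query, the payload turns the filter into a condition that is always true and every row comes back. With the bound parameter, the same payload is just an odd-looking name that matches nothing.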

MedSec/St. Jude: Refocusing on the impact on patients

Building on our conversation about health care security, I asked Josh about the recent debacle with MedSec, Muddy Waters, and St. Jude:

Regardless of the veracity of the findings (because the veracity of the findings is in dispute), or whether you think it’s moral to make money off of these things, or whether you think it’s legal or should be legal to short safety-critical industries, if we can separate those three aspects we’ll see that there’s been discussion about who’s to blame here but stunningly little discussion about the effect on patients and on safety. I think it’s hard to argue that the safest thing for the customers is to tell every adversary on the planet [about the vulnerability] before the patients or the doctors who care for those patients or the regulator who regulates the care for those patients has had a chance to get ground triage, form a plan, communicate the plan, and manage expectations so that a thoughtful, unemotional response can be done when the information comes to light. My belief is that the safest outcome will factor all relevant stakeholders, and I have seen almost no press that even factors for the impact on patients.

Hacking the health care security industry

We had a 20-year stalemate with the industries that we bring these disclosure issues to. Let’s try not to be a pointing finger at past failures but a helping hand at future success. I have no interest in finding and fixing one device, one bug in one device for one manufacturer. We need to hack the industry and hack the incentives. We need to fix the whole problem. We’re seeing the tide turn from a very real risk that white hats would be completely criminalized, to a massive embrace that it’s not just a pointing finger at past failure and a researcher of the threat but rather that the researcher is a vitally necessary teammate. In fact the FDA, in their post-market guidance, is strongly advocating for high trust, high collaboration with white hats. In the context of all this sea change, from seeing us as enemies to vitally necessary teammates that help make their customers safer, our stories and advice scare the legal teams and the shareholders and might make researchers once again look like a threat.
