Programming robots with ROS: Adding a software library

How to integrate an existing software library with ROS.

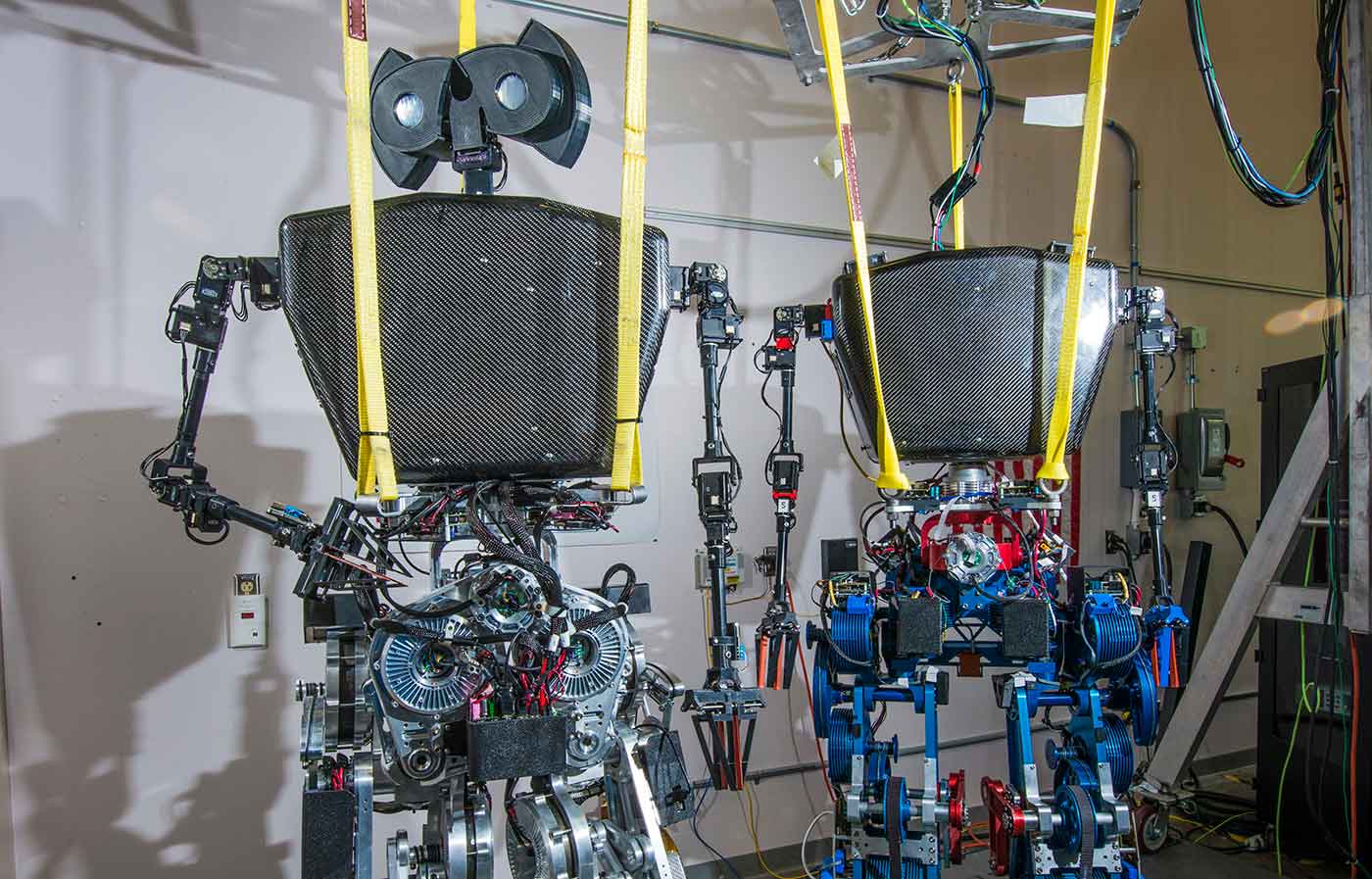

Sandia is developing energy-efficient actuation and drive train technologies to improve the charge life of legged robots. (source: Energy.gov on Flickr)

Sandia is developing energy-efficient actuation and drive train technologies to improve the charge life of legged robots. (source: Energy.gov on Flickr)

Adding a Software Library

A common step in building a robotics application is adding an existing software

library that provides an important capability. Depending on your application,

you might want to give your robot the ability to recognize certain objects,

or detect people, or (as we’ll do in this chapter) speak. There are many

good libraries (many of them open source!) out in the world implementing

such algorithms that you could use on your robot. Whenever possible, we

recommend that you try the existing libraries, especially the ones with

strong reputations for reliability and support. While it’s always tempting

to build your own, and while in some cases you may eventually end up doing so,

you’ll get going more quickly—and learn more about what exactly you need—by starting with an existing system that does most of what you want.

Many libraries that are relevant to robotics have already been

integrated with ROS, such as OpenCV, PCL, and

MoveIt. These

libraries, and the code that makes them easy to use in a ROS-based

robot, form a vital part of the overall ROS ecosystem. Much of the

value in using ROS is having ready access to the right tools for the

job, especially libraries of useful algorithms. Still, you’ll probably

find some library that you need but that hasn’t yet been integrated.

In this chapter, we’ll discuss how to integrate an existing software

library (whether it’s one that you wrote or a third-party package) with

ROS. Between the content of this chapter and the examples provided by the

many ROS-connected libraries already available, you’ll be ready to tackle

the next integration project on your own.

Make Your Robot Talk: pyttsx

From Robbie the Robot to C3PO, we have come to expect our robots to talk

with us. While (at the time of writing) there are still some fundamental

challenges to overcome on the path to creating truly conversational

machines, giving your robot the ability to speak is easy, fun, and can even

be a handy debugging tool. Consider all those times when the robot is just

sitting there, refusing to move, and you’re wondering why. While ROS

offers great software tools for helping you to understand what’s going on

(not available), to use those tools you need to be looking a

screen, not the robot. What if the robot could talk to you, telling you

what state it’s in, what it’s waiting for, or something else?

Fortunately, following decades of research in speech synthesis, there are

now multiple text-to-speech (TTS) software packages that you can use

off the shelf, as black boxes. In this section, we’ll work with the Python

pyttsx module, which provides a unified way to interact with TTS systems

that are commonly available on various operating systems.

Note

Our goal in this section is to explain the process of integrating with a

library like pyttsx. If you want a ready-to-use ROS node with speech

synthesis capabilities, try the sound_play package.

First, let’s make sure that we have pyttsx installed. On most systems,

sudo pip install pyttsx will do the job; for special cases, consult the pyttsx

documentation. To make sure that it’s working properly, let’s try one of

their example programs:

#!/usr/bin/env python

import pyttsx

engine = pyttsx.init()

engine.say('Sally sells seashells by the seashore.')

engine.say('The quick brown fox jumped over the lazy dog.')

engine.runAndWait()

Save that code to a file and run it:

user@hostname$ python pyttsx_example.py

You should hear the two sentences spoken through your computer’s audio

system. If you have trouble at this step, check your speaker/headphone

connections and volume settings; after that, consult the

pyttsx documentation.

Now we have a working executable program that will speak a couple of

sentences; how should we wrap it up into a general-purpose ROS node? We

need to decide a few things:

-

What type of topic/service/action interface will the node provide?

-

What parameters should be exposed?

-

How will we integrate the ROS event loop with the

pyttsxevent loop?

We start by defining the action interface that we’ll use to interact with

our node.

Action Interface

Because the act of converting text to speech takes time—possibly many

seconds for long sentences—it’s a good candidate for an action

server (see not available). That way, we can send goals (what we

want to say), get notification when they’ve been said, and even cancel

a sentence that is in progress.

Let’s decide on the message type that will be received as a goal by our

pyttsx node. As always, we should first consider using an existing

message, especially if it’s already being used by a similar node. In this

case, the most similar example is the sound_play/soundplay_node.py node,

which subscribes to messages of type

sound_play/SoundRequest.

But that message has a surprisingly large number of fields and flags. The

complexity of the message comes from the fact that the

sound_play/soundplay_node.py node does more than just text-to-speech (and

that it’s somewhat specific to the PR2 robot). We could use that message

type, but it’s overkill for our application.

So, we’ll design our own goal message type. We know that we need it to

contain a string field that will be the sentence to be spoken. Let’s

start with that, then add more fields later as needed. Similarly, we can

leave the feedback and result empty, because this node won’t have that much

status to report. Example 1-1 shows the action definition, also available in the

action directory of the basics package (refer back to not available for

help with .action file syntax).

Example 1-1. Talk.action

# The sentence to be spoken string sentence --- # No result content needed --- # No feedback content needed

With the action interface settled, we need to decide what kinds of

configuration we should offer.

Parameters

Consulting the pyttsx documentation, we see that we can change various settings, such as the volume and rate of speech, and the

voice that is used. Those settings are all good candidates for parameters,

which the user can set when launching the node (see not available).

Let’s start by exposing the volume and rate of speech, because we can

reasonably expect that a user might want to modify them. That’s what

parameters are for: the knobs that you expect users will want to be able to adjust easily

and/or frequently, without modifying any code. For each parameter, we need

to decide its data type and its default value (i.e., what we will do if the

user doesn’t set a value). In our case, the easiest thing is to just mirror

how the volume and rate parameters are used in the underlying pyttsx library:

-

volume(float32) -

Floating point volume in the range of 0.0 to 1.0

inclusive. Defaults to 1.0. -

rate(int32) -

Integer speech rate in words per minute. Defaults to

200 words per minute.

We should also add a parameter to control whether the node will preempt a

sentence that is currently being spoken when a new sentence is received.

Interrupting the speaker might not be the nicest thing from a

user interaction point of view, but it’s a good capability to have, and we

want the developer to have control over that behavior. Our new parameter

is:

-

preempt(bool) -

Whether to preempt in-progress speech in response to a new goal. Defaults to false.

Now we know what the external interface to our node will look like, both

for control (action server) and configuration (parameters). Next we will

determine how to design the internal structure of the node to bridge between

the pyttsx library and the rospy library.

Event Loops

A common issue when integrating an existing software library into a ROS

node is how to manage event loops. Often, the library will have its own

way to manage execution and may even want you to give up control of your

main() function. Every situation will be a little different, but it’s often the

case that you’ll need to separate the library’s event loop into its own

thread. That’s usually easy enough, but it’s also important to ensure that

the event loop can be properly and safely stopped at the right time.

For the pyttsx node, we’ll create a separate thread for its event loop,

and we’ll structure that thread in such a way that we can reliably shut it

down. Here’s the code for that thread:

def loop(self):

self.engine.startLoop(False)

while not rospy.is_shutdown():

self.engine.iterate()

time.sleep(0.1)

self.engine.endLoop()

In this thread, we check whether it’s time to shut down in between repeated

calls to the library’s iterate() function, which causes the event loop to

turn over once, processing the next event. We could instead call the

library’s startLoop() function with the argument True, which enters an

internal processing loop, but then we would need to have the right

machinery in another thread to call endLoop() at the right time.

While the details of this interaction are specific to the pyttsx library,

the underlying characteristics are shared by many libraries. For example,

it’s common to see both the “endless loop” call (startLoop(True) in the

case of pyttsx, or ros::spin() in the case of roscpp) and the “do one

loop” call (iterate() in the case of pyttsx, or ros::spinOnce() in

the case of roscpp). The right way to use the library’s event mechanisms

will depend on how they work and your requirements.

Having decided on the action interface, parameters, and event loop

structure, we’re ready to write our pyttsx action server node.

The Speech Server

Example 1-2 shows the code for a full speech synthesis node. Don’t worry

if it looks intimidating; we’re going to step through each part of the program.

Example 1-2. pyttsx_server.py

#! /usr/bin/env python

import rospy

import threading, time, pyttsx

import actionlib

from basics.msg import TalkAction, TalkGoal, TalkResult

class TalkNode():

def __init__(self, node_name, action_name):

rospy.init_node(node_name)

self.server = actionlib.SimpleActionServer(action_name, TalkAction,

self.do_talk, False)

self.engine = pyttsx.init()

self.engine_thread = threading.Thread(target=self.loop)

self.engine_thread.start()

self.engine.setProperty('volume', rospy.get_param('~volume', 1.0))

self.engine.setProperty('rate', rospy.get_param('~rate', 200.0))

self.preempt = rospy.get_param('~preempt', False)

self.server.start()

def loop(self):

self.engine.startLoop(False)

while not rospy.is_shutdown():

self.engine.iterate()

time.sleep(0.1)

self.engine.endLoop()

def do_talk(self, goal):

self.engine.say(goal.sentence)

while self.engine.isBusy():

if self.preempt and self.server.is_preempt_requested():

self.engine.stop()

while self.engine.isBusy():

time.sleep(0.1)

self.server.set_preempted(TalkResult(), "Talk preempted")

return

time.sleep(0.1)

self.server.set_succeeded(TalkResult(), "Talk completed successfully")

talker = TalkNode('speaker', 'speak')

rospy.spin()

Let’s look at the code piece by piece. First we do some standard imports,

including the Talk action message types that we’ll need and the pyttsx

module. We also import the standard threading module, which we’ll need

to manage the event loop thread:

import rospy import threading, time, pyttsx import actionlib from basics.msg import TalkAction, TalkGoal, TalkResult

Next we create a class, TalkNode, which will make it easier (or at least

cleaner) to store some state about the node, including the speech engine.

In the constructor, we initialize the node, create the action server,

initialize the speech engine, then create and start the thread that will

run the event loop:

class TalkNode():

def __init__(self, node_name, action_name):

rospy.init_node(node_name)

self.server = actionlib.SimpleActionServer(action_name, TalkAction,

self.do_talk, False)

self.engine = pyttsx.init()

self.engine_thread = threading.Thread(target=self.loop)

self.engine_thread.start()

Now it’s time to handle parameters, then start the action server. The

volume and rate parameters get passed directly to the library; we’ll

keep the preempt parameter for ourselves:

self.engine.setProperty('volume', rospy.get_param('~volume', 1.0))

self.engine.setProperty('rate', rospy.get_param('~rate', 200.0))

self.preempt = rospy.get_param('~preempt', False)

self.server.start()

Tip

A leading tilde character in a parameter name, such as ~volume, indicates that the parameter is private to the node, which means that it will be stored in and retrieved from the node’s namespace, as opposed to its parent namespace (which is the default). It is good practice to keep parameters local to the node using them whenever possible. If our node is named speaker, then the volume parameter will be stored in the parameter server as /speaker/volume (unless the node is itself pushed down into a namespace, in which case the parameter name would be further

qualified).

We already went over the code for the loop() function that runs in a

separate thread. Let’s look at the code for the goal callback,

do_talk(). On receipt of a new goal, which is a sentence, we pass the

sentence to the speech engine:

def do_talk(self, goal):

self.engine.say(goal.sentence)

Having asked the speech engine to say the sentence, we need to monitor it

for completion. Also, if preempt was set, we need to check for a

preemption request. If the current goal is to be preempted, then we call

stop() on the engine, followed by a second loop to wait for confirmation

that it’s stopped, and finally a report back to clients that the preemption

was accomplished. Otherwise, when the speech engine has finished saying

the sentence, we report that success:

while self.engine.isBusy():

if self.preempt and self.server.is_preempt_requested():

self.engine.stop()

while self.engine.isBusy():

time.sleep(0.1)

self.server.set_preempted(TalkResult(), "Talk preempted")

return

time.sleep(0.1)

self.server.set_succeeded(TalkResult(), "Talk completed successfully")

Now that we have an action server that will accept commands to make the

robot talk, we need to write an action client that will exercise it.

The Speech Client

A ROS node that activates the speech server is straightforward to write.

Example 1-3 shows the code for a simple client program that tells the

server to say “hello world” a few times.

Example 1-3. pyttsx_client.py

#! /usr/bin/env python

import rospy

import actionlib

from basics.msg import TalkAction, TalkGoal, TalkResult

rospy.init_node('speaker_client')

client = actionlib.SimpleActionClient('speak', TalkAction)

client.wait_for_server()

goal = TalkGoal()

goal.sentence = "hello world, hello world, hello world, hello world"

client.send_goal(goal)

client.wait_for_result()

print('[Result] State: %d'%(client.get_state()))

print('[Result] Status: %s'%(client.get_goal_status_text()))

In this program, following the usual initialization, we create an action

client of the appropriate type, send a sentence as a goal, then wait for

completion. That’s the beauty of using an action server: we’ve wrapped up

the non trivial behavior of synthesizing speech in an interface where we can

just send it a string of words, then wait to be told that it’s done executing.

We’ve written the code; now it’s time to test it.

Checking That Everything Works as Expected

Let’s verify that our speech server and client work as intended. Open a

new terminal, and start up roscore. In another terminal, start the server:

user@hostname$ rosrun basics pyttsx_server.py

In a third terminal, start the client:

user@hostname$ rosrun basics pyttsx_client.py

You should hear the words “hello world” repeated a few times. Let’s try

out those parameters. Stop the server, then run it again with a lower

volume setting:

user@hostname$ rosrun basics pyttsx_server.py _volume:=0.5

Now run the client again, and you should hear the same words, but quieter.

You can adjust the rate of speech in the same way. You can also

experiment with the effect of the preempt parameter: try running the

server with _preempt:=true, then run two instances of the client, each in

a separate terminal. You should hear the speech begin on behalf of the

first client, then be interrupted and start again on behalf of the second

client (the effect will be more noticeable if you modify the second client

to send a different string).

Summary

In this chapter, we discussed how to integrate an existing software library

into a ROS system, which is often called for when building a robotics

application. We worked with the relatively simple example of a

text-to-speech system that has just one kind of input (the text to be

spoken), but the basic elements apply equally well to other libraries:

decide on the appropriate data types, and develop an interface (in this case

an action interface); decide on the parameters that will be

accepted; and decide how to integrate the library’s event loop with your

own.

Even this relatively simple example resulted in a useful node that could be

deployed straight away on a robot (as long as the robot has speakers). The

node could, of course, be improved and extended in a number of ways, from

exposing more configuration of the speech engine (e.g., which voice is

being used), to delivering detailed feedback to clients (e.g., notification

of each word having been said). There’s almost always more that could

be exposed, and the art is in deciding what to leave out.

In the past few chapters, we’ve presented examples and discussed patterns for adding devices, robots, and capabilities to ROS. The ease with which the platform can be stretched and extended to cover new use cases is a key feature of ROS—but each new feature brings complexity, and writing good robot software is a challenging task to begin with. With that challenge in mind, the next few chapters will introduce some important tools and techniques that will help you to become an efficient and effective ROS developer.