Early aeronautics, 1818. (source: Library of Congress on Wikimedia Commons)

After building an image-classifying robot, the obvious next step was to make a version that can fly. I decided to construct an autonomous drone that could recognize faces and respond to voice commands.

Choosing a prebuilt drone

One of the hardest parts about hacking drones is getting started. I got my feet wet first by building a drone from parts, but like pretty much all of my DIY projects, building from scratch ended up costing me way more than buying a prebuilt version—and frankly, my homemade drone never flew quite right. It’s definitely much easier and cheaper to buy than to build.

Most of the drone manufacturers claim to offer APIs, but there’s not an obvious winner in terms of a hobbyist ecosystem. Most of the drones with usable-looking APIs cost more than $1,000—a huge barrier to entry.

But after some research, I found the Parrot AR Drone 2.0 (see Figure 1), which I think is a clear choice for a fun, low-end, hackable drone. You can buy one for $200 new, but so many people buy drones and never end up using them that a secondhand drone is a good option and available widely on eBay for $130 or less.

The Parrot AR drone doesn’t fly quite as stably as the much more expensive (about $550) new Parrot Bebop 2 drone, but the Parrot AR comes with an excellent Node.js client library called node-ar-drone that is perfect for building on.

Another advantage: the Parrot AR drone is very hard to break. While testing the autonomous code, I crashed it repeatedly into walls, furniture, house plants, and guests, and it still flies great.

The worst thing about hacking on drones compared to hacking on terrestrial robots is the short battery life. The batteries take hours to charge and then last for about 10 minutes of flying. I recommend buying two additional batteries and cycling through them while testing.

Programming my drone

JavaScript turns out to be a great language for controlling drones because it is so inherently event driven. And trust me, while flying a drone, there will be a lot of asynchronous events. Node isn’t a platform I’ve spent a lot of time with, but I walked away from this project super impressed with it. The last time I seriously programmed robots, I used C, where threading and exception handling are painful enough that there is a tendency to avoid them. I hope someone builds JavaScript wrappers for other drone platforms because the language makes it easy and fun to deal with our nondeterministic world.
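To make the event-driven point concrete, here is a minimal sketch that uses Node's built-in EventEmitter as a stand-in for the drone client. The `battery` event name, the 20 percent threshold, and the `watchBattery` helper are assumptions made for illustration; they are not taken from the node-ar-drone API.

```javascript
const { EventEmitter } = require('events');

// Hypothetical helper: land once when the battery drops below a threshold.
// `drone` is anything that emits 'battery' events and has a land() method.
function watchBattery(drone, minPercent) {
  let landed = false;
  drone.on('battery', function (percent) {
    if (!landed && percent < minPercent) {
      landed = true;
      drone.land();
    }
  });
}

// Stand-in for a real client so the sketch runs without hardware.
const fakeDrone = new EventEmitter();
fakeDrone.landCalls = 0;
fakeDrone.land = function () { this.landCalls += 1; };

watchBattery(fakeDrone, 20);
[55, 30, 19, 12].forEach(function (p) { fakeDrone.emit('battery', p); });
console.log('land() calls:', fakeDrone.landCalls); // lands once, at 19
```

The handler stays dormant until a reading arrives, so the rest of the program can keep steering or streaming video in the meantime.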

Architecture

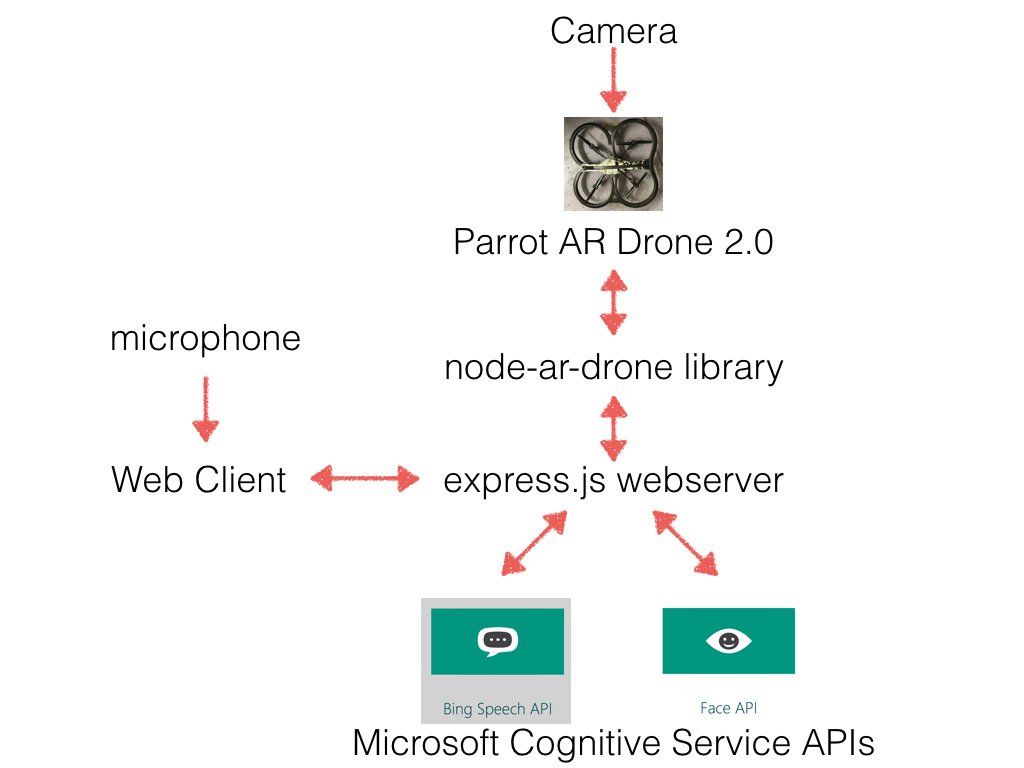

I decided to run the logic on my laptop and do the machine learning in the cloud. This setup led to lower latency than running a neural network directly on Raspberry Pi hardware, and I think this architecture makes sense for hobby drone projects at the moment.

Microsoft, Google, IBM, and Amazon all have fast, inexpensive cloud machine learning APIs. In the end, I used Microsoft’s Cognitive Services APIs for this project because they were the only ones that offered custom facial recognition.

See Figure 2 for a diagram illustrating the architecture of the drone:

Getting started

By default, the Parrot AR Drone 2.0 serves a wireless network that clients connect to. This is incredibly annoying for hacking. Every time you want to try something, you need to disconnect from your network and get on the drone’s network. Luckily, there is a super useful project called ardrone-wpa2 that has a script to hack your drone to join your own WiFi network.

It’s fun to Telnet into your drone and poke around. The Parrot runs a stripped down version of Linux. When was the last time you connected to something with Telnet? Here’s an example of how you would open a terminal and log into the drone’s computer directly.

% script/connect "The Optics Lab" -p "particleorwave" -a 192.168.0.1 -d 192.168.7.43

% telnet 192.168.7.43

Flying from the command line

After installing the node library, it’s fun to make a node.js REPL (Read-Evaluate-Print-Loop) and steer your drone:

var arDrone = require('ar-drone');

var client = arDrone.createClient({ip: '192.168.7.43'});

client.createRepl();

drone> takeoff()

true

drone> client.animate('yawDance', 1.0)

If you are actually following along, by now you’ve definitely crashed your drone, at least a few times. I super-glued the safety hull back together about a thousand times before it disintegrated and I had to buy a new one. I hesitate to mention this, but the Parrot AR actually flies a lot better without the safety hull. Flying without the hull is much more dangerous, though: when the drone bumps into something, the propellers can snap, and they will leave marks in furniture.

Flying from a webpage

It’s satisfying and easy to build a web-based interface to the drone (see Figure 3). The express.js framework makes it simple to build a nice little web server:

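var express = require('express');

var path = require('path');

var app = express();

// `client` is the ar-drone client created in the previous section.

// Serve the control page.

app.get('/', function (req, res) {

  res.sendFile(path.join(__dirname, 'index.html'));

});

// Each route sends a command to the drone, then answers so the request doesn't hang.

app.get('/land', function (req, res) {

  client.land();

  res.end();

});

app.get('/takeoff', function (req, res) {

  client.takeoff();

  res.end();

});

app.listen(3000, function () {

  console.log('Listening on port 3000');

});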

I set up a function to make AJAX requests using buttons:

<html>

<script language='javascript'>

function call(name) {

var xhr = new XMLHttpRequest();

xhr.open('GET', name, true);

xhr.send();

}

</script>

<body>

<a onclick="call('takeoff');">Takeoff</a>

<a onclick="call('land');">Land</a>

</body>

</html>
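One thing the routes above leave open is what happens when a request names something other than `takeoff` or `land`. Here is a minimal sketch of a whitelist dispatcher. The `runCommand` helper, the `ALLOWED` list, and the stub client are hypothetical and only stand in for the real drone client.

```javascript
// Only these client methods may be triggered from the web page.
const ALLOWED = ['takeoff', 'land', 'stop'];

// Hypothetical helper: returns an HTTP status code for the requested command.
function runCommand(client, name) {
  if (ALLOWED.indexOf(name) === -1) {
    return 404; // unknown or disallowed command
  }
  client[name]();
  return 200;
}

// Stub client that records calls, so the sketch runs without a drone.
const calls = [];
const stubClient = {
  takeoff: function () { calls.push('takeoff'); },
  land: function () { calls.push('land'); },
  stop: function () { calls.push('stop'); }
};

console.log(runCommand(stubClient, 'takeoff'));      // 200
console.log(runCommand(stubClient, 'selfDestruct')); // 404
```

With a helper like this, a single parameterized Express route could replace the per-command routes, passing the route parameter to `runCommand` and returning its status code.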

Streaming video from the drone

I found the best way to send a feed from the drone’s camera was to open up a connection and send a continuous stream of PNGs from my web server to my web page. My web server continuously pulls PNGs from the drone’s camera using the AR drone library.

var pngStream = client.getPngStream();

pngStream

  .on('error', console.log)

  .on('data', function(pngBuffer) {

    sendPng(pngBuffer);

  });

// `res` is the open HTTP response that the web page is reading the stream from.

function sendPng(buffer) {

  res.write('--daboundary\nContent-Type: image/png\nContent-length: ' + buffer.length + '\n\n');

  res.write(buffer);

}
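The boundary string in the snippet above suggests a multipart response in which each PNG replaces the previous one in the browser. That reading is my assumption, as is the `multipart/x-mixed-replace` response header shown here. A minimal sketch of the framing, with hypothetical helper names, using CRLF line endings as is conventional for HTTP headers:

```javascript
const BOUNDARY = 'daboundary'; // same boundary string as the snippet above

// Header the HTTP response would need so the browser swaps in each new frame.
function streamHeaders() {
  return { 'Content-Type': 'multipart/x-mixed-replace; boundary=' + BOUNDARY };
}

// Wraps one PNG buffer as a single multipart part.
function framePart(png) {
  const head = '--' + BOUNDARY + '\r\n' +
    'Content-Type: image/png\r\n' +
    'Content-Length: ' + png.length + '\r\n\r\n';
  return Buffer.concat([
    Buffer.from(head, 'ascii'),
    png,
    Buffer.from('\r\n', 'ascii')
  ]);
}

// The 8-byte PNG signature, used here as a tiny placeholder frame.
const fakePng = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);
const part = framePart(fakePng);
console.log(streamHeaders()['Content-Type']);
console.log(part.length + ' bytes in one part');
```

On the page, an ordinary image element pointed at the streaming route would be enough to display the feed in browsers that support this content type.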

Running face recognition on the drone images

The Azure Face API is powerful and simple to use. You can upload pictures of your friends and it will identify them. It will also guess age and gender, both of which I found to be surprisingly accurate. The latency is around 200 milliseconds, and it costs $1.50 per 1,000 predictions, which feels completely reasonable for this application. See below for my code that sends an image and does face recognition.

var oxford = require('project-oxford'),

oxc = new oxford.Client(CLIENT_KEY);

loadFaces = function() {

chris_url = "https://media.licdn.com/mpr/mpr/shrinknp_400_400/AAEAAQAAAAAAAALyAAAAJGMyNmIzNWM0LTA5MTYtNDU4Mi05YjExLTgyMzVlMTZjYjEwYw.jpg";

lukas_url = "https://media.licdn.com/mpr/mpr/shrinknp_400_400/p/3/000/058/147/34969d0.jpg";

oxc.face.faceList.create('myFaces');

oxc.face.faceList.addFace('myFaces', {url: chris_url, name: 'Chris'});

oxc.face.faceList.addFace('myFaces', {url: lukas_url, name: 'Lukas'});

}

oxc.face.detect({

path: 'camera.png',

analyzesAge: true,

analyzesGender: true

}).then(function (response) {

if (response.length > 0) {

drawFaces(response, filename)

}

});
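Given the latency and price quoted above, it makes sense to sample frames rather than send every one to the cloud. This is a minimal sketch of a frame sampler and a cost estimate. The helper names and the one-frame-per-second rate are assumptions for illustration; only the $1.50 per 1,000 predictions figure comes from the article.

```javascript
// Hypothetical helper: lets a frame through at most once per `intervalMs`.
function makeFrameSampler(intervalMs) {
  let lastSent = -Infinity;
  return function shouldSend(nowMs) {
    if (nowMs - lastSent >= intervalMs) {
      lastSent = nowMs;
      return true;
    }
    return false;
  };
}

// Cost of one flight at $1.50 per 1,000 predictions.
function flightCostDollars(flightSeconds, framesPerSecond) {
  const calls = flightSeconds * framesPerSecond;
  return calls * 1.5 / 1000;
}

const shouldSend = makeFrameSampler(1000); // one frame per second
const sent = [0, 200, 900, 1000, 1500, 2100].filter(function (t) {
  return shouldSend(t);
});
console.log(sent);                      // [ 0, 1000, 2100 ]
console.log(flightCostDollars(600, 1)); // 0.9
```

At one frame per second, a full 10-minute battery costs about 90 cents in predictions, which is consistent with the "completely reasonable" verdict above.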

I used the excellent ImageMagick library to annotate the faces in my PNGs. There are a lot of possible extensions at this point—for example, there is an emotion API that can determine the emotion of faces.

Running speech recognition to drive the drone

The trickiest part about doing speech recognition was not the speech recognition itself, but streaming audio from a webpage to my local server in the format Microsoft’s Speech API wants, so that ends up being the bulk of the code. Once you’ve got the audio saved with one channel and the right sample frequency, the API works great and is extremely easy to use. It costs $4 per 1,000 requests, so for hobby applications, it’s basically free.

RecordRTC is a great library, and it’s a good starting point for doing client-side web audio recording. On the server side, we can add code to save the uploaded audio file:

app.post('/audio', function(req, res) {

var form = new formidable.IncomingForm();

// specify that we want to allow the user to upload multiple files in a single request

form.multiples = true;

form.uploadDir = path.join(__dirname, '/uploads');

form.on('file', function(field, file) {

  // store the upload under a fixed name, then transcribe it once the rename has finished

  var target = path.join(form.uploadDir, 'audio.wav');

  fs.rename(file.path, target, function(err) {

    if (err) {

      console.log('An error has occurred: \n' + err);

      return;

    }

    speech.parseWav(target, function(text) {

      console.log(text);

      controlDrone(text);

    });

  });

});

// log any errors that occur

form.on('error', function(err) {

  console.log('An error has occurred: \n' + err);

});

// once all the files have been uploaded, send a response to the client

form.on('end', function() {

  res.end('success');

});

// parse the incoming request containing the form data

form.parse(req);

});
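The handler above passes the recognized text to `controlDrone`, which the article does not show. Here is one hypothetical shape for it, as a sketch only. The phrase table is an assumption, and unlike the call above, this version takes the drone client as an explicit argument so it can be tried with a stub.

```javascript
// Hypothetical phrase-to-command table; the spoken phrases are assumptions.
const COMMANDS = [
  { phrase: 'take off', method: 'takeoff' },
  { phrase: 'takeoff', method: 'takeoff' },
  { phrase: 'land', method: 'land' },
  { phrase: 'stop', method: 'stop' }
];

// Returns the client method name for a transcript, or null if none matches.
function parseCommand(text) {
  const normalized = String(text).toLowerCase();
  for (const entry of COMMANDS) {
    if (normalized.indexOf(entry.phrase) !== -1) {
      return entry.method;
    }
  }
  return null;
}

// One possible controlDrone: look up the method and call it on the client.
function controlDrone(client, text) {
  const method = parseCommand(text);
  if (method !== null) {
    client[method]();
  }
  return method;
}

console.log(parseCommand('Please take off now')); // takeoff
console.log(parseCommand('What a nice day'));     // null
```

Substring matching is crude (it would also fire on a word like "island"), so a real version would probably match whole words or a small grammar.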

I used the FFmpeg utility to downsample the audio and combine it into one channel for uploading to Microsoft:

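var exec = require('child_process').exec;

exports.parseWav = function(wavPath, callback) {

  // downsample to 8 kHz, mix down to one channel, and overwrite tmp.wav if it exists

  var cmd = 'ffmpeg -i ' + wavPath + ' -ar 8000 -ac 1 -y tmp.wav';

  exec(cmd, function(error, stdout, stderr) {

    console.log(stderr); // ffmpeg writes its log output to stderr

    // upload only after ffmpeg has finished writing tmp.wav

    postToOxford(callback);

  });

};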
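Because the command above is assembled as a shell string, a file path containing spaces or shell characters would break it. A minimal alternative sketch, assuming the same ffmpeg options, passes the arguments as an array through `execFile` so no shell is involved. The `ffmpegArgs` and `downsample` names are hypothetical.

```javascript
const { execFile } = require('child_process');

// Builds the same ffmpeg options as the command above, as an argument array.
function ffmpegArgs(inputPath, outputPath) {
  return ['-i', inputPath, '-ar', '8000', '-ac', '1', '-y', outputPath];
}

// Hypothetical wrapper: runs ffmpeg without a shell, then reports completion.
function downsample(inputPath, outputPath, callback) {
  execFile('ffmpeg', ffmpegArgs(inputPath, outputPath), function (error) {
    callback(error, outputPath);
  });
}

// Shown as a joined string only for inspection; execFile receives the array.
console.log(ffmpegArgs('uploads/my audio.wav', 'tmp.wav').join(' '));
```

The callback fires only after ffmpeg exits, which is the point at which the converted file is safe to upload.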

While we’re at it, we might as well use Microsoft’s text-to-speech API so the drone can talk back to us!

Autonomous search paths

I used the ardrone-autonomy library to map out autonomous search paths for my drone. After crashing my drone into the furniture and houseplants one too many times in my living room, my wife nicely suggested I move my project to my garage, where there is less to break, but there isn’t much room to maneuver (see Figure 3).

When I get a bigger lab space, I’ll work more on smart searching algorithms, but for now I’ll just have my drone take off and rotate, looking for my friends and enemies:

var autonomy = require('ardrone-autonomy');

var mission = autonomy.createMission({ip: '10.0.1.3', frameRate: 1, imageSize: '640:320'});

console.log("Here we go!")

mission.takeoff()

.zero() // Sets the current state as the reference

.altitude(1)

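.taskSync(function() { console.log("Checkpoint 1"); })

.go({x: 0, y: 0, z: 1, yaw: 90})

.taskSync(function() { console.log("Checkpoint 2"); })

.hover(1000)

.go({x: 0, y: 0, z: 1, yaw: 180})

.taskSync(function() { console.log("Checkpoint 3"); })

.hover(1000)

.go({x: 0, y: 0, z: 1, yaw: 270})

.taskSync(function() { console.log("Checkpoint 4"); })

.hover(1000)

.go({x: 0, y: 0, z: 1, yaw: 0})

.land();

// The steps above are only queued; run() starts the mission.

mission.run(function(err, result) {

if (err) {

console.trace("Mission failed: %s", err.message);

mission.client().land();

}

});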
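The rotate-in-place pattern above can also be generated rather than written out by hand. This is a minimal sketch, assuming a hypothetical `scanWaypoints` helper; it only produces the coordinate objects and does not talk to the drone.

```javascript
// Hypothetical helper: evenly spaced headings for one full turn in place.
function scanWaypoints(stops, altitude) {
  const waypoints = [];
  for (let i = 1; i <= stops; i++) {
    const yaw = (360 * i / stops) % 360;
    waypoints.push({ x: 0, y: 0, z: altitude, yaw: yaw });
  }
  return waypoints;
}

console.log(scanWaypoints(4, 1).map(function (w) { return w.yaw; }));
// [ 90, 180, 270, 0 ]
```

Each waypoint has the same shape as the objects passed to `go()` above, so a loop could queue a `go` and a `hover` per waypoint, and a larger `stops` value would give the camera more chances to catch a face.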

Putting it all together

Check out this video I took of my drone taking off and searching for my friend Chris:

Conclusion

Once everything is set up and you are controlling the drone through an API and getting the video feed, hacking on drones becomes incredibly fun. With all of the newly available image recognition technology, there are all kinds of possible uses, from surveying floorplans to painting the walls. The Parrot drone wasn’t really designed to fly safely inside a small house like mine, but a more expensive drone might make this a totally realistic application. In the end, drones will become more stable, the price will come down, and the real-world applications will explode.

Microsoft’s Cognitive Services cloud APIs are easy to use and amazingly cheap. At first, I was worried that the drone’s unusually wide-angle camera might affect the face recognition and that the loud drone propeller might interfere with the speech recognition, but overall the performance was much better than I expected. The latency is less of an issue than I was expecting. Doing the computation in the cloud on a live image feed seems like a strange architecture at first, but it will probably be the way of the future for a lot of applications.