Machine learning for procurement analytics

The O’Reilly Podcast: Eliot Knudsen on the business value of prescriptive analytics and machine learning algorithms.

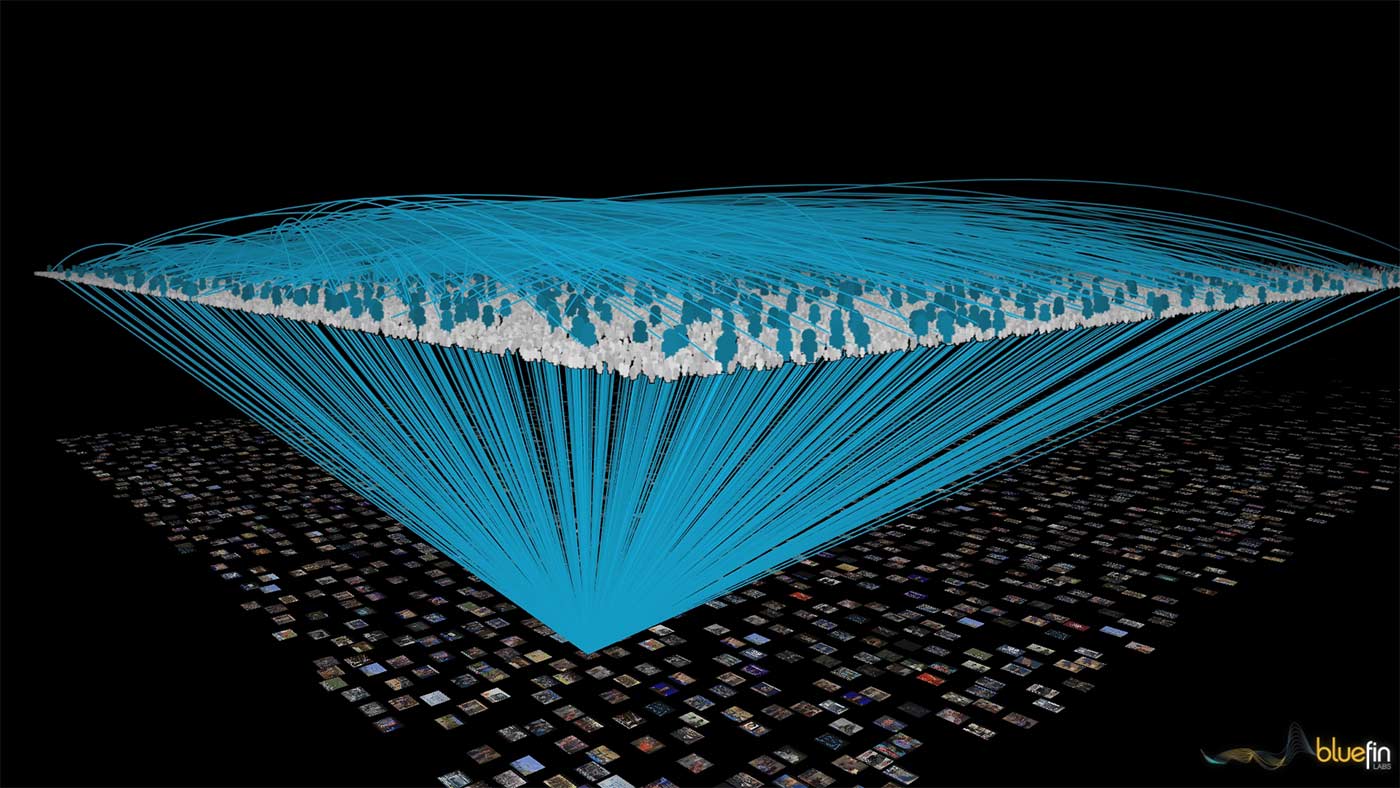

Bluefin Labs - TV Genome Visualization. (source: Bluefin on Wikimedia Commons)

In this episode of the O’Reilly Podcast, O’Reilly’s Ben Lorica sat down with Eliot Knudsen, Field Architect at Tamr. Lorica and Knudsen discuss the role of prescriptive analytics in driving business change, using feedback to train machine learning algorithms, coalescing various sources of business data, and the importance of explaining your algorithms in order to relay their value.

Here are a few highlights from their conversation:

Prescriptive analytics to drive business action

The idea behind prescriptive analytics is that you’re combining forecasting ability with a specific process or change that folks want to drive in their business—whether it’s how they onboard their customers, whether it’s how they negotiate with their suppliers, whether it’s how they move different products or materials through their supply chain. … Being able to change those processes at a really granular level is what makes prescriptive analytics much, much more powerful than just planning or forecasting.

Optimizing feedback to match the speed of analytics

Feedback is one of these fascinating things, where ultimately there are different ways that these systems are tuning and learning. At the lowest level, you’re tuning the parameters in your machine learning algorithm. You’re growing, and based upon data and other heuristics, you’re changing how your algorithms predict and fit themselves to these points. When you’re actually walking through this process in real life, there’s a higher level tuning that is happening as well, which is that your understanding of the data becomes better, your understanding of the biases in the data becomes better, and the human tuning of which algorithms you choose and what bias you introduce to these algorithms changes as well.

The ultimate way that feedback gets incorporated into these different machine learning algorithms is both at a ‘what’s the data that I have?’ level, and then ‘what’s my understanding of the problem?’ Ultimately, the second question (what is my understanding of the problem?) changes as well because every time you run one of these predictions, you can begin to collect feedback and iterate on those machine learning levels.
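The lowest level of tuning described above, adjusting model parameters each time feedback arrives, can be illustrated with a small sketch. It assumes a logistic model updated one example at a time; the feature vector, the learning rate, and the function names `predict` and `update` are hypothetical and are not taken from the conversation or from any Tamr product.

```python
import math

def predict(weights, bias, x):
    """Return the model's probability that example x is a positive case."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def update(weights, bias, x, label, lr=0.5):
    """Apply one gradient step using a single piece of feedback (label is 0 or 1)."""
    error = label - predict(weights, bias, x)
    new_weights = [w + lr * error * xi for w, xi in zip(weights, x)]
    return new_weights, bias + lr * error

# Hypothetical example: the model starts undecided, then a reviewer
# confirms that this example is a positive case (label 1).
weights, bias = [0.0, 0.0], 0.0
example = [1.0, 0.5]
before = predict(weights, bias, example)
weights, bias = update(weights, bias, example, label=1)
after = predict(weights, bias, example)
```

After the update, the prediction for the confirmed example moves toward the reviewer's answer. The higher level of tuning in the passage, deciding which features and algorithms to use, remains a human judgment that a loop like this does not capture.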

Machine learning to coalesce data from many sources

The process of incorporating all of this data together is really where we pack the greatest punch. So, the concept of what we’re doing is, we’re taking this approach to machine learning and we’re applying it specifically to the problem of pulling together many, many different sources of data and putting it into a usable form for different analytical tools. The ability to resolve and coalesce these sources is a big challenge that we are focused on solving.
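To make the idea of resolving and coalescing sources concrete, here is a minimal sketch that groups supplier names from several hypothetical source systems when they look like the same entity. It uses plain string similarity from Python's standard library as a stand-in; it is not the machine learning approach discussed in the episode, and the supplier names, suffix list, and threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

# Assumed list of company suffixes to ignore when comparing names.
SUFFIXES = {"inc", "corp", "corporation", "ltd", "llc", "co"}

def normalize(name):
    """Lowercase, replace punctuation with spaces, and drop company suffixes."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower())
    return " ".join(tok for tok in cleaned.split() if tok not in SUFFIXES)

def similarity(a, b):
    """Similarity in [0, 1] between two normalized supplier names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def coalesce(records, threshold=0.85):
    """Greedily group records whose names resemble a cluster's first member."""
    clusters = []
    for rec in records:
        for cluster in clusters:
            if similarity(rec, cluster[0]) >= threshold:
                cluster.append(rec)
                break
        else:
            clusters.append([rec])
    return clusters

# Hypothetical supplier names as they might appear in different systems.
records = ["Acme Corp.", "ACME Corporation", "Globex LLC", "Acme, Inc.", "Globex"]
clusters = coalesce(records)
```

In this sketch the three Acme variants land in one group and the two Globex variants in another. A fixed threshold is the weak point of such a rule, which is one reason to learn the matching decision from data and reviewer feedback instead.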

I think what a lot of folks will tell you when they’re actually pragmatically running these machine learning algorithms is that there are really two things that move the needle. You can either add data points or you can add features, but the algorithms that you’re running on top of these things are fairly generic and fairly swappable, so adding an incremental feature that has a lot of signal in it can be incredibly valuable from a business intelligence and a predictive algorithm perspective. Also, adding that newest data set that has the valuable information, or maybe the specific sample that you need in order to run your analysis, is critical as well.

Communicating and building trust in algorithms

This is getting a little bit technical, but the idea here is that your ability to understand the internal mechanisms of the model that you’re building is the most important thing for any interpretability of an algorithm.

Depending on how much bias you introduce to that algorithm and how you parameterize it, you can do a whole bunch of different things. When I was building algorithms as a data scientist, I would run very complicated clustering algorithms, very complicated support vector machine algorithms that are interpretable to mathematicians but not really anybody else. I think that the idea of building trust with folks about the algorithms that are being used is really critical.

Being able to explain which features are important, which features are being used by algorithms, and how much they’re contributing to the different algorithms is one of these things that is often overlooked when communicating these things out. … The ability to interpret these algorithms and explain them in a concise and simple way is important.
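One simple way to show which features are being used and how much each contributes is to break a linear score into per-feature terms. The sketch below assumes a linear model, where each contribution is the weight multiplied by the feature value; the feature names, weights, and values are hypothetical, and more complex models need other explanation techniques.

```python
def explain(weights, features):
    """Rank features by the size of their contribution to a linear score.

    Returns (name, contribution, share) tuples, where share is the feature's
    fraction of the total absolute contribution.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    total = sum(abs(c) for c in contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [(name, c, abs(c) / total if total else 0.0) for name, c in ranked]

# Hypothetical supplier-risk model: weights and one supplier's feature values.
weights = {"late_deliveries": 2.0, "price_variance": 1.0, "years_as_supplier": -0.5}
supplier = {"late_deliveries": 3.0, "price_variance": 1.0, "years_as_supplier": 6.0}

report = explain(weights, supplier)
for name, contribution, share in report:
    print(f"{name}: contribution {contribution:+.1f} ({share:.0%} of total)")
```

A short ranked list like this gives a non-specialist something concrete to question, for example why late deliveries dominate the score, without requiring them to follow the underlying math.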

This post and podcast are part of a collaboration between O’Reilly and Tamr. See our statement of editorial independence.