Why your next open source project may only be an interface

We are likely to see more open interfaces and metaframeworks emerge, but they have their drawbacks.

Shukhov Tower (source: Sergey Norin on Flickr)

What do deep learning, serverless functions, and stream processing all have in common? Apart from being big trends in computing, the open source projects behind these movements are being built in a new, and perhaps unique, way. In each domain, an API-only open source interface has emerged that must be used alongside one of several separate, supported back ends. The pattern may be a boon to the industry, promising fewer rewrites, easier adoption, performance improvements, and profitable companies. I’ll describe this pattern, offer first-hand accounts of how it emerged, discuss what it means for open source, and explore how we should nurture it.

Typically, a new open source project provides both a new execution technology and an API that users program against. API definition is, in part, a creative activity, which has historically drawn on analogies (like Storm’s spouts and bolts or Kubernetes’ pods) to help users quickly understand how to wield the new thing. The API is specific to a project’s execution engine; together they make a single stand-alone whole. Users read the project’s documentation to install the software, interact with the interface, and benefit from the execution engine.

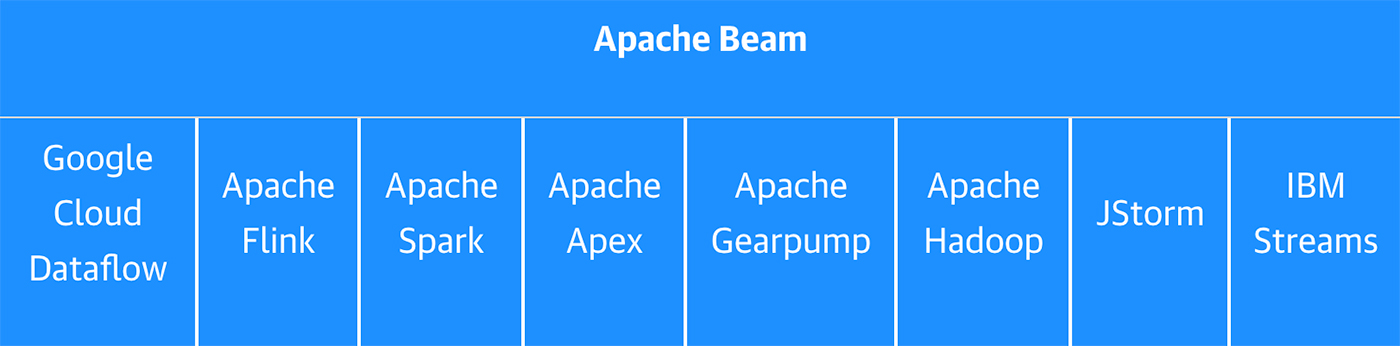

Several important new projects are structured differently. They don’t have an execution engine; instead, they are metaframeworks that provide a common interface to several different execution engines. Keras, the second-most popular deep learning framework, is an example of this trend. As creator François Chollet recently explained, “Keras is meant as an interface rather than as an end-to-end framework.” Similarly, Apache Beam, a large-scale data processing framework, is a self-described “programming model.” What does this mean? What can you do with a programming model on its own? Nothing, really. Both of these projects require external back ends. In the case of Beam, users write pipelines that can execute on multiple “runners,” including six open source systems (five from Apache) and three proprietary vendor systems. Similarly, Keras touts support for TensorFlow, Microsoft’s Cognitive Toolkit (CNTK), Theano, Apache MXNet, and others. Chollet gave a succinct description of this approach in a recent exchange on GitHub: “In fact, we will even expand the multi-back-end aspect of Keras in the future. … Keras is a front end for deep learning, not a front end for TensorFlow.”
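
To make the idea of “an interface rather than an end-to-end framework” concrete, here is a minimal sketch of the pattern in Python. Every name in it (`Pipeline`, `Backend`, `SerialBackend`, `FusedBackend`, `BACKENDS`, `user_program`) is hypothetical and illustrative, not Keras’ or Beam’s real API. In the real projects the back end is chosen by configuration: multi-back-end Keras read the `KERAS_BACKEND` environment variable or its `keras.json` file, and Beam takes a `--runner` pipeline option.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """What every execution engine must implement to plug into the interface."""

    @abstractmethod
    def run(self, steps, data):
        """Apply a list of element-wise steps to data and return the results."""


class SerialBackend(Backend):
    """Hypothetical engine #1: applies each step to the whole dataset in turn."""

    def run(self, steps, data):
        out = list(data)
        for step in steps:
            out = [step(x) for x in out]
        return out


class FusedBackend(Backend):
    """Hypothetical engine #2: fuses all steps and makes a single pass."""

    def run(self, steps, data):
        def fused(x):
            for step in steps:
                x = step(x)
            return x

        return [fused(x) for x in data]


# New engines register here; user code never changes.
BACKENDS = {"serial": SerialBackend, "fused": FusedBackend}


class Pipeline:
    """The open interface: users describe what to compute, not how."""

    def __init__(self, backend="serial"):
        self.backend = BACKENDS[backend]()
        self.steps = []

    def map(self, fn):
        self.steps.append(fn)
        return self  # allow chaining

    def run(self, data):
        return self.backend.run(self.steps, data)


def user_program(backend):
    # Identical user code; only the back-end name differs.
    return Pipeline(backend).map(lambda x: x * 2).map(lambda x: x + 1).run([1, 2, 3])
```

Both `user_program("serial")` and `user_program("fused")` return `[3, 5, 7]`. Because the user program never touches an engine-specific API, adding a third engine means registering one class rather than rewriting callers.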

The similarities don’t end there. Both Beam and Keras were originally created by Googlers at the same time (2015) and in related fields (data processing and machine learning). Yet, it appears the two groups arrived at this model independently. How did this happen, and what does that mean for this model?

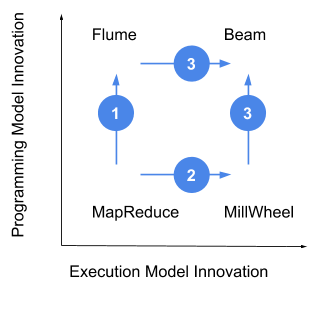

The Beam story

In 2015, I was a product manager at Google, focused on Cloud Dataflow. The Dataflow engineering team’s legendary status dates back to Jeff Dean’s and Sanjay Ghemawat’s famous MapReduce paper in 2004. Like most projects, MapReduce defined a method of execution and a programming model to take advantage of it. While the execution model is still state of the art for batch processing, the programming model was not pleasant to work with, so Google soon developed a much easier, abstracted programming model called Flume (step 1, Figure 1). Meanwhile, demand for lower-latency processing resulted in a new project, with the usual execution model and programming model, called MillWheel (step 2). Interestingly, these teams came together around the idea that Flume, the abstracted programming model for batch, could with some extensions also serve as a programming model for streaming (step 3). This key insight is at the heart of the Beam programming model, which at the time was called the Dataflow Model.
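
The insight that one programming model can describe both batch and streaming can be illustrated with a toy sketch. The counting logic below is written once and then driven by a finite list (batch) and by an endless generator (streaming). It is a simplification under stated assumptions: events arrive in timestamp order and late data is ignored, whereas the real Dataflow Model handles out-of-order data with windows, watermarks, and triggers. The function names are hypothetical, not Beam’s API.

```python
from collections import Counter
from itertools import count, islice


def windowed_counts(events, size):
    """Core logic, written once: running count of events per fixed window.

    `events` is any iterable of (timestamp, value) pairs, so a finished list
    (bounded) and an endless generator (unbounded) both work.
    Assumes in-order timestamps; no watermarks or late-data handling.
    """
    counts = Counter()
    for timestamp, _value in events:
        window_start = timestamp - timestamp % size  # tumbling window of `size` units
        counts[window_start] += 1
        yield window_start, counts[window_start]


def run_batch(events, size):
    """Bounded input: drain everything and keep the final count per window."""
    final = {}
    for window_start, running in windowed_counts(events, size):
        final[window_start] = running
    return final


def run_streaming(events, size, max_updates):
    """Unbounded input: take early, incremental updates instead of waiting for an end."""
    return list(islice(windowed_counts(events, size), max_updates))


if __name__ == "__main__":
    print(run_batch([(0, "a"), (3, "b"), (5, "c"), (12, "d")], size=10))
    endless = ((t, "tick") for t in count(0, 4))  # a stream that never ends
    print(run_streaming(endless, size=10, max_updates=5))
```

The batch run prints `{0: 3, 10: 1}` and the streaming run prints `[(0, 1), (0, 2), (0, 3), (10, 1), (10, 2)]`: the same transform, with only the driver differing between bounded and unbounded input.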

From the story of Beam’s origins emerge a set of principles:

- There are two degrees of innovation: programming model and execution model. Historically, we have assumed they need to be coupled, but open interfaces challenge that assumption.

- By decoupling the code through an abstraction, we also decouple the contributor community into interface designers and execution engine creators.

- Through abstraction and decoupling (technically and organizationally), the speed at which the community can absorb innovation accelerates.

Consider these principles in the case of Keras. Despite TensorFlow’s popularity, users quickly realized that its API is not for everyday use. Keras’ easy abstractions, which already had a strong following among Theano users, made it the preferred API for TensorFlow. Since then, Amazon and Microsoft have added MXNet and CNTK, respectively, as back ends. This means that developers who choose the independent open interface Keras can now execute on all four major frameworks without any rewrite. Organizations are consuming the latest technology from all the brightest groups. New projects, like PlaidML, can quickly find an audience; a Keras developer can easily try out PlaidML without learning a new interface.

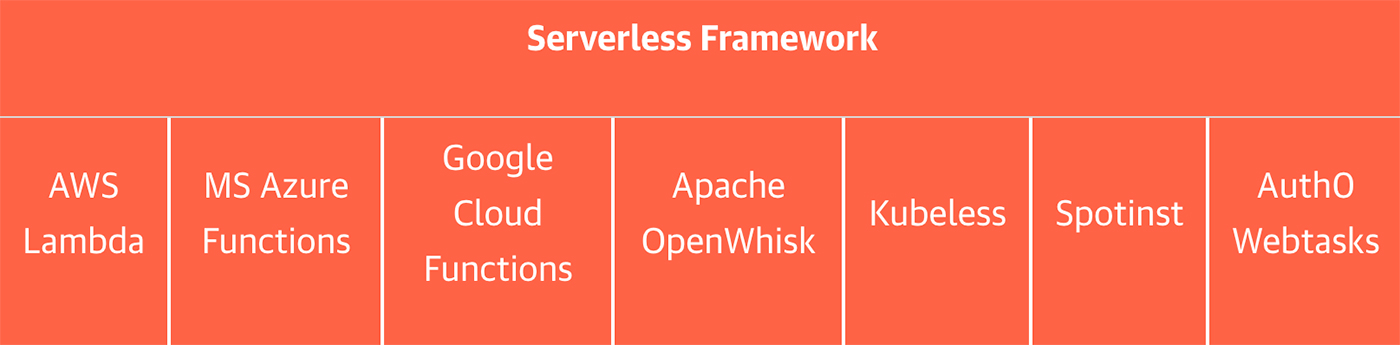

The Serverless story

The Serverless Framework’s open interface vision has, like Beam’s, evolved and was not immediately apparent. I remember seeing the announcement of JAWS (JavaScript AWS) on Hacker News in 2015, the same year Keras and Beam began. Months later, the JAWS team presented their AWS Lambda-specific framework at re:Invent. It contained scaffolding, workflow, and best practices for Lambda, Amazon’s function-as-a-service (FaaS) offering. But Lambda was just the first of several proprietary cloud and open source FaaS offerings. The JAWS framework soon rebranded itself as Serverless and supported the newcomers.

Serverless still wasn’t a single open interface until August 2017, when Austen Collins announced Event Gateway, the “missing piece of serverless architecture.” Even today, Serverless doesn’t offer its own execution environment. Event Gateway specifies a new FaaS API that abstracts over, and can use, any of the popular execution environments. Collins’ value proposition for Event Gateway could have been taken from Keras or Beam: “Any of your events can have multiple subscribers from any other cloud service. Lambda can talk to Azure can talk to OpenWhisk. This makes businesses completely flexible…[it] protects you from lock-in, while also keeping you open for whatever else the future may bring.”
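
The “multiple subscribers from any other cloud service” idea is essentially provider-neutral fan-out. Below is a minimal sketch, assuming a hypothetical `EventGateway` class with `subscribe` and `emit` methods; it is not the real Event Gateway API. In a real deployment each handler would be a remote function invocation on its platform; here the provider label is just a string and the handlers are plain Python callables.

```python
from collections import defaultdict


class EventGateway:
    """Hypothetical provider-neutral event router (not the real Event Gateway API)."""

    def __init__(self):
        # event type -> list of (provider label, handler) pairs
        self.subscribers = defaultdict(list)

    def subscribe(self, event_type, provider, handler):
        """Register a function, from any platform, for an event type."""
        self.subscribers[event_type].append((provider, handler))

    def emit(self, event_type, payload):
        """Fan the event out to every subscriber, whatever platform it runs on."""
        return [(provider, handler(payload)) for provider, handler in self.subscribers[event_type]]


gateway = EventGateway()
gateway.subscribe("user.created", "aws-lambda", lambda e: f"welcome {e['name']}")
gateway.subscribe("user.created", "azure-functions", lambda e: len(e["name"]))
results = gateway.emit("user.created", {"name": "ada"})
```

Here `results` is `[("aws-lambda", "welcome ada"), ("azure-functions", 3)]`. The emitter never needs to know which cloud each subscriber lives on, which is the lock-in protection Collins describes.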

The driving forces

As a venture investor, I find myself asking the skeptical questions: Are metaframeworks a real trend? What is behind this trend? Why now? At least two forces are at work: cloud-managed services and an increased rate of innovation.

Cloud-managed and proprietary services

Virtually all of Google’s internal managed services employ unique, Google-specific APIs. For example, Google’s Bigtable was one of the first NoSQL databases. But because Google was shy about revealing details, the open source community dreamed up its own implementations: HBase, Cassandra, and others. Offering Bigtable as an external service would have meant introducing yet another API, and a proprietary one to boot. Instead, Google Cloud Bigtable was released with an HBase-compatible API, meaning any HBase user can adopt Google’s Bigtable technology without code changes. Offering proprietary services behind open interfaces appears to be the emerging standard for Google Cloud.
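
The “proprietary service behind an open interface” move is the classic adapter pattern. The sketch below is illustrative only: `OpenTable`, `CommunityTable`, `ProprietaryService`, and `ProprietaryAdapter` are hypothetical names, and the interface is far simpler than HBase’s real client API. The point is that `record_visit`, written only against the open interface, runs unchanged on either implementation.

```python
from abc import ABC, abstractmethod


class OpenTable(ABC):
    """Stand-in for a widely adopted open API (hypothetical, not HBase's real API)."""

    @abstractmethod
    def put(self, row, column, value): ...

    @abstractmethod
    def get(self, row, column): ...


class CommunityTable(OpenTable):
    """An open source implementation of the interface."""

    def __init__(self):
        self._rows = {}

    def put(self, row, column, value):
        self._rows.setdefault(row, {})[column] = value

    def get(self, row, column):
        return self._rows.get(row, {}).get(column)


class ProprietaryService:
    """A managed service with its own, different native API (hypothetical)."""

    def __init__(self):
        self._cells = {}

    def write_cell(self, key, value):
        self._cells[key] = value

    def read_cell(self, key):
        return self._cells.get(key)


class ProprietaryAdapter(OpenTable):
    """Exposes the proprietary service through the open interface."""

    def __init__(self, service):
        self._service = service

    def put(self, row, column, value):
        self._service.write_cell((row, column), value)  # translate to the native call

    def get(self, row, column):
        return self._service.read_cell((row, column))


def record_visit(table: OpenTable, user):
    """Existing application code, written only against the open interface."""
    current = table.get(user, "visits") or 0
    table.put(user, "visits", current + 1)
    return table.get(user, "visits")
```

Calling `record_visit` twice returns `1` and then `2` whether the table is a `CommunityTable()` or a `ProprietaryAdapter(ProprietaryService())`, so switching to the managed service costs the user no code changes.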

The other cloud providers are following suit. Microsoft is embracing open source and open interfaces at every turn, while Amazon is reluctantly being pulled into the mix by customers. Together the two have recently launched Gluon, an open API that, like Keras, executes on multiple deep learning frameworks. The trend of cloud providers exposing proprietary services behind open, well-adopted APIs is a win for users, who avoid lock-in and can adopt easily.

Looking forward

With cloud offerings on the rise and complexity and the pace of innovation increasing, we are likely to see more open interfaces and metaframeworks emerge, but they have their drawbacks. Additional layers of abstraction introduce indirection. Debugging may become more difficult. Features may lag or simply go unsupported; an interface may provide access to features that the execution engines share, but omit sophisticated or rarely used features that add value. Finally, a new approach to execution may not immediately fit in an existing API. PyTorch and Kafka Streams, for example, have recently grown in popularity and have yet to conform to the open interfaces provided by Keras and Beam. This not only leaves users with a difficult choice, but also challenges the concept of the API framework altogether.
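
The “shared features only” drawback can be made concrete with a little set arithmetic. In this sketch the engine names and feature names are hypothetical placeholders: a portable interface can safely promise only the intersection of what every engine supports, and everything outside it is lost to users of the interface.

```python
# Hypothetical engines and capabilities, for illustration only.
BACKEND_FEATURES = {
    "engine_a": {"map", "filter", "group_by", "custom_partitioner"},
    "engine_b": {"map", "filter", "group_by", "stateful_timers"},
    "engine_c": {"map", "filter", "group_by"},
}


def portable_features(backends):
    """What a common interface can safely promise: the intersection."""
    return set.intersection(*(BACKEND_FEATURES[b] for b in backends))


def lost_features(backends):
    """Valuable engine-specific features the common interface must omit."""
    everything = set().union(*(BACKEND_FEATURES[b] for b in backends))
    return sorted(everything - portable_features(backends))


def require(feature, backends):
    """Fail loudly instead of silently behaving differently per engine."""
    missing = [b for b in backends if feature not in BACKEND_FEATURES[b]]
    if missing:
        raise NotImplementedError(f"{feature!r} is not supported by: {', '.join(missing)}")
```

With all three engines, `portable_features` is just `{"map", "filter", "group_by"}`, while `lost_features` reports `["custom_partitioner", "stateful_timers"]`: each engine’s distinctive strength is exactly what the shared interface cannot expose.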

Considering the above, here are some tips for success in this new world:

For API developers: The next time you find yourself bikeshedding on an API, consider (1) that it is an innovation vector all its own and (2) working with the entire industry to get it right. This is where the community and governance aspects of open source are critical. Getting consensus in distributed systems is hard, but François Chollet, Tyler Akidau, and Austen Collins have done a masterful job in their respective domains. Collins, for example, has been working with the CNCF’s Serverless Working Group to establish CloudEvents as an industry-standard protocol.

For service developers: Focus on performance. The open interfaces are now your distribution channel, leaving you free to focus on being the best execution framework. Great technology no longer risks getting stuck behind laggards unwilling to try or adopt it. With switching costs low, your improvements can easily be seen. Watch benchmarking become the norm.
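
Low switching costs are what make routine benchmarking cheap: the same workload can be pointed at every back end. Here is a minimal harness sketch; the two “back ends” are hypothetical stand-ins (a plain loop and Python’s built-in `sum`), and a real comparison would use real engines and a much larger, representative workload.

```python
import time


def loop_backend(data):
    """Hypothetical engine #1: explicit loop."""
    total = 0
    for x in data:
        total += x * x
    return total


def builtin_backend(data):
    """Hypothetical engine #2: built-in sum over a generator."""
    return sum(x * x for x in data)


SQUARE_SUM_BACKENDS = {"loop": loop_backend, "builtin": builtin_backend}


def benchmark(workload, backends, repeats=3):
    """Run one workload on every back end, check the results agree, report best times."""
    results, timings = {}, {}
    for name, run in backends.items():
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            results[name] = run(workload)
            best = min(best, time.perf_counter() - start)
        timings[name] = best
    if len(set(results.values())) != 1:
        raise ValueError(f"back ends disagree: {results}")
    return timings
```

Checking that every back end produces the same answer before comparing times matters: a faster engine is only a drop-in replacement if it is also correct.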

For users: Become active in the communities around these open interfaces. We need them to become lively town halls that keep the APIs use-case-driven and relevant. And try the different back ends that are available. It’s a new, efficient kind of freedom, and exercising it will incentivize performance and innovation.

For everyone: Consider helping out the projects that are mentioned in this post. Open interfaces are still new, and their future depends on the success of the pioneers.