Chapter 1. Artificial Intelligence and Our World

Recent advances in artificial intelligence (AI) have sparked increased interest in what the technology can accomplish. It’s true that there’s hype—as there often is with any breakthrough technology—but AI is not just another fad. Computers have been evolving steadily from machines that follow instructions to ones that can learn from experience in the form of data. Lots and lots of it.

The accomplishments are already impressive and span a variety of fields. Google DeepMind’s AlphaGo has mastered the game of Go; autonomous vehicles are detecting and reacting to pedestrians, road signs, and lanes; and computer scientists at Stanford have created an artificially intelligent diagnosis algorithm that is just as accurate as dermatologists in identifying skin cancer. AI has even become commonplace in consumer products and can be easily recognized in our virtual assistants (e.g., Siri and Alexa) that understand and respond to human language in real time.

These are just a few examples of how AI is affecting our world right now. Many effects of AI are unknown, but one thing is becoming clearer: the adoption of AI technology—or lack of it—is going to define the future of the enterprise.

A New Age of Computation

AI is transforming the analytical landscape, yet it has also been around for decades in varying degrees of maturity. Modern AI, however, refers to systems that behave with intelligence without being explicitly programmed. These systems learn to identify and classify input patterns, make and act on probabilistic predictions, and operate without explicit rules or supervision. For example, online retailers are generating increasingly relevant product recommendations by taking note of previous selections to determine similarities and interests. The more users engage, the smarter the algorithm grows, and the more targeted its recommendations become.

In most current implementations, AI relies on deep neural networks (DNNs) as a critical part of the solution. These algorithms are at the heart of today’s AI resurgence. DNNs allow more complex problems to be tackled, and others to be solved with higher accuracy and less cumbersome, manual fine tuning.

The AI Trinity: Data, Hardware, and Algorithms

The story of AI can be told in three parts: the data deluge, improvements in hardware, and algorithmic breakthroughs (Figure 1-1).

Figure 1-1. Drivers of the AI Renaissance

Exponential Growth of Data

There is no need to recite the statistics of the data explosion of the past 10 years; that has been mainstream knowledge for some time. Suffice it to say that the age of “big data” is one of the most well understood and well documented drivers of the AI renaissance.

Before the current decade, algorithms had access to a limited amount and restricted types of data, but this has changed. Now, machine intelligence can learn from a growing number of information sources, accessing the essential data it needs to fuel and improve its algorithms.

Computational Advances to Handle Big Data

Advanced system architectures, in-memory storage, and chipsets well suited to AI workloads, in the form of graphics processing units (GPUs), are now available. Together, these advances remove computational constraints that previously held AI back.

GPUs have been around in the gaming and computer-aided design (CAD) world since 1999, when they were originally developed to manipulate computer graphics and process images. More recently, they were applied to AI when it was found that they are well suited to the large-scale matrix operations and linear algebra that form the basis of deep learning. Although parallel computing has been around for decades, GPUs excel at applying the same instruction to many data points in parallel.
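To make the "same instruction, many data points" idea concrete, here is a minimal Python sketch. It is not GPU code, and the helper names (`dot`, `matmul`) and the numbers are assumptions made for illustration. It only shows that every cell of a matrix product is an independent dot product, which is the property that lets a GPU compute many cells at once.

```python
# Illustrative only: plain Python evaluates these dot products one at a time,
# whereas a GPU could evaluate many of them simultaneously.

def dot(row, col):
    """The single 'instruction' that is repeated for every output cell."""
    return sum(r * c for r, c in zip(row, col))

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of rows."""
    cols = list(zip(*b))  # columns of b
    # Each output cell depends on exactly one row of a and one column of b,
    # so the cells could be computed in any order, or all at the same time.
    return [[dot(row, col) for col in cols] for row in a]

inputs = [[1.0, 2.0], [3.0, 4.0]]     # two data points, two features each (made up)
weights = [[0.5, -1.0], [0.25, 2.0]]  # hypothetical layer weights
outputs = matmul(inputs, weights)
print(outputs)  # [[1.0, 3.0], [2.5, 5.0]]
```

Because no cell needs the result of any other cell, the work divides cleanly across thousands of GPU cores; libraries built on CUDA exploit exactly this structure.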

NVIDIA has cemented itself as the leader in AI-accelerated platforms, with a steady release of ever more powerful GPUs, along with a well-executed vision for CUDA, a parallel computing platform and application programming interface (API) that makes it easier to program GPUs without the need for advanced graphics programming skills. Leading cloud vendors like Google, Amazon, and Microsoft have all introduced GPU hardware into their cloud offerings, making the hardware more accessible.

Accessing and Developing Algorithms

Whereas AI software development tools once required large capital investments, they are now relatively inexpensive or even free. The most popular AI framework is TensorFlow, a software library for machine learning originally developed by Google that has since been open sourced. As such, this world-class research is completely free for anyone to download and use. Other popular open source frameworks include MXNet, PyTorch, Caffe, and CNTK.

Leading cloud vendors have packaged AI solutions that are delivered through APIs, further increasing AI’s availability. For instance, AWS has a service for image recognition and text-to-speech, and Google has prediction APIs for services such as spam detection and sentiment analysis.

Now that the technology is increasingly available with hardware to support it and a growing body of practice, AI is spreading beyond the world of academia and the digital giants. It is now on the cusp of going mainstream in the enterprise.

What Is AI: Deep Versus Machine Learning

Before going further, it will be helpful to define the terms used in this book.

The term artificial intelligence has many definitions. Of these, many revolve around the concept of an algorithm that can improve itself, or learn, based on data. This is, in fact, the biggest difference between AI and other forms of software. AI technologies are ever moving toward implicit programming, where computers learn on their own, as opposed to explicit programming, where humans tell computers what to do.

Several technologies have—at various points—been classified under the AI umbrella, including statistics, machine learning, and deep learning. Statistics and data mining have been present in the enterprise for decades and need little introduction. They are helpful for making simple business calculations (e.g., average revenue per user). More advanced algorithms are also available, drawing on calculus and probability theory to make predictions (e.g., sales forecasting or detecting fraudulent transactions).

Machine learning makes predictions by using software to learn from past experiences instead of following explicitly programmed instructions. Machine learning is closely related to (and often overlaps with) statistics, given that both focus on prediction making and use many of the same algorithms, such as logistic regression and decision trees. The key difference is the ability of machine learning models to learn, which means that more data generally yields better models.
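The contrast between explicit programming and learning from data can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production method: the data set is invented, the function names (`explicit_rule`, `train_logistic`, `predict`) are hypothetical, and the model is a one-feature logistic regression fitted by plain gradient descent.

```python
import math

def explicit_rule(x):
    """Explicit programming: a human chooses the threshold."""
    return x > 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.1, epochs=1000):
    """Learn weight w and bias b from examples by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # prediction error for one example
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Invented toy data: one centered feature, label 1 when the feature is positive.
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(xs, ys)

def predict(x):
    """Learned rule: the threshold comes from the data, not from a person."""
    return sigmoid(w * x + b) >= 0.5

print(predict(2.0), predict(-2.0))
```

Nothing in `train_logistic` mentions where the boundary between the two classes lies; the parameters `w` and `b` are inferred from the examples, so supplying different or additional data changes the learned rule without any change to the code.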

This book, however, focuses on deep learning, which is at the heart of today’s AI resurgence: recent breakthroughs in the field have fueled renewed interest in what AI can help enterprises achieve. In fact, the terms AI and deep learning are often used synonymously (for reasons we discuss in a moment). Let’s delve into that architecture.

What Is Deep Learning?

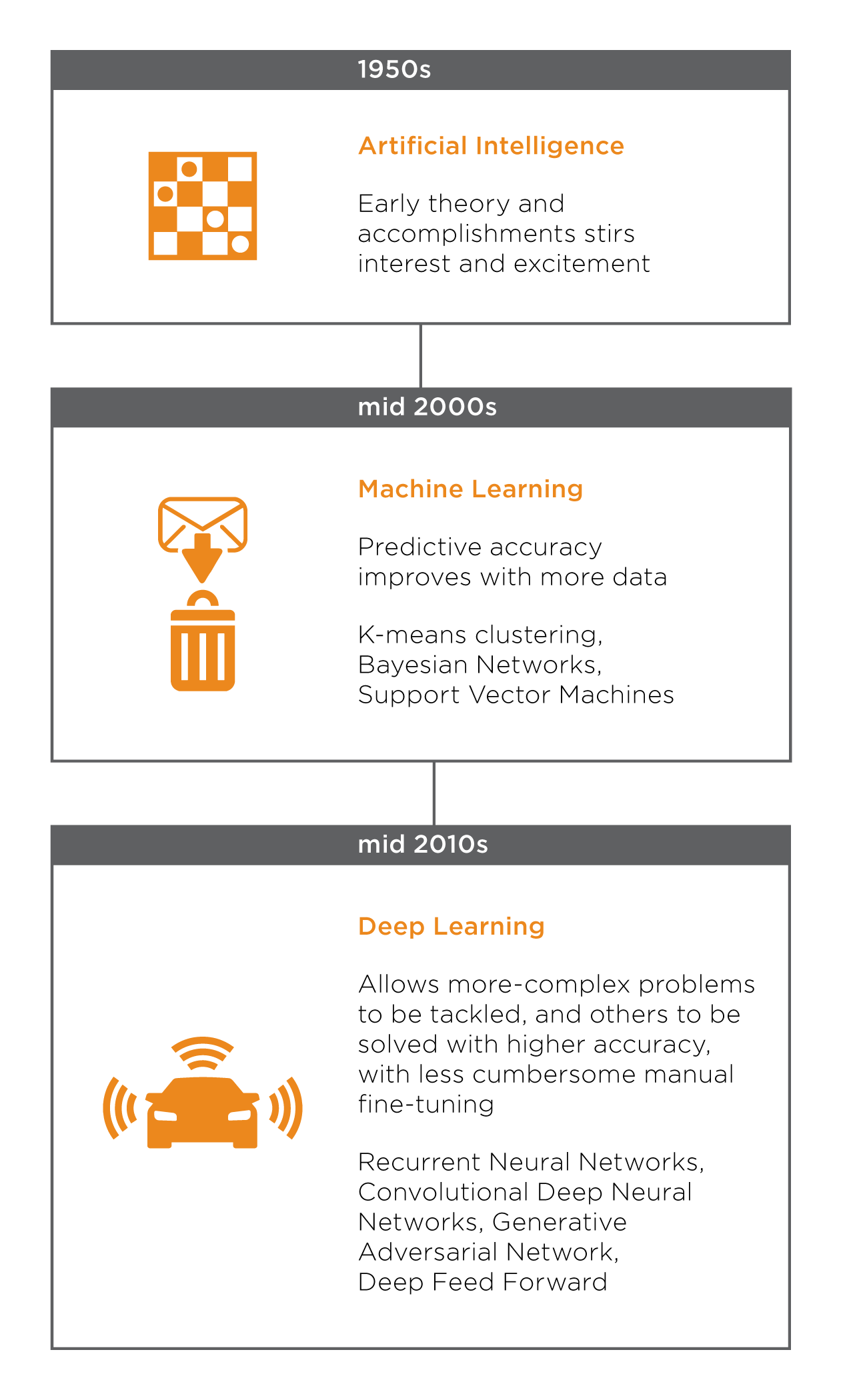

Building on the advances of machine learning, deep learning detects patterns by using artificial neural networks that contain multiple layers. The middle layers are known as hidden layers, and they enable automatic feature extraction from the data, something that traditional machine learning typically left to manual feature engineering. Each successive layer uses the output from the previous layer as its input. Figure 1-2 briefly summarizes these advancements over time.

The biggest advances in deep learning have been in the number of layers and the complexity of the calculations a network can process. Although early commercially available neural networks had only between 5 and 10 layers, a state-of-the-art deep neural network can handle significantly more, allowing the network to solve more complex problems and increase predictive accuracy. For example, Google’s speech recognition software improved from a 23% error rate in 2013 to a 4.9% error rate in 2017, largely by processing more hidden layers.
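The layered structure described above, in which each layer consumes the previous layer's output, can be sketched as a forward pass in plain Python. This is a minimal illustration: the function names (`dense`, `forward`) are hypothetical, the weights are hand-picked rather than learned, and applying a ReLU activation to every layer (including the output) is a simplification.

```python
def dense(inputs, weights, biases):
    """One fully connected layer followed by a ReLU activation."""
    return [
        max(0.0, sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the input through every layer in order."""
    activation = inputs
    for weights, biases in layers:
        # The output of the previous layer becomes the input of this one.
        activation = dense(activation, weights, biases)
    return activation

# Hypothetical hand-picked parameters; a real network learns these from data.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0], [-1.0, 2.0]], [0.0, -1.0]),  # hidden layer 2: 2 units -> 2 units
    ([[0.5, 0.5]], [0.25]),                    # output layer: 2 units -> 1 value
]

print(forward([2.0, 1.0], layers))  # [2.0]
```

Adding depth in this sketch means appending more `(weights, biases)` pairs to `layers`; the `forward` loop itself does not change, which is one reason the number of layers has been able to grow as hardware improved.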

Figure 1-2. Evolution of AI

Why It Matters

Because of its architecture, deep learning excels at handling data that is complex, varied in form, and large in volume. It can understand, learn, predict, and adapt, autonomously improving itself over time. It is so good at this that in some contexts, deep learning has become synonymous with AI itself. This is how we will be using the term here.

Here are some differentiators of deep learning:

- Deep learning models allow relationships between raw features to be determined automatically, reducing the need for feature engineering and data preprocessing. This is particularly true in computer vision and natural language–related domains.

- Deep learning models tend to generalize more readily and are more robust in the presence of noise. Put another way, deep learning models can adapt to unique problems and are less affected by messy or extraneous data.

- In many cases, deep learning delivers higher accuracy than other techniques for problems, particularly those that involve complex data fusion, when data from a variety of sources must be used to address a problem from multiple angles.

In Chapter 2, we will examine how this technology is changing the enterprise.
