Chapter 1. Analyzing Big Data

When people say that we live in an age of big data, they mean that we have tools for collecting, storing, and processing information at a scale previously unheard of. The following tasks simply could not have been accomplished 10 or 15 years ago:

- Build a model to detect credit card fraud using thousands of features and billions of transactions

- Intelligently recommend millions of products to millions of users

- Estimate financial risk through simulations of portfolios that include millions of instruments

- Easily manipulate genomic data from thousands of people to detect genetic associations with disease

- Assess agricultural land use and crop yield for improved policymaking by periodically processing millions of satellite images

Sitting behind these capabilities is an ecosystem of open source software that can leverage clusters of servers to process massive amounts of data. The release of Apache Hadoop in 2006 led to widespread adoption of distributed computing. The big data ecosystem and tooling have evolved at a rapid pace since then. The past five years have also seen the introduction and adoption of many open source machine learning (ML) and deep learning libraries. These tools aim to leverage the vast amounts of data that we now collect and store.

But just as a chisel and a block of stone do not make a statue, there is a gap between having access to these tools and all this data and doing something useful with it. Often, “doing something useful” means placing a schema over tabular data and using SQL to answer questions like “Of the gazillion users who made it to the third page in our registration process, how many are over 25?” The field of how to architect data storage and organize information (data warehouses, data lakes, etc.) to make answering such questions easy is a rich one, but we will mostly avoid its intricacies in this book.

Sometimes, “doing something useful” takes a little extra work. SQL still may be core to the approach, but to work around idiosyncrasies in the data or perform complex analysis, we need a programming paradigm that’s more flexible and offers richer functionality in areas like machine learning and statistics. This is where data science comes in, and that’s what we are going to talk about in this book.

In this chapter, we’ll start by introducing big data as a concept and discuss some of the challenges that arise when working with large datasets. We will then introduce Apache Spark, an open source framework for distributed computing, and its key components. Our focus will be on PySpark, Spark’s Python API, and how it fits within a wider ecosystem. This will be followed by a discussion of the changes brought by Spark 3.0, the framework’s first major release in four years. We will finish with a brief note about how PySpark addresses challenges of data science and why it is a great addition to your skillset.

Previous editions of this book used Spark’s Scala API for code examples. We decided to use PySpark instead because of Python’s popularity in the data science community and an increased focus by the core Spark team to better support the language. By the end of this chapter, you will ideally appreciate this decision.

Working with Big Data

Many of our favorite small data tools hit a wall when working with big data. Libraries like pandas are not equipped to deal with data that can’t fit in RAM. What, then, should an equivalent process look like that can leverage clusters of computers to achieve the same outcomes on large datasets? The challenges of distributed computing require us to rethink many of the basic assumptions that we rely on in single-node systems. For example, because data must be partitioned across many nodes on a cluster, algorithms that have wide data dependencies will suffer from the fact that network transfer rates are orders of magnitude slower than memory accesses. As the number of machines working on a problem increases, so does the probability that at least one of them fails. These facts require a programming paradigm that is sensitive to the characteristics of the underlying system: one that discourages poor choices and makes it easy to write code that will execute in a highly parallel manner.
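To make the point about failures concrete, here is a minimal sketch. It assumes that machines fail independently and with the same probability over the course of a job, which is a simplification. The function name `prob_any_failure` and the 0.1% per-machine failure rate are illustrative choices, not measurements.

```python
def prob_any_failure(num_machines: int, p_single: float) -> float:
    """Probability that at least one of num_machines fails during a job.

    Assumes independent failures, each with probability p_single.
    """
    if num_machines < 0:
        raise ValueError("num_machines must be non-negative")
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    # P(no machine fails) = (1 - p)^n, so P(at least one fails) = 1 - (1 - p)^n
    return 1.0 - (1.0 - p_single) ** num_machines


# Illustrative per-machine failure probability for a single job (an assumption)
P_SINGLE = 0.001

for n in (1, 10, 100, 1000):
    print(f"{n:>5} machines: {prob_any_failure(n, P_SINGLE):.4f}")
```

Even with a small per-machine failure rate, the chance that some machine fails grows quickly with cluster size, which is why a distributed framework needs to recover from failures automatically rather than leave that to the user.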

Single-machine tools that have come to recent prominence in the software community are not the only tools used for data analysis. Scientific fields like genomics that deal with large datasets have been leveraging parallel-computing frameworks for decades. Most people processing data in these fields today are familiar with a cluster-computing environment called HPC (high-performance computing). Where the difficulties with Python and R lie in their inability to scale, the difficulties with HPC lie in its relatively low level of abstraction and difficulty of use. For example, to process a large file full of DNA-sequencing reads in parallel, we must manually split it up into smaller files and submit a job for each of those files to the cluster scheduler. If some of these fail, the user must detect the failure and manually resubmit them. If the analysis requires all-to-all operations like sorting the entire dataset, the large dataset must be streamed through a single node, or the scientist must resort to lower-level distributed frameworks like MPI, which are difficult to program without extensive knowledge of C and distributed/networked systems.

Tools written for HPC environments often fail to decouple the in-memory data models from the lower-level storage models. For example, many tools only know how to read data from a POSIX filesystem in a single stream, which makes it difficult to parallelize them naturally or to use other storage backends, like databases. Modern distributed computing frameworks provide abstractions that allow users to treat a cluster of computers more like a single computer: they automatically split up files and distribute storage over many machines, divide work into smaller tasks and execute them in a distributed manner, and recover from failures. They can automate a lot of the hassle of working with large datasets and are far cheaper than HPC.

A simple way to think about distributed systems is that they are a group of independent computers that appear to the end user as a single computer. They allow for horizontal scaling, which means adding more computers rather than upgrading a single system (vertical scaling). Vertical scaling is relatively expensive and often insufficient for large workloads. Distributed systems are great for scaling and reliability but also introduce complexity when it comes to design, construction, and debugging. One should understand this trade-off before opting for such a tool.

Introducing Apache Spark and PySpark

Enter Apache Spark, an open source framework that combines an engine for distributing programs across clusters of machines with an elegant model for writing programs atop it. Spark originated at the University of California, Berkeley, AMPLab and has since been contributed to the Apache Software Foundation. When released, it was arguably the first open source software that made distributed programming truly accessible to data scientists.

Components

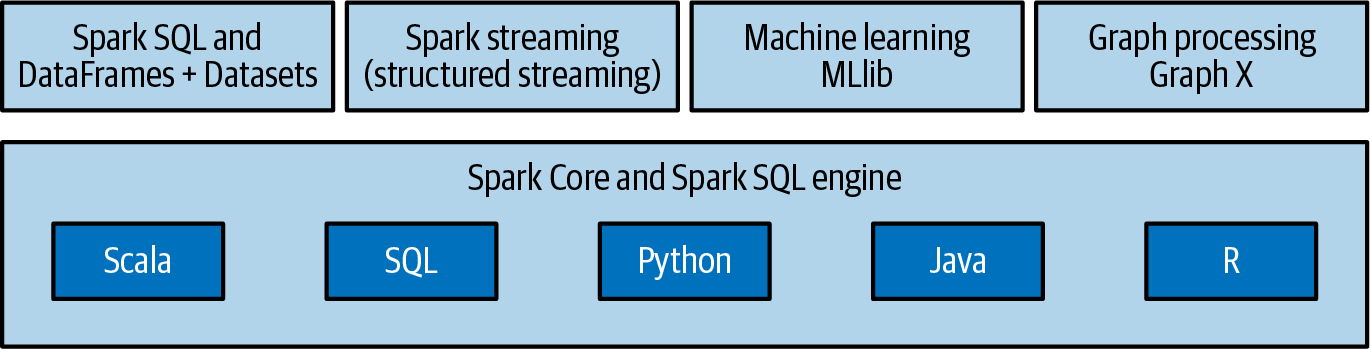

Apart from the core computation engine (Spark Core), Spark is comprised of four main components. Spark code written by a user, using either of its APIs, is executed in the workers’ JVMs (Java Virtual Machines) across the cluster (see Chapter 2). These components are available as distinct libraries as shown in Figure 1-1:

- Spark SQL and DataFrames + Datasets

- MLlib

- Structured Streaming

-

This makes it easy to build scalable fault-tolerant streaming applications.

- GraphX (legacy)

-

GraphX is Apache Spark’s library for graphs and graph-parallel computation. However, for graph analytics, GraphFrames is recommended instead of GraphX, which isn’t being actively developed as much and lacks Python bindings. GraphFrames is an open source general graph processing library that is similar to Apache Spark’s GraphX but uses DataFrame-based APIs.

Figure 1-1. Apache Spark components

PySpark

PySpark is Spark’s Python API. In simpler words, PySpark is a Python-based wrapper over the core Spark framework, which is written primarily in Scala. PySpark provides an intuitive programming environment for data science practitioners and offers the flexibility of Python with the distributed processing capabilities of Spark.

PySpark allows us to work across programming models. For example, a common pattern is to perform large-scale extract, transform, and load (ETL) workloads with Spark and then collect the results to a local machine followed by manipulation using pandas. We’ll explore such programming models as we write PySpark code in the upcoming chapters. Here is a code example from the official documentation to give you a glimpse of what’s to come:

    from pyspark.ml.classification import LogisticRegression
    from pyspark.sql import SparkSession

    # Reuse the active SparkSession (for example, the one the PySpark shell provides) or create one
    spark = SparkSession.builder.getOrCreate()

    # Load training data
    training = spark.read.format("libsvm").load("data/mllib/sample_libsvm_data.txt")

    lr = LogisticRegression(maxIter=10, regParam=0.3, elasticNetParam=0.8)

    # Fit the model
    lrModel = lr.fit(training)

    # Print the coefficients and intercept for logistic regression
    print("Coefficients: " + str(lrModel.coefficients))
    print("Intercept: " + str(lrModel.intercept))

    # We can also use the multinomial family for binary classification
    mlr = LogisticRegression(maxIter=10, regParam=0.3, elasticNetParam=0.8, family="multinomial")

    # Fit the model
    mlrModel = mlr.fit(training)

    # Print the coefficients and intercepts for logistic regression
    # with multinomial family
    print("Multinomial coefficients: " + str(mlrModel.coefficientMatrix))
    print("Multinomial intercepts: " + str(mlrModel.interceptVector))

Ecosystem

Spark is the closest thing to a Swiss Army knife that we have in the big data ecosystem. To top it off, it integrates well with the rest of the ecosystem and is extensible. Spark decouples storage and compute, unlike the Apache Hadoop and HPC systems described previously. That means we can use Spark to read data stored in many sources (Apache Hadoop, Apache Cassandra, Apache HBase, MongoDB, Apache Hive, RDBMSs, and more) and process it all in memory. Spark’s DataFrameReader and DataFrameWriter APIs can also be extended to read data from other sources, such as Apache Kafka, Amazon Kinesis, Azure Storage, and Amazon S3, on which it can operate. It also supports multiple deployment modes, ranging from local environments to Apache YARN and Kubernetes clusters.

There is also a large community around Spark, which has led to the creation of many third-party packages. A community-created list of such packages is available online. Major cloud providers (AWS EMR, Azure Databricks, GCP Dataproc) also provide third-party vendor options for running managed Spark workloads. In addition, there are dedicated conferences and local meetup groups that are useful for learning about interesting applications and best practices.

Spark 3.0

In 2020, Apache Spark shipped Spark 3.0, its first major release since Spark 2.0 in 2016. The last edition in this series, released in 2017, covered the changes brought about by Spark 2.0. Spark 3.0 does not introduce as many major API changes as the last major release. Instead, it focuses on performance and usability improvements without introducing significant backward incompatibility.

The Spark SQL module has seen major performance enhancements in the form of adaptive query execution and dynamic partition pruning. In simpler terms, they allow Spark to adapt a physical execution plan during runtime and skip over data that’s not required in a query’s results, respectively. These optimizations reduce the significant effort that users previously had to put into manual tuning and optimization. Spark 3.0 is almost two times faster than Spark 2.4 on TPC-DS, an industry-standard analytical processing benchmark. Since most Spark applications are backed by the SQL engine, all the higher-level libraries, including MLlib and Structured Streaming, and higher-level APIs, including SQL and DataFrames, have benefited. Compliance with the ANSI SQL standard also makes the SQL API more usable.
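The features above are controlled through Spark SQL configuration properties. The sketch below collects the relevant property names in a plain dictionary and turns them into `--conf` arguments for `spark-submit`. The helper `to_submit_args` is a hypothetical convenience written for this illustration, and the chosen values are assumptions: defaults differ between Spark releases, and enabling ANSI mode changes how some queries behave, so check the configuration reference for your version before copying them.

```python
# Spark SQL properties for the features discussed above.
# Values are illustrative; defaults vary across Spark releases.
spark_sql_settings = {
    # Adaptive query execution: re-optimize the physical plan at runtime
    "spark.sql.adaptive.enabled": "true",
    # Dynamic partition pruning: skip partitions a query does not need
    "spark.sql.optimizer.dynamicPartitionPruning.enabled": "true",
    # ANSI SQL compliance mode
    "spark.sql.ansi.enabled": "true",
}


def to_submit_args(settings: dict) -> list:
    """Render settings as a flat list of spark-submit '--conf key=value' arguments."""
    args = []
    for key, value in sorted(settings.items()):
        args.extend(["--conf", f"{key}={value}"])
    return args


print(" ".join(to_submit_args(spark_sql_settings)))
```

The same key and value pairs can also be passed one at a time to `SparkSession.builder.config(key, value)` when a session is created from Python.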

Python has emerged as the leader in terms of adoption in the data science ecosystem. Consequently, Python is now the most widely used language on Spark. PySpark has more than five million monthly downloads on PyPI, the Python Package Index. Spark 3.0 improves PySpark’s functionality and usability. pandas user-defined functions (UDFs) have been redesigned to support Python type hints and iterators as arguments. New pandas UDF types have been included, and the error handling is now more Pythonic. Python versions below 3.6 have been deprecated. From Spark 3.2 onward, Python 3.6 support has been deprecated too.

Over the last four years, the data science ecosystem has also changed at a rapid pace. There is an increased focus on putting machine learning models in production. Deep learning has provided remarkable results and the Spark team is currently experimenting to allow the project’s scheduler to leverage accelerators such as GPUs.

PySpark Addresses Challenges of Data Science

For a system that seeks to enable complex analytics on huge data to be successful, it needs to be informed by—or at least not conflict with—some fundamental challenges faced by data scientists.

- First, the vast majority of work that goes into conducting successful analyses lies in preprocessing data. Data is messy, and cleansing, munging, fusing, mushing, and many other verbs are prerequisites to doing anything useful with it.

- Second, iteration is a fundamental part of data science. Modeling and analysis typically require multiple passes over the same data. Popular optimization procedures like stochastic gradient descent involve repeated scans over their inputs to reach convergence. Iteration also matters within the data scientist’s own workflow. Choosing the right features, picking the right algorithms, running the right significance tests, and finding the right hyperparameters all require experimentation.

- Third, the task isn’t over when a well-performing model has been built. The point of data science is to make data useful to non–data scientists. Uses of data such as recommendation engines and real-time fraud detection systems culminate in data applications. In such systems, models become part of a production service and may need to be rebuilt periodically or even in real time.

PySpark deals well with the aforementioned challenges of data science, acknowledging that the biggest bottleneck in building data applications is not CPU, disk, or network, but analyst productivity. Collapsing the full pipeline, from preprocessing to model evaluation, into a single programming environment can speed up development. By packaging an expressive programming model with a set of analytic libraries under a REPL (read-eval-print loop) environment, PySpark avoids the round trips to IDEs. The more quickly analysts can experiment with their data, the more likely they are to do something useful with it.

A read-eval-print loop, or REPL, is a computer environment where user inputs are read and evaluated, and then the results are returned to the user.

PySpark’s core APIs provide a strong foundation for data transformation independent of any functionality in statistics, machine learning, or matrix algebra. When exploring and getting a feel for a dataset, data scientists can keep data in memory while they run queries, and easily cache transformed versions of the data as well, without suffering a trip to disk. As a framework that makes modeling easy but is also a good fit for production systems, it is a huge win for the data science ecosystem.

Where to Go from Here

Spark spans the gap between systems designed for exploratory analytics and systems designed for operational analytics. It is often said that a data scientist is someone who is better at engineering than most statisticians and better at statistics than most engineers. At the very least, Spark is better at being an operational system than most exploratory systems and better for data exploration than the technologies commonly used in operational systems. We hope that this chapter was helpful and you are now excited about getting hands-on with PySpark. That’s what we will do from the next chapter onward!
