Let's discuss the different types of activation functions, starting with the classics:

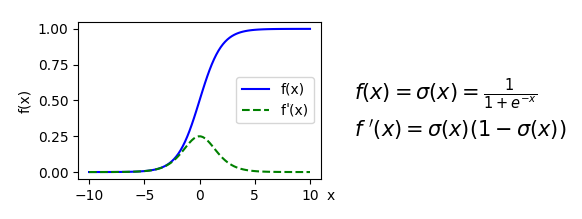

- Sigmoid: Its output is bounded between 0 and 1 and can be interpreted stochastically as the probability of the neuron activating. Because of these properties, the sigmoid was the most popular activation function for a long time. However, it also has some less desirable properties (more on that later), which led to its decline in popularity. The accompanying diagram shows the sigmoid formula, its derivative, and their graphs (the derivative will be useful when we discuss backpropagation).

- Hyperbolic tangent ...
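
To make the sigmoid item above concrete, here is a minimal Python sketch of the standard logistic formula, σ(x) = 1 / (1 + e^(-x)), and its derivative, σ'(x) = σ(x)(1 - σ(x)). The function names `sigmoid` and `sigmoid_derivative` are illustrative choices rather than names from the text, and the branch on the sign of `x` is an added numerical-stability measure, not part of the formula itself.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)); the output lies between 0 and 1."""
    if x >= 0:
        # For non-negative x, e^(-x) <= 1, so this form cannot overflow.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x) to avoid
    # computing e^(-x), which would overflow for very negative inputs.
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_derivative(x: float) -> float:
    """Derivative of the sigmoid, expressed through the sigmoid itself."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    # Print a few sample points on both sides of zero.
    for x in (-4.0, 0.0, 4.0):
        print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  "
              f"derivative={sigmoid_derivative(x):.4f}")
```

At `x = 0` the sigmoid is exactly 0.5 and its derivative reaches its maximum of 0.25. Moving away from zero in either direction pushes the output toward 0 or 1 and the derivative toward 0.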