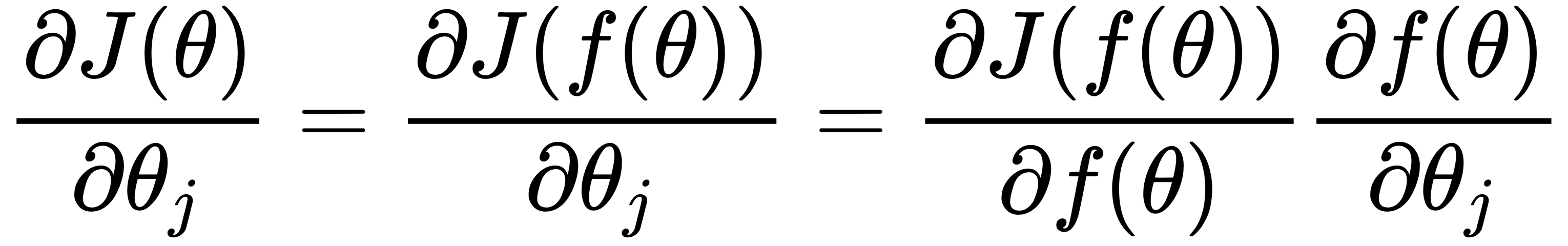

In this section, we'll discuss how to update the network weights in order to minimize the cost function. As we demonstrated in the Gradient descent section, this means finding the derivative of the cost function J(θ) with respect to each network weight. We already took a step in this direction with the help of the chain rule:

$$\frac{\partial J(\theta)}{\partial \theta_j} = \frac{\partial J(f(\theta))}{\partial f(\theta)} \cdot \frac{\partial f(\theta)}{\partial \theta_j}$$

Here, $f(\theta)$ is the network output and $\theta_j$ is the $j$-th network weight. In this section, we'll go one step further and learn how to differentiate the NN function itself with respect to each of the network weights (hint: chain rule). We'll do this by propagating the error gradient backward through the network (hence the ...