Chapter 1. Introduction to AI and Machine Learning

You’ve likely picked this book up because you’re curious about artificial intelligence (AI), machine learning (ML), deep learning, and all of the new technologies that promise the latest and greatest breakthroughs. Welcome! In this book, my goal is to explain a little about how AI and ML work, and a lot about how you can put them to work for you in your mobile apps using technologies such as TensorFlow Lite, ML Kit, and Core ML. We’ll start light, in this chapter, by establishing what we actually mean when we describe artificial intelligence, machine learning, deep learning, and more.

What Is Artificial Intelligence?

In my experience, artificial intelligence, or AI, has become one of the most fundamentally misunderstood technologies of all time. Perhaps the reason for this is in its name—artificial intelligence evokes the artificial creation of an intelligence. Perhaps it’s in the use of the term widely in science fiction and pop culture, where AI is generally used to describe a robot that looks and sounds like a human. I remember the character Data from Star Trek: The Next Generation as the epitome of an artificial intelligence, and his story led him in a quest to be human, because he was intelligent and self-aware but lacked emotions. Stories and characters like this have likely framed the discussion of artificial intelligence. Others, such as nefarious AIs in various movies and books, have led to a fear of what AI can be.

Given how often AI is seen in these ways, it’s easy to come to the conclusion that they define AI. However, none of these are actual definitions or examples of what artificial intelligence is, at least in today’s terms. It’s not the artificial creation of intelligence—it’s the artificial appearance of intelligence. When you become an AI developer, you’re not building a new lifeform—you’re writing code that acts in a different way to traditional code, and that can very loosely emulate the way an intelligence reacts to something. A common example of this is to use deep learning for computer vision where, instead of writing code that tries to understand the contents of an image with a lot of if...then rules that parse the pixels, you can instead have a computer learn what the contents are by “looking” at lots of samples.

So, for example, say you want to write code to tell the difference between a T-shirt and a shoe (Figure 1-1).

Figure 1-1. A T-shirt and a shoe

How would you do this? Well, you’d probably want to look for particular shapes. The distinct vertical lines in parallel on the T-shirt, with the body outline, are a good signal that it’s a T-shirt. The thick horizontal lines towards the bottom, the sole, are a good indication that it’s a shoe. But there’s a lot of code you would have to write to detect that. And that’s just for the general case—of course there would be many exceptions for nontraditional designs, such as a cutout T-shirt.

If you were to ask an intelligent being to pick between a shoe and a T-shirt, how would you teach it to do so? Assuming it had never seen them before, you’d show the being lots of examples of shoes and lots of examples of T-shirts, and it would just figure out what made a shoe a shoe, and what made a T-shirt a T-shirt. You wouldn’t need to give it lots of rules saying which one is which. Artificial intelligence acts in the same way. Instead of figuring out all those rules and inputting them into a computer in order to tell that difference, you give the computer lots of examples of T-shirts and lots of examples of shoes, and it just figures out how to distinguish them.

But the computer doesn’t do this by itself. It does it with code that you write. That code will look and feel very different from your typical code, and the framework by which the computer will learn to distinguish isn’t something that you’ll need to figure out how to write for yourself. There are several frameworks that already exist for this purpose. In this book you’ll learn how to use one of them, TensorFlow, to create applications like the one I just mentioned!

TensorFlow is an end-to-end open source platform for ML. You’ll use many parts of it extensively in this book, from creating models that use ML and deep learning, to converting them to mobile-friendly formats with TensorFlow Lite and executing them on mobile devices, to serving them with TensorFlow Serving. It also underpins technology such as ML Kit, which provides many common models as turnkey scenarios with a high-level API that’s designed around mobile scenarios.

As you’ll see when reading this book, the techniques of AI aren’t particularly new or exciting. What is relatively new, and what made the current explosion in AI technologies possible, is increased, low-cost computing power, along with the availability of mass amounts of data. Having both is key to building a system using machine learning. But to demonstrate the concept, let’s start small, so it’s easier to grasp.

What Is Machine Learning?

You might have noticed in the preceding scenario that I mentioned that an intelligent being would look at lots of examples of T-shirts and shoes and just figure out what the difference between them was, and in so doing, would learn how to differentiate between them. It had never previously been exposed to either, so it gained new knowledge about them by being told that these are T-shirts and these are shoes. From that information, it was then able to move forward, having learned something new.

When programming a computer in the same way, the term machine learning is used. Similar to artificial intelligence, the terminology can create the false impression that the computer is an intelligent entity that learns the way a human does, by studying, evaluating, theorizing, testing, and then remembering. And on a very surface level it does, but how it does it is far more mundane than how the human brain does it.

To wit, machine learning can be simply described as having code functions figure out their own parameters, instead of the human programmer supplying those parameters. They figure them out through trial and error, with a smart optimization process to reduce the overall error and drive the model towards better accuracy and performance.

Now that’s a bit of a mouthful, so let’s explore what that looks like in practice.

Moving from Traditional Programming to Machine Learning

To understand, in detail, the core difference between coding for machine learning and traditional coding, let’s go through an example.

Consider the function that describes a line. You might remember this from high school geometry:

y = Wx + B

This describes that for something to be a line, every point y on the line can be derived by multiplying x by a value W (for weight) and adding a value B (for bias).

(Note: AI literature tends to be very math heavy. Unnecessarily so, if you’re just getting started. This is one of a very few math examples I’ll use in this book!)

Now, say you’re given two points on this line, let’s say they are at x = 2, y = 3 and x = 3, y = 5. How would we write code that figures out the values of W and B that describe the line joining these two points?

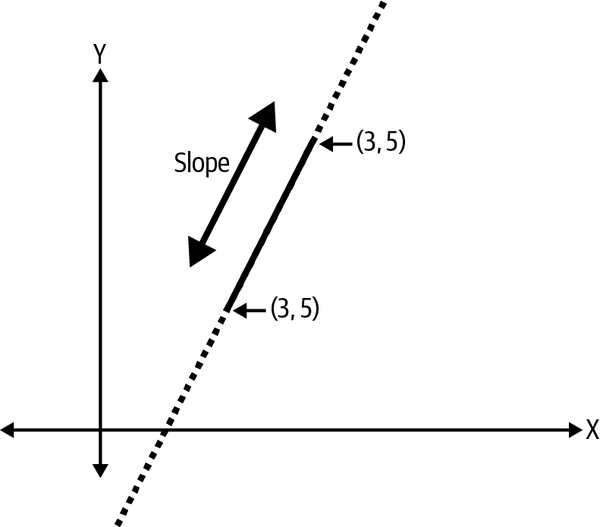

Let’s start with W, which we call the weight, but in geometry, it’s also called the slope (sometimes gradient). See Figure 1-2.

Figure 1-2. Visualizing a line segment with slope

Calculating it is easy:

W = (y2-y1)/(x2-x1)

So if we fill in, we can see that the slope is:

W = (5-3)/(3-2) = (2)/(1) = 2

Or, in code, in this case using Python:

def get_slope(p1, p2):
    W = (p2.y - p1.y) / (p2.x - p1.x)
    return W

This function sort of works. It’s naive because it ignores a divide by zero when the two x values are the same, but let’s just go with it for now.
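One way to make the function less naive is to handle the vertical-line case explicitly. The sketch below assumes a small `Point` type (a `namedtuple`, which the text uses but does not define) and a hypothetical function name, `get_slope_safe`. Raising an error is just one possible design choice.

```python
from collections import namedtuple

# Assumed helper type: the text uses p.x and p.y but does not define Point.
Point = namedtuple("Point", ["x", "y"])

def get_slope_safe(p1, p2):
    """Return the slope between two points, rejecting vertical lines."""
    if p2.x == p1.x:
        # A vertical line has no finite slope, so y = Wx + B cannot describe it.
        raise ValueError("Slope is undefined when both points share the same x")
    return (p2.y - p1.y) / (p2.x - p1.x)

print(get_slope_safe(Point(2, 3), Point(3, 5)))  # 2.0
```

Failing loudly here keeps a silent `ZeroDivisionError` from surfacing far away from the real cause.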

OK, so we’ve now figured out the W value. In order to get a function for the line, we also need to figure out the B. Back to high school geometry, we can use one of our points as an example.

So, assume we have:

y = Wx + B

We can also say:

B = y - Wx

And we know that when x = 2, y = 3, and W = 2 we can backfill this function:

B = 3 - (2*2)

This leads us to derive that B is −1.

Again, in code, we would write:

def get_bias(p1, W):
    B = p1.y - (W * p1.x)
    return B

So, now, to determine any point on the line, given an x, we can easily say:

def get_y(x, W, B):
    y = (W*x) + B
    return y

Or, for the complete listing:

from collections import namedtuple

# A simple point type with x and y fields
Point = namedtuple("Point", ["x", "y"])

def get_slope(p1, p2):
    W = (p2.y - p1.y) / (p2.x - p1.x)
    return W

def get_bias(p1, W):
    B = p1.y - (W * p1.x)
    return B

def get_y(x, W, B):
    y = W*x + B
    return y

p1 = Point(2, 3)
p2 = Point(3, 5)
W = get_slope(p1, p2)
B = get_bias(p1, W)

# Now you can get any y for any x by saying:
x = 10
y = get_y(x, W, B)

From these, we could see that when x is 10, y will be 19.

You’ve just gone through a typical programming task. You had a problem to solve, and you could solve the problem by figuring out the rules and then expressing them in code. There was a rule to calculate W when given two points, and you created that code. Once you had figured out W, you could derive another rule that used W and a single point to figure out B. Then, once you had W and B, you could write yet another rule to calculate y in terms of W, B, and a given x.

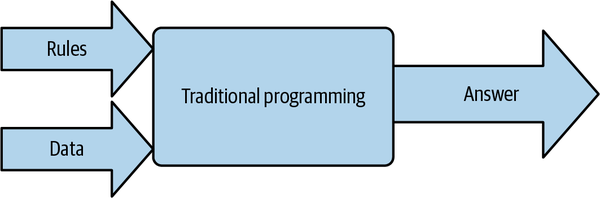

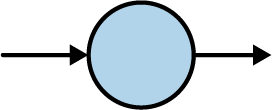

That’s traditional programming, which is now often referred to as rules-based programming. I like to summarize this with the diagram in Figure 1-3.

Figure 1-3. Traditional programming

At its highest level, traditional programming involves creating rules that act on data and which give us answers. In the preceding scenario, we had data—two points on a line. We then figured out the rules that would act on this data to figure out the equation of that line. Then, given those rules, we could get answers for new items of data, so that we could, for example, plot that line.

The core job of the programmer in this scenario is to figure out the rules. That’s the value you bring to any problem—breaking it down into the rules that define it, and then expressing those rules in terms that a computer can understand using a coding language.

But you may not always be able to express those rules easily. Consider the scenario from earlier when we wanted to differentiate between a T-shirt and a shoe. You could not always figure out the rules that distinguish between them, and then express those rules in code. Here’s where machine learning can help, but before we go into it for a computer vision task like that, let’s consider how machine learning might be used to figure out the equation of a line as we worked out earlier.

How Can a Machine Learn?

Given the preceding scenario, where you as a programmer figured out the rules that make up a line, and the computer implemented them, let’s now see how a machine learning approach would be different.

Let’s start by understanding how machine learning code is structured. While this is very much a “Hello World” problem, the overall structure of the code is very similar to what you’d see even in far more complex ones.

I like to draw a high-level architecture outlining the use of machine learning to solve a problem like this. Remember, in this case, we’ll have x and y values, so we want to figure out the W and B so that we have a line equation; once we have that equation, we can then get new y values given x ones.

Step 1: Guess the answer

Yes, you read that right. To begin with, we have no idea what the answer might be, so a guess is as good as any other answer. In real terms this means we’ll pick random values for W and B. We’ll loop back to this step with more intelligence a little later, so subsequent values won’t be random, but we’ll start purely randomly. So, for example, let’s assume that our first “guess” is that W = 10 and B = 5.

Step 2: Measure the accuracy of our guess

Now that we have values for W and B, we can use these against our known data to see just how good, or how bad, those guesses are. So, we can use y = 10x + 5 to figure out a y for each of our x values, compare that y against the “correct” value, and use that to derive how good or how bad our guess is. Obviously, for this situation our guess is really bad because our numbers would be way off. We’ll go into detail on that shortly, but for now, we realize that our guess is really bad, and we have a measure of how bad. This is often called the loss.
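To make "how bad" concrete, here is a minimal sketch that measures the guess W = 10, B = 5 against the two known points from earlier. It assumes mean squared error as the measure, which is the loss the Keras example later in this chapter also uses. The function name is illustrative.

```python
def mean_squared_error(points, W, B):
    """Average of the squared differences between guessed and known y values."""
    total = 0.0
    for x, y in points:
        guess = W * x + B
        total += (guess - y) ** 2
    return total / len(points)

points = [(2, 3), (3, 5)]  # the two known points on the line

print(mean_squared_error(points, W=10, B=5))   # 692.0, a very bad guess
print(mean_squared_error(points, W=2, B=-1))   # 0.0, the correct line
```

Squaring the differences keeps positive and negative errors from canceling out and penalizes large misses more heavily than small ones.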

Step 3: Optimize our guess

Now that we have a guess, and we have intelligence about the results of that guess (the loss), we have information that can help us create a new and better guess. This process is called optimization. If you’ve looked at any AI coding or training in the past and it was heavy on mathematics, it’s likely you were looking at optimization. Here’s where fancy calculus, in a process called gradient descent, can be used to help make a better guess. Optimization techniques like this figure out ways to make small adjustments to your parameters that drive towards minimal error. I’m not going to go into detail on that here, and while it’s a useful skill to understand how optimization works, the truth is that frameworks like TensorFlow implement these techniques for you so you can just go ahead and use them. In time, it’s worth digging into them for more sophisticated models, allowing you to tweak their learning behavior. But for now, you’re safe just using a built-in optimizer. Once you’ve done this, you simply go back to step 1, this time with the improved guess rather than a random one. Repeated over many loops, this process gradually homes in on the parameters W and B.

And that’s why this process is called machine learning. Over time, by making guesses, figuring out how good or how bad that guess might be, optimizing the next guess based on that intel, and then repeating it, the computer will “learn” the parameters for W and B (or indeed anything else), and from there, it will figure out the rules that make up our line. Visually, this might look like Figure 1-4.

Figure 1-4. The machine learning algorithm
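The guess, measure, optimize loop described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not what TensorFlow does internally: it uses plain (full-batch) gradient descent, a hand-picked `learning_rate` of 0.05, and six sample points on the line y = 2x − 1 (the same values the Keras example later in this chapter uses).

```python
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-3.0, -1.0, 1.0, 3.0, 5.0, 7.0]

W, B = 10.0, 5.0        # Step 1: the initial guess from the text
learning_rate = 0.05    # assumed step size, chosen by hand for this data
n = len(xs)

for step in range(500):
    # Step 2: measure the guess (mean squared error over the known points)
    errors = [(W * x + B) - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n

    # Step 3: optimize. The gradients say how the loss changes as W and B change,
    # so stepping against them produces a slightly better guess.
    grad_W = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_B = 2 * sum(errors) / n
    W -= learning_rate * grad_W
    B -= learning_rate * grad_B

print(W, B)  # close to 2 and -1
```

Nothing in the loop knows the formula for a slope or an intercept. It only knows how wrong the current guess is and which direction reduces that error.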

Implementing machine learning in code

That’s a lot of description, and a lot of theory. Let’s now take a look at what this would look like in code, so you can see it running for yourself. A lot of this code may look alien to you at first, but you’ll get the hang of it over time. I like to call this the “Hello World” of machine learning, as you use a very basic neural network (which I’ll explain a little later) to “learn” the parameters W and B for a line when given a few points on the line.

Here’s the code (a full notebook with this code sample can be found in the GitHub for this book):

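import numpy as np
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense

# One layer containing one neuron that takes a single input value
model = Sequential([Dense(units=1, input_shape=[1])])
# Stochastic gradient descent optimizer, mean squared error loss
model.compile(optimizer='sgd', loss='mean_squared_error')
# Six known points on the line
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)
ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0], dtype=float)
# Run the guess, measure, optimize loop 500 times
model.fit(xs, ys, epochs=500)
print(model.predict(np.array([10.0])))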

Note

This is written using the TensorFlow Keras APIs. Keras is an open source framework designed to make definition and training of models easier with a high level API. It became tightly integrated into TensorFlow in 2019 with the release of TensorFlow 2.0.

Let’s explore this line by line.

First of all is the concept of a model. When creating code that learns details about data, we often use the term “model” to define the resultant object. A model, in this case, is roughly analogous to the get_y() function from the coded example earlier. What’s different here is that the model doesn’t need to be given the W and the B. It will figure them out for itself based on the given data, so you can just ask it for a y and give it an x, and it will give you its answer.

So our first line of code looks like this—it’s defining the model:

model = Sequential([Dense(units=1, input_shape=[1])])

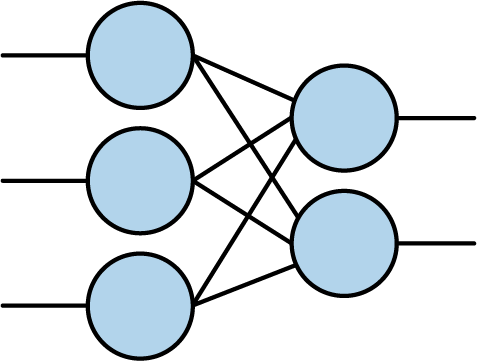

But what’s the rest of the code? Well, let’s start with the word Dense, which you can see within the first set of parentheses. You’ve probably seen pictures of neural networks that look a little like Figure 1-5.

Figure 1-5. Basic neural network

You might notice that in Figure 1-5, each circle (or neuron) on the left is connected to each neuron on the right. Every neuron in one layer is connected to every neuron in the next, in a dense manner. Hence the name Dense. Also, there are three stacked neurons on the left, and two stacked neurons on the right, and these form very distinct “layers” of neurons in sequence, where the first “layer” has three neurons, and the second has two neurons.
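To see what "densely connected" means numerically, here is a minimal sketch of the calculation a dense layer performs: each unit computes a weighted sum of every input plus a bias. The function name and the weight values are made up for illustration, the left-hand layer’s outputs are treated as the inputs, and activation functions are left out.

```python
def dense_forward(inputs, weights, biases):
    """Compute one output per unit: a weighted sum of every input plus a bias."""
    outputs = []
    for unit in range(len(biases)):
        total = biases[unit]
        for i, value in enumerate(inputs):
            total += value * weights[i][unit]
        outputs.append(total)
    return outputs

# Three values feeding two units: 3 x 2 = 6 connections, one weight each.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0],
           [0.25, 0.0],
           [1.0, 2.0]]   # weights[i][unit], illustrative values only
biases = [0.0, 1.0]

print(dense_forward(inputs, weights, biases))  # [4.0, 6.0]
```

With a single input and a single unit, `dense_forward([x], [[W]], [B])` returns `[W * x + B]`, which is exactly the line equation from earlier.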

So let’s go back to the code:

model = Sequential([Dense(units=1, input_shape=[1])])

This code is saying that we want a sequence of layers (Sequential), and within the parentheses, we will define those sequences of layers. The first in the sequence will be Dense, indicating a neural network like that in Figure 1-5. There are no other layers defined, so our Sequential just has one layer. This layer has only one unit, indicated by the units=1 parameter, and the input shape to that unit is just a single value.

So our neural network will look like Figure 1-6.

Figure 1-6. Simplest possible neural network

This is why I like to call this the “Hello World” of neural networks. It has one layer, and that layer has one neuron. That’s it. So, with that line of code, we’ve now defined our model architecture. Let’s move on to the next line:

model.compile(optimizer='sgd', loss='mean_squared_error')

Here we are specifying built-in functions to calculate the loss (remember step 2, where we wanted to see how good or how bad our guess was) and the optimizer (step 3, where we generate a new guess), so that we can improve on the parameters within the neuron for W and B.

In this case 'sgd' stands for “stochastic gradient descent,” which is beyond the scope of this book; in summary, it uses calculus alongside the mean squared error loss to figure out how to minimize loss, and once loss is minimized, we should have parameters that are accurate.

Next, let’s define our data. Two points may not be enough, so I expanded it to six points for this example:

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)
ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0], dtype=float)

The np stands for “NumPy,” a Python library commonly used in data science and machine learning that makes handling of data very straightforward. You can learn more about NumPy at https://numpy.org.

We’ll create an array of x values and their corresponding y values, so that when x is −1, y is −3, when x is 0, y is −1, and so on. A quick inspection shows that the relationship y = 2x − 1 holds for these values.
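If you would rather not check the values by eye, a few lines of Python can confirm the relationship. This sketch uses plain lists instead of NumPy arrays so that it runs with no extra dependencies.

```python
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-3.0, -1.0, 1.0, 3.0, 5.0, 7.0]

# Check every (x, y) pair against the rule y = 2x - 1.
for x, y in zip(xs, ys):
    assert y == 2 * x - 1, f"({x}, {y}) is not on the line"

print("All six points satisfy y = 2x - 1")
```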

Next let’s do the loop that we spoke about earlier: make a guess, measure how good or how bad that guess is, optimize for a new guess, and repeat. In TensorFlow parlance this is often called fitting. We have x’s and y’s, and we want to fit the x’s to the y’s, or, in other words, figure out the rule that gives us the correct y for a given x using the examples we have. The epochs=500 parameter simply indicates that we’ll do the loop 500 times:

model.fit(xs, ys, epochs=500)

When you run code like this (you’ll see how to do this later in this chapter if you aren’t already familiar with it), you’ll see output like the following:

Epoch 1/500
1/1 [==============================] - 0s 1ms/step - loss: 32.4543
Epoch 2/500
1/1 [==============================] - 0s 1ms/step - loss: 25.8570
Epoch 3/500
1/1 [==============================] - 0s 1ms/step - loss: 20.6599
Epoch 4/500
1/1 [==============================] - 0s 2ms/step - loss: 16.5646
Epoch 5/500
1/1 [==============================] - 0s 1ms/step - loss: 13.3362

Note the loss value. The unit doesn’t really matter, but what does is that it is getting smaller. Remember the lower the loss, the better your model will perform, and the closer its answers will be to what you expect. So the first guess was measured to have a loss of 32.4543, but by the fifth guess this was reduced to 13.3362.

If we then look at the last 5 epochs of our 500, and explore the loss:

Epoch 496/500
1/1 [==============================] - 0s 916us/step - loss: 5.7985e-05
Epoch 497/500
1/1 [==============================] - 0s 1ms/step - loss: 5.6793e-05
Epoch 498/500
1/1 [==============================] - 0s 2ms/step - loss: 5.5626e-05
Epoch 499/500
1/1 [==============================] - 0s 1ms/step - loss: 5.4484e-05
Epoch 500/500
1/1 [==============================] - 0s 4ms/step - loss: 5.3364e-05

It’s a lot smaller, on the order of 5.3 × 10⁻⁵.

This is indicating that the values of W and B that the neuron has figured out are only off by a tiny amount. It’s not zero, so we shouldn’t expect the exact correct answer. For example, assume we give it x = 10, like this:

print(model.predict(np.array([10.0])))

The answer won’t be 19, but a value very close to 19, and it’s usually about 18.98. Why? Well, there are two main reasons. The first is that neural networks like this deal with probabilities, not certainties, so that the W and B that it figured out are ones that are highly probable to be correct but may not be 100% accurate. The second reason is that we only gave six points to the neural network. While those six points are linear, that’s not proof that every other point that we could possibly predict is necessarily on that line. The data could skew away from that line...there’s a very low probability that this is the case, but it’s nonzero. We didn’t tell the computer that this was a line, we just asked it to figure out the rule that matched the x’s to the y’s, and what it came up with looks like a line but isn’t guaranteed to be one.

This is something to watch out for when dealing with neural networks and machine learning—you will be dealing with probabilities like this!

There’s also the hint in the method name on our model—notice that we didn’t ask it to calculate the y for x = 10.0, but instead to predict it. In this case a prediction (often called an inference) is reflective of the fact that the model will try to figure out what the value will be based on what it knows, but it may not always be correct.

Comparing Machine Learning with Traditional Programming

Referring back to Figure 1-3 for traditional programming, let’s now update it to show the difference between machine learning and traditional programming given what you just saw. Earlier we described traditional programming as follows: you figure out the rules for a given scenario, express them in code, have that code act on data, and get answers out. Machine learning is very similar, except that some of the process is reversed. See Figure 1-7.

Figure 1-7. From traditional programming to machine learning

As you can see, the key difference here is that with machine learning you do not figure out the rules! Instead, you provide the answers and the data, and the machine figures out the rules for you. In the preceding example, we gave it the correct y values (aka the answers) for some given x values (aka the data), and the computer figured out the rules that fit the x to the y. We didn’t do any geometry, slope calculation, intercept calculation, or anything like that. The machine figured out the patterns that matched the x’s to the y’s.
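The "data and answers in, rules out" shape can be shown without a neural network at all. The sketch below swaps in a different technique, ordinary least squares for a single input, purely because it fits in a few lines. It is not what the Keras model does, and the `fit_line` name is an assumption made for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: returns (W, B)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how y varies together with x, divided by how much x varies.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    W = covariance / variance
    B = mean_y - W * mean_x
    return W, B

xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]    # the data
ys = [-3.0, -1.0, 1.0, 3.0, 5.0, 7.0]   # the answers
W, B = fit_line(xs, ys)                 # the rules come out

print(W, B)  # 2.0 -1.0
```

The caller never states the rule y = 2x − 1. It supplies examples and receives the parameters that best explain them, which is the same contract `model.fit` offers for far more complex models.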

That’s the core and important difference between machine learning and traditional programming, and it’s the cause of all of the excitement around machine learning because it opens up whole new scenarios of application. One example of this is computer vision—as we discussed earlier, trying to write the rules to figure out the difference between a T-shirt and a shoe would be much too difficult to do. But having a computer figure out how one matches to another makes this scenario possible, and from there, scenarios that are more important—such as interpreting X-rays or other medical scans, detecting atmospheric pollution, and a whole lot more—become possible. Indeed, research has shown that in many cases using these types of algorithms along with adequate data has led to computers being as good as, and sometimes better than, humans at particular tasks. For a bit of fun, check out this blog post about diabetic retinopathy—where researchers at Google trained a neural network on pre-diagnosed images of retinas and had the computer figure out what determines each diagnosis. The computer, over time, became as good as the best of the best at being able to diagnose the different types of diabetic retinopathy!

Building and Using Models on Mobile

Here you saw a very simple example of how you transition from rules-based programming to ML to solve a problem. But it’s not much use solving a problem if you can’t get it into your user’s hands, and with ML models on mobile devices running Android or iOS, you’ll do exactly that!

It’s a complicated and varied landscape, and in this book we’ll aim to make that easier for you, through a number of different methods.

For example, you might have a turnkey solution available to you, where an existing model will solve the problem for you, and you just want to learn how to do that. We’ll cover that for scenarios like face detection, where a model will detect faces in pictures for you, and you want to integrate that into your app.

Additionally, there are many scenarios where you don’t need to build a model from scratch, figuring out the architecture and going through long and laborious training. A technique called transfer learning can often be used, where you take parts of preexisting models and repurpose them. For example, Big Tech companies and researchers at top universities have access to data and computing power that you may not, and they have used that to build models. They’ve shared these models with the world so they can be reused and repurposed. You’ll explore that a lot in this book, starting in Chapter 2.

Of course, you may also have a scenario where you need to build your own model from scratch. This can be done with TensorFlow, but we’ll only touch on that lightly here, instead focusing on mobile scenarios. The partner book to this one, called AI and Machine Learning for Coders, focuses heavily on that scenario, teaching you from first principles how models for various scenarios can be built from the ground up.

Summary

In this chapter, you got an introduction to artificial intelligence and machine learning. Hopefully it helped cut through the hype so you can see, from a programmer’s perspective, what this is really all about, and from there you can identify the scenarios where AI and ML can be extraordinarily useful and powerful. You saw, in detail, how machine learning works, and the overall “loop” that a computer uses to learn how to fit values to each other, matching patterns and “learning” the rules that put them together. From there, the computer could act somewhat intelligently, lending us the term “artificial” intelligence. You learned about the terminology related to being a machine learning or artificial intelligence programmer, including models, predictions, loss, optimization, inference, and more.

From Chapter 3 onwards you’ll be using examples of these to implement machine learning models into mobile apps. But first, let’s explore building some more models of our own to see how it all works. In Chapter 2 we’ll look into building some more sophisticated models for computer vision!
