In step 1, the agent went from F (state 1, or s) to B (state 2, or s').

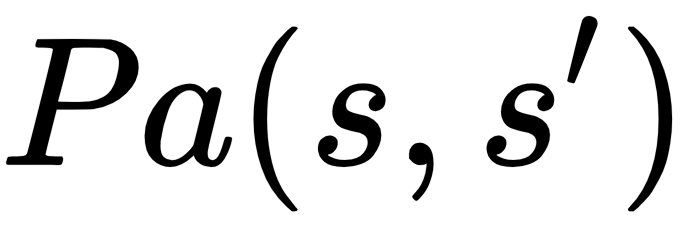

To do that, the agent followed a strategy, a policy represented by P. All of this can be shown in one mathematical expression, the MDP state transition function:

$$P_a(s, s')$$

P is the policy, the strategy the agent uses to go from F to B through action a. Going from F to B is a state transition, and the expression that describes it is called the state transition function, where:

- a is the action

- s is state 1 (F) and s' is state 2 (B)
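
The symbols listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the book's implementation: the table `P`, the function `transition_probability`, the action name `"move"`, and the probability value 1.0 are all hypothetical. The sketch reads the transition function in the usual MDP sense, as the probability that taking action a in state s leads to state s'.

```python
# Hypothetical transition table: P[(s, a, s_next)] -> probability.
# States "F" and "B" come from the text; the action name "move" and the
# probability 1.0 are illustrative assumptions.
P = {
    ("F", "move", "B"): 1.0,
}


def transition_probability(s, a, s_next):
    """Return the probability of reaching s_next from s by taking action a."""
    # Transitions that are not listed in the table are treated as impossible.
    return P.get((s, a, s_next), 0.0)


print(transition_probability("F", "move", "B"))  # 1.0
print(transition_probability("B", "move", "F"))  # 0.0
```

Keying the table on the triple (s, a, s') mirrors the three symbols in the expression, so adding another state or action only means adding another entry.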

This is the basis of an MDP. The reward (for a right or wrong move) is represented in the same way:

$$R_a(s, s')$$

That means R is the reward for the action of going from state ...