Chapter 4. The Simplest Way to Manage Data

At some point, IT staff realize that transferring data from one place to another can become complicated. A natural reaction to this problem is to write a script. Scripts are easy to write, and they start out easy to understand and manage. The problem with writing a script is that things change. The data, its purpose, the technology, the policies, and the people responsible all change over time. Eventually, the bulk of your time will be spent editing the script to keep it working when things change. So, the simplest way to manage data is to use standards and tools to build automated data pipelines that will make it easier to adapt to these inevitable changes.

The Data Pipeline

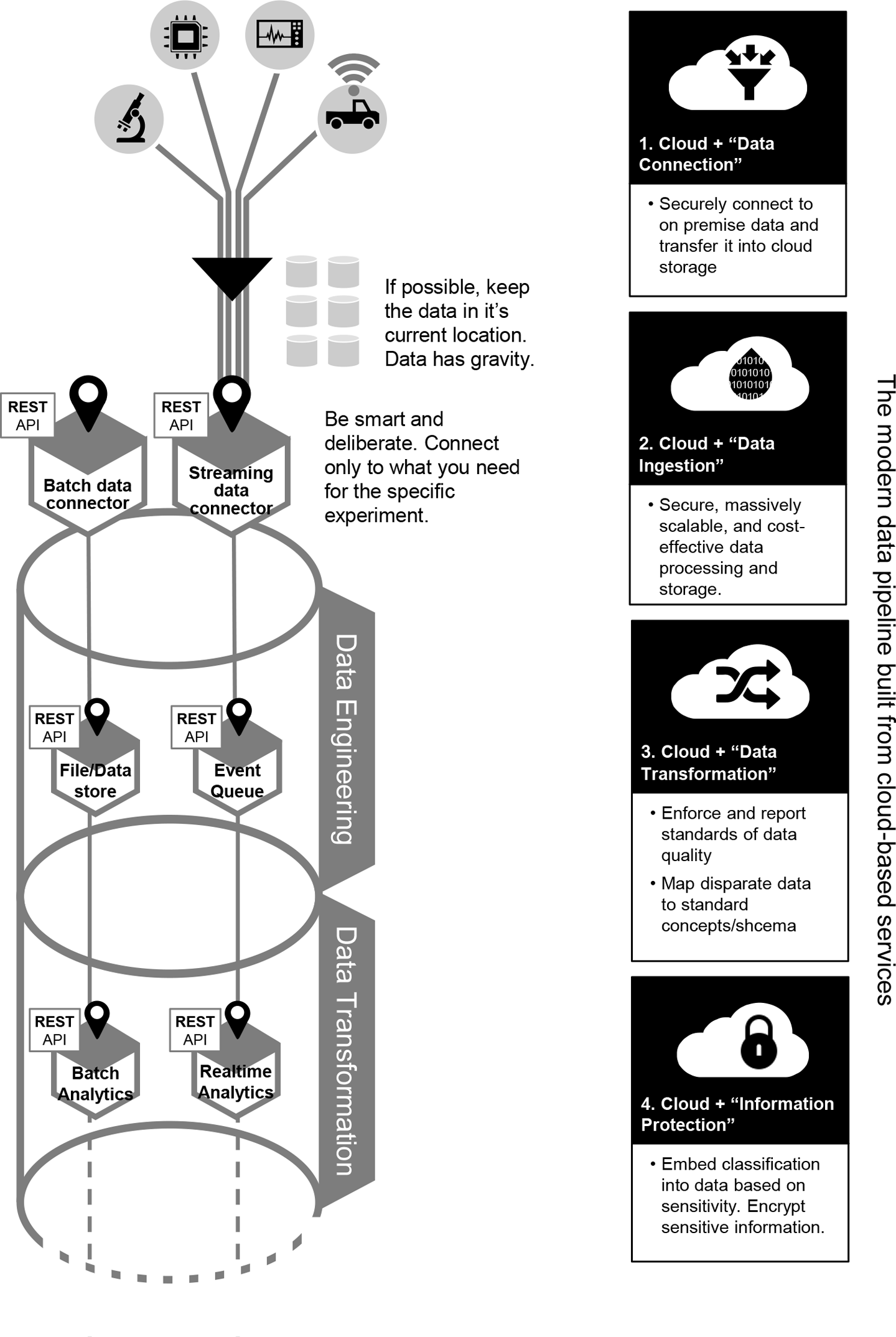

Make it standard practice to access remote data using web APIs. A standard interface makes it easy to adapt when the storage technology supporting the remote data source changes. Ideally, if data is accessed as a web service, you won’t notice if the storage mechanism changes from, say, a mainframe to a relational database.
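
As a minimal sketch of this idea, the Python function below reads records from a web API using only the standard library. The endpoint URL and the assumption that the service returns a JSON array of records are hypothetical. The calling code depends only on the HTTP and JSON contract, so nothing in it would need to change if the service moved from a mainframe to a relational database.

```python
import json
import urllib.request
from typing import Any, Callable

# Hypothetical endpoint: substitute the URL your data provider publishes.
CUSTOMERS_URL = "https://data.example.com/api/v1/customers"


def fetch_records(
    url: str,
    opener: Callable[..., Any] = urllib.request.urlopen,
    timeout: float = 30.0,
) -> list[dict[str, Any]]:
    """Fetch a JSON array of records from a web API.

    Callers depend only on the HTTP/JSON contract, not on the storage
    technology behind the service.
    """
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with opener(request, timeout=timeout) as response:
        payload = json.load(response)  # parse the response body as JSON
    if not isinstance(payload, list):
        raise ValueError("expected a JSON array of records")
    return payload
```

The `opener` parameter exists only so the function can be exercised without a network connection; in normal use you would simply call `fetch_records(CUSTOMERS_URL)`.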

Use a standard tool to schedule, execute, and monitor data transfer. If all goes well, the scope of your AI efforts (and your data needs) will grow over time. The number of data sources will increase. The response times and update intervals of data sources will change depending on demand. Using a single, automated data ingestion tool makes it easier to keep up with data pipeline operations, discover when data ingestion jobs are failing, and take corrective action.
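
Dedicated orchestration tools provide scheduling, retries, and alerting out of the box. To illustrate just the execution and monitoring part, here is a hypothetical, standard-library-only Python sketch that runs a set of ingestion jobs, retries failures with a linearly growing delay, and logs an alert for any job that still fails. Scheduling is assumed to happen elsewhere (for example, in cron or your orchestration tool), and the job names and retry settings are illustrative.

```python
import logging
import time
from dataclasses import dataclass
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ingestion")


@dataclass
class JobResult:
    name: str
    succeeded: bool
    attempts: int
    seconds: float
    error: Optional[str] = None


def run_job(name: str, job: Callable[[], None],
            max_attempts: int = 3, backoff: float = 5.0) -> JobResult:
    """Run one ingestion job, retrying with a linearly growing delay."""
    start = time.monotonic()
    error = None
    for attempt in range(1, max_attempts + 1):
        try:
            job()
            log.info("%s succeeded on attempt %d", name, attempt)
            return JobResult(name, True, attempt, time.monotonic() - start)
        except Exception as exc:  # record any failure, then retry
            error = repr(exc)
            log.warning("%s failed on attempt %d: %s", name, attempt, error)
            if attempt < max_attempts:
                time.sleep(backoff * attempt)
    return JobResult(name, False, max_attempts, time.monotonic() - start, error)


def run_all(jobs: dict[str, Callable[[], None]], **retry_options) -> list[JobResult]:
    """Run every job and log an alert for each one that ultimately failed."""
    results = [run_job(name, job, **retry_options) for name, job in jobs.items()]
    for result in results:
        if not result.succeeded:
            log.error("ALERT: %s failed after %d attempts: %s",
                      result.name, result.attempts, result.error)
    return results
```

Collecting every outcome in one place, rather than scattering retry logic across individual scripts, is what makes it easy to see at a glance which sources are failing.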

Run data cleaning and transformation code in a standard execution environment. If you’re going to build an AI, you’ll likely spend a lot of time cleaning and formatting training data. You’ll need to write code that maps disparate data to a standard model—code that, for example, recognizes that “Zip Code,” “Zip,” and “Postal Code” all represent the same concept. You’ll need to write code that imputes missing values, that joins the data, that reshapes the data, and that calculates new values. Running this code in a standard execution environment makes it easier to keep track of everything required to transform raw data into a usable source of machine-learning insight.
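
The sketch below illustrates two of these cleaning steps in plain Python: mapping source-specific field names such as “Zip Code,” “Zip,” and “Postal Code” onto one canonical name, and imputing missing values with the median of the observed ones. The `CANONICAL_NAMES` table, the field names, and the choice of median imputation are assumptions for illustration; real pipelines often use a dataframe library and richer imputation strategies.

```python
from statistics import median
from typing import Any

# Hypothetical mapping from source-specific field names to a canonical model.
CANONICAL_NAMES = {
    "zip code": "postal_code",
    "zip": "postal_code",
    "postal code": "postal_code",
    "age": "age",
    "customer age": "age",
}


def normalize_keys(record: dict[str, Any]) -> dict[str, Any]:
    """Rename fields to canonical names; unrecognized fields pass through unchanged."""
    return {CANONICAL_NAMES.get(key.strip().lower(), key): value
            for key, value in record.items()}


def impute_median(records: list[dict[str, Any]], field_name: str) -> list[dict[str, Any]]:
    """Fill absent or None values of `field_name` with the median of observed values."""
    observed = [r[field_name] for r in records if r.get(field_name) is not None]
    if not observed:
        return records  # nothing to impute from
    fill = median(observed)
    return [r if r.get(field_name) is not None else {**r, field_name: fill}
            for r in records]


raw = [
    {"Zip Code": "02139", "Age": 34},
    {"Zip": "94103", "Customer Age": None},
    {"Postal Code": "10001", "age": 50},
]
clean = impute_median([normalize_keys(r) for r in raw], "age")
# clean[1] == {"postal_code": "94103", "age": 42.0}
```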

It’s even better if the execution environment is serverless. In a serverless environment, the cloud platform automatically provisions the servers that run your code and scales them with demand. This means that you don’t need to manage the computing resources needed to execute the code yourself. When the platform handles the compute infrastructure, you can spend more time writing and maintaining the code.
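
For illustration, a serverless transformation step is often just a single function that the platform invokes with an event. The sketch below follows the `handler(event, context)` shape used by AWS Lambda’s Python runtime; the event structure (`{"records": [...]}`) and the cleaning rules are hypothetical assumptions, and real triggers deliver differently structured events.

```python
from typing import Any


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Transformation step written as an AWS Lambda-style Python handler.

    The platform provisions, runs, and scales the servers that invoke this
    function. The {"records": [...]} event shape is a hypothetical convention.
    """
    records = event.get("records", [])
    cleaned = [
        # Normalize field names, e.g. "Zip Code" -> "zip_code".
        {key.strip().lower().replace(" ", "_"): value for key, value in record.items()}
        for record in records
        # Drop rows in which every value is missing or empty.
        if any(value not in (None, "") for value in record.values())
    ]
    return {"count": len(cleaned), "records": cleaned}
```

Notice that nothing in the function refers to servers, processes, or scaling; that is the part the platform takes over.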

You must protect the data you collect. Even if the algorithm itself does not directly disclose sensitive information, training it might require sensitive data. Every tool used in the AI pipeline should include built-in controls for protecting sensitive data, including the ability to encrypt the data, control access at the user level, and log exactly who accessed the data and when.
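
The following is a minimal sketch, in plain Python, of two of these controls: user-level access checks and an audit log recording who tried to read a dataset and when. The `GuardedDataset` class is hypothetical, and encryption is assumed to be provided by the storage layer rather than shown here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class GuardedDataset:
    """Hypothetical wrapper enforcing user-level read access with an audit trail.

    Encryption at rest and in transit is assumed to be handled by the
    storage layer and is not shown.
    """
    name: str
    rows: list[dict[str, Any]]
    readers: set[str]
    # Each entry: (UTC timestamp, user, dataset name, access granted?)
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def read(self, user: str) -> list[dict[str, Any]]:
        allowed = user in self.readers
        # Log every attempt, granted or denied, before acting on it.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), user, self.name, allowed))
        if not allowed:
            raise PermissionError(f"{user} may not read {self.name}")
        return [dict(row) for row in self.rows]  # return copies, not the stored rows
```

Denied attempts are logged as well as successful ones, so the log answers both “who accessed the data” and “who tried to.”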

Figure 4-1 shows how a modern data platform is integrated into the cloud.

Figure 4-1. The modern data pipeline built from cloud-based services

What Good Data Looks Like

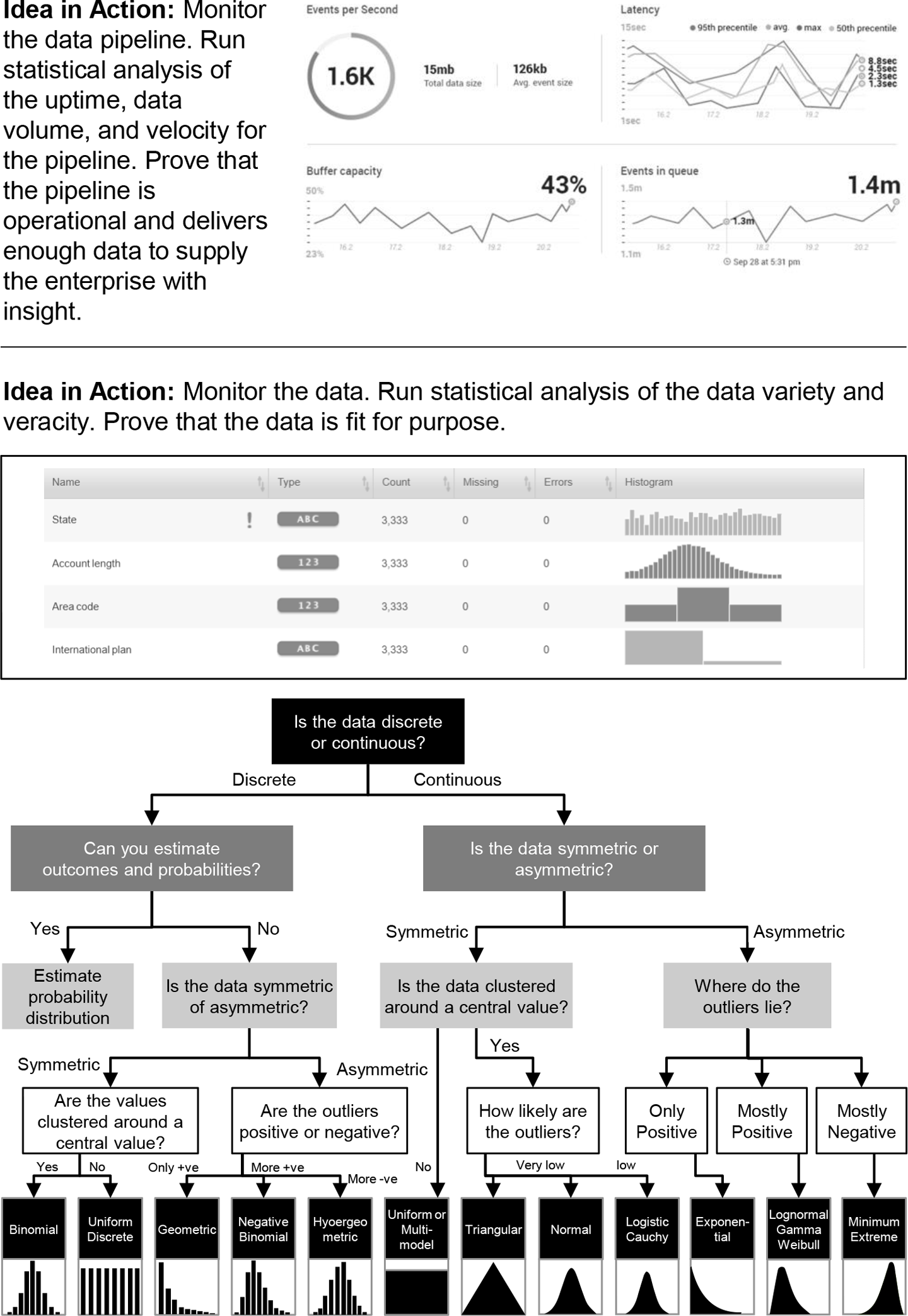

You must monitor the operation of the pipeline; this is usually the data engineer’s job. Again, if all goes well, managing the data pipelines will become an important part of business operations. The data pipeline tools should include monitoring features that make managing pipeline operations as easy as possible. Track how often the pipeline runs, how many resources it consumes, and how much usable data it produces.

Also monitor the data itself to ensure that it has the required quality. What, exactly, defines quality in a dataset will depend on the task. The simplest way to monitor data quality is to compare the statistical distributions of the raw data attributes to expectations and ideals. For example, is the age of your population skewed toward youth, or are customer transaction amounts suspiciously uniform?
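
One simple way to compare observed distributions with expectations is sketched below. It bins a numeric column, compares the share of values in each bin with an expected share, and flags columns whose coefficient of variation (standard deviation divided by the absolute mean) is so low that the values look suspiciously uniform. The function names, bin edges, expected shares, and tolerances are all hypothetical; in practice they would come from domain knowledge or a trusted historical baseline.

```python
from bisect import bisect_right
from statistics import mean, pstdev
from typing import Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Share of values per bin: below edges[0], [edges[0], edges[1]), ..., at or above edges[-1].

    `edges` must be sorted in ascending order.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def quality_warnings(values: Sequence[float], edges: Sequence[float],
                     expected_shares: Sequence[float], share_tolerance: float = 0.10,
                     min_cv: float = 0.05) -> list[str]:
    """Compare a numeric column's distribution with expectations; return any warnings."""
    if len(expected_shares) != len(edges) + 1:
        raise ValueError("need one expected share per bin")
    if not values:
        return ["column has no values"]
    warnings = []
    observed_shares = bin_shares(values, edges)
    for i, (observed, expected) in enumerate(zip(observed_shares, expected_shares)):
        if abs(observed - expected) > share_tolerance:
            warnings.append(f"bin {i}: observed share {observed:.2f}, expected {expected:.2f}")
    m = mean(values)
    # A tiny coefficient of variation means the values barely vary.
    if m != 0 and pstdev(values) / abs(m) < min_cv:
        warnings.append(f"values look suspiciously uniform (coefficient of variation < {min_cv})")
    return warnings
```

Applied to an age column with `edges=[30, 60]` and `expected_shares=[0.4, 0.4, 0.2]`, for example, the check would flag a population in which far more than 40 percent of people are under 30.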

Figure 4-2 shows data flowing down through an example pipeline.

Figure 4-2. What good data (and data pipelines) look like
