Chapter 3. Data Ingestion

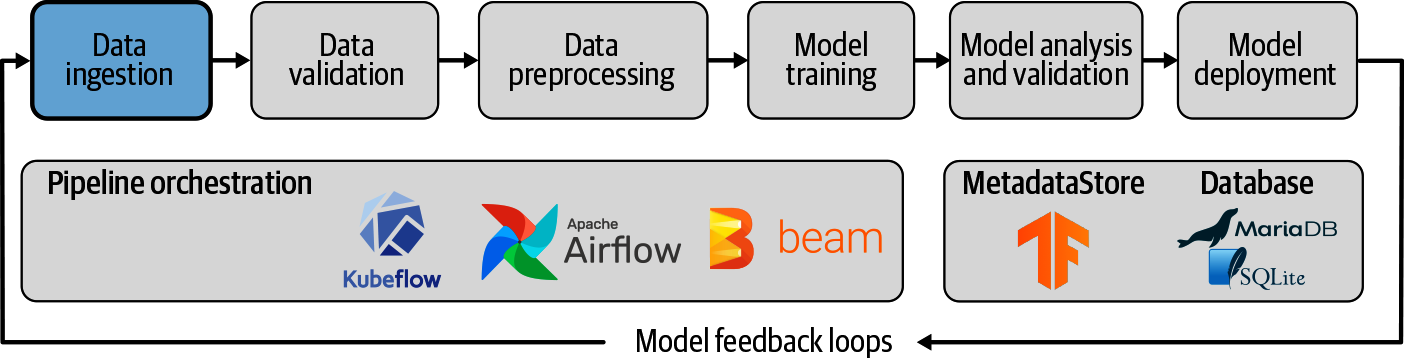

With the basic TFX setup and the ML MetadataStore in place, this chapter focuses on how to ingest your datasets into a pipeline so that the various components can consume them, as shown in Figure 3-1.

Figure 3-1. Data ingestion as part of ML pipelines

TFX provides components to ingest data from files or services. In this chapter, we outline the underlying concepts, explain how to split datasets into training and evaluation subsets, and demonstrate how to combine multiple data exports into one all-encompassing dataset. We then discuss strategies for ingesting different forms of data (structured, text, and images) that have proven helpful in previous use cases.

Concepts for Data Ingestion

In this step of our pipeline, we read data files or request the data for our pipeline run from an external service (e.g., Google Cloud BigQuery). Before passing the ingested dataset to the next component, we divide the available data into separate datasets (e.g., training and validation datasets) and then convert the datasets into TFRecord files containing the data represented as tf.Example data structures.
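
To make the splitting step concrete, here is a minimal sketch in plain Python of one common way to divide records into training and evaluation subsets: hash a stable key for each record and assign it to a bucket. This is an illustration of the idea only, not TFX's implementation; the helper names `assign_split` and `split_rows`, the in-memory `RAW_CSV` data, and the 2:1 ratio are all assumptions chosen for the example. In a real pipeline, the ingestion component would also perform the conversion to TFRecord files, which is omitted here.

```python
import csv
import hashlib
import io

# Hypothetical split configuration: two parts training, one part evaluation.
BUCKETS = (("train", 2), ("eval", 1))


def assign_split(record_key: str, buckets=BUCKETS) -> str:
    """Deterministically map a record key to a split name via hashing."""
    total = sum(weight for _, weight in buckets)
    digest = hashlib.sha256(record_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total
    upper = 0
    for name, weight in buckets:
        upper += weight
        if bucket < upper:
            return name
    raise AssertionError("unreachable: bucket is always below total")


def split_rows(rows, key_field: str, buckets=BUCKETS):
    """Group rows (dicts) into splits based on the hash of one field."""
    splits = {name: [] for name, _ in buckets}
    for row in rows:
        splits[assign_split(row[key_field], buckets)].append(row)
    return splits


# Made-up data standing in for a CSV export read from disk.
RAW_CSV = "id,text\n" + "".join(f"{i},example {i}\n" for i in range(300))
rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
splits = split_rows(rows, key_field="id")

print({name: len(subset) for name, subset in splits.items()})
```

Because the assignment depends only on the record key, rerunning the split on the same data places every record in the same subset, which keeps evaluation data from leaking into training across pipeline runs.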
