Chapter 4. Building Reactive Microservice Systems

The previous chapter focused on building microservices, but this chapter is all about building systems. Again, one microservice doesn’t make a service—they come in systems. When you embrace the microservice architectural style, you will have dozens of microservices. Managing two microservices, as we did in the last chapter, is easy. The more microservices you use, the more complex the application becomes.

First, we will learn how service discovery can be used to address location transparency and mobility. Then, we will discuss resilience and stability patterns such as timeouts, circuit breakers, and failovers.

Service Discovery

When you have a set of microservices, the first question you have to answer is: how will these microservices locate each other? In order to communicate with another peer, a microservice needs to know its address. As we did in the previous chapter, we could hard-code the address (event bus address, URLs, location details, etc.) in the code or have it externalized into a configuration file. However, this solution does not enable mobility. Your application will be quite rigid and the different pieces won’t be able to move, which contradicts what we try to achieve with microservices.

Client- and Server-Side Service Discovery

Microservices need to be mobile but addressable. A consumer needs to be able to communicate with a microservice without knowing its exact location in advance, especially since this location may change over time. Location transparency provides elasticity and dynamism: the consumer may call different instances of the microservice using a round-robin strategy, and between two invocations the microservice may have been moved or updated.

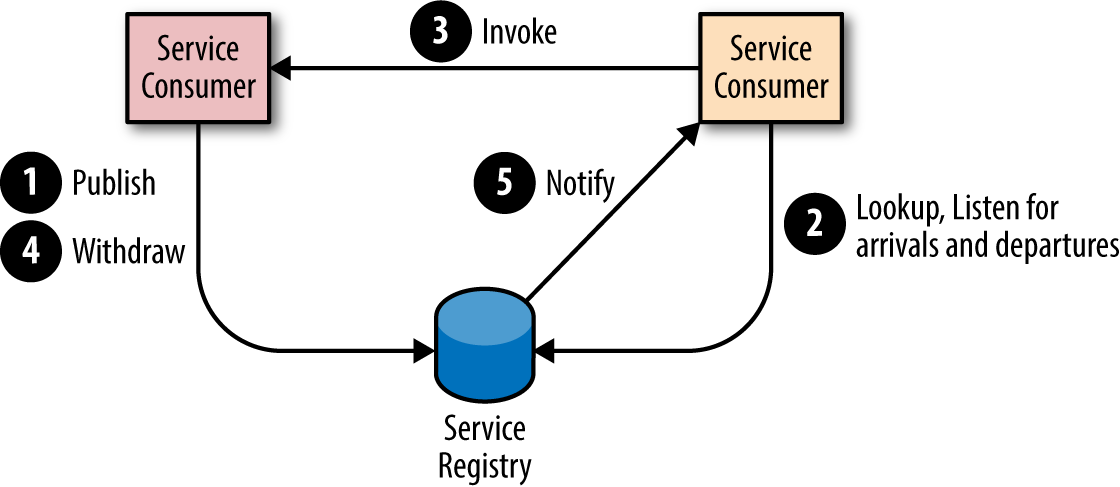

Location transparency can be addressed by a pattern called service discovery. Each microservice should announce how it can be invoked and its characteristics, including its location of course, but also other metadata such as security policies or versions. These announcements are stored in the service discovery infrastructure, which is generally a service registry provided by the execution environment. A microservice can also decide to withdraw its service from the registry. A microservice looking for another service can also search this service registry to find matching services, select the best one (using any kind of criteria), and start using it. These interactions are depicted in Figure 4-1.

Figure 4-1. Interactions with the service registry
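To make these interactions concrete, here is a minimal sketch of a registry written with the JDK only. It is not the Vert.x service discovery API: the class `InMemoryServiceRegistry`, its nested `ServiceRecord`, and the method names are hypothetical, and a real registry would be distributed rather than held in the memory of one process.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Predicate;
import java.util.stream.Collectors;

// Hypothetical in-memory registry, for illustration only. Real registries
// are distributed and also notify consumers of arrivals and departures.
final class InMemoryServiceRegistry {

  // An announcement: the service name, its location, and free-form metadata
  // (for example a version or a security policy).
  static final class ServiceRecord {
    private final String name;
    private final String location;
    private final Map<String, String> metadata;

    ServiceRecord(String name, String location, Map<String, String> metadata) {
      this.name = name;
      this.location = location;
      this.metadata = Collections.unmodifiableMap(metadata);
    }

    String name() { return name; }
    String location() { return location; }
    Map<String, String> metadata() { return metadata; }
  }

  private final List<ServiceRecord> records = new CopyOnWriteArrayList<>();

  // A provider announces how it can be invoked.
  void publish(ServiceRecord record) {
    records.add(record);
  }

  // A provider withdraws its announcement; returns false if it was unknown.
  boolean withdraw(ServiceRecord record) {
    return records.remove(record);
  }

  // A consumer searches for services matching any kind of criteria.
  List<ServiceRecord> lookup(Predicate<ServiceRecord> filter) {
    return records.stream().filter(filter).collect(Collectors.toList());
  }
}
```

A consumer would call something like `registry.lookup(r -> r.name().equals("hello"))` and then pick one of the returned records using its own selection criteria.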

Two types of patterns can be used to consume services. When using client-side service discovery, the consumer service looks for a service based on its name and metadata in the service registry, selects a matching service, and uses it. The reference retrieved from the service registry contains a direct link to a microservice. As microservices are dynamic entities, the service discovery infrastructure must not only allow providers to publish their services and consumers to look for services, but also provide information about the arrivals and departures of services. When using client-side service discovery, the service registry can take various forms: a distributed data structure, a dedicated infrastructure such as Consul, or an inventory service such as Apache ZooKeeper or Redis.
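With client-side discovery, the selection logic lives in the consumer. The following sketch shows one possible selection strategy, the round-robin strategy mentioned earlier, combined with arrival and departure notifications. The class `RoundRobinSelector` and its callbacks are hypothetical names chosen for this illustration, and the code relies only on the JDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client-side selector: it keeps the locations announced by a
// registry and hands them out in round-robin order.
final class RoundRobinSelector {
  private final CopyOnWriteArrayList<String> locations = new CopyOnWriteArrayList<>();
  private final AtomicInteger next = new AtomicInteger();

  // Called when the registry reports the arrival of an instance.
  void onArrival(String location) {
    locations.addIfAbsent(location);
  }

  // Called when the registry reports the departure of an instance.
  void onDeparture(String location) {
    locations.remove(location);
  }

  // Returns the next location in turn, or empty when no instance is known.
  Optional<String> select() {
    List<String> snapshot = new ArrayList<>(locations);
    if (snapshot.isEmpty()) {
      return Optional.empty();
    }
    // floorMod keeps the index valid even if the counter overflows.
    int index = Math.floorMod(next.getAndIncrement(), snapshot.size());
    return Optional.of(snapshot.get(index));
  }
}
```

Because the selector works on a snapshot of the known locations, an instance that departs between two invocations is simply skipped on the next call.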

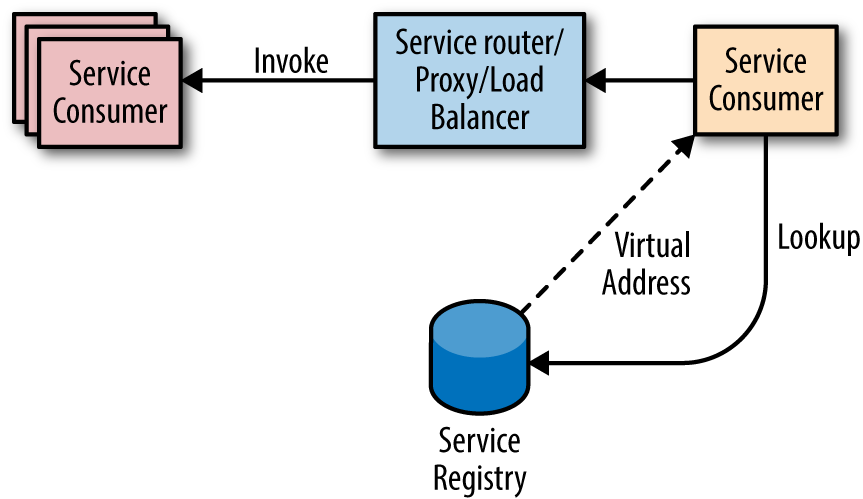

Alternatively, you can use server-side service discovery and let a load balancer, a router, a proxy, or an API gateway manage the discovery for you (Figure 4-2). The consumer still looks for a service based on its name and metadata but retrieves a virtual address. When the consumer invokes the service, the request is routed to the actual implementation. You would use this mechanism on Kubernetes or when using AWS Elastic Load Balancer.

Figure 4-2. Server-side service discovery

Vert.x Service Discovery

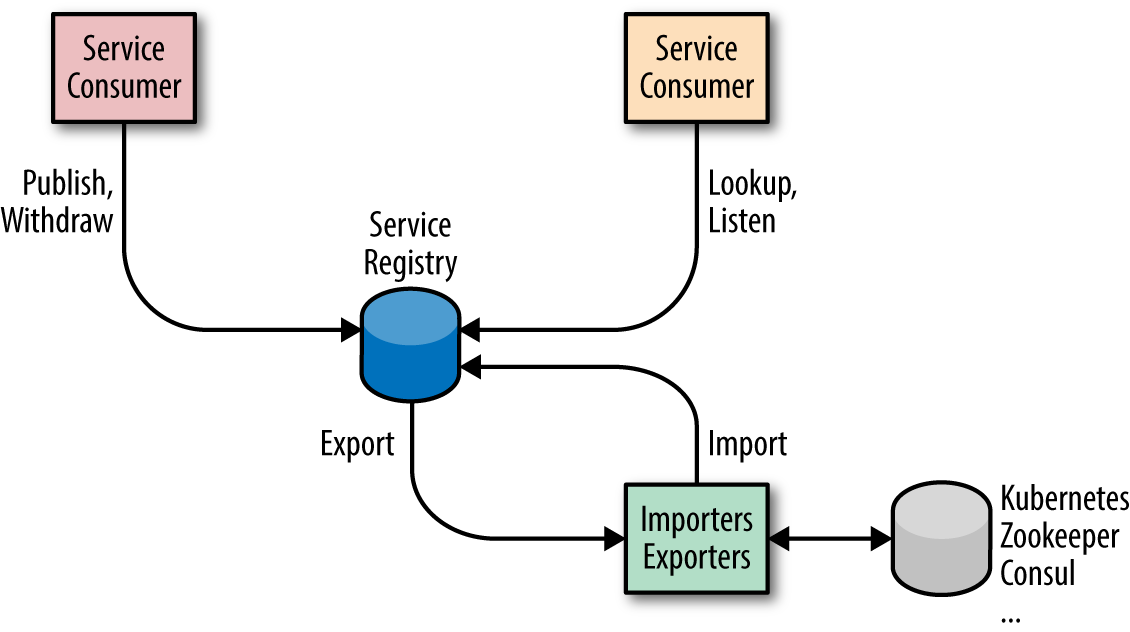

Vert.x provides an extensible service discovery mechanism. You can use client-side or server-side service discovery using the same API. The Vert.x service discovery can import or export services from many types of service discovery infrastructures such as Consul or Kubernetes (Figure 4-3). It can also be used without any dedicated service discovery infrastructure. In this case, it uses a distributed data structure shared on the Vert.x cluster.

Figure 4-3. Import and export of services from and to other service discovery mechanisms

You can retrieve services by types to get a configured service client ready to be used. A service type can be an HTTP endpoint, an event bus address, a data source, and so on. For example, if you want to retrieve the HTTP endpoint named hello that we implemented in the previous chapter, you would write the following code:

    // We create an instance of service discovery
    ServiceDiscovery discovery = ServiceDiscovery.create(vertx);
    // As we know we want to use an HTTP microservice, we can
    // retrieve a WebClient already configured for the service
    HttpEndpoint
      .rxGetWebClient(discovery,
        // This method is a filter to select the service
        rec -> rec.getName().endsWith("hello")
      )
      .flatMap(client ->
        // We have retrieved the WebClient, use it to call
        // the service
        client.get("/").as(BodyCodec.string()).rxSend())
      .subscribe(response -> System.out.println(response.body()));

The retrieved WebClient is configured with the service location, which means you can immediately use it to call the service. If your environment is using client-side discovery, the configured URL targets a specific instance of the service. If you are using server-side discovery, the client uses a virtual URL.

Depending on your runtime infrastructure, you may have to register your service. But when using server-side service discovery, you usually don’t have to do this since you declare your service when it is deployed. Otherwise, you need to publish your service explicitly. To publish a service, you need to create a Record containing the service name, location, and metadata:

    // We create the service discovery object
    ServiceDiscovery discovery = ServiceDiscovery.create(vertx);
    vertx.createHttpServer()
      .requestHandler(req -> req.response().end("hello"))
      .rxListen(8083)
      .flatMap(
        // Once the HTTP server is started (we are ready to serve)
        // we publish the service.
        server -> {
          // We create a record describing the service and its
          // location (for HTTP endpoint)
          Record record = HttpEndpoint.createRecord(
            "hello",             // the name of the service
            "localhost",         // the host
            server.actualPort(), // the port
            "/"                  // the root of the endpoint
          );
          // We publish the service
          return discovery.rxPublish(record);
        }
      )
      .subscribe(rec -> System.out.println("Service published"));

Service discovery is a key component in a microservice infrastructure. It enables dynamism, location transparency, and mobility. When dealing with a small set of services, service discovery may look cumbersome, but it’s a must-have when your system grows. The Vert.x service discovery provides you with a single API regardless of the infrastructure and the type of service discovery you use. However, when your system grows, there is another variable that grows exponentially: failures.

Stability and Resilience Patterns

When dealing with distributed systems, failures are first-class citizens and you have to live with them. Your microservices must be aware that the services they invoke can fail for many reasons. Every interaction between microservices will eventually fail in some way, and you need to be prepared for that failure. Failure can take different forms, ranging from various network errors to semantic errors.

Managing Failures in Reactive Microservices

Reactive microservices are responsible for managing failures locally. They must avoid propagating the failure to another microservice. In other words, you should not delegate the hot potato to another microservice. Therefore, the code of a reactive microservice considers failures as first-class citizens.

The Vert.x development model makes failures a central entity. When using the callback development model, the Handlers often receive an AsyncResult as a parameter. This structure encapsulates the result of an asynchronous operation. In the case of success, you can retrieve the result. On failure, it contains a Throwable describing the failure:

    client.get("/")
      .as(BodyCodec.jsonObject())
      .send(ar -> {
        if (ar.failed()) {
          Throwable cause = ar.cause();
          // You need to manage the failure.
        } else {
          // It's a success
          JsonObject json = ar.result().body();
        }
      });

When using the RxJava APIs, failures can be handled in the subscribe method:

    client.get("/")
      .as(BodyCodec.jsonObject())
      .rxSend()
      .map(HttpResponse::body)
      .subscribe(
        json -> { /* success */ },
        err -> { /* failure */ }
      );

If a failure is produced in one of the observed streams, the error handler is called. You can also handle the failure earlier, avoiding the error handler in the subscribe method:

    client.get("/")
      .as(BodyCodec.jsonObject())
      .rxSend()
      .map(HttpResponse::body)
      .onErrorReturn(t -> {
        // Called if rxSend produces a failure
        // We can return a default value
        return new JsonObject();
      })
      .subscribe(json -> {
        // Always called, either with the actual result
        // or with the default value.
      });

Managing errors is not fun but it has to be done. The code of a reactive microservice is responsible for making an adequate decision when facing a failure. It also needs to be prepared to see its requests to other microservices fail.

Using Timeouts

When dealing with distributed interactions, we often use timeouts. A timeout is a simple mechanism that allows you to stop waiting for a response once you think it will not come. Well-placed timeouts provide failure isolation, ensuring the failure is limited to the microservice it affects and allowing you to handle the timeout and continue your execution in a degraded mode.

    client.get(path)
      .rxSend() // Invoke the service
      // We need to be sure to use the Vert.x event loop
      .subscribeOn(RxHelper.scheduler(vertx))
      // Configure the timeout, if no response, it publishes
      // a failure in the Observable
      .timeout(5, TimeUnit.SECONDS)
      // In case of success, extract the body
      .map(HttpResponse::bodyAsJsonObject)
      // Otherwise use a fallback result
      .onErrorReturn(t -> {
        // timeout or another exception
        return new JsonObject().put("message", "D'oh! Timeout");
      })
      .subscribe(json -> {
        System.out.println(json.encode());
      });

Timeouts are often used together with retries. When a timeout occurs, we can try again. Immediately retrying an operation after a failure has a number of effects, but only some of them are beneficial. If the operation failed because of a significant problem in the called microservice, it is likely to fail again if retried immediately. However, some kinds of transient failures can be overcome with a retry, especially network failures such as dropped messages. You can decide whether or not to reattempt the operation as follows:

    client.get(path)
      .rxSend()
      .subscribeOn(RxHelper.scheduler(vertx))
      .timeout(5, TimeUnit.SECONDS)
      // Configure the number of retries
      // here we retry only once.
      .retry(1)
      .map(HttpResponse::bodyAsJsonObject)
      .onErrorReturn(t -> {
        return new JsonObject().put("message", "D'oh! Timeout");
      })
      .subscribe(json -> System.out.println(json.encode()));

It’s also important to remember that a timeout does not imply an operation failure. In a distributed system, there are many reasons for failure. Let’s look at an example. You have two microservices, A and B. A is sending a request to B, but the response does not come in time and A gets a timeout. In this scenario, three types of failure could have occurred:

- The message between A and B has been lost, so the operation was never executed.
- The operation in B failed, so the operation has not completed its execution.
- The response message between B and A has been lost: the operation has been executed successfully, but A didn’t get the response.

This last case is often ignored and can be harmful. In this case, combining the timeout with a retry can break the integrity of the system. Retries should only be used with idempotent operations, that is, operations you can invoke multiple times without changing the result beyond the initial call. Before using a retry, always check that your system is able to handle reattempted operations gracefully.
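One common way to make an operation safe to retry is to have the consumer attach a unique identifier to each request and to have the provider remember the outcome per identifier. The sketch below illustrates this idea with the JDK only. It is an assumption made for this example, not something Vert.x does for you: the class `IdempotentAccount`, the `requestId` parameter, and the in-memory map are all hypothetical, and a real service would need durable storage and an eviction policy.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical provider-side handler (service B in the scenario above). It
// remembers the outcome of each request ID so that a retried request is not
// executed a second time.
final class IdempotentAccount {
  private final AtomicLong balance = new AtomicLong();
  private final Map<String, Long> processed = new ConcurrentHashMap<>();

  // Applies the deposit at most once per requestId and returns the balance
  // observed right after that deposit. A retry carrying the same requestId
  // gets the remembered result back without changing the balance again.
  long deposit(String requestId, long amount) {
    return processed.computeIfAbsent(requestId, id -> balance.addAndGet(amount));
  }

  long balance() {
    return balance.get();
  }
}
```

If A times out because the response was lost and then retries with the same identifier, B returns the stored result and the deposit is applied only once.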

Retry also makes the consumer wait even longer to get a response, which is not a good thing either. It is often better to return a fallback than to retry an operation too many times. In addition, continually hammering a failing service may not help it get back on track. These two concerns are managed by another resilience pattern: the circuit breaker.

Circuit Breakers

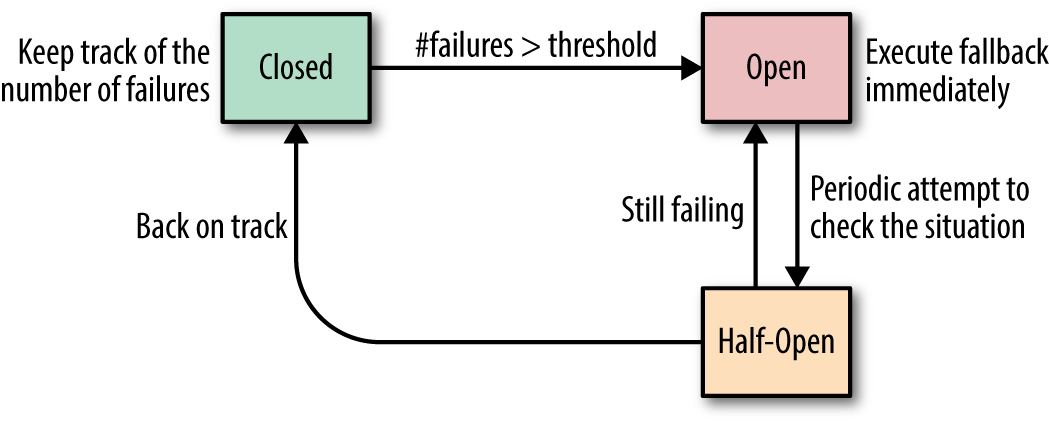

A circuit breaker is a pattern used to deal with repetitive failures. It protects a microservice from calling a failing service again and again. A circuit breaker is a three-state automaton that manages an interaction (Figure 4-4). It starts in a closed state in which the circuit breaker executes operations as usual. If the interaction succeeds, nothing happens. If it fails, however, the circuit breaker makes a note of the failure. Once the number of failures (or frequency of failures, in more sophisticated cases) exceeds a threshold, the circuit breaker switches to an open state. In this state, calls to the circuit breaker fail immediately without any attempt to execute the underlying interaction. Instead of executing the operation, the circuit breaker may execute a fallback, providing a default result. After a configured amount of time, the circuit breaker decides that the operation has a chance of succeeding, so it goes into a half-open state. In this state, the next call to the circuit breaker executes the underlying interaction. Depending on the outcome of this call, the circuit breaker resets and returns to the closed state, or returns to the open state until another timeout elapses.

Figure 4-4. Circuit breaker states
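The transitions between the three states can be sketched in a few lines of plain Java. This is neither the Hystrix nor the Vert.x implementation. The class `SimpleCircuitBreaker` is hypothetical, it counts consecutive failures rather than a failure frequency, it is not thread-safe, and it takes the current time as a parameter so that the transitions can be followed without waiting.

```java
import java.util.function.Supplier;

// Hypothetical, single-threaded sketch of the three-state automaton.
final class SimpleCircuitBreaker {
  enum State { CLOSED, OPEN, HALF_OPEN }

  private final int maxFailures;
  private final long resetTimeoutMillis;
  private State state = State.CLOSED;
  private int failures;
  private long openedAt;

  SimpleCircuitBreaker(int maxFailures, long resetTimeoutMillis) {
    this.maxFailures = maxFailures;
    this.resetTimeoutMillis = resetTimeoutMillis;
  }

  // Runs the operation if the circuit allows it, otherwise the fallback.
  <T> T execute(Supplier<T> operation, Supplier<T> fallback, long nowMillis) {
    if (state == State.OPEN) {
      if (nowMillis - openedAt < resetTimeoutMillis) {
        return fallback.get(); // fail fast: the operation is not attempted
      }
      state = State.HALF_OPEN; // reset timeout elapsed: allow one trial call
    }
    try {
      T result = operation.get();
      state = State.CLOSED; // success: back to normal operation
      failures = 0;
      return result;
    } catch (RuntimeException e) {
      failures++;
      // A failed trial call, or too many failures, opens the circuit.
      if (state == State.HALF_OPEN || failures >= maxFailures) {
        state = State.OPEN;
        openedAt = nowMillis;
      }
      return fallback.get();
    }
  }

  State state() {
    return state;
  }
}
```

Resetting the failure counter on every success is a design choice of this sketch. Production implementations often track failures over a time window instead.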

The most well-known circuit breaker implementation in Java is Hystrix (https://github.com/Netflix/Hystrix). While you can use Hystrix in a Vert.x microservice (it uses a thread pool), you need to explicitly switch to the Vert.x event loop to execute the different callbacks. Alternatively, you can use the Vert.x circuit breaker, which is built for asynchronous operations and enforces the Vert.x nonblocking asynchronous development model.

Let’s imagine a failing hello microservice. The consumer should protect the interactions with this service and use a circuit breaker as follows:

    CircuitBreaker circuit = CircuitBreaker.create("my-circuit",
      vertx, new CircuitBreakerOptions()
        // Call the fallback on failures
        .setFallbackOnFailure(true)
        // Set the operation timeout
        .setTimeout(2000)
        // Number of failures before switching to the 'open' state
        .setMaxFailures(5)
        // Time before attempting to reset the circuit breaker
        .setResetTimeout(5000)
    );
    // ...
    circuit.rxExecuteCommandWithFallback(
      future ->
        client.get(path)
          .rxSend()
          .map(HttpResponse::bodyAsJsonObject)
          .subscribe(future::complete, future::fail),
      t -> new JsonObject().put("message", "D'oh! Fallback")
    ).subscribe(
      json -> {
        // Get the actual json or the fallback value
        System.out.println(json.encode());
      }
    );

In this code, the HTTP interaction is protected by the circuit breaker. When the number of failures reaches the configured threshold, the circuit breaker will stop calling the microservice and instead call a fallback. Periodically, the circuit breaker will let one invocation pass through to check whether the microservice is back on track and act accordingly. This example uses a web client, but any interaction can be managed with a circuit breaker, which protects you against flaky services, exceptions, and other sorts of failures.

A circuit breaker switching to an open state needs to be monitored by your operations team. Both Hystrix and the Vert.x circuit breaker have monitoring capabilities.

Health Checks and Failovers

While timeouts and circuit breakers allow consumers to deal with failures on their side, what about crashes? When facing a crash, a failover strategy restarts the parts of the system that have failed. But before being able to achieve this, we must be able to detect when a microservice has died.

A health check is an API provided by a microservice indicating its state. It tells the caller whether or not the service is healthy. The invocation often uses HTTP, but this is not required. After invocation, a set of checks is executed and the global state is computed and returned. When a microservice is detected to be unhealthy, it should not be called anymore, as the outcome is probably going to be a failure. Note that calling a healthy microservice does not guarantee a success either. A health check merely indicates that the microservice is running, not that it will accurately handle your request or that the network will deliver its answer.
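As an illustration of how a global state can be computed from a set of checks, here is a small JDK-only sketch. It is not the Vert.x health check module: the class `HealthChecks` and its methods are hypothetical, the checks run synchronously, and the rule "healthy only if every check passes" is an assumption made for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Hypothetical registry of named checks. The global state is healthy only
// when every registered check passes.
final class HealthChecks {
  private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

  HealthChecks register(String name, BooleanSupplier check) {
    checks.put(name, check);
    return this;
  }

  // Runs every check and reports the outcome per name.
  Map<String, Boolean> run() {
    Map<String, Boolean> outcomes = new LinkedHashMap<>();
    checks.forEach((name, check) -> outcomes.put(name, safely(check)));
    return outcomes;
  }

  // The global state computed from all individual outcomes.
  boolean isHealthy() {
    return !run().containsValue(false);
  }

  // A check that throws is treated as a failed check.
  private static boolean safely(BooleanSupplier check) {
    try {
      return check.getAsBoolean();
    } catch (RuntimeException e) {
      return false;
    }
  }
}
```

Exposing `run()` next to `isHealthy()` lets the caller see which individual check failed, which is usually what an operations team wants to know first.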

Depending on your environment, you may have different levels of health checks. For instance, you may have a readiness check used at deployment time to determine when the microservice is ready to serve requests (when everything has been initialized correctly). Liveness checks are used to detect misbehaviors and indicate whether the microservice is able to handle requests successfully. When a liveness check cannot be executed because the targeted microservice does not respond, the microservice has probably crashed.

In a Vert.x application, there are several ways to implement health checks. You can simply implement a route returning the state, or even use a real request. You can also use the Vert.x health check module to implement several health checks in your application and compose the different outcomes. The following code gives an example of an application providing two levels of health checks:

    Router router = Router.router(vertx);
    HealthCheckHandler hch = HealthCheckHandler.create(vertx);
    // A procedure to check if we can get a database connection
    hch.register("db-connection", future -> {
      client.rxGetConnection()
        .subscribe(c -> {
            future.complete();
            c.close();
          },
          future::fail
        );
    });
    // A second (business) procedure
    hch.register("business-check", future -> {
      // ...
    });
    // Map /health to the health check handler
    router.get("/health").handler(hch);
    // ...

Once health checks are in place, you can implement a failover strategy. Generally, the strategy just restarts the dead part of the system, hoping for the best. While failover is often provided by your runtime infrastructure, Vert.x offers a built-in failover, which is triggered when a node from the cluster dies. With the built-in Vert.x failover, you don’t need a custom health check as the Vert.x cluster pings nodes periodically. When Vert.x loses track of a node, Vert.x chooses a healthy node of the cluster and redeploys the dead part.
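The detection step behind such a failover can be pictured as a heartbeat monitor. The sketch below is only a mental model written with the JDK. It does not describe how the Vert.x cluster manager works internally, and the class `HeartbeatMonitor`, its methods, and the timeout value are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical failure detector: a node is considered dead when no
// heartbeat has been received from it for longer than the timeout.
final class HeartbeatMonitor {
  private final long timeoutMillis;
  private final Map<String, Long> lastSeen = new ConcurrentHashMap<>();

  HeartbeatMonitor(long timeoutMillis) {
    this.timeoutMillis = timeoutMillis;
  }

  // Called each time a node answers a ping.
  void heartbeat(String nodeId, long nowMillis) {
    lastSeen.put(nodeId, nowMillis);
  }

  // Returns the nodes that missed their heartbeat and forgets them, so
  // that each death is reported once and can trigger one redeployment.
  List<String> collectDeadNodes(long nowMillis) {
    List<String> dead = new ArrayList<>();
    lastSeen.forEach((node, seen) -> {
      if (nowMillis - seen > timeoutMillis) {
        dead.add(node);
      }
    });
    for (String node : dead) {
      lastSeen.remove(node);
    }
    return dead;
  }
}
```

A failover strategy would call `collectDeadNodes` periodically and redeploy on a healthy node whatever was running on each node it returns.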

Failover keeps your system running but won’t fix the root cause—that’s your job. When an application dies unexpectedly, a postmortem analysis should be done.

Summary

This chapter has addressed several concerns you will face when your microservice system grows. As we learned, service discovery is a must-have in any microservice system to ensure location transparency. Then, because failures are inevitable, we discussed a couple of patterns to improve the resilience and stability of your system.

Vert.x includes a pluggable service discovery infrastructure that can handle client-side service discovery and server-side service discovery using the same API. The Vert.x service discovery is also able to import and export services from and to different service discovery infrastructures. Vert.x includes a set of resilience patterns such as timeout, circuit breaker, and failover. We saw different examples of these patterns. Dealing with failure is, unfortunately, part of the job and we all have to do it.

In the next chapter, we will learn how to deploy Vert.x reactive microservices on OpenShift and illustrate how service discovery, circuit breakers, and failover can be used to make your system almost bulletproof. While these topics are particularly important, don’t underestimate the other concerns that need to be handled when dealing with microservices, such as security, deployment, aggregated logging, testing, etc.

If you want to learn more about these topics, check the following resources:

- Release It! Design and Deploy Production-Ready Software (O’Reilly): a book providing a list of recipes to make your system ready for production
