Chapter 1. When to Use In-Memory Database Management Systems (IMDBMS)

In-memory computing, and variations of in-memory databases, have been around for some time. But only in the last couple of years has the technology advanced and the cost of memory declined enough that in-memory computing has become cost effective for many enterprises. Major research firms like Gartner have taken notice and have started to focus on broadly applicable use cases for in-memory databases, such as Hybrid Transactional/Analytical Processing (HTAP for short).

HTAP represents a new and unique way of architecting data pipelines. In this chapter we will explore how in-memory database solutions can improve operational and analytic computing through HTAP, and what use cases may be best suited to that architecture.

Improving Traditional Workloads with In-Memory Databases

There are two primary categories of database workloads that can suffer from delayed access to data. In-memory databases can help in both cases.

Online Transaction Processing (OLTP)

OLTP workloads are characterized by a high volume of low-latency operations that touch relatively few records. OLTP performance is bottlenecked by random data access: how quickly the system finds a given record and performs the desired operation. Conventional databases can capture moderate transaction levels, but running analytical queries over the same data at the same time is often impractical. That limitation has led to a range of separate systems that focus on analytics rather than transactions. These online analytical processing (OLAP) solutions complement OLTP solutions.

However, in-memory solutions can increase OLTP transactional throughput: each transaction, including the mechanisms that persist the data, is accepted and acknowledged faster than it would be in a disk-based solution. This speed enables OLTP and OLAP systems to converge in a hybrid, or HTAP, system.

For real-time applications, the ability to quickly store more data in memory lays the foundation for unique digital experiences, such as a faster and more personalized mobile application or a richer set of data for business intelligence.

Online Analytical Processing (OLAP)

OLAP becomes the system for analysis and exploration, keeping the OLTP system focused on capturing transactions. As with OLTP, OLAP users seek fast processing and typically focus on two metrics:

- Data latency is the time it takes from when data enters a pipeline to when it is queryable.

- Query latency is the time it takes for a query to return an answer, which determines how quickly reports can be generated.
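To make the two metrics concrete, here is a minimal Python sketch. The `Event` record and its `entered_at` and `queryable_at` timestamps are hypothetical fields assumed for illustration; a real pipeline would take these times from its own ingestion and commit logs.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class Event:
    # Hypothetical fields, in seconds since some common epoch:
    entered_at: float    # when the record entered the pipeline
    queryable_at: float  # when queries could first see it


def data_latency(event: Event) -> float:
    """Data latency: time from entering the pipeline to being queryable."""
    return event.queryable_at - event.entered_at


def timed_query(query: Callable[..., Any], *args: Any) -> Tuple[Any, float]:
    """Run a query callable; return its result and its query latency in seconds."""
    start = time.perf_counter()
    result = query(*args)
    return result, time.perf_counter() - start


# A record loaded by a nightly batch: entered at 09:00, visible at 06:00 next day.
nightly = Event(entered_at=9 * 3600.0, queryable_at=30 * 3600.0)
print(data_latency(nightly) / 3600)  # 21.0 (hours of data latency)
```

The two numbers are independent: a batch-loaded warehouse can answer queries quickly (low query latency) while its data is still many hours old (high data latency). HTAP aims to keep both small at once.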

Traditionally, OLAP has not been associated with operational workloads. The “online” in OLAP refers to interactive query speed, meaning an analyst can send a query to the database and get a result in a reasonable amount of time (as opposed to a long-running “job” that may take hours or days to complete). However, many modern applications rely on real-time analytics for things like personalization, and traditional OLAP systems have been unable to meet this need. Addressing this kind of application requires rethinking expectations of analytical data processing systems. In-memory analytical engines deliver the speed, low latency, and throughput needed for real-time insight.

HTAP: Bringing OLTP and OLAP Together

When transactions and analytics are handled independently, many challenges have already been solved. If you want to focus on just transactions, or just analytics, there are many existing database and data warehouse solutions:

- If you want to load data very quickly, but only query for basic results, you can use a stream processing framework.

- If you want fast queries but can take your time loading data, many columnar databases or data warehouses fit the bill.

However, rapidly emerging workloads are not well served by either of these traditional options, which is where new HTAP-optimized architectures provide a highly desirable solution. HTAP combines low data latency with low query latency and is delivered via an in-memory database. Reducing both latencies with a single solution enables new applications and real-time data pipelines across industries.

Modern Workloads

Near-ubiquitous Internet connectivity now drives modern workloads and a corresponding set of unique requirements. Database systems must meet the following requirements:

- Ingest and process data in real time: In many companies, it has traditionally taken a day from when data is born to when analysts can understand and analyze it. Now companies want to do this in real time.

- Generate reports over changing datasets: The long-standing practice is to collect data during the day, often without being able to use it, and then run a four- to six-hour process that produces an OLAP cube or materialized reports for faster analyst access. Today, companies expect queries to run on changing datasets with results accurate to the last transaction.

- Detect anomalies as events occur: The time it takes to react to an event can directly correlate with the financial health of a business. For example, quickly identifying unusual trades in financial markets, intruders on a corporate network, or abnormal metrics in a manufacturing process can help companies avoid massive losses.

- Deliver subsecond response times: When corporations get access to fresh data, its popularity rises across hundreds to thousands of analysts. Handling that serving workload requires memory-optimized systems.
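For the anomaly-detection requirement, one simple way to check each event as it arrives is a running z-score test. The Python sketch below maintains the mean and variance with Welford's online algorithm; the class name and the `threshold` and `warmup` values are illustrative assumptions, not a method prescribed by any particular database.

```python
import math


class StreamingAnomalyDetector:
    """Flags values far from the running mean, updating statistics
    incrementally (Welford's algorithm) so each event is checked on arrival.
    The threshold (in standard deviations) and warm-up size are assumptions."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # need >= 2 samples for a sample variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def observe(self, x: float) -> bool:
        """Return True if x looks anomalous relative to the values seen so far,
        then fold x into the running statistics."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) > self.threshold * std:
                is_anomaly = True
        # Welford update: O(1) work per event, no batch recomputation.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingAnomalyDetector(threshold=3.0, warmup=5)
flags = [detector.observe(v) for v in [10, 11, 9, 10, 11, 10, 9]]
print(any(flags))              # False: normal readings
print(detector.observe(100))   # True: far outside the running band
```

One design choice worth noting: flagged values are still folded into the running statistics here. Excluding them instead would keep a single large outlier from widening the band for subsequent events.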

The Need for HTAP-Capable Systems

HTAP-capable systems can run analytics over changing data, meeting the needs of these emerging modern workloads. With reduced data latency and reduced query latency, these systems provide predictable performance and horizontal scalability.

In-Memory Enables HTAP

In-memory databases deliver more transactions and lower latencies, supporting predictable service level agreements (SLAs). Disk-based systems simply cannot achieve the same level of predictability. For example, if a disk-based storage system gets overwhelmed, performance can grind to a halt, wreaking havoc on application workloads.

In-memory databases also deliver analytics as data is written, essentially bypassing a batched extract, transform, load (ETL) process. As analytics expand to span real-time and historical data, in-memory databases can extend to columnar formats that run on higher-capacity disks or flash SSDs to retain larger datasets.
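The idea of delivering analytics as data is written can be illustrated with a toy in-memory table that serves point reads and writes while keeping an aggregate current on every write, so no separate ETL step is needed. `HybridTable`, its methods, and the per-region order totals are hypothetical; real in-memory databases add indexes, columnstores, durability, and far finer-grained concurrency control, whereas this sketch uses a single lock for simplicity.

```python
import threading
from collections import defaultdict


class HybridTable:
    """Toy in-memory table (hypothetical): OLTP-style point writes and reads,
    plus an OLAP-style aggregate that is current as of the last write."""

    def __init__(self):
        self._rows = {}                    # primary key -> row
        self._totals = defaultdict(float)  # region -> running sum of amounts
        self._lock = threading.Lock()      # one lock keeps writes and reads consistent

    def upsert(self, order_id, region, amount):
        with self._lock:
            old = self._rows.get(order_id)
            if old is not None:
                # Remove the old row's contribution before applying the new one.
                self._totals[old["region"]] -= old["amount"]
            self._rows[order_id] = {"region": region, "amount": amount}
            self._totals[region] += amount

    def get(self, order_id):
        with self._lock:
            row = self._rows.get(order_id)
            return dict(row) if row is not None else None

    def totals_by_region(self):
        with self._lock:
            return dict(self._totals)


table = HybridTable()
table.upsert(1, "east", 100.0)
table.upsert(2, "west", 50.0)
table.upsert(1, "east", 120.0)   # correcting order 1 updates the aggregate immediately
print(table.totals_by_region())  # {'east': 120.0, 'west': 50.0}
```

The trade-off is extra work on every write in exchange for aggregate queries that read a precomputed, always-fresh answer instead of waiting for a batch job.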

Common Application Use Cases

Applications driving use cases for HTAP and in-memory databases range across industries. Here are a few examples.

Real-Time Analytics

Agile businesses need to implement tight operational feedback loops so decision makers can refine strategies quickly. In-memory databases support rapid iteration by removing conventional database bottlenecks like disk latency and CPU contention. Analysts appreciate the ability to get immediate data access with their preferred analysis and visualization tools.

Risk Management

Successful companies must be able to quantify and plan for risk. Risk calculations require aggregating data from many sources, and companies need the ability to calculate present risk while also running ad hoc future planning scenarios.

In-memory solutions calculate volatile metrics frequently for more granular risk assessment and can ingest millions of records per second without blocking analytical queries. These solutions also serve the results of risk calculations to hundreds of thousands of concurrent users.

Personalization

Today’s users expect tailored experiences, and publishers, advertisers, and retailers can drive engagement by targeting recommendations based on users’ history and demographic information. Personalization shapes the modern web experience. Building applications to deliver these experiences requires a real-time database to perform segmentation and attribution at scale.

In-memory architectures scale to support large audiences, converge a system of record with a system of insight for tighter feedback loops, and eliminate costly pre-computation with the ability to capture and analyze data in real time.

Portfolio Tracking

Financial assets and their value change in real time, and the reporting dashboards and tools must similarly keep up. HTAP and in-memory systems converge transactional and analytical processing so portfolio value computations are accurate to the last trade.

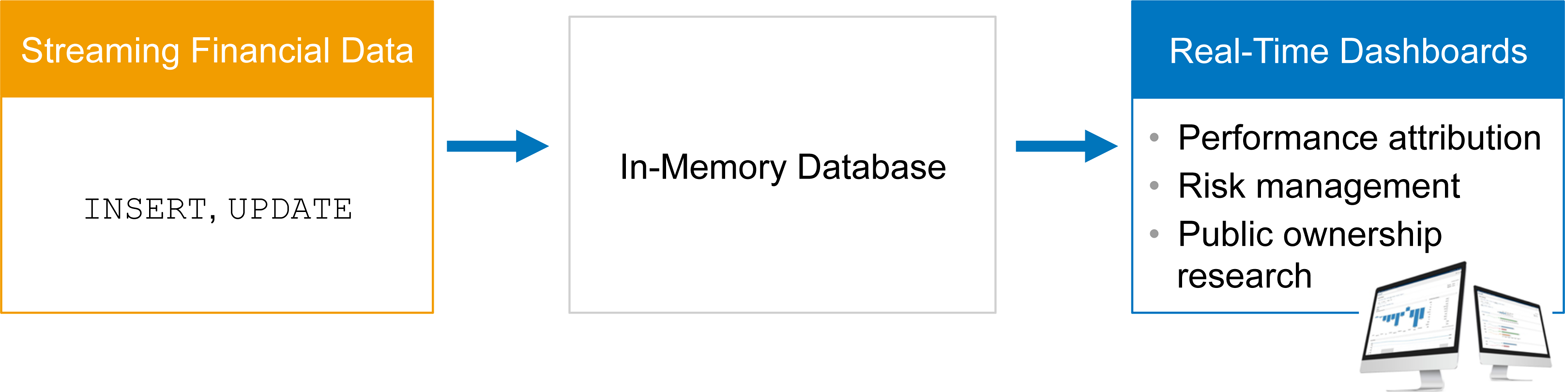

Now users can update reports more frequently to recognize and capitalize on short-term trends, provide a real-time serving layer to thousands of analysts, and view real-time and historical data through a single interface (Figure 1-1).

Figure 1-1. Analytical platform for real-time trade data
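Keeping a portfolio's value accurate to the last trade amounts to updating it incrementally on every event rather than recomputing it in a periodic batch. The Python sketch below assumes a hypothetical feed of trades and price ticks; the `Portfolio` class, its method names, and the sample symbols are illustrative, and cash balances are deliberately left out so that `value` tracks only the market value of current holdings.

```python
class Portfolio:
    """Keeps the market value of holdings current as each trade or price
    tick arrives (hypothetical event feed; cash is not tracked)."""

    def __init__(self):
        self.positions = {}  # symbol -> quantity held
        self.prices = {}     # symbol -> last known price
        self.value = 0.0     # running sum of quantity * last price

    def on_trade(self, symbol, quantity, price):
        """Apply a fill (positive quantity = buy, negative = sell) and
        treat its price as the latest price for the symbol."""
        self._reprice(symbol, price)
        self.positions[symbol] = self.positions.get(symbol, 0) + quantity
        self.value += quantity * price

    def on_price(self, symbol, price):
        """Revalue the existing position at a new market price."""
        self._reprice(symbol, price)

    def _reprice(self, symbol, price):
        qty = self.positions.get(symbol, 0)
        old = self.prices.get(symbol, price)
        self.value += qty * (price - old)  # O(1) adjustment, no full rescan
        self.prices[symbol] = price

    def market_value(self):
        """Full recomputation, useful only as a cross-check."""
        return sum(q * self.prices[s] for s, q in self.positions.items())


p = Portfolio()
p.on_trade("ABC", 10, 5.0)
p.on_price("ABC", 6.0)
p.on_trade("XYZ", 4, 2.5)
p.on_trade("ABC", -5, 7.0)
print(p.value)  # 45.0 (5 ABC at 7.0 plus 4 XYZ at 2.5)
```

Each event costs constant work, so the value a dashboard reads always reflects the most recent trade or tick.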

Monitoring and Detection

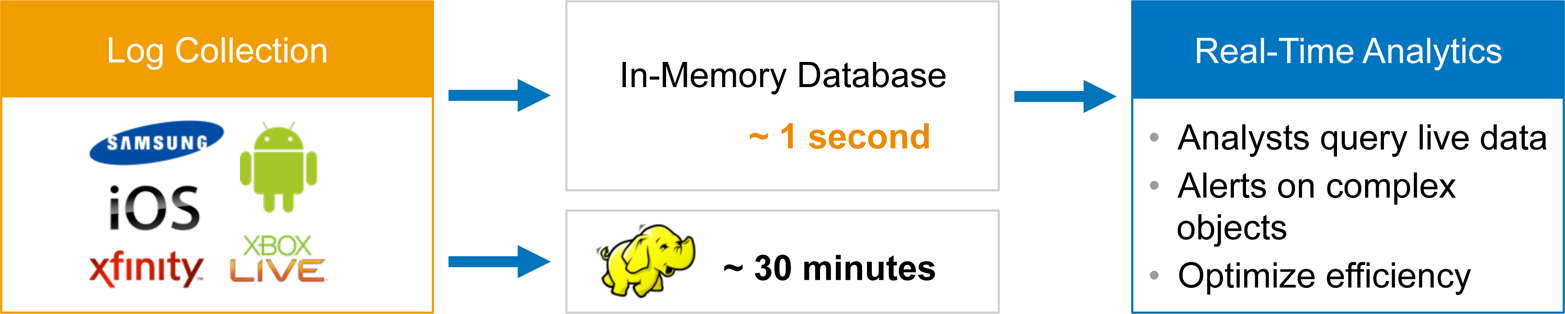

The increase in connected applications drove a shift from logging and log analysis to real-time event processing. This shift lets businesses respond to events instantly rather than after the fact, in cases such as data center management and fraud detection. In-memory databases ingest data and run queries simultaneously, provide analytics on real-time and historical data in a single view, and provide the persistence layer for real-time data pipelines built with Apache Kafka and Apache Spark (Figure 1-2).

Figure 1-2. Real-time operational intelligence and monitoring

Conclusion

In the early days of databases, systems were designed to focus on each individual transaction and treat it as an atomic unit (for example, the debit and credit for accounting, the movement of physical inventory, or the addition of a new employee to payroll). These critical transactions move the business forward and remain a cornerstone of systems of record.

Yet a new model is emerging in which the aggregate of all the transactions becomes critical to understanding the shape of the business (for example, the behavior of millions of users across a mobile phone application, the input from sensor arrays in Internet of Things (IoT) applications, or the clicks measured on a popular website). These modern workloads represent a new era of transactions, requiring in-memory databases to keep up with the volume of real-time data and the demand to understand that data in real time.
