Chapter 1. The Motivations for a New Network Architecture

Once upon a time, there was what there was, and if nothing had happened there would be nothing to tell.

Charles de Lint

If applications had never changed, there’d be some other story to tell than the one you hold in your hands. A distributed application is in a dance with the network, with the application leading. The story of the modern data center network begins when the application began dancing to a different tune and caught the network flat-footed. Understanding this transition helps in more ways than just learning why a change is necessary. I often encounter customers who want to build a modern network but cling to old ways of thinking. Application developers coming in from the enterprise side also tend to think in ways that are anathema to the modern data center.

This chapter will help you answer questions such as:

- What are the characteristics of the new applications?
- What is an access-aggregation-core network?
- In what ways did access-aggregation-core networks fail these applications?

The Application-Network Shuffle

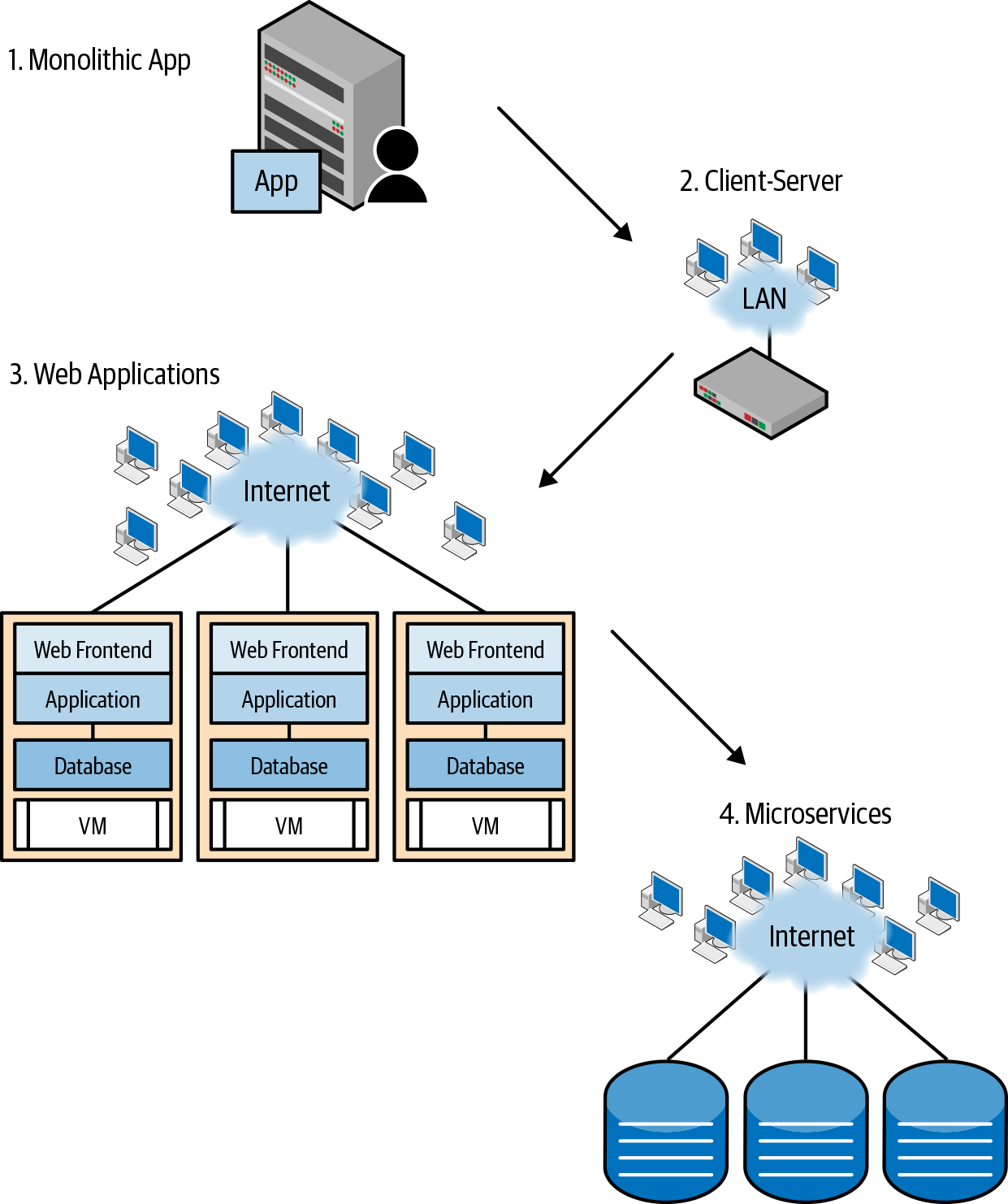

Figure 1-1 shows how applications have evolved from single monoliths to the highly distributed microservices model. With each successive evolution, the requirements from the networks have also evolved.

Figure 1-1. The evolution of application architecture

In the era of single monolithic applications, usually running on a mainframe, the network demands were minimal. The interconnects were proprietary, as were the protocols (think of SNA or DECnet). Keeping in line with the nascent stage of networking and distributed applications, the bandwidth requirements from the network were negligible by today’s standards.

The next generation of applications arose with the spread of workstations and PCs. This wave was characterized by the rise of the client-server architecture. The networks began to become more sophisticated, as characterized by the rise of the local area network (LAN). Network demand increased, although it was still paltry by today’s standards. As time went by, the data exchanged by applications went from only text and pictures to include audio and video. As befits a growing technology, interconnects and protocols abounded and were mostly proprietary. Ethernet, Token Ring, and Fiber Distributed Data Interface (FDDI) were the most popular interconnects. Interconnect speeds topped out at 100 Mbps. The likes of Novell’s IPX and Banyan Systems’ VINES competed with IP for supremacy of the upper-layer network protocols. Enterprises were wary of the freely available and openly developed TCP/IP stack. Many bet on the commercial counterparts. Applications were walled within the enterprise without any chance of access from the outside because most of the upper-layer protocols were not designed for use at scale or across enterprises. Today, all of these commercial upper-layer protocols have been reduced to footnotes in the history of networking. Ethernet and TCP/IP won. Open standards and open source scored their first victory over proprietary counterparts.

Next came the ubiquity of the internet and the crowning of the TCP/IP stack. Applications broke free of the walled garden of enterprises and began to be designed for access across the world. With the ability for clients to connect from anywhere, the scale that the application servers needed to handle went up significantly. In most enterprises, a single instance of a server couldn’t handle all the load from all the clients. Also, single-instance servers became a single point of failure. Running multiple instances of the server fronted by a load balancer thus became popular. Servers themselves were broken up into multiple units: typically the web front end, the application, and the database or storage.

Ethernet became the de facto interconnect within the enterprise. Along with TCP/IP, Ethernet formed the terra firma of enterprise networks and their applications. I still remember the day in mid-1998, or maybe later that year, when my management announced the end of the line for Token Ring and FDDI development on the Catalyst 5000 and 6500 family of switches. Ethernet’s success in the enterprise also started eroding the popularity of the other interconnects, such as Synchronous Optical Network (SONET), in the service provider networks. By the end of this wave, Gigabit Ethernet was the most popular interconnect in enterprise networks.

As compute power continued to increase, it became impossible for most applications to take advantage of the full processing power of the CPU. This surfeit of compute power turned into a glut when improvements in processor fabrication allowed for more than one CPU core on a processor chip.

Server virtualization was invented to maximize efficient use of CPUs. Server virtualization addressed two independent needs. First, running multiple servers without requiring the use of multiple physical compute nodes made the model cost effective. Second, instead of rewriting applications to become multithreaded and parallel to take advantage of the multiple cores, server virtualization allowed enterprises to reuse their applications by running many of them in isolated units called virtual machines (VMs).

The predominant operating system (OS) for application development in the enterprise was Windows. Unix-based operating systems such as Solaris were less popular as application platforms. This was partly because of the cost of the workstations that ran these Unix or Unix-like operating systems. The fragmented market of these operating systems also contributed to the lack of applications. There was no single big leader in the Unix-style segment of operating systems. They all looked the same at a high level, but differed in myriad details, making it difficult for application developers. Linux was stabilizing and rising in popularity, but had not yet bridged the chasm between early adopters and mainstream development.

The success of the internet, especially the web, meant that the online information trickle became a firehose. Finding relevant information became critical. Search engines began to fight for dominance. Google, a much later entrant than other search engines, eventually overtook all of the others. It entered the lexicon as a verb, a synonym for web-based search, much like Xerox was used (and continues to be in some parts of the world) as a synonym for photocopy. At the time of this writing, Google was handling 3.5 billion searches per day, averaging 40,000 per second. To handle this scale, the servers became even more disaggregated, and new techniques, especially cluster-based application architectures such as MapReduce, became prominent. This involved a historic shift from the dominance of communication between clients and servers to the dominance of server-to-server traffic.

Linux became the predominant OS on which this new breed of applications was developed. The philosophy of Unix application development (embodied in Linux now) was to design programs that did one thing and one thing only, did it well, and could work in tandem with other similar programs to provide all kinds of services. So the servers were broken down even more, a movement now popularized as “microservices.” This led to the rise of containers, which were lighter weight than VMs. If everything ran on Linux, booting a machine seemed a high price to pay just to run a small part of a larger service.

The rise of Linux and the economics of large scale led to the rise of the cloud, a service that relieved businesses of the headaches of running an IT organization. It eliminated the need to know what to buy, how much to buy, and how to scale, upgrade, and troubleshoot the enterprise network and compute infrastructure. And it did this at what for many is a fraction of the cost of building and managing their own infrastructure. The story of the cloud is more nuanced than this, but more on that later.

The scale of customers supported in a cloud infrastructure far exceeded what the technologies deployed in the enterprise could handle. Containers and cloud networking also enabled a lot more communication between the servers, as was the case with the new breed of applications like web search. All of these new applications also demanded a higher bandwidth out of the network, and 10 Gigabit Ethernet became the common interconnect. Interconnect speeds have kept increasing, with newly announced switches supporting 400 Gigabit Ethernet.

The scale, the difference in style of application communication, and the rise of an ever more distributed application all heaped new demands on the network to handle these new requirements. Let us turn next to the network side of the dance, look at what existed at that time and see why it could no longer keep step to the new beat.

The Network Design from the Turn of the Century

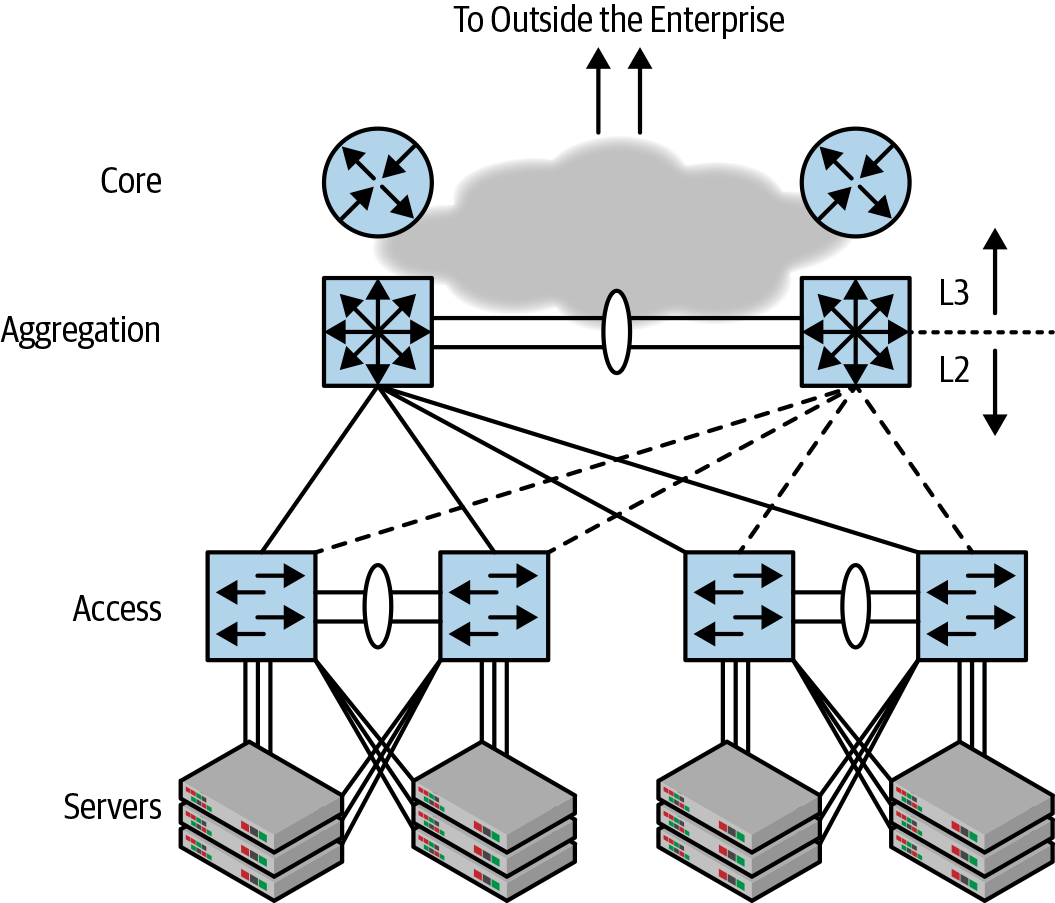

Figure 1-2 shows the network design that held sway at the end of the last century. This was the network that the modern data center applications tried to dance with. This network design is called access-aggregation-core, often shortened to access-agg-core or just access-agg.

Figure 1-2. Access-aggregation-core network architecture

The endpoints or compute nodes are attached to access switches, which are the lowest layer of Figure 1-2. The aggregation switches, also called distribution switches, in turn connect up to the core network, thus connecting the access network to the rest of the world. Explicit links between the aggregation switches and the core switches are not shown because they vary across networks, and are mostly irrelevant to the discussion that follows.

The reason for two aggregation switches, of course, was to avoid having the network isolated when one of the aggregation switches failed. A couple of switches was considered adequate for both throughput and redundancy at the time, but ultimately two proved inadequate.

Traffic between the access and aggregation switches is forwarded via bridging. North of the aggregation boxes, packets are forwarded using routing. So, aggregation boxes were Janus boxes, with the south-facing side providing bridging and the north-facing side providing routing. This is indicated in Figure 1-2 by the presence of both L2 and L3, referring to Layer 2 networking (bridging) and Layer 3 networking (routing).

Directionality in Network Diagrams

Network engineers draw the network diagram with the network equipment on top and the compute nodes at the bottom. Cardinal directions such as “north” and “south” have specific meanings in such a diagram. Northbound traffic moves from the compute nodes into the network and leaves the enterprise network toward the internet; southbound traffic goes from the internet back to local compute nodes. In other words, this is classical client-server communication. East-west traffic flows between servers in the same network. This traffic was small in the client-server era but is the dominant traffic pattern of modern data center applications, which are highly clustered, distributed applications.

The Charms of Bridging

The access-agg-core network is heavily dependent on bridging, even though it came into being at a time when the internet was already fast becoming a “thing.” If IP routing was what powered the internet, why did networking within an enterprise turn to bridging and not routing? There were three primary reasons: the rise of silicon-switched packet forwarding, the rise of proprietary network software stacks within the enterprise, and the promise of zero configuration of bridging.

Hardware packet switching

On both the service-provider side—connecting enterprises to the internet—and in the rise of client-server applications, vendors specializing in the building of networking gear began to appear. Networking equipment moved from high-performance workstations with multiple Network Interface Cards (NICs) to specialized hardware that supported only packet forwarding. Packet forwarding underwent a revolution when specialized Application-Specific Integrated Circuit (ASIC) processors were developed to forward packets. The advent of packet-switching silicon allowed significantly more interfaces to be connected to a single box and could forward packets at a much lower latency than was possible before. This hardware switching technology, however, initially supported only bridging.

Proprietary enterprise network stacks

In the client-server era of networking, IP was just another network protocol. The internet was not what we know now. There were many other competing network stacks within enterprises. Examples of such networks were Novell’s IPX and Banyan VINES. Interestingly, all these stacks differed only above the bridging layer. Bridging was the sole given. It worked with all the protocols that ran at the time in different enterprise networks. So the access-agg-core network design allowed network engineers to build a common network for all of these disparate network protocols instead of building a different network for each specific type of network protocol.

The promise of zero configuration

Routing was difficult to configure, and in some vendor stacks it still is. IP routing involved a lot of explicit configuration. The two ends of a link must be configured to be in the same subnet for routing to even begin to work. After that, routing protocols had to be configured with the peers with which they needed to exchange information. Furthermore, they had to be told what information was acceptable to exchange. All of this manual configuration invited mistakes: according to multiple sources, human error is the first or second leading cause of network failures (when second, it trails only hardware failures). Routing was also slower and more CPU-intensive than bridging (though this has not been true for well more than two decades now).

Other protocols, such as AppleTalk, promoted themselves on being easy to configure.

When high-performance workstations were replaced by specialized networking equipment, the user model also underwent a shift. The specialized network devices were designed as an appliance rather than as a platform. What this meant was that the user interface (UI) was highly tailored to issuing routing-related commands instead of a general-purpose command-line shell. Moreover, UI was tailored for manual configuration rather than being automatable the way Unix servers were.

People longed to eliminate or reduce networking configuration. Self-learning bridges provided the answer that was most palatable to enterprises at the time, in part because this allowed them to put off the decision to bet on an upper-layer protocol, and in part because they promised simplification. Self-learning transparent bridges promised the nirvana of zero configuration.

Building Scalable Bridging Networks

Bridging promised the nirvana of a single network design for all upper-layer protocols coupled with faster packet switching and minimal configuration. The reality is that bridging comes with several limitations as a consequence of both the learning model and the Spanning Tree Protocol.

Broadcast storms and the impact of Spanning Tree Protocol

You might wonder whether self-learning bridges could result in forwarding packets forever. If a packet is sent to a destination that is not in the network or one that never speaks, the bridges will never learn where this destination is. So, even in a simple triangle topology, and even with a self-forwarding check, the packet will go around the triangle forever. Unlike the IP header, the MAC header does not contain a time-to-live (TTL) field to prevent a packet from looping forever. Even a single broadcast packet in a small network with a loop can end up using all of the available bandwidth. Such a catastrophe is called a broadcast storm. Inject enough packets with destinations that don’t exist, and you can melt the network.
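To make the missing TTL concrete, here is a minimal Python sketch of the triangle. The bridge names, the `frames_in_flight` function, and the round-based timing are assumptions made purely for illustration. The model ignores host-facing ports and assumes the destination is never learned, so every bridge floods each copy out every link except the one it arrived on.

```python
# Hypothetical triangle of bridges; each bridge is linked to the other two.
LINKS = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}

def frames_in_flight(rounds, ttl=None):
    """Return the number of frame copies on inter-bridge links in each round.

    ttl=None models a MAC header (no hop limit); an integer models an
    IP-style hop limit that is decremented at every bridge.
    """
    # A host attached to bridge A injects one frame to an unknown
    # destination, so A floods it toward both of its neighbors.
    in_flight = [(nbr, "A", ttl) for nbr in LINKS["A"]]
    history = []
    for _ in range(rounds):
        history.append(len(in_flight))
        next_round = []
        for here, came_from, remaining in in_flight:
            if remaining is not None:
                remaining -= 1
                if remaining <= 0:
                    continue  # hop limit exhausted: the copy is dropped
            for nbr in LINKS[here]:
                if nbr != came_from:  # never send back out the ingress link
                    next_round.append((nbr, here, remaining))
        in_flight = next_round
    return history

no_ttl = frames_in_flight(rounds=6)
with_ttl = frames_in_flight(rounds=6, ttl=3)
print(no_ttl)    # [2, 2, 2, 2, 2, 2]
print(with_ttl)  # [2, 2, 2, 0, 0, 0]
```

In this toy model, two copies of the single injected frame circle the triangle in opposite directions for as long as the simulation runs. A hop limit would have retired them after a few hops, but the MAC header has no such field.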

To avoid this problem, a control protocol called Spanning Tree Protocol (STP) was invented by Radia Perlman. It converts any network topology into a loop-free tree, thereby breaking the loop. Breaking the loop, of course, prevents the broadcast storm.

STP presents a problem in the case of access-agg networks. The root of the spanning tree in such a network is typically one of the aggregation switches. As is apparent from Figure 1-2, a loop is created by the triangle of the two aggregation switches and an access switch. STP breaks the loop by blocking the link connecting the access switch to the nonroot aggregation switch. Unfortunately, this effectively halves the possible network bandwidth because an access switch can use only one of the links to an aggregation switch. If the active aggregation switch dies or the link between an access switch and the active aggregation switch fails, STP will automatically unblock the link to the other aggregation switch. In other words, an access switch’s uplinks to the two aggregation switches behave as an active-standby model. We see the solution to this limitation in “Increasing bandwidth through per-VLAN spanning tree”.
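The effect of STP on the access-agg triangle can be sketched in a few lines of Python. This is not how STP works on the wire: real STP exchanges protocol messages and compares bridge IDs and path costs, whereas this sketch assumes the root is already known and simply walks the topology breadth-first. The `spanning_tree` function and the switch names are hypothetical.

```python
from collections import deque

def spanning_tree(links, root):
    """Split links into (forwarding, blocked) using a breadth-first walk."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    visited = {root}
    forwarding = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for nbr in sorted(neighbors.get(node, [])):
            if nbr not in visited:
                visited.add(nbr)
                # The first link that reaches a switch stays in the tree.
                forwarding.add(frozenset((node, nbr)))
                queue.append(nbr)
    # Every remaining link would close a loop, so it is blocked.
    blocked = {frozenset(link) for link in links} - forwarding
    return forwarding, blocked

# The triangle of two aggregation switches and one access switch.
links = [("agg1", "agg2"), ("agg1", "acc1"), ("agg2", "acc1")]
forwarding, blocked = spanning_tree(links, root="agg1")
print(sorted(sorted(link) for link in blocked))  # [['acc1', 'agg2']]
```

With agg1 as the root, the only blocked link is the one between the access switch and the nonroot aggregation switch, which is the active-standby behavior just described.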

Over time, vendors developed their own proprietary knobs to STP to make it faster and more scalable, trying to fix the problems of slow convergence experienced with standard STP.

The burden of flooding

Another undesirable problem in bridging networks is the flooding (see the earlier sidebar for a definition) that occurs due to unknown unicast packets. End hosts receive all of these unknown unicast packets along with broadcast and unknown multicast packets. The MAC forwarding table entries have a timer of five minutes. If the owner of a MAC address has not communicated for five minutes, the entry for that MAC address is deleted from the MAC forwarding table. This causes the next packet destined to that MAC address to flood. The Address Resolution Protocol (ARP), the IPv4 protocol that is used to determine the MAC address for a given IP address, typically uses broadcast for its queries. So in a network of, say, 100 hosts, every host receives at least an additional 100 queries (one for each of the 99 other hosts and one for the default gateway).
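The interaction between learning, aging, and flooding can be sketched as follows. The `LearningBridge` class, its method names, and the use of plain integers for time are assumptions for illustration only; the 300-second constant is the five-minute timer mentioned above.

```python
AGING_SECONDS = 300  # the five-minute MAC table timer

class LearningBridge:
    def __init__(self, ports):
        self.ports = list(ports)
        self.table = {}  # MAC address -> (port, time the address last spoke)

    def receive(self, src, dst, in_port, now):
        """Learn the source, then return the ports the frame is sent out of."""
        self.table[src] = (in_port, now)
        entry = self.table.get(dst)
        if entry is not None and now - entry[1] >= AGING_SECONDS:
            del self.table[dst]  # the owner has been silent for too long
            entry = None
        if entry is None:
            # Unknown unicast: flood out every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        # Known destination: forward on one port, or filter the frame if
        # the destination lives on the port the frame arrived on.
        return [] if entry[0] == in_port else [entry[0]]

bridge = LearningBridge(ports=[1, 2, 3])
first = bridge.receive("aa", "bb", in_port=1, now=0)    # "bb" unknown: flood
reply = bridge.receive("bb", "aa", in_port=2, now=1)    # "aa" learned: unicast
later = bridge.receive("aa", "bb", in_port=1, now=400)  # "bb" aged out: flood
print(first, reply, later)  # [2, 3] [1] [2, 3]
```

The third call is the case the text warns about: nothing changed in the network, yet the frame floods again simply because the destination stayed quiet for longer than the timer.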

ARP is quite efficient today, so there is no noticeable impact from handling a few hundred more packets per second. But that wasn’t always the case.

And most applications were not as thoughtful as ARP. They were quite profligate in their use of broadcast and multicast packets, causing a bridged network to be extremely noisy. One common offender was NetBIOS, the Microsoft protocol that ran on top of bridging and was used for many things.

The invention of the virtual local area network (VLAN) addresses this problem of excessive flooding. A single physical network is divided logically into smaller networks, each composed of nodes that communicate mostly with one another. Every packet is associated with a specific VLAN, and flooding is limited to switch ports that belong to the same VLAN as the packet being flooded. This allows a group within an enterprise to share the physical network with other groups without affecting other similar groups sharing the same physical network. In IP, a broadcast is contained within a subnet. Thus, a VLAN is associated with an IP subnet.
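A minimal sketch of how a VLAN narrows the flooding scope on a single switch follows. The port-to-VLAN mapping and the `flood_ports` helper are hypothetical, and the sketch covers only access ports that each belong to one VLAN; trunk ports that carry several VLANs are left out.

```python
# Hypothetical assignment of access ports to VLANs on one switch.
PORT_VLAN = {1: 10, 2: 10, 3: 20, 4: 20, 5: 10}

def flood_ports(in_port, port_vlan=PORT_VLAN):
    """Return the ports that receive a frame flooded from in_port."""
    vlan = port_vlan[in_port]
    return [
        port
        for port, port_vlan_id in sorted(port_vlan.items())
        if port_vlan_id == vlan and port != in_port
    ]

print(flood_ports(1))  # [2, 5]
print(flood_ports(3))  # [4]
```

Without VLANs, a frame flooded from port 1 would reach all four other ports; with them, hosts in VLAN 20 never see it.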

Increasing bandwidth through per-VLAN spanning tree

Remember that access switches were connected to two aggregation switches and that STP prevented loops while halving the possible network bandwidth.

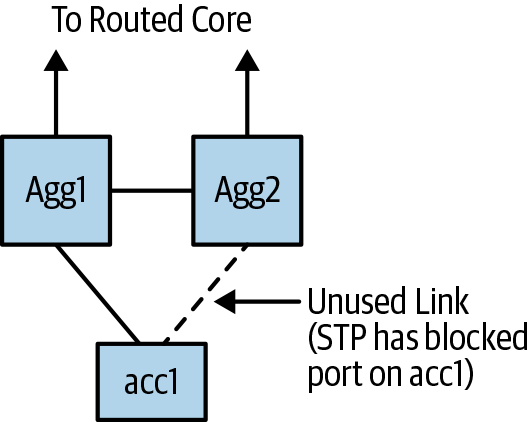

To allow both links to be active, Cisco introduced per-VLAN spanning tree (PVST); that is, it built a separate spanning tree for every VLAN. By putting the even VLANs on one of the aggregation switches and the odd VLANs on the other, for example, the protocol allowed the use of both links: one link by the even group of VLANs, and the other link by the odd group. Effectively, the spanning tree topology for a given VLAN for an access switch looked as shown in Figure 1-3.

Figure 1-3. Active STP topology for a VLAN in an access-agg-core network
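The even/odd split can be illustrated with a short sketch. Which aggregation switch gets the even VLANs is an arbitrary choice here, and the function names are hypothetical; the only point is that each VLAN's spanning tree is rooted at one aggregation switch, so an access switch forwards that VLAN on the uplink toward that switch.

```python
def root_for_vlan(vlan_id):
    """Assumed policy: even VLANs are rooted at agg1, odd VLANs at agg2."""
    return "agg1" if vlan_id % 2 == 0 else "agg2"

def uplinks_by_vlan(vlans):
    """Group VLANs by the uplink an access switch actively uses for them."""
    usage = {"agg1": [], "agg2": []}
    for vlan in vlans:
        usage[root_for_vlan(vlan)].append(vlan)
    return usage

usage = uplinks_by_vlan(range(10, 16))
print(usage)  # {'agg1': [10, 12, 14], 'agg2': [11, 13, 15]}
```

Both uplinks now carry traffic, but any single VLAN still uses only one of them, so the split balances load only as well as the traffic happens to be spread across VLANs.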

Redundancy at the IP level

One more problem needed to be solved, this time with the IP layer. Because the aggregation switches represented the routing boundary, they were the first-hop router for all the hosts connected under them via the access switches. When assigning an IP address to a host, the first-hop router’s IP address is also programmed on the host. This allowed the hosts to communicate with devices outside their subnet via this first-hop router.

After a host is notified of the default gateway’s IP address, changing it is problematic. If one of the aggregation switches dies, the other aggregation switch needs to continue to service the default gateway IP address associated with the failed aggregation switch. Otherwise, hosts would be unable to communicate outside their subnet in the event of an aggregation switch failure. This undermines the entire goal of the second, redundant aggregation switch. So a solution had to be invented such that both routers support a common IP address but have only one of them be active at a time as the owner of that IP address.

Because of the topology shown in Figure 1-3, every broadcast packet (think ARP) will be delivered to both aggregation switches. In addition to having only one of them respond at a given time, the response also had to ensure the MAC address stayed the same.

This led to the invention of yet another kind of protocol, called the First Hop Redundancy Protocol (FHRP). The first such FHRP was Cisco’s Hot Standby Router Protocol (HSRP). The standard FHRP in use today is the Virtual Router Redundancy Protocol (VRRP). An FHRP allowed the two routers to keep track of each other’s liveness and ensure that only one of them responded to an ARP for the gateway IP address at any time.
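The idea behind an FHRP can be sketched as two routers sharing one gateway IP address and one MAC address, with only the active router answering ARP. This is a simplified model, not the HSRP or VRRP state machine: there are no hello timers or protocol messages, liveness is a plain flag, and the addresses, the `Router` class, and the helper functions are illustrative assumptions.

```python
from dataclasses import dataclass

VIRTUAL_IP = "10.1.1.1"            # illustrative shared gateway address
VIRTUAL_MAC = "00:00:5e:00:01:01"  # illustrative shared gateway MAC

@dataclass
class Router:
    name: str
    priority: int
    alive: bool = True

def active_router(routers):
    """The live router with the highest priority owns the gateway address."""
    live = [r for r in routers if r.alive]
    return max(live, key=lambda r: r.priority) if live else None

def arp_reply(routers, target_ip):
    """Return (responder name, MAC) for an ARP query, or None if unanswered."""
    owner = active_router(routers)
    if target_ip != VIRTUAL_IP or owner is None:
        return None
    return owner.name, VIRTUAL_MAC

agg1 = Router("agg1", priority=110)
agg2 = Router("agg2", priority=100)
before = arp_reply([agg1, agg2], VIRTUAL_IP)
agg1.alive = False  # agg1 fails; agg2 takes over the same IP and MAC
after = arp_reply([agg1, agg2], VIRTUAL_IP)
print(before)  # ('agg1', '00:00:5e:00:01:01')
print(after)   # ('agg2', '00:00:5e:00:01:01')
```

The responder changes, but the IP and MAC addresses that hosts have cached do not, so hosts keep sending to the same gateway without noticing the failover.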

Mitigating failure: In-Service Software Upgrade

Because there were only two aggregation switches, a single failure—or taking down a switch for any reason, such as an upgrade—resulted in halving the available bandwidth. Network operators wanted to avoid finding themselves in this situation. So, network vendors introduced dual control-plane cards on an aggregation switch. This was done so that if one control-plane card went down, the other card could take over without rendering the entire box unusable. The two control planes ran in active-passive mode: in other words, only one of them was active at a time. However, in the initial days, there was no synchronization of state between the two control-plane cards. This translated to an extended downtime during a control-plane card failure because the other control-plane card had to rebuild the forwarding state again.

In time, Cisco and other vendors developed a feature called In-Service Software Upgrade (ISSU). This allowed the control-plane cards to synchronize state with each other through proprietary protocols. The idea was to let a control plane go down (due to maintenance or a bug, for instance) by switching over automatically to the standby control plane without incurring either a cold start of the system or a prolonged period of rebuilding state.

This feature was extremely complex and caused more problems than it resolved, so few large-scale data center operators use it.

All of the design choices discussed so far led to the network architecture shown in Figure 1-2.

Now, let’s turn to why this architecture, which was meant to be scalable and flexible, ended up flat-footed in the dance with the new breed of applications that arose in the data center.

The Trouble with the Access-Aggregation-Core Network Design

Around 2000, when the access-agg-core network architecture became prominent, the elements seemed reasonable: a network architecture that supported multiple upper-layer protocols, and was fast, cheap, simpler to administer, and well suited to the north-south traffic pattern of client-server application architecture. This architecture was not as robust as operators would’ve liked, even with added protocols and faster processors, but life went on. As an example of its fragility, the nightmare that is a broadcast storm is familiar to anybody who has administered bridged networks, even with STP enabled.

Then the applications changed. The new breed of applications had far more server-to-server communication than in the client-server architecture—traffic followed a more east-west pattern than north-south. Further, these new applications wanted to scale far beyond what had been imagined by the designers of access-agg-core. Increased scale meant considering failure, complexity, and agility very differently than before. The scale and different traffic pattern meant that networks had a different set of requirements than the one they were working with.

The access-agg-core network failed to meet these requirements. Let’s examine its failures.

Unscalability

Despite being designed to scale, the access-agg-core network hit scalability limits far too soon. The failures were at multiple levels:

- Flooding

  No matter how you slice it, the “flood and learn” model of self-learning bridges doesn’t scale. MAC addresses are not hierarchical. Thus, the MAC forwarding table is a simple 60-bit lookup of the VLAN and destination MAC address of the packet. Learning one million MAC addresses via flood and learn, and periodically relearning them due to timeouts, is considered unfeasible by just about every network architect. The network-wide flooding is too much for end stations to bear. In the age of virtual endpoints, the hypervisor or the host OS sees every single one of these virtual networks. Therefore, it is forced to handle a periodic flood of a million packets.

- VLAN limitations

  Traditionally, a VLAN ID is 12 bits long, leading to a maximum of 4,096 separate VLANs in a network. At the scale of the cloud, 4,096 VLANs is paltry. Some operators tried adding 12 more bits to create a flat 24-bit VLAN space, but 24-bit VLAN IDs are a nightmare. Why? Remember that we had an instance of STP running per VLAN? Running 16 million instances of STP was simply out of the question. And yes, the Multiple Spanning Tree Protocol (MSTP) was invented to address this, but it wasn’t enough.

- Burden of ARP

  Remember that aggregation boxes needed to respond to ARPs? Now imagine two boxes having to respond to a very large number of ARPs. When Windows Vista was introduced, it lowered the default ARP refresh timer from a minute or two to 15 seconds to comply with RFC 4861 (“Neighbor Discovery for IP version 6”). The resulting ARP refreshes were so frequent that they brought a big, widely deployed aggregation switch to its knees. In one interesting episode of this problem that I ran into, the choking up of the CPU due to excessive ARPs led to the failure of other control protocols, causing the entire network to melt at an important customer site. The advent of virtual endpoints in the form of VMs and containers made this problem far worse, because the number of endpoints that the aggregation switches had to deal with increased even without increasing the number of physical hosts connected under these boxes.

- Limitations of switches and STP

  A common way to deal with the increased need for east-west bandwidth is to use more aggregation switches. However, STP prevents the use of more than two aggregation switches. Any more than that results in an unpredictable, unusable topology in the event of topology changes due to link and/or node failures. The limitation of using only two aggregation switches severely restricts the bandwidth provided by this network design. This limited bandwidth means that the network suffers from congestion, further affecting the performance of applications.
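The bit counts quoted in this list are easy to verify. The sketch that follows checks the arithmetic and shows one way a VLAN ID and a MAC address could be packed into a single 60-bit lookup key. The `forwarding_key` helper and its packing order (VLAN in the high bits) are assumptions for illustration, not a description of any particular switching silicon.

```python
VLAN_ID_BITS = 12
MAC_BITS = 48

vlan_count = 2 ** VLAN_ID_BITS               # 4096 VLANs
extended_vlan_count = 2 ** (2 * VLAN_ID_BITS)  # flat 24-bit VLAN space
key_bits = VLAN_ID_BITS + MAC_BITS           # width of the exact-match key

def forwarding_key(vlan_id, mac):
    """Pack a VLAN ID and a colon-separated MAC string into one integer."""
    mac_value = int(mac.replace(":", ""), 16)
    return (vlan_id << MAC_BITS) | mac_value

largest_key = forwarding_key(4095, "ff:ff:ff:ff:ff:ff")
print(vlan_count)           # 4096
print(extended_vlan_count)  # 16777216
print(key_bits)             # 60
print(largest_key == 2 ** 60 - 1)  # True
```

Because the key is flat, there is nothing to summarize or aggregate: every endpoint needs its own entry, which is why the table can be populated only by learning each address individually.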

Complexity

As shown in the study of the evolution of access-agg-core networks, bridged networks require a lot of protocols. These include STP and its variants, FHRP, link failure detection, and vendor-specific protocols such as the VLAN Trunking Protocol (VTP). All of these protocols significantly increase the complexity of the bridging solution. What this complexity means in practice is that when a network fails, multiple different moving parts must be examined to identify the cause of the failure.

VLANs require every node along the path to be VLAN aware. If a configuration error leads a transit device to not recognize a VLAN, the network becomes partitioned, which results in complex, difficult-to-pin-down problems.

ISSU was a fix to a problem caused by the design of access-agg-core networks. But it drags in a lot of complexity. Although ISSU has matured and some implementations do a reasonable job, it nevertheless slows down both bug fixes and the development of new features due to the increased complexity. Even the software testing becomes more complex as a result of ISSU.

As a final nail in this coffin, reality has shown us that nothing is too big to fail. When something that is not expected to fail does indeed fail, we don’t have a system in place to deal with it. ISSU is similar in a sense to nonstop Unix kernels, and how many of those are in existence today in the data center?

Unless the access-agg-core network is carefully designed, congestion can quite easily occur in such networks. To illustrate, look again at Figure 1-3. Both the Agg1 and Agg2 switches announce reachability to the subnets connected to acc1. Any of these subnets might be spread across multiple access switches, not limited to just acc1. If the link between Agg1 and acc1 fails, when Agg1 receives a packet from the core of the network destined to a node behind acc1, it needs to send the packet to Agg2 via the link between Agg1 and Agg2, and have Agg2 deliver the packet to acc1. This means the bandwidth of the link between Agg1 and Agg2 needs to be carefully designed; otherwise, sending more traffic than planned due to link failures can cause unexpected application performance issues.

Even under normal circumstances, half the traffic will end up on the switch that has a blocked link to the access switch, causing it to use the peer link to reach the access switch via the other aggregation switch. This complicates network design, capacity planning, and failure handling.

Failure Domain

Given the large scales involved in the web-scale data centers, failure is not a possibility but a certainty. Therefore, a proportional response to failures is critically important.

The data center pioneers came up with the term blast radius as a measure of how widespread the damage is from a single failure. The more closely contained the failure is to the point of the failure, the more fine-grained is the failure domain and the smaller is the blast radius.

The access-agg-core model is prone to very coarse-grained failures; in other words, failures with large blast radiuses. For example, the failure of a single link halves the available bandwidth. Losing half the bandwidth due to a single link failure is quite excessive, especially at large scales for which at any given time, some portion of the network will have suffered a failure. The failure of a single aggregation switch brings the entire network to its knees because the traffic bandwidth of the entire network is cut in half. Worse still, the surviving aggregation switch now needs to handle the control plane load of both switches, which can cause it to fail as well. In other words, cascading failures resulting in a complete network failure are a real possibility in this design.

Another source of cascading failures is the ever-present threat of a broadcast storm when a control plane becomes overwhelmed. Instead of merely diverting traffic away from a single node, a broadcast storm can bring the entire network down, either because that node is overwhelmed or because of a bug in it.

Unpredictability

A routine failure can cause STP to fail dramatically. If an STP peer is unable to send hello packets in time for whatever reason (because it is dealing with an ARP storm, for example), the other peers assume that there is no STP running at the remote end and start forwarding packets out the link to the overwhelmed switch. This promptly causes a loop, and a broadcast storm kicks in, completely destroying the network. This can happen under many conditions. I remember a case back in the early days when the switching silicon had a bug that caused packets to leak out blocked switch ports, inadvertently forming a loop and thus creating a broadcast storm.

STP also has a root election procedure that can be thrown off, resulting in the wrong device being elected as the root. This happened, for example, at a rather large customer site, during my Cisco days, when a new device was added to the network. The customer had so many network failures as a result of this model that it demanded that switches be shipped with their ports in a disabled state until they were configured. With the ports administratively disabled by default, a newly added switch could not accidentally join the network and get itself elected as the root.
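To see how a newly added device can take over as root, recall that STP picks as root the bridge with the numerically lowest bridge ID, which is the bridge priority followed by the MAC address. The sketch below is a simplified model of that outcome, not of the BPDU exchange that produces it. The switch names and MAC addresses are hypothetical, and all switches are assumed to be left at the standard default priority of 32768. It shows one common way the wrong root gets elected, not necessarily what happened at the customer site described above.

```python
from typing import NamedTuple


class Bridge(NamedTuple):
    name: str
    priority: int  # configurable bridge priority; lower is preferred
    mac: str       # tie-breaker when priorities are equal


def bridge_id(bridge: Bridge) -> tuple[int, int]:
    """Comparable bridge ID: priority first, then the MAC address as an integer."""
    return (bridge.priority, int(bridge.mac.replace(":", ""), 16))


def elect_root(bridges: list[Bridge]) -> Bridge:
    """The bridge with the lowest bridge ID becomes the root."""
    return min(bridges, key=bridge_id)


DEFAULT_PRIORITY = 32768  # standard STP default

# Hypothetical network in which nobody configured the priorities.
network = [
    Bridge("agg1", DEFAULT_PRIORITY, "02:00:00:00:00:10"),
    Bridge("agg2", DEFAULT_PRIORITY, "02:00:00:00:00:20"),
    Bridge("access1", DEFAULT_PRIORITY, "02:00:00:00:00:30"),
]
print(elect_root(network).name)  # agg1

# A new switch with a lower MAC address joins and wins the election.
newcomer = Bridge("new-switch", DEFAULT_PRIORITY, "02:00:00:00:00:01")
print(elect_root(network + [newcomer]).name)  # new-switch
```

Configuring an explicitly low priority on the intended root guards against this particular case, but a misconfigured newcomer with an even lower priority would still win.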

The presence of many moving parts, often proprietary, also causes networks to become unpredictable and difficult to troubleshoot.

Inflexibility

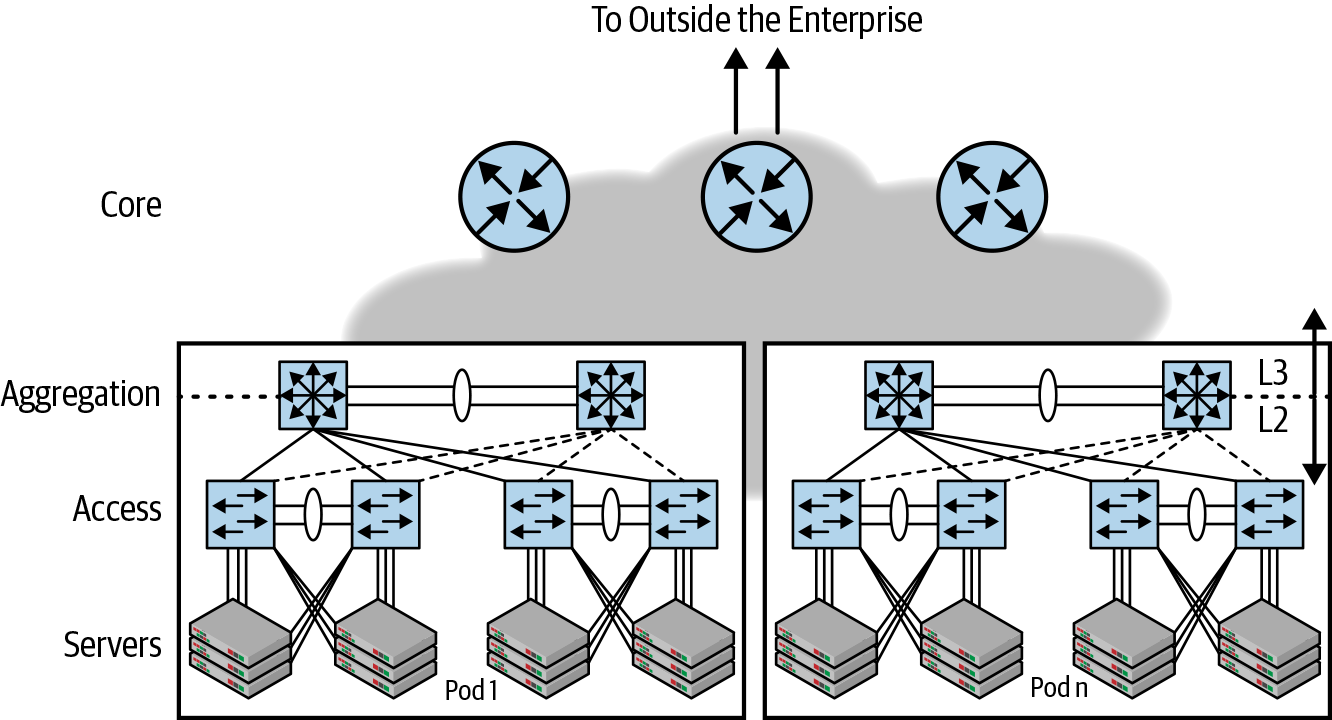

In Figure 1-4, VLANs terminate at the aggregation switch, at the boundary of bridging and routing. The same VLAN cannot be present across two different pairs of aggregation switches. In other words, the access-agg-core design is not flexible enough to allow a network engineer to assign any available free port to a VLAN based on customer need. This means that network designers must carefully plan the growth of a virtual network in terms of the number of ports it needs.

Figure 1-4. Multipod access-agg-core network

Lack of Agility

In the cloud, tenants come and go at a very rapid pace. It is therefore crucially important to provision virtual networks quickly. As we’ve discussed, a VLAN requires every node in the network to be configured with the VLAN information to function properly. But adding a VLAN also adds load on the control plane, because with PVST the number of STP hello packets to be sent out is equal to the number of ports times the number of VLANs. As discussed earlier, a single overwhelmed control plane can easily take the entire network down. So adding and removing VLANs is a manual, laborious process that usually takes days.
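The ports-times-VLANs relationship is easy to put into numbers. The following sketch assumes a hypothetical 48-port switch on which every VLAN is active on every port, which is the worst case; the function name and the port count are illustrative only.

```python
def pvst_hellos_per_interval(ports: int, vlans: int) -> int:
    """STP hello packets a switch must send per hello interval under PVST."""
    if ports < 0 or vlans < 0:
        raise ValueError("ports and vlans must be non-negative")
    return ports * vlans


PORTS = 48  # assumption: a typical access switch port count

for vlans in (1, 100, 1000):
    hellos = pvst_hellos_per_interval(PORTS, vlans)
    print(f"{vlans:>4} VLANs -> {hellos:>5} hello packets per interval")
```

The load grows linearly with each added VLAN, and the same arithmetic applies when a node gains ports, which is why both kinds of change call for care.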

Also as discussed earlier, adding a new node requires careful planning. Adding a new node changes the number of STP packets that node needs to generate, potentially pushing it over the edge of its scaling limit. So even provisioning a new node can be a lengthy affair that requires sign-off from many people, each crossing their fingers and hoping all goes well.

The Stories Not Told

Bridging and its adherents didn’t give up without a fight. Many solutions were suggested to fix the problems of bridging. I was even deeply involved in the design of some of these solutions. The only thing that remains for me personally from these exercises is the privilege of working closely with Radia Perlman on the design of the Transparent Interconnection of Lots of Links (TRILL) protocol. I won’t attempt to list all the proposed solutions and their failures. Only one of these solutions is used in a limited capacity in the modern enterprise data center. It is called Multichassis Link Aggregation (MLAG) and is used to handle dual-attached servers.

The flexibility promised by bridging to run multiple upper-layer protocols is no longer useful. IP has won. There are no other network-layer protocols to support. A different kind of flexibility is required now.

Summary

In this chapter, we looked at how the evolution of application architecture led to a change in the network architecture. Monolithic apps were simple applications, relative to today’s, that ran on complex, specialized hardware and worked on networks with skinny interconnects and proprietary protocols. The next generation of applications were complex client-server applications that ran on relatively simple compute infrastructure and relied on complex networking support. The current class of applications are complex, large-scale distributed applications that demand a different network architecture. In the next chapter, we examine the architecture that has replaced the access-agg model.
