Chapter 4. Working with Data

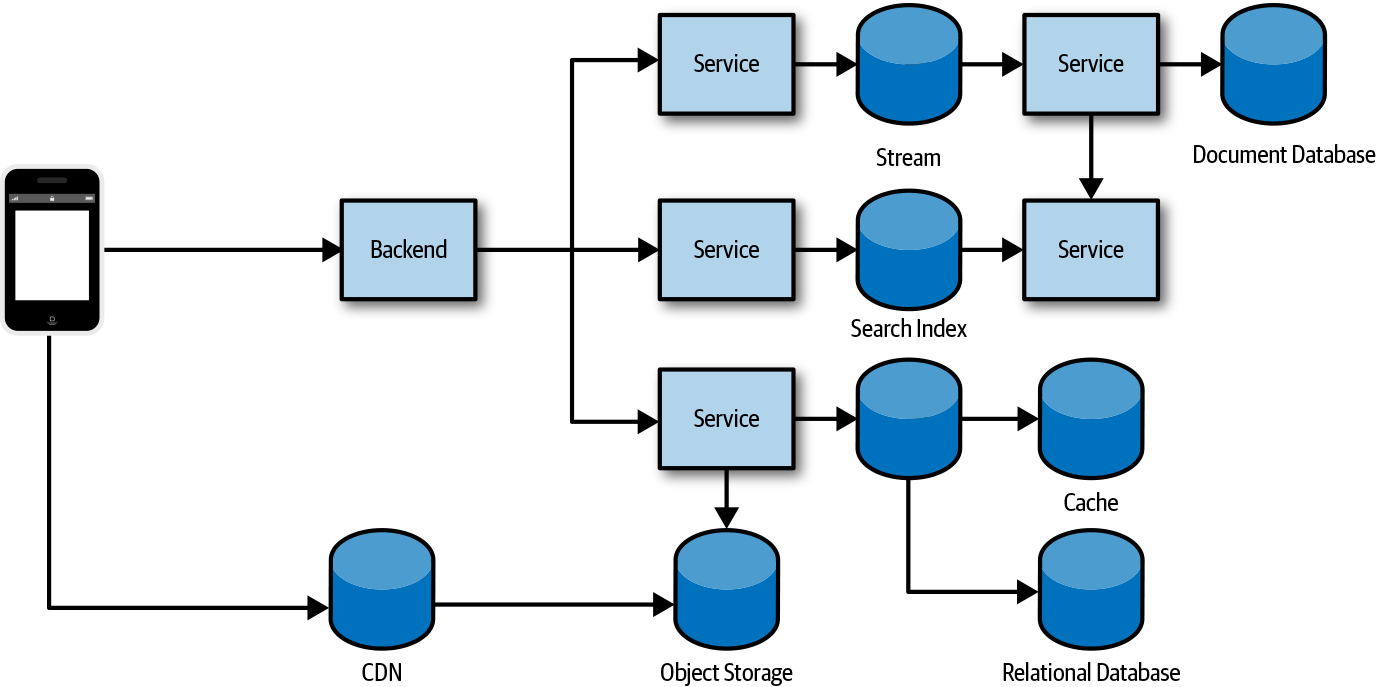

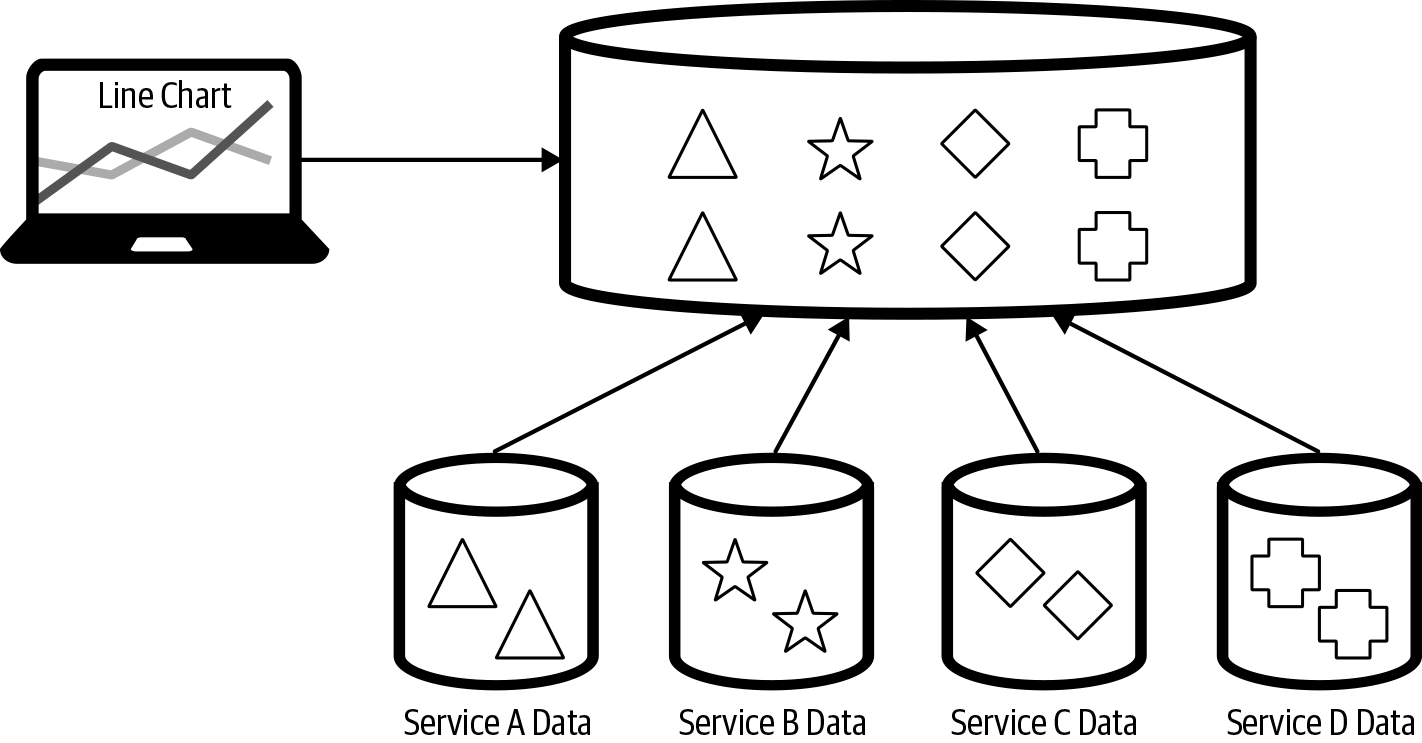

Cloud computing has made a big impact on how we build and operate software today, including how we work with the data. The cost of storing data has significantly decreased, making it cheaper and more feasible for companies to keep vastly larger amounts of data. The operational overhead of database systems is considerably less with the advent of managed and serverless data storage services. This has made it easier to spread data across different data storage types, placing data into the systems better suited to manage the classification of data stored. A trend in microservices architectures encourages the decentralization of data, spreading the data for an application across multiple services, each with its own datastores. It’s also common that data is replicated and partitioned in order to scale a system. Figure 4-1 shows how a typical architecture will consist of multiple data storage systems with data spread across them. It’s not uncommon that data in one datastore is a copy derived from data in another store, or has some other relationship to data in another store.

Cloud native applications take advantage of managed and serverless data storage and processing services. All of the major public cloud providers offer a number of different managed services to store, process, and analyze data. In addition to cloud provider–managed database offerings, some companies provide managed databases on the cloud provider of your choice. MongoDB, for example, offers a cloud-managed database service called MongoDB Atlas that is available on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). By using a managed database, the team can focus on building applications that use the database instead of spending time provisioning and managing the underlying data systems.

Figure 4-1. Data is often spread across multiple data systems

Note

Serverless database is a term that has been used to refer to a type of managed database with usage-based billing in which customers are charged based on the amount of data stored and processed. This means that if a database is not being accessed, the user is billed only for the amount of data stored. When there is an operation on the database, either the user is charged for the specific operation or the database is scaled from zero and back during the processing of the operation.

Cloud native applications take full advantage of the cloud, including data systems used. The following is a list of cloud native application characteristics for data:

-

Prefer managed data storage and analytics services.

-

Use polyglot persistence, data partitioning, and caching.

-

Embrace eventual consistency and use strong consistency when necessary.

-

Prefer cloud native databases that scale out, tolerate faults, and are optimized for cloud storage.

-

Deal with data distributed across multiple datastores.

Cloud native applications often need to deal with silos of data, which require a different approach to working with data. There are a number of benefits to polyglot persistence, decentralized data, and data partitioning, but there are also trade-offs and considerations.

Data Storage Systems

There are a growing number of options for storing and processing data. It can be difficult to determine which products to use when building an application. Teams will sometimes engage in a number of iterations evaluating languages, frameworks, and the data storage systems that will be used in the application. Many are still not convinced they made the correct decision, and it’s common for those storage systems to be replaced or new ones added as the application evolves anyway.

It can be helpful to understand the various types of datastores and the workloads they are optimized for when deciding which products to use. Many products are, however, multimodel and are designed to support multiple data models, falling into multiple data storage classifications. Applications will often take advantage of multiple data storage systems, storing files in an object store, writing data to a relational database, and caching with an in-memory key/value store.

Objects, Files, and Disks

Every public cloud provider offers an inexpensive object storage service. Object storage services manage data as objects. Objects are usually stored with metadata for the object and a key that’s used as a reference for the object. File storage services generally provide shared access to files through a traditional file sharing model with a hierarchical directory structure. Disks or block storage provides storage of disk volumes used by computing instances. Determining where to store files such as images, documents, content, and genomics data files will largely depend on the systems that access them. Each of the following storage types is better suited for different types of files:

Note

You should prefer object storage for storing file data. Object storage is relatively inexpensive, extremely durable, and highly available. All of the major cloud providers offer different storage tiers enabling cost saving based on data access requirements.

- Object/blob storage

-

Use it with files when the applications accessing the data support the cloud provider API.

-

It is inexpensive and can store large amounts of data.

-

Applications need to implement a cloud provider API. If application portability is a requirement, see Chapter 7.

- File storage

- Disk (block) storage

In addition to the various cloud provider–managed storage options for files and objects, you can provision a distributed filesystem. The Hadoop Distributed File System (HDFS) is popular for big data analytics. The distributed filesystem can use the cloud provider disk or block storage services. Many of the cloud providers have managed services for popular distributed filesystems that include the analytics tools used. You should consider these filesystems when using the analytics tools that work with them.

Databases

Databases are generally used for storing more structured data with well-defined formats. A number of databases have been released over the past few years, and the number of databases available for us to choose from continues to grow every year. Many of these databases have been designed for specific types of data models and workloads. Some of them support multiple models and are often labeled as multimodel databases. It helps to organize databases into a group or classification when considering which database to use where in an application.

Key/value

Often, application data needs to be retrieved using only the primary key, or maybe even part of the key. A key/value store can be viewed as simply a very large hash table that stores some value under a unique key. The value can be retrieved very efficiently using the key or, in some cases, part of the key. Because the value is opaque to the database, a consumer would need to scan record-by-record in order to find an item based on the value. The keys in a key/value database can comprise multiple elements and even can be ordered for efficient lookup. Some of the key/value databases allow for the lookup using the key prefix, making it possible to use compound keys. If the data can be queried based on some simple nesting of keys, this might be a suitable option. If we’re storing orders for customer xyz in a key/value store, we might store them using the customer ID as a key prefix followed by the order number, “xyz-1001.” A specific order can be retrieved using the entire key, and orders for customer xyz could be retrieved using the “xyz” prefix.
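The compound-key lookup described above can be sketched in a few lines of Python. This is a toy in-memory store, not any particular product's API; the class name `OrderedKeyValueStore` and its methods are hypothetical. The sketch scans with the prefix "xyz-" (including the separator) so that a customer ID such as "xyzzy" would not be matched by accident.

```python
import bisect


class OrderedKeyValueStore:
    """Toy key/value store that keeps keys sorted to allow prefix scans."""

    def __init__(self):
        self._keys = []    # sorted list of keys
        self._values = {}  # key -> opaque value

    def put(self, key, value):
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = value

    def get(self, key):
        return self._values.get(key)

    def scan_prefix(self, prefix):
        """Return (key, value) pairs whose key starts with prefix, in key order."""
        start = bisect.bisect_left(self._keys, prefix)
        results = []
        for key in self._keys[start:]:
            if not key.startswith(prefix):
                break  # keys are sorted, so no later key can match
            results.append((key, self._values[key]))
        return results


store = OrderedKeyValueStore()
store.put("xyz-1001", {"total": 25.00})
store.put("xyz-1002", {"total": 99.50})
store.put("abc-2001", {"total": 10.00})

order = store.get("xyz-1001")                # lookup by the entire key
customer_orders = store.scan_prefix("xyz-")  # all orders for customer xyz
```

Because the keys are ordered, the prefix scan only touches the matching range instead of every record, which is the property that makes compound keys practical.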

Note

Key/value databases are generally inexpensive and very scalable datastores. Key/value data storage services are capable of partitioning and even repartitioning data based on the key. Selecting a key is important when using these datastores because it will have a significant impact on the scale and the performance of data storage reads and writes.

Document

A document database is similar to a key/value database in that it stores a document (value) by a primary key. Unlike a key/value database, which can store just about any value, the documents in a document database need to conform to some defined structure. This enables features like the maintenance of secondary indexes and the ability to query data based on the document. The values commonly stored in a document database are a composition of hashmaps (JSON objects) and lists (JSON arrays). JSON is a popular format used in document databases, although many database engines use a more efficient internal storage format like MongoDB’s BSON.
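As a rough illustration of why a known document structure enables secondary indexes, the following sketch keeps an index on one field alongside the primary-key lookup. The `DocumentCollection` class and the `status` field are assumptions made for the example, not part of any real database engine.

```python
from collections import defaultdict


class DocumentCollection:
    """Toy document collection with one secondary index (illustrative only)."""

    def __init__(self, indexed_field):
        self._indexed_field = indexed_field
        self._documents = {}            # primary key -> document
        self._index = defaultdict(set)  # field value -> primary keys

    def upsert(self, doc_id, document):
        previous = self._documents.get(doc_id)
        if previous is not None:
            # Keep the index in step with the stored document.
            self._index[previous.get(self._indexed_field)].discard(doc_id)
        self._documents[doc_id] = document
        self._index[document.get(self._indexed_field)].add(doc_id)

    def get(self, doc_id):
        return self._documents.get(doc_id)

    def find_by_indexed_field(self, value):
        return [self._documents[i] for i in sorted(self._index.get(value, ()))]


orders = DocumentCollection(indexed_field="status")
orders.upsert("1001", {"customer": "xyz", "status": "open", "items": ["pen", "ink"]})
orders.upsert("1002", {"customer": "abc", "status": "shipped", "items": ["paper"]})
orders.upsert("1001", {"customer": "xyz", "status": "shipped", "items": ["pen", "ink"]})

shipped = orders.find_by_indexed_field("shipped")
```

A key/value store could not offer `find_by_indexed_field` without scanning every value, because the value is opaque to it.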

Tip

You will need to think differently about how you organize data in a document-oriented database when coming from relational databases. It takes time for many to make the transition to this different approach to data modeling.

You can use these databases for much of what was traditionally stored in a relational database like PostgreSQL. They have been growing in popularity and, unlike with relational databases, the documents map nicely to objects in programming languages and don’t require object relational mapping (ORM) tools. These databases generally don’t enforce a schema, which has some advantages with regard to Continuous Delivery (CD) of software changes requiring data schema changes.

Relational

Relational databases organize data into two-dimensional structures called tables, consisting of columns and rows. Data in one table can have a relationship to data in another table, which the database system can enforce. Relational databases generally enforce a strict schema, also referred to as schema on write, in which a consumer writing data to a database must conform to a schema defined in the database.

Relational databases have been around for a long time and a lot of developers have experience working with them. The most popular and commonly used databases, as of today, are still relational databases. These databases are very mature, they’re good with data that contains a large number of relationships, and there’s a large ecosystem of tools and applications that know how to work with them. Many-to-many relationships can be difficult to work with in document databases, but in relational databases they are very simple. If the application data has a lot of relationships, especially those that require transactions, these databases might be a good fit.

Graph

A graph database stores two types of information: edges and nodes. Edges define the relationships between nodes, and you can think of a node as the entity. Both nodes and edges can have properties providing information about that specific edge or node. An edge will often define the direction or nature of a relationship. Graph databases work well at analyzing the relationships between entities. Graph data can be stored in any of the other databases, but when graph traversal becomes increasingly complex, it can be challenging to meet the performance and scale requirements of graph data in the other storage types.
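To make the node and edge model concrete, here is a minimal in-memory sketch with directed, typed edges and a breadth-first traversal. The names (`nodes`, `edges`, `reachable`) and the sample data are invented for illustration; a real graph database would provide its own query language and indexing for this.

```python
from collections import deque

# Nodes are entities; each has a property map.
nodes = {
    "alice": {"type": "person"},
    "bob": {"type": "person"},
    "carol": {"type": "person"},
    "acme": {"type": "company"},
}

# Edges are directed: (source, relationship, target, properties).
edges = [
    ("alice", "FOLLOWS", "bob", {"since": 2019}),
    ("bob", "FOLLOWS", "carol", {"since": 2020}),
    ("carol", "WORKS_AT", "acme", {"role": "engineer"}),
]


def neighbors(node, relationship):
    return [t for s, r, t, _ in edges if s == node and r == relationship]


def reachable(start, relationship, max_hops):
    """Breadth-first traversal along one relationship type, up to max_hops."""
    seen = {start}
    frontier = deque([(start, 0)])
    found = []
    while frontier:
        node, hops = frontier.popleft()
        if hops == max_hops:
            continue
        for nxt in neighbors(node, relationship):
            if nxt not in seen:
                seen.add(nxt)
                found.append(nxt)
                frontier.append((nxt, hops + 1))
    return found


two_hops = reachable("alice", "FOLLOWS", max_hops=2)
```

Note that `neighbors` scans the whole edge list on every call. That cost, multiplied across deep traversals, is the kind of performance problem the paragraph above attributes to storing graph data in other storage types.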

Column family

A column-family database organizes data into rows and columns, and can initially appear very similar to a relational database. You can think of a column-family database as holding tabular data with rows and columns, but the columns are divided into groups known as column families. Each column family holds a set of columns that are logically related together and are typically retrieved or manipulated as a unit. Other data that is accessed separately can be stored in separate column families. Within a column family, new columns can be added dynamically, and rows can be sparse (that is, a row doesn’t need to have a value for every column).
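One way to picture this layout is as nested maps: row key, then column family, then column. The sketch below uses plain Python dictionaries with made-up family names (`identity`, `contact`); it illustrates the shape of the data only and says nothing about how a real column-family store lays it out on disk.

```python
# row key -> column family -> column -> value
table = {
    "customer-1": {
        "identity": {"first_name": "Ana", "last_name": "Lopez"},
        "contact": {"email": "ana@example.com"},
    },
    "customer-2": {
        # Sparse row: no last_name column here.
        "identity": {"first_name": "Ben"},
        "contact": {"email": "ben@example.com", "phone": "555-0100"},
    },
}


def read_family(row_key, family):
    """Fetch one column family as a unit, without touching the others."""
    return table.get(row_key, {}).get(family, {})


def write_column(row_key, family, column, value):
    """Columns can be added dynamically within a family."""
    table.setdefault(row_key, {}).setdefault(family, {})[column] = value


write_column("customer-1", "contact", "phone", "555-0199")
ben_identity = read_family("customer-2", "identity")
```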

Time-series

A time-series database is optimized for time, storing values based on time. These databases generally need to support a very high number of writes. They are commonly used to collect large amounts of data in real time from a large number of sources. Updates to the data are rare and deletes are often completed in bulk. The records written to a time-series database are usually very small, but there are often a large number of records. Time-series databases are good for storing telemetry data. Popular uses include Internet of Things (IoT) sensors or application/system counters. Time-series databases will often include features for data retention, down-sampling, and storing data in different mediums depending on configuration data usage patterns.
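Down-sampling is easy to illustrate: many fine-grained points are replaced by one aggregate per time bucket. The function below is a minimal sketch that assumes integer timestamps in seconds and uses the mean as the aggregate; time-series databases typically offer this as a built-in, configurable feature.

```python
from collections import defaultdict
from statistics import mean


def downsample(points, bucket_seconds):
    """Average (timestamp, value) points into fixed-width time buckets."""
    buckets = defaultdict(list)
    for timestamp, value in points:
        bucket_start = timestamp - (timestamp % bucket_seconds)
        buckets[bucket_start].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Hypothetical sensor readings: (seconds since start, temperature)
readings = [(0, 20.0), (15, 22.0), (30, 21.0), (60, 25.0), (75, 27.0)]
per_minute = downsample(readings, 60)
```

Five readings become two, one per minute, trading fidelity for storage.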

Search

Search engine databases are often used to search for information held in other datastores and services. A search engine database can index large volumes of data with near-real-time access to the indexes. In addition to searching across unstructured data like that in a web page, many applications use them to provide structured and ad hoc search features on top of data in another database. Some databases have full-text indexing features, but search databases are also capable of reducing words to their root forms through stemming and normalization.
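The idea of indexing root forms can be sketched with a tiny inverted index. The `crude_stem` function below is a deliberately naive suffix stripper written for this example; real search engines use proper stemming and normalization, and the document IDs and text are invented.

```python
from collections import defaultdict


def crude_stem(word):
    """Very rough suffix stripping; real engines use proper stemmers."""
    word = word.lower().strip(".,!?")
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def build_index(documents):
    """Map each stemmed term to the set of documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.split():
            index[crude_stem(word)].add(doc_id)
    return index


def search(index, query):
    return sorted(index.get(crude_stem(query), set()))


documents = {
    "order-1": "Customer ordered two shipping boxes",
    "order-2": "Shipped the order to the customer",
}
index = build_index(documents)
matches = search(index, "orders")
```

A query for "orders" finds documents containing "ordered" and "order", because all three reduce to the same root in the index.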

Streams and Queues

Streams and queues are data storage systems that store events and messages. Although they are sometimes used for the same purpose, they are very different types of systems. In an event stream, data is stored as an immutable stream of events. A consumer is able to read events in the stream at a specific location but is unable to modify the events or the stream. You cannot remove or delete individual events from the stream. Messaging queues or topics will store messages that can be changed (mutated), and it’s possible to remove an individual message from a queue. Streams are great at recording a series of events, and streaming systems are generally able to store and process very large amounts of data. Queues or topics are great for messaging between different services, and these systems are generally designed for the short-term storage of messages that can be changed and randomly deleted. This chapter focuses more on streams because they are more commonly used with data systems, and queues are more commonly used for service communications. For more information on queues, see Chapter 3.
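The difference in behavior can be sketched with two small classes. Both are simplified, in-memory stand-ins with hypothetical names: `EventStream` is an append-only log read by offset, and `MessageQueue` removes a message when it is received.

```python
from collections import deque


class EventStream:
    """Append-only log: events are read by offset and never modified."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset):
        # Returns an immutable copy; reading does not consume events.
        return tuple(self._events[offset:])


class MessageQueue:
    """Queue: receiving a message removes it."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        return self._messages.popleft() if self._messages else None

    def __len__(self):
        return len(self._messages)


stream = EventStream()
for name in ("order_created", "order_paid", "order_shipped"):
    stream.append(name)

queue = MessageQueue()
queue.send("send-receipt")
queue.send("send-survey")
first_message = queue.receive()
```

Any number of consumers can replay the stream from an offset of their choosing, whereas a message taken from the queue is gone for everyone else.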

Note

A topic is a concept used in a publish-subscribe messaging model. The only difference between a topic and a queue is that a message on a queue goes to one subscriber, whereas a message to a topic will go to multiple subscribers. You can think of a queue as a topic with one, and only one, subscriber.

Blockchain

Records on a blockchain are stored in a way that they are immutable. Records are grouped in a block, each of which contains some number of records in the database. Every time new records are created, they are grouped into a single block and added to the chain. Blocks are chained together using hashing to ensure that they are not tampered with. The slightest change to the data in a block will change the hash. The hash from each block is stored at the beginning of the next block, ensuring that nobody can change or remove a block from the chain. Although a blockchain could be used like any other centralized database, it’s commonly decentralized, removing power from a central organization.
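The hash chaining described above can be sketched with the standard library. This is a bare illustration of the linking idea only, with invented record strings and function names; it leaves out consensus, signatures, and everything else a real blockchain needs.

```python
import hashlib
import json


def block_hash(block):
    """Hash the full contents of a block, including the previous block's hash."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def add_block(chain, records):
    previous_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"previous_hash": previous_hash, "records": records})


def is_valid(chain):
    """Check that every block still points at the hash of its predecessor."""
    for previous, current in zip(chain, chain[1:]):
        if current["previous_hash"] != block_hash(previous):
            return False
    return True


chain = []
add_block(chain, ["alice pays bob 5"])
add_block(chain, ["bob pays carol 2"])
add_block(chain, ["carol pays dave 1"])

valid_before = is_valid(chain)
chain[0]["records"][0] = "alice pays bob 500"  # tamper with an early block
valid_after = is_valid(chain)
```

In this sketch a change to the most recent block would go unnoticed until another block is appended after it, since nothing yet stores its hash.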

Selecting a Datastore

When selecting a datastore, you need to consider a number of requirements. Selecting data storage technologies and services can be quite challenging, especially given the cool new databases constantly becoming available and changes in how we build software. Start with the architecturally significant requirements—also known as nonfunctional requirements—for a system and then move to the functional requirements.

Selecting the appropriate datastore for your requirements can be an important design decision. There are literally hundreds of implementations to choose from among SQL and NoSQL databases. Datastores are often categorized by how they structure data and the types of operations they support. A good place to begin is by considering which storage model is best suited for the requirements. Then, consider a particular datastore within that category, based on factors such as feature set, cost, and ease of management.

Gather as much of the following information as you can about your data requirements.

Functional requirements

- Data format

-

What type of data do you need to store?

- Read and write

-

How will the data need to be consumed and written?

- Data size

-

How large are the items that will be placed in the datastore?

- Scale and structure

-

How much storage capacity do you need, and do you anticipate needing to partition your data?

- Data relationships

-

Will your data need to support complex relationships?

- Consistency model

-

Will you require strong consistency or is eventual consistency acceptable?

- Schema flexibility

-

What kind of schemas will you apply to your data? Is a fixed or strongly enforced schema important?

- Concurrency

-

Will the application benefit from multiversion concurrency control? Do you require pessimistic and/or optimistic concurrency control?

- Data movement

-

Will your application need to move data to other stores or data warehouses?

- Data life cycle

-

Is the data write-once, read-many? Can it be archived over time or can the fidelity of the data be reduced through down-sampling?

- Change streams

-

Do you need to support change data capture (CDC) and fire events when data changes?

- Other supported features

-

Do you need any other specific features, full-text search, indexing, and so on?

Nonfunctional requirements

- Team experience

-

Probably one of the biggest reasons teams select a specific database solution is because of experience.

- Support

-

Sometimes the database system that’s the best technical fit for an application is not the best fit for a project because of the support options available. Consider whether or not available support options meet the organization’s needs.

- Performance and scalability

-

What are your performance requirements? Is the workload heavy on ingestion? Query and analytics?

- Reliability

-

What are the availability requirements? What backup and restore features are necessary?

- Replication

-

Will data need to be replicated across multiple regions or zones?

- Limits

-

Are there any hard limits on size and scale?

- Portability

-

Do you need to deploy on-premises or to multiple cloud providers?

Management and cost

- Managed service

-

When possible, use a managed data service. There are, however, situations in which a needed feature is not available in a managed service.

- Region or cloud provider availability

-

Is there a managed data storage solution available?

- Licensing

-

Are there any restrictions on licensing types in the organization? Do you have a preference for a proprietary versus open source software (OSS) license?

- Overall cost

-

What is the overall cost of using the service within your solution? A good reason to prefer managed services is for the reduced operational cost.

Selecting a database can be a bit daunting when you’re looking across the vast number of databases available today and the new ones constantly introduced in the market. A site that tracks database popularity, db-engines (https://db-engines.com), lists 329 different databases as of this writing. In many cases the skillset of the team is a major driving factor when selecting a database. Managing data systems can add significant operational overhead and burden to the team and managed data systems are often preferred for cloud-native applications, so the availability of managed data systems will quite often narrow down the options. Deploying a simple database can be easy, but consider that the patching, upgrades, performance tuning, backups, and highly available database configurations increase operations burden. Yet there are situations in which managing a database is necessary, and you might prefer some of the new databases built for the cloud, like CockroachDB or YugaByte. Also consider available tooling: it might make sense to deploy and manage a certain database if this avoids the need to build software to consume the data, like a dashboard or reporting systems.

Data in Multiple Datastores

Whether you’re working with data across partitions, databases, or services, data in multiple datastores can introduce some data management challenges. Traditional transaction management might not be possible and distributed transactions will adversely affect the performance and scale of a system. The following are some of the challenges of distributing data:

-

Data consistency across the datastores

-

Analysis of data in multiple datastores

-

Backup and restore of the datastores

The consistency and integrity of the data can be challenging when spread across multiple datastores. How do you ensure a related record in one system is updated to reflect a change in another system? How do you manage copies of data, whether they are cached in memory, a materialized view, or stored in the systems of another service team? How do you effectively analyze data that’s stored across multiple silos? Much of this is addressed through data movement, and a growing number of technologies and services are showing up in the market to handle this.

Change Data Capture

Many of the database options available today offer a stream of data change events (change log) and expose this through an easy-to-consume API. This can make it possible to perform some actions on the events, like triggering a function when a document changes or updating a materialized view. For example, successfully adding a document that contains an order could trigger an event to update reporting totals and notify an accounting service that an order for the customer has been created. Given a move to polyglot persistence and decentralized datastores, these event streams are incredibly helpful in maintaining consistency across these silos of data. Some common use cases for CDC include:

- Notifications

-

In a microservices architecture, it’s not uncommon that another service will want to be notified of changes to data in a service. For this, you can use a webhook or subscription to publish events for other services.

- Materialized views

-

Materialized views make for efficient and simplified queries on a system. The change events can be used to update these views.

- Cache invalidation

-

Caches are great for improving the scale and performance of a system, but invalidating the cache when the backing data has changed is a challenge. Instead of using a time-to-live (TTL), you can use change events to either remove the cached item or update it.

- Auditing

-

Many systems need to maintain a record of changes to data. You can use this log of changes to track what was changed and when. The user that made the change is often needed, so it might be necessary to ensure that this information is also captured.

- Search

-

Many databases are not very good at handling search, and the search datastores do not provide all of the features needed in other databases. You can use change streams to maintain a search index.

- Analytics

-

The data analytics requirements of an organization often require a view across many different databases. Moving the data to a central data lake, warehouse, or database can enable richer reporting and analytics requirements.

- Change analytics

-

Near-real-time analysis of data changes can be separated from the data access concerns and performed on the data changes.

- Archive

-

In some applications, it is necessary to maintain an archive of state. This archive is rarely accessed, and it’s often better to store this in a less expensive storage system.

- Legacy systems

-

Replacing a legacy system will sometimes require data to be maintained in multiple locations. These change streams can be used to update data in a legacy system.

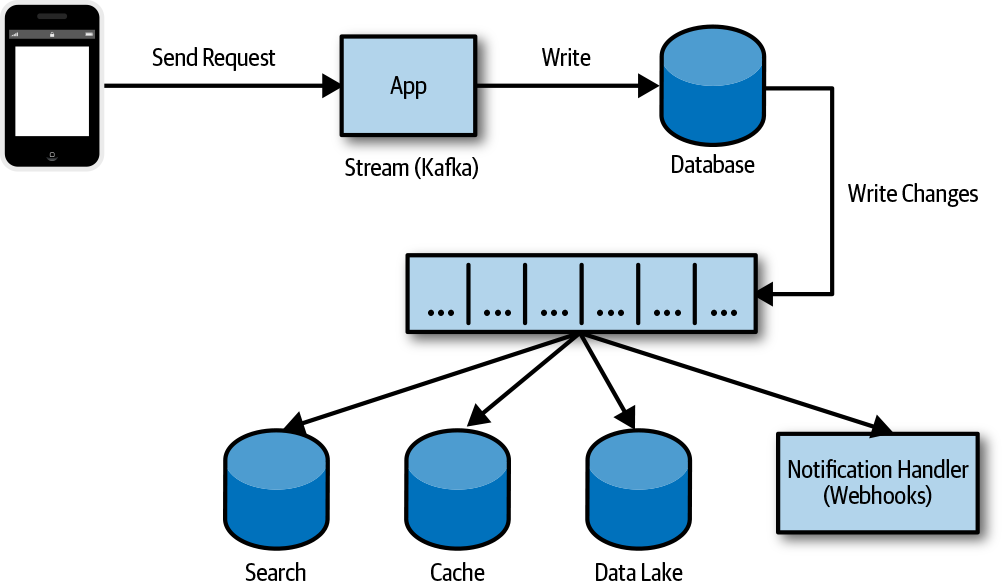

In Figure 4-2, we see an app writing to a database that logs a change. That change is then written to a stream of change logs and processed by multiple consumers. Many database systems maintain an internal log of changes that can be subscribed to with checkpoints to resume at a specific location. MongoDB, for example, allows you to subscribe to events on a deployment, database, or collection, and provide a token to resume at a specific location. Many of the cloud provider databases handle the watch process and will invoke a serverless function for every change.

Figure 4-2. CDC used to synchronize data changes
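A consumer of such a change stream can be sketched as follows, here for the cache invalidation use case. The `change_log` list and `write_document` function stand in for the database and its change stream, and the `checkpoint` attribute plays the role of a resume position; all of these names are hypothetical.

```python
change_log = []  # ordered change events, as a database change stream would expose


def write_document(database, doc_id, document):
    """Stand-in for the database: store the document and record the change."""
    database[doc_id] = document
    change_log.append({"op": "upsert", "id": doc_id})


class CacheInvalidator:
    """Consumer that evicts cached items when the backing data changes."""

    def __init__(self, cache):
        self.cache = cache
        self.checkpoint = 0  # index of the next change event to process

    def poll(self):
        processed = 0
        for event in change_log[self.checkpoint:]:
            self.cache.pop(event["id"], None)
            self.checkpoint += 1
            processed += 1
        return processed


database = {"acct-1": {"shipping": "standard"}}
cache = {"acct-1": {"shipping": "standard"}}
invalidator = CacheInvalidator(cache)

write_document(database, "acct-1", {"shipping": "express"})
first_poll = invalidator.poll()
second_poll = invalidator.poll()
```

Because each consumer tracks its own checkpoint, several consumers (search indexing, analytics, auditing) can read the same log independently and resume where they left off after a restart.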

The application could have written the change to the stream and the database, but this presents some problems if one of the two operations fails and it potentially creates a race condition. For example, if the application were updating some data in the database, like an account shipping preference, and then failed to write to an event stream, the data in the database would have changed, but the other systems would not have been notified or updated, like a shipping service. The other concern is that if two processes made a change to the same record at close to the same time, the order of events can be a problem. Depending on the change and how it’s processed, this might not be an issue, but it’s something to consider. The concern is that we either record the event that something changed when it didn’t, or change something and don’t record the event.

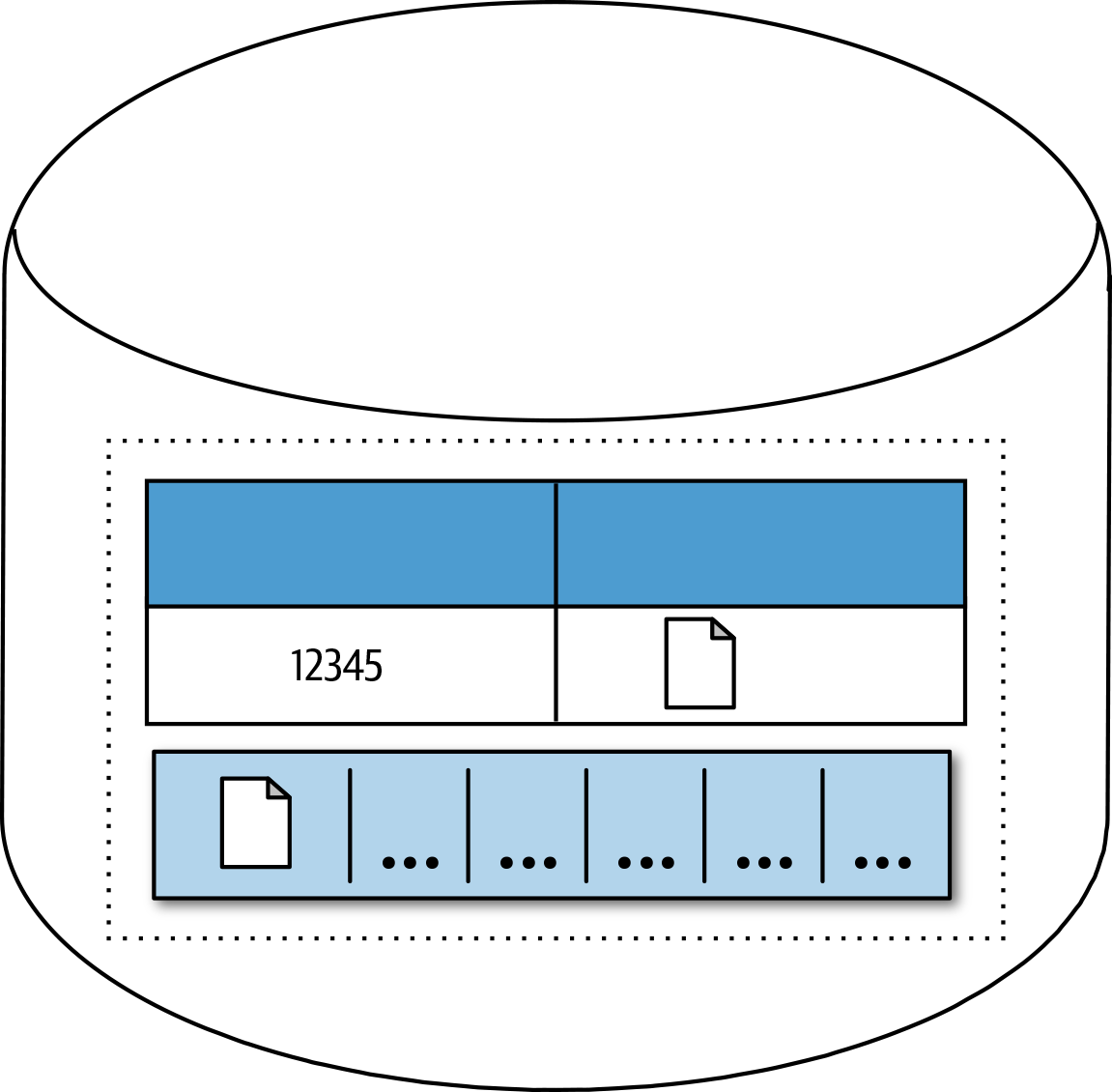

By using the database’s change stream, we can write the change or mutation of the document and the log of that change as a transaction. Even though data systems consuming the event stream are eventually consistent after some period of time, it’s important that they become consistent. Figure 4-3 shows a document that has been updated and the change recorded as part of a transaction. This ensures that the change event and the actual change itself are consistent, so now we just need to consume and process that event into other systems.

Figure 4-3. Changes to a record and operation log in a transaction scope
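The effect of recording a change and its log entry in one transaction can be imitated with SQLite from the Python standard library. Note the swap: databases with change streams do this internally, whereas this sketch does it at the application level with an explicit log table, a technique often called a transactional outbox. The table names, the `fail_midway` flag, and the helper functions are assumptions for the example.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, shipping_preference TEXT)")
conn.execute("CREATE TABLE change_log (seq INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT)")
conn.execute("INSERT INTO accounts VALUES ('acct-1', 'standard')")
conn.commit()


def update_shipping_preference(account_id, preference, fail_midway=False):
    """Write the change and its log entry together, or not at all."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET shipping_preference = ? WHERE id = ?",
                (preference, account_id),
            )
            if fail_midway:
                raise RuntimeError("simulated failure between the two writes")
            conn.execute(
                "INSERT INTO change_log (payload) VALUES (?)",
                (json.dumps({"id": account_id, "shipping_preference": preference}),),
            )
    except RuntimeError:
        return False
    return True


def current_preference(account_id):
    row = conn.execute(
        "SELECT shipping_preference FROM accounts WHERE id = ?", (account_id,)
    ).fetchone()
    return row[0]


def change_count():
    return conn.execute("SELECT COUNT(*) FROM change_log").fetchone()[0]


committed = update_shipping_preference("acct-1", "express")
rolled_back = update_shipping_preference("acct-1", "overnight", fail_midway=True)
```

After the simulated failure the account still reads "express" and the log holds exactly one entry, so the data and the record of its changes never disagree.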

Many of the managed data services make this really easy to implement and can be quickly configured to invoke a serverless function when a change happens in the datastore. You can configure MongoDB Atlas to invoke a function in the MongoDB Stitch service. A change in Amazon DynamoDB or Amazon Simple Storage Service (Amazon S3) can trigger a Lambda function. Microsoft Azure Functions can be invoked when a change happens in Azure Cosmos DB or Azure Blob Storage. A change in Google Cloud Firestore or object storage service can trigger a Cloud Function. Implementation with popular managed data storage services can be fairly straightforward. This is becoming a popular and necessary feature with most datastores.

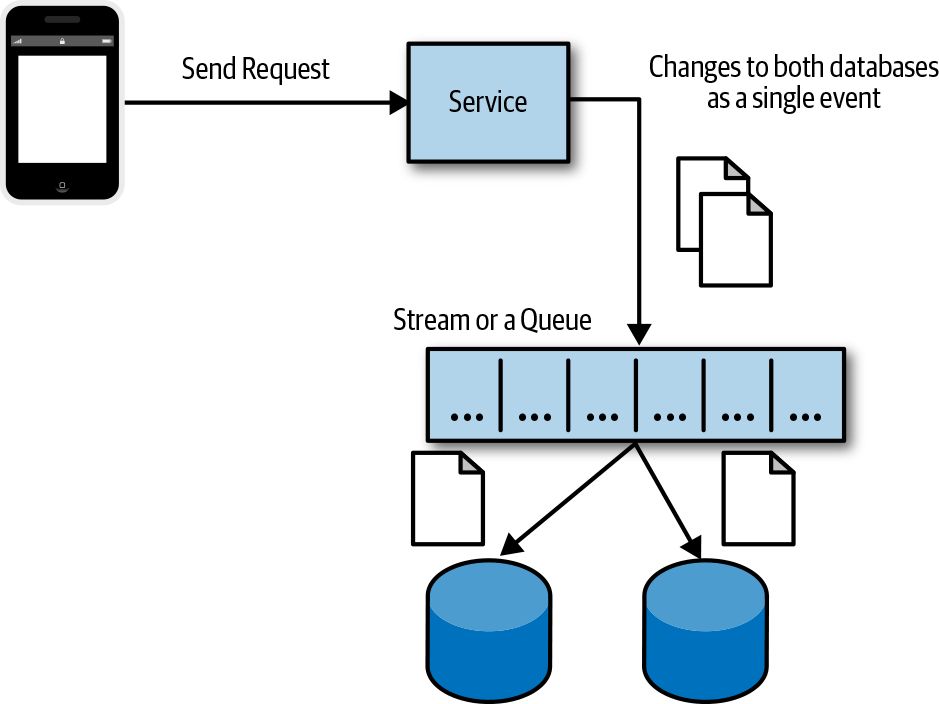

Write Changes as an Event to a Change Log

As we just saw, an application failure during an operation that affects multiple datastores can result in data consistency issues. Another approach that you can use when an operation spans multiple databases is to write the set of changes to a change log and then apply those changes. A group of changes can be written to a stream maintaining order, and if a failure occurs while the changes are being applied, it can be easy to retry or resume the operation, as shown in Figure 4-4.

Figure 4-4. Saving a set of changes before writing each change
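A minimal sketch of this approach: the full set of changes is saved first, then applied in order, with a checkpoint recording how far the apply step got. The `apply_changes` function, the store names, and the `fail_at` parameter (used only to simulate a crash) are hypothetical. The sketch assumes each change is idempotent, so re-applying one after a retry is harmless.

```python
def apply_changes(change_log, stores, checkpoint=0, fail_at=None):
    """Apply changes in order starting at checkpoint; return the new checkpoint."""
    for position in range(checkpoint, len(change_log)):
        if position == fail_at:
            return position  # simulated failure: nothing from here on is applied
        store_name, key, value = change_log[position]
        stores[store_name][key] = value
    return len(change_log)


# The set of changes is written to the log before any store is touched.
change_log = [
    ("orders", "1001", {"status": "placed"}),
    ("inventory", "pen", 9),
    ("reporting", "orders_today", 1),
]
stores = {"orders": {}, "inventory": {}, "reporting": {}}

checkpoint = apply_changes(change_log, stores, fail_at=1)
stores_after_failure = {name: dict(data) for name, data in stores.items()}

# Resume from the checkpoint instead of starting over.
checkpoint = apply_changes(change_log, stores, checkpoint=checkpoint)
```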

Transaction Supervisor

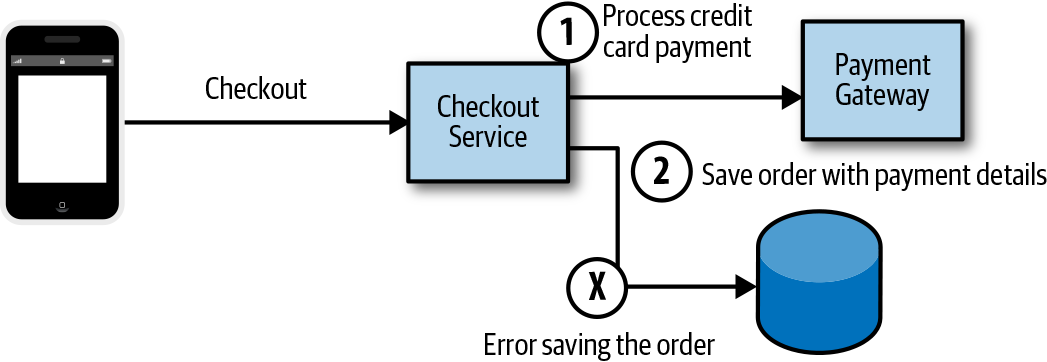

You can use a supervisor service to ensure that a transaction is successfully completed or is compensated. This can be especially useful when you’re performing transactions involving external services. For example, when writing an order to the system and processing a credit card, either the credit card processing or saving the results of that processing can fail. As Figure 4-5 illustrates, a checkout service receives an order, processes a credit card payment, and then fails to save the order to the order database. Most customers would be upset to know that their credit card was processed but there was no record of their order. This is a fairly common implementation.

Figure 4-5. Failing to save order details after processing an order

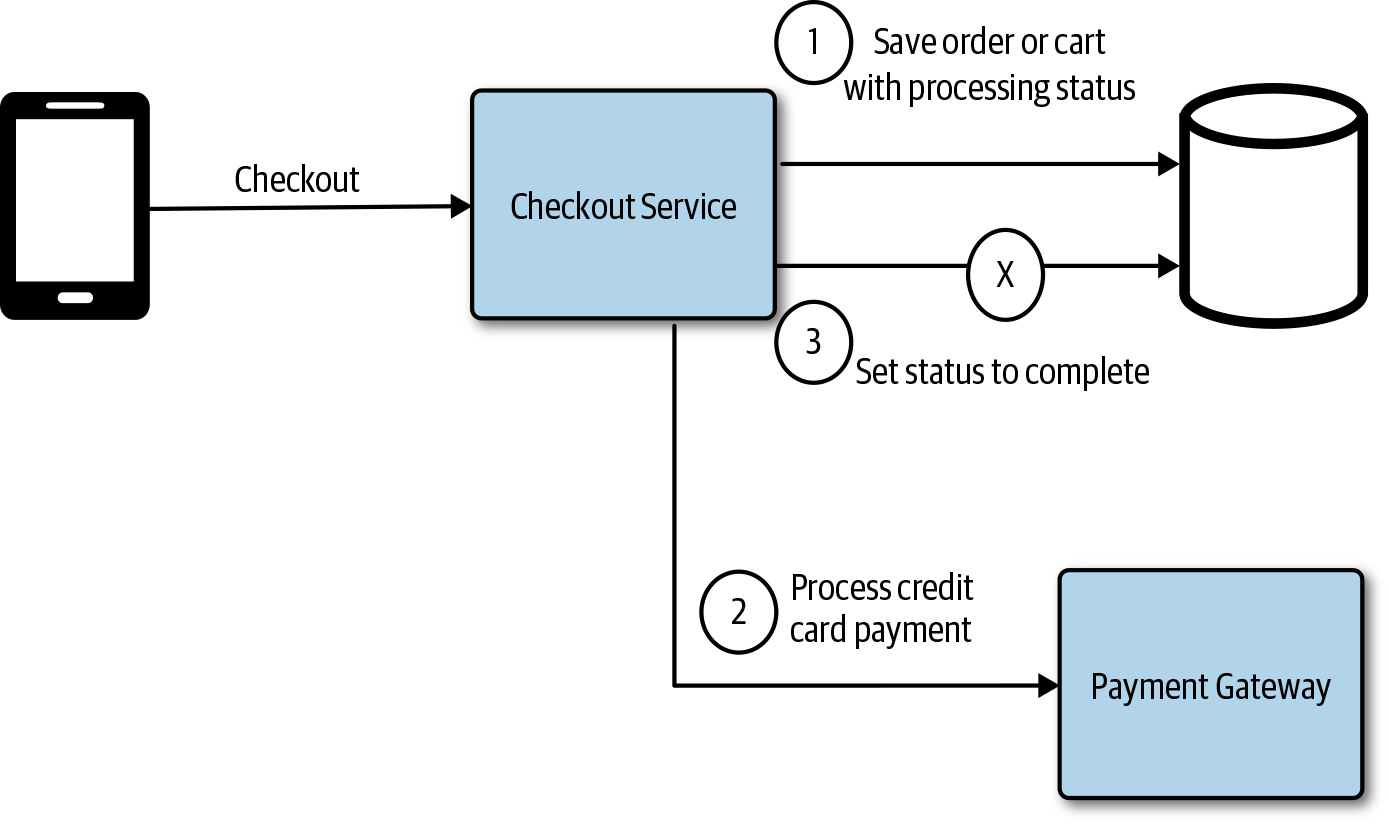

Another approach might be to save the order or cart with a status of processing, then make the call to the payment gateway to process the credit card payment, and finally, update the status of the order. Figure 4-6 demonstrates how if we fail to update the order status, at least we have the record of an order submitted and the intention to process it. If the payment gateway service offered a notification service like a webhook callback, we could configure that to ensure that the status was accurate.

Figure 4-6. Failing to update order status

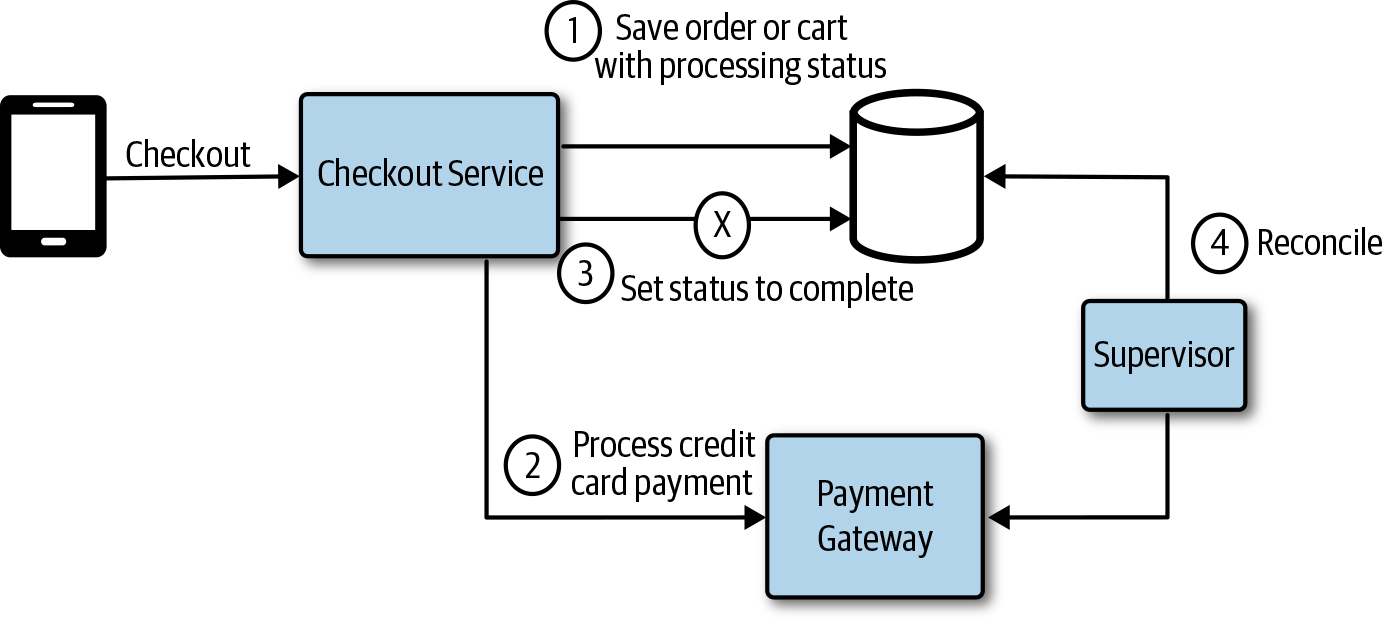

In Figure 4-7, a supervisor is added to monitor the order database for processing transactions that have not completed and reconciles the state. The supervisor could be a simple function that’s triggered at a specific interval.

Figure 4-7. A supervisor service monitors transactions for errors

You can use this approach—using a supervisor and setting status—in many different ways to monitor systems and databases for consistency and take action to correct them or generate a notification of the issue.
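The supervisor can be as small as one function run on a schedule. In this sketch, `payment_succeeded` is a hypothetical callable standing in for a lookup against the payment gateway, the order records are plain dictionaries, and the five-minute timeout is an arbitrary choice for the example.

```python
def reconcile(orders, payment_succeeded, now, timeout_seconds=300):
    """Resolve orders stuck in 'processing' for longer than the timeout."""
    reconciled = []
    for order_id, order in orders.items():
        stuck = (
            order["status"] == "processing"
            and now - order["updated_at"] > timeout_seconds
        )
        if not stuck:
            continue
        # Ask the (hypothetical) payment gateway what actually happened.
        order["status"] = "paid" if payment_succeeded(order_id) else "failed"
        order["updated_at"] = now
        reconciled.append(order_id)
    return reconciled


orders = {
    "1001": {"status": "processing", "updated_at": 0},    # stuck
    "1002": {"status": "processing", "updated_at": 900},  # still in flight
    "1003": {"status": "paid", "updated_at": 0},          # already settled
}
charged = {"1001"}  # order IDs the gateway reports as charged

reconciled = reconcile(orders, lambda order_id: order_id in charged, now=1000)
```

Only the order that has been in the processing state beyond the timeout is touched; recent and completed orders are left alone.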

Compensating Transactions

Traditional distributed transactions are not commonly used in today’s cloud native applications, and are not always available. There are situations for which transactions are necessary to maintain consistency across services or datastores. For example, a consumer posts some data with a file to an API requiring the application to write the file to object storage and some data to a document database. If we write the file to object storage and then fail when writing to the database, for any reason, we have a potentially orphaned file in object storage if the only way to find it is through a query on the database and reference. This is a situation in which we want to treat writing the file and the database record as a transaction; if one fails, both should fail. The file then should be removed to compensate for the failed database write. This is essentially what a compensating transaction does. A logical set of operations needs to complete; if one of the operations fails, we might need to compensate the ones that succeeded.
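The file upload example can be sketched with two dictionaries standing in for object storage and the document database. The `save_upload` function and its `fail_db_write` flag (which simulates the database failure) are made up for this illustration.

```python
def save_upload(object_store, database, key, file_bytes, metadata, fail_db_write=False):
    """Write a file and its record; undo the file write if the record fails."""
    object_store[key] = file_bytes  # step 1: write the file to object storage
    try:
        if fail_db_write:
            raise OSError("simulated database failure")
        database[key] = metadata    # step 2: write the record to the database
    except OSError:
        # Compensate for step 1 so no orphaned file is left behind.
        object_store.pop(key, None)
        return False
    return True


object_store, database = {}, {}
ok = save_upload(object_store, database, "report.pdf", b"%PDF", {"owner": "xyz"})
failed = save_upload(
    object_store, database, "photo.png", b"\x89PNG", {"owner": "xyz"}, fail_db_write=True
)
```

The compensating step can itself fail in a real system, which is one reason to pair this technique with a supervisor that looks for leftovers.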

Note

You should avoid service coordination. In many cases, you can avoid complex transaction coordination by designing for eventual consistency and using techniques like CDC.

Extract, Transform, and Load

The need to move and transform data for business intelligence (BI) is quite common. Businesses have been using Extract, Transform, and Load (ETL) platforms for a long time to move data from one system to another. Data analytics is becoming an important part of every business, large and small, so it should be no surprise that ETL platforms have become increasingly important. Data has become spread out across more systems and analytics tools have become much more accessible. Everyone can take advantage of data analytics, and there’s a growing need to move the data into a location for performing data analysis, like a data lake or data warehouse. You can use ETL to get the data from these operational data systems into a system to be analyzed. ETL is a process that comprises the following three different stages:

- Extract

-

Data is extracted or exported from business systems and data storage systems, legacy systems, operational databases, external services, and even Enterprise Resource Planning (ERP) or Customer Relationship Management (CRM) systems. When extracting data from the various sources, it’s important to determine the velocity, how often the data is extracted from each source, and the priority across the various sources.

- Transform

-

Next, the extracted data is transformed; this would typically involve a number of data cleansing, transformation, and enrichment tasks. The data can be processed off a stream and is often stored in an interim staging store for batch processing.

- Load

-

The transformed data then is loaded into the destination and can be analyzed for BI.
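The three stages can be sketched with nothing more than the Python standard library. The CSV content, the `orders` table, and the function names below are made up for illustration; a managed ETL service would replace each stage with its own connectors and job definitions.

```python
import csv
import io
import sqlite3

# Hypothetical export from an operational system, including one bad row.
RAW_ORDERS_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,not-a-number
3,carol,7.25
"""


def extract(csv_text):
    """Extract: read rows from an exported CSV document."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: cleanse names, parse amounts, and drop rows that fail parsing."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        customer = row["customer"].strip().lower()
        cleaned.append((int(row["order_id"]), customer, amount))
    return cleaned


def load(conn, rows):
    """Load: write the transformed rows into the analytics table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(csv_text, conn):
    """Run the three stages in order against a destination connection."""
    load(conn, transform(extract(csv_text)))
```

Calling `run_etl(RAW_ORDERS_CSV, sqlite3.connect(":memory:"))` loads the two valid rows and silently drops the malformed one; a real pipeline would more likely route rejected rows to a separate location for review.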

All of the major cloud providers offer managed ETL services, like AWS Glue, Azure Data Factory, and Google Cloud Dataflow. Moving and processing data from one source to another is increasingly important and common in today’s cloud native applications.

Microservices and Data Lakes

One challenge of dealing with decentralized data in a microservices architecture is the need to perform reporting or analysis across data in multiple services. Some reporting and analytics requirements will need the data from the services to be in a common datastore.

Note

It might not be necessary to move the data in order to perform the required analysis and reporting across all of the data. Some or all of the analysis can be performed on each of the individual datastores in conjunction with some centralized analysis tasks on the results.

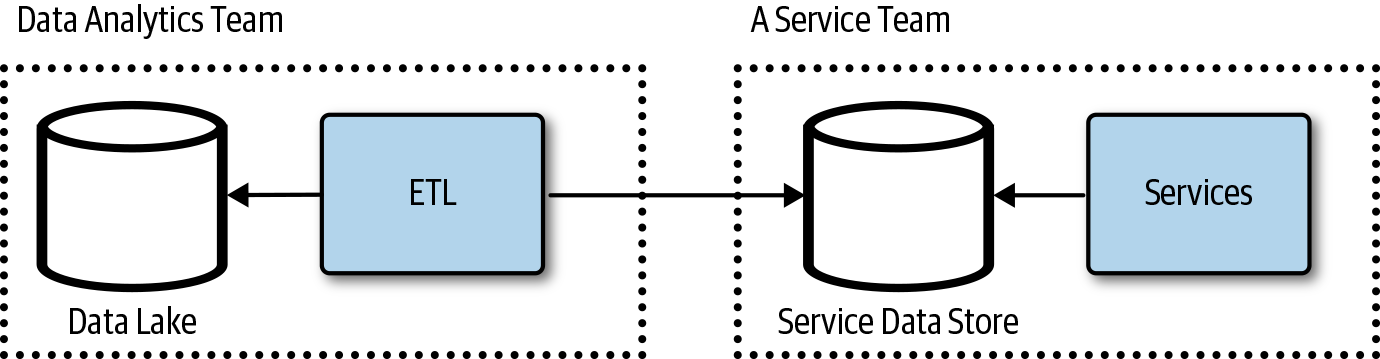

Having each service work from a shared or common database will, however, violate one of the microservices principles and potentially introduce coupling between the services. A common way to approach this is through data movement and aggregating the data into a location for a reporting or analytics team. In Figure 4-8, data from multiple microservices datastores is aggregated into a centralized database in order to deliver the necessary reporting and analytics requirements.

Figure 4-8. Data from multiple microservices aggregated in a centralized datastore

The data analytics or reporting team will need to determine how to get the data from the various service teams that it requires for the purpose of reporting without introducing coupling. There are a number of ways to approach this, and it will be important to ensure loose coupling is maintained, allowing the teams to remain agile and deliver value quickly.

The individual services team could give the data analytics teams read access to the database and allow them to replicate the data, as depicted in Figure 4-9. This would be a very quick and easy approach, but the service team does not control when or how much load the data extraction will put on the store, causing potential performance issues. This also introduces coupling, and it’s likely that the service teams then will need to coordinate with the data analytics team when making internal schema changes. The ETL load on the database adversely affecting service performance can be addressed by giving the data analytics team access to a read replica instead of the primary data. It might also be possible to give the data analytics team access to a view on the data instead of the raw documents or tables. This would help to mitigate some of the coupling concerns.

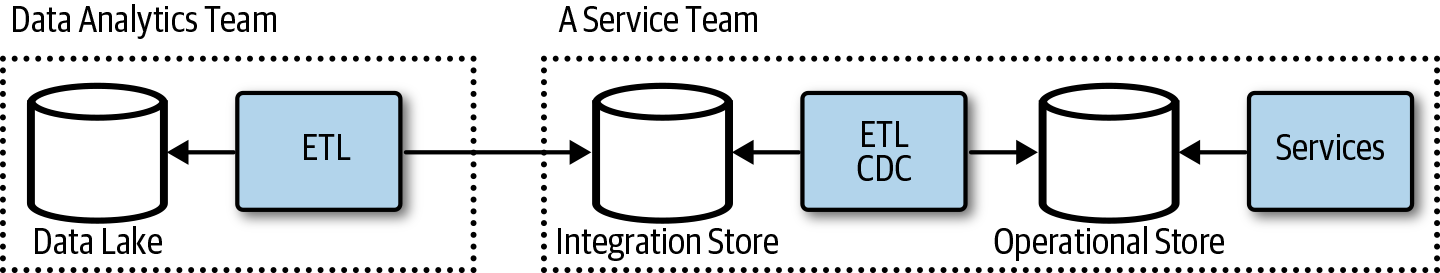

Figure 4-9. The data analytics team consumes data directly from the service team’s database

This approach can work in the early phases of the application with a handful of services, but it will be challenging as the application and teams grow. Another approach is to use an integration datastore. The service team provisions and maintains a datastore for internal integrations, as shown in Figure 4-10. This allows the service team to control what data is exposed in the integration repository and the shape of that data. This integration repository should be managed like an API, documented and versioned. The service team could run ETL jobs to maintain the database or use CDC and treat it like a materialized view. The service team could make changes to its operational store without affecting the other teams. The service team would be responsible for the integration store.

Figure 4-10. Database as an API

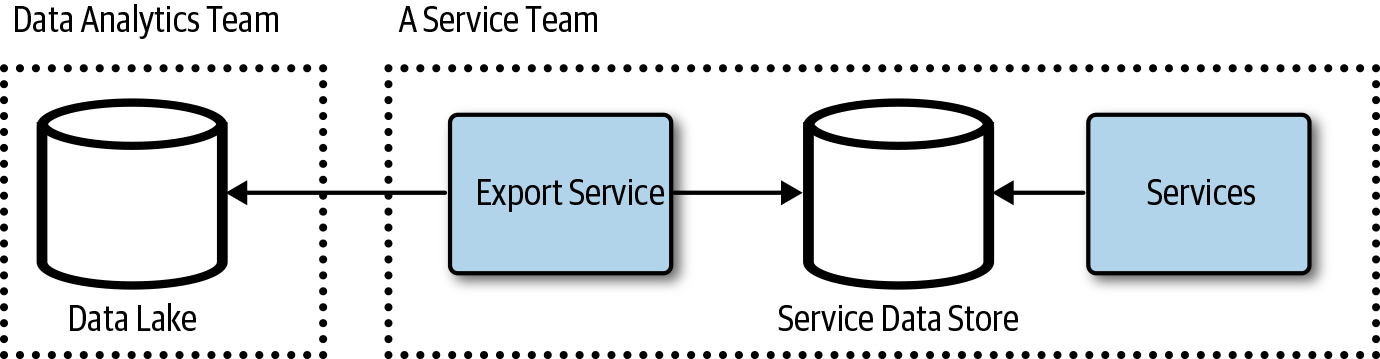

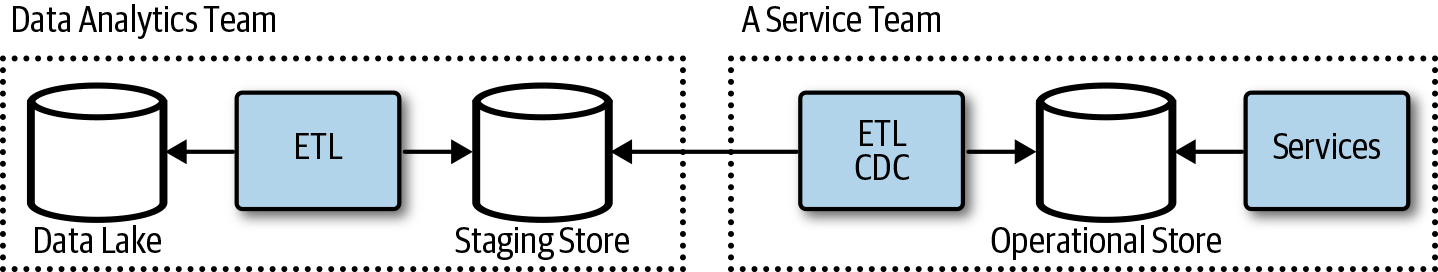

This could be turned around such that a service consumer, like the data analytics team, asks a service team to export or write data to the data lake, as illustrated in Figure 4-11, or to a staging store, as in Figure 4-12. The service teams support replication of data, logs, or data exports to a client-provided location as part of the service features and API. The data analytics team would provision a store or location in a datastore for each service team. The data analytics team then subscribes to data needed for aggregated analytics.

Figure 4-11. Service team data export service API

Figure 4-12. Service teams write to a staging store

It’s not uncommon for services to support data exports. The service implementation would define what export format and protocols are part of its API. This, for example, would be a configuration for an object storage location and credentials to which to send nightly exports, or maybe a webhook to which to send batches of changes. A service consumer such as the data analytics team would have access to the service API, allowing it to subscribe to data changes or exports. The team could send locations and credentials to which to either dump export files or send events.

Client Access to Data

Client applications generally do not have direct access to the datastores in most applications built today. Data is commonly accessed through a service that’s responsible for performing authorizations, auditing, validation, and transformation of the data. The service is usually responsible for carrying out other functions, although in many data-centric applications, a large part of the service implementation simply handles data read and write operations.

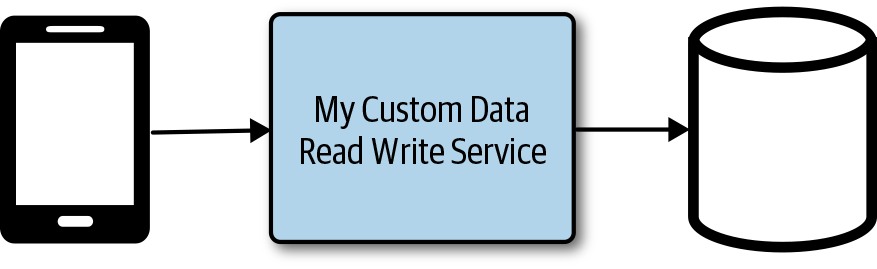

Even a simple data-centric application generally requires you to build and operate a service that performs authentication, authorization, logging, transformation, and validation of data. The service might do little else, but it needs to control who can access what within the datastore and validate what’s being written. Figure 4-13 shows a typical frontend application calling a backend service that reads and writes to a single database. This is a common architecture for many applications today.

Figure 4-13. Client application with a backend service and database

Restricted Client Tokens (Valet-Key)

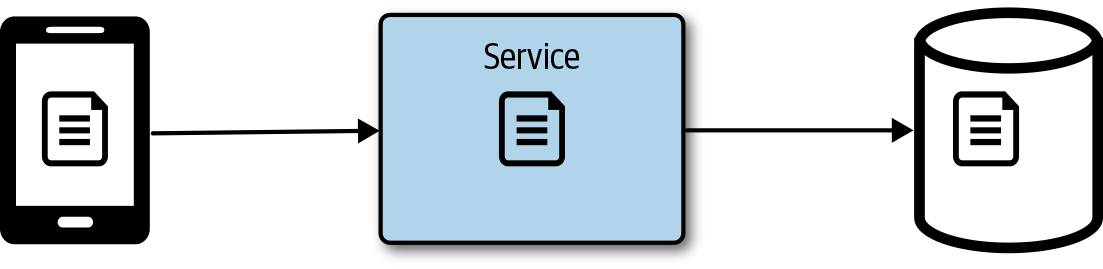

A service can create and return a token to a consumer that has limited use. This can actually be implemented using OAuth or even a custom cryptographically signed policy. The valet key is commonly used as a metaphor to explain how OAuth works and is a commonly used cloud design pattern. The token returned might be able to access only a specific data item for a limited period of time or upload a file to a specific location in a datastore. This can be a convenient way to offload processing from a service, reducing the cost and scale of the service and delivering better performance. In Figure 4-14, a file is uploaded to a service that writes the file to storage.

Figure 4-14. Client uploading a file that’s passed through the service

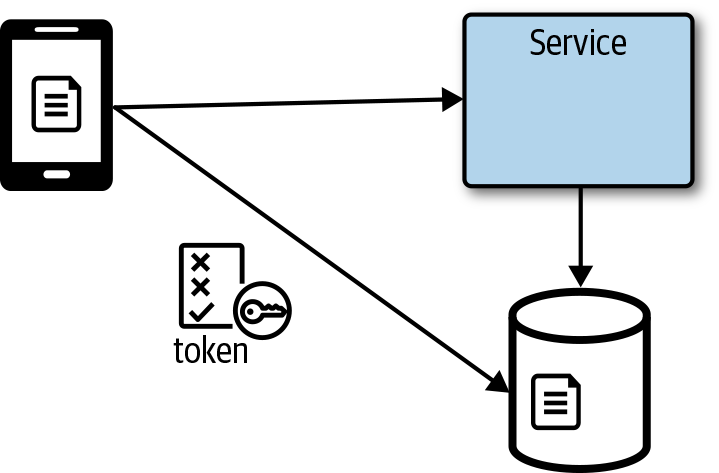

Instead of streaming a file through the service, it can be much more efficient to return a token to the client along with the location at which it can read or upload the file directly. In Figure 4-15, the client requests a token and a location from the service, which then generates a token with some policies. The token policy can restrict the location to which the file can be uploaded, and it’s a best practice to set an expiration so that the token cannot be used at some later time. The token should follow the principle of least privilege, granting the minimum permissions necessary to complete the task. In Microsoft Azure Blob Storage, the token is referred to as a shared access signature (SAS), and in Amazon S3, this would be a presigned URL. After the file is uploaded, an object storage function could be used to update the application state.
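To make the idea of a restricted token concrete, here is a minimal sketch of a custom cryptographically signed policy built with Python’s standard `hmac` module. It is not the format used by Azure shared access signatures or Amazon S3 presigned URLs; the token layout, the `SECRET_KEY` value, and the function names are assumptions made for illustration only.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical signing key; in practice it is held only by the issuing service.
SECRET_KEY = b"demo-secret-do-not-use"


def issue_token(path, permission, ttl_seconds, now=None):
    """Create a signed token limited to one path, one permission, and an expiry."""
    now = time.time() if now is None else now
    policy = {"path": path, "perm": permission, "exp": now + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(policy, sort_keys=True).encode())
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify_token(token, path, permission, now=None):
    """Return True only if the signature is valid and the policy allows the request."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.split(".")
    except ValueError:
        return False
    expected = hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()
    # Check the signature before trusting anything inside the payload.
    if not hmac.compare_digest(expected, signature):
        return False
    policy = json.loads(base64.urlsafe_b64decode(payload))
    return (
        policy["path"] == path
        and policy["perm"] == permission
        and now < policy["exp"]
    )
```

A service would call something like `issue_token("uploads/user-1/photo.png", "write", 300)` and hand the result to the client; the storage layer then runs the equivalent of `verify_token` before accepting the upload. The short lifetime and single-path scope are what keep the token aligned with the principle of least privilege.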

Figure 4-15. The client gets a token and path from a service to upload directly to storage

Database Services with Fine-Grained Access Control

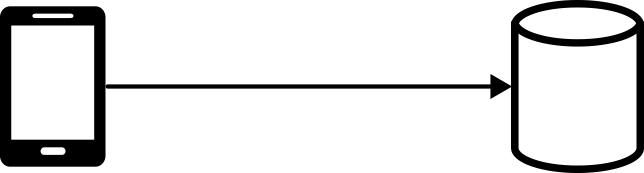

Some databases provide fine-grained access control to data in the database. These database services are sometimes called a Backend as a Service (BaaS) or Mobile Backend as a Service (MBaaS). A full-featured MBaaS will generally offer more than just data storage, given that mobile applications often need identity management and notification services as well. This almost feels like we have circled back to the days of the old thick-client applications. Thankfully, data storage services have evolved so that it’s not exactly the same. Figure 4-16 presents a mobile client connecting to a database service without having to deploy and manage an additional API. If there’s no need to ship a custom API, this can be a great way to quickly get an application out with low operational overhead. Careful attention is needed when releasing updates and testing the security rules to ensure that only the appropriate people are able to access the data.

Figure 4-16. A mobile application connecting to a database

Databases such as Google’s Cloud Firestore allow you to apply security rules that provide access control and data validation. Instead of building a service to control access and validate requests, you write security rules and validation. A user is required to authenticate with the Firebase Authentication service, which can federate to other identity providers, like Microsoft’s Azure Active Directory services. After a user is authenticated, the client application can connect directly to the database service and read or write data, provided the operations satisfy the defined security rules.

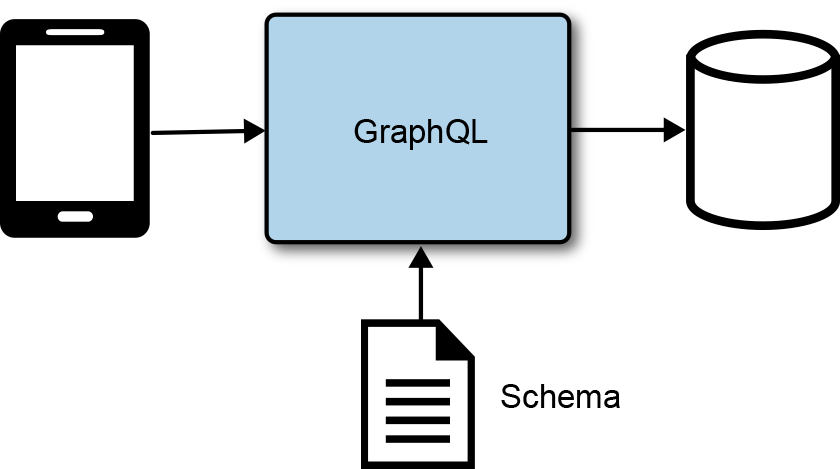

GraphQL Data Service

Instead of building and operating a custom service to manage client access to data, you can deploy and configure a GraphQL server to provide clients access to data. In Figure 4-17, a GraphQL service is deployed and configured to handle authorization, validation, caching, and pagination of data. Fully managed GraphQL services, like AWS AppSync, make it extremely easy to deploy a GraphQL-based backend for your client services.

Note

GraphQL is neither a database query language nor storage model; it’s an API that returns application data based on a schema that’s completely independent of how the data is stored.

Figure 4-17. GraphQL data access service

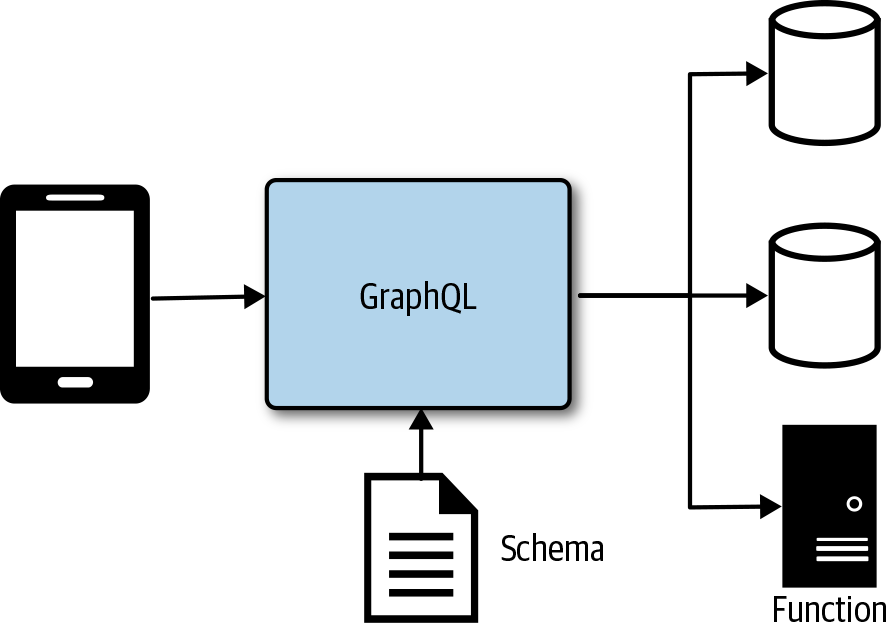

GraphQL is flexible and configurable through a GraphQL specification. You can configure it with multiple providers, and even configure it to execute services either running in a container or deployed as functions that are invoked on request, as shown in Figure 4-18. GraphQL is a great fit for data-centric backends with the occasional service method that needs to be invoked. Services like GitHub are actually moving their entire API over to GraphQL because this provides more flexibility to the consumers of the API. GraphQL can be helpful in addressing the over-fetching and chattiness that’s sometimes common with REST-based APIs.

GraphQL uses a schema-first approach, defining nodes (objects) and edges (relationships) as part of a schema definition for the graph structure. Consumers can query the schema for details about the types and relationships across the objects. One benefit of GraphQL is that it makes it easy to define the data you want, and only the data you want, without having to make multiple calls or fetch data that’s not needed. The specification supports authorizations, pagination, caching, and more. This can make it quick and easy to create a backend that handles most of the features needed in a data-centric application. For more information, visit the GraphQL website.

Figure 4-18. GraphQL service with multiple providers and execution

Fast Scalable Data

A large majority of application scaling and performance problems can be attributed to the databases. This is a common point of contention that can be challenging to scale out while meeting an application’s data-quality requirements. In the past, it was too easy to put logic into a database in the form of stored procedures and triggers, increasing compute requirements on a system that was notoriously expensive to scale. We learned to do more in the application and to rely on the database for little more than storing data.

Tip

There are very few reasons to put logic in a database. Don’t do it. If you go there, make sure that you understand the trade-offs. It might make sense in a few cases and it might improve performance, but likely at the cost of scalability.

Scaling anything and everything can be achieved through replication and partitioning. Replicating the data to a cache, materialized view, or read-replica can help increase the scalability, availability, and performance of data systems. Partitioning data either horizontally through sharding, vertically based on data model, or functionally based on features will help improve scalability by distributing the load across systems.

Sharding Data

Sharding data is about dividing the datastore into horizontal partitions, known as shards. Each shard contains the same schema, but holds a subset of the data. Sharding often is used to scale a system by distributing the load across multiple data storage systems.

When sharding data, it’s important to determine how many shards to use and how to distribute the data across the shards. Deciding how to distribute the data across shards heavily depends on the application’s data. It’s important to distribute the data in such a way that one single shard does not become overloaded and receive all or most of the load. Because the data for each shard or partition is commonly in a separate datastore, it’s important that the application can connect to the appropriate shard (partition or database).
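One common way to distribute data is to hash a key and take the result modulo the number of shards. The sketch below assumes that approach; `shard_for` and `ShardedStore` are hypothetical names, and plain dictionaries stand in for the separate datastores that would back each shard.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a key to a shard index using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    cryptographic digest is used to get the same answer on every host.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Routes reads and writes to the shard that owns each key."""

    def __init__(self, shard_count):
        # Each dict stands in for a connection to a separate datastore.
        self.shards = [dict() for _ in range(shard_count)]

    def put(self, key, value):
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key):
        return self.shards[shard_for(key, len(self.shards))].get(key)
```

A simple modulo scheme like this remaps most keys when the number of shards changes, which is one reason the shard count and distribution strategy deserve attention up front. Techniques such as consistent hashing or a lookup table of key ranges are alternatives when shards need to be added later.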

Caching Data

Data caching is important to scaling applications and improving performance. Caching is really just about copying the data to a faster storage medium like memory, and generally closer to the consumer. There might even be varying layers of cache; for example, data can be cached in the memory of the client application and in a shared distributed cache on the backend.

When working with a cache, one of the biggest challenges is keeping the cached data synchronized with the source. When the source data changes, it is often necessary to either invalidate or update the cached copy of the data. Sometimes, the data rarely changes; in fact, in some cases the data will not change through the lifetime of the application process, making it possible to load this static data into a cache when the application starts and then not need to worry about invalidation. Here are some common approaches for cache invalidation and updates:

- Rely on TTL configurations by setting a value that removes a cached item after a configurable expiration time. The application or a service layer then would be responsible for reloading the data when it does not find an item in the cache.

- Use CDC to update or invalidate a cache. A process subscribes to a datastore change stream and is responsible for updating the cache.

- Application logic is responsible for invalidating or updating the cache when it makes changes to the source data.

- Use a passthrough caching layer that’s responsible for managing cached data. This can remove the concern of the data caching implementation from the application.

- Run a background service at a configurable interval to update a cache.

- Use the data replication features of the database or another service to replicate the data to a cache.

- Caching layer renews cached items based on access and available cache resources.
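The first and third approaches in the list, TTL expiration and application-driven invalidation, can be sketched together. `TtlCache` is a hypothetical in-process helper, and the `loader` callable stands in for whatever reads from the source datastore; a shared distributed cache would follow the same logic over the network.

```python
import time


class TtlCache:
    """Entries expire after ttl_seconds and are reloaded from the source on a miss."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader      # function that reads the value from the source
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so expiry can be tested without sleeping
        self._entries = {}         # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None and self._clock() < entry[1]:
            return entry[0]        # cache hit that has not yet expired
        value = self._loader(key)  # miss or expired: go back to the source
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key):
        """Called by application logic after it changes the source data."""
        self._entries.pop(key, None)
```

The TTL bounds how stale a value can become, while `invalidate` lets the code path that writes to the source remove a stale entry immediately instead of waiting for expiration.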

Content Delivery Networks

A content delivery network (CDN) is a group of geographically distributed datacenters, also known as points of presence (POPs). A CDN often is used to cache static content closer to consumers. This reduces the latency between the consumer and the content or data needed. Following are some common CDN use cases:

- Improve website loading times by placing content closer to the consumer.

- Improve application performance of an API by terminating traffic closer to the consumer.

- Speed up software downloads and updates.

- Increase content availability and redundancy.

- Accelerate file upload through CDN services like Amazon CloudFront.

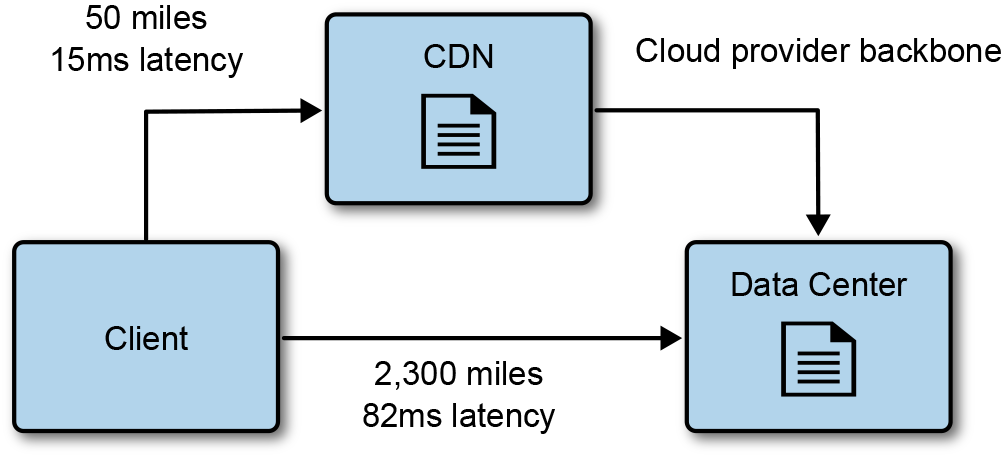

The content is cached, so a copy of it is stored at the edge locations and will be used instead of the source content. In Figure 4-19, a client is fetching a file from a nearby CDN with a much lower latency of 15 ms as opposed to the 82 ms latency between the client and the source location of the file, also known as the origin. Caching and CDN technologies enable faster retrieval of the content, and scale by removing load from the origin as well.

Figure 4-19. A client accesses content cached in a CDN closer to the client

The content cached in a CDN is usually configured with an expiration date-time, also known as TTL properties. When the expiration date-time is exceeded, the CDN reloads the content from the origin, or source. Many CDN services allow you to explicitly invalidate content based on a path; for example, /img/*. Another common technique is to change the name of the content by adding a small hash to it and updating the reference for consumers. This technique is commonly used for web application bundles like the JavaScript and CSS files used in a web application.
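The renaming technique can be illustrated with a short function that derives a file name from the file’s content. The function name, the choice of SHA-256, and the eight-character hash length are assumptions for this sketch; web bundlers typically do the equivalent as part of the build.

```python
import hashlib
from pathlib import PurePosixPath


def hashed_name(path, content, length=8):
    """Return the path with a short content hash inserted before the extension."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

Because the name changes whenever the content changes, a new release references a new URL that the CDN has never cached, and the old copy can simply be left to expire. Unchanged files keep their names, so they continue to be served from the cache.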

Here are some considerations regarding CDN cache management:

- Use content expiration to refresh content at specific intervals.

- Change the name of the resource by appending a hash or version to the content.

- Explicitly expire the cache either through the management console or the API.

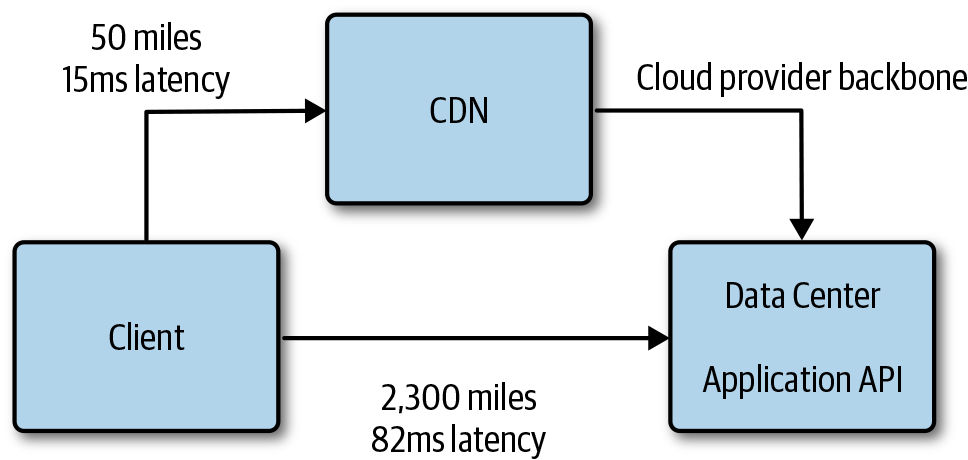

CDN vendors continue adding more features, making it possible to push more and more content, data, and services closer to the consumers, improving performance, scale, security, and availability. Figure 4-20 demonstrates a client calling a backend API with the request being routed through the CDN and over the cloud provider’s backbone connection between datacenters. This is a much faster route to the API with lower latency, improving the Secure Sockets Layer (SSL) handshake between the client and the CDN as well as the API request.

Figure 4-20. Accelerated access to a backend API

Here are a few additional features to consider when using CDN technologies:

- Rules or behaviors: It can be necessary to configure routing, add response headers, or enable redirects based on request properties like SSL.

- Application logic: Some CDN vendors like Amazon CloudFront allow you to run application logic at the edge, making it possible to personalize content for a consumer.

- Custom name: It’s often necessary to use a custom name with SSL, especially when serving a website through a CDN.

- File upload acceleration: Some CDN technologies are able to accelerate file upload by reducing the latency to the consumer.

- API acceleration: As with file upload, it’s possible to accelerate APIs through a CDN by reducing the latency to the consumer.

Note

Use a CDN as much as possible, pushing as much as you can over the CDN.

Analyzing Data

The data created and stored continues to grow at exponential rates. The tools and technologies used to extract information from data continue to evolve to support the growing demand to derive insights from the data, making business insights through complex analytics available to even the smallest businesses.

Streams

Businesses need to reduce their time to insights in order to gain an edge in today’s competitive fast-moving markets. Analyzing the data streams in real time is a great way to reduce this latency. Streaming data-processing engines are designed for unbounded datasets. Unlike data in a traditional data storage system in which you have a holistic view of the data at a specific point in time, streams have an entity-by-entity view of the data over time. Some data, like stock market trades, click streams, or sensor data from devices, comes in as a stream of events that never ends. Stream processing can be used to detect patterns, identify sequences, and look at results. Some events, like a sudden transition in a sensor, are most valuable at the moment they happen, and their value diminishes over time; processing them as they arrive enables a business to react quickly to these important changes. Detecting a sudden drop in inventory, for example, allows a company to order more stock and avoid some missed sales opportunities.
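As a small illustration of processing events one at a time, the generator below flags a reading that falls well below the average of the readings just before it, in the spirit of the inventory example. The function name, window size, and threshold are assumptions; a streaming engine would add partitioning, time-based windows, and fault tolerance on top of the same idea.

```python
from collections import deque


def detect_sudden_drops(readings, window_size=3, drop_ratio=0.5):
    """Yield (index, value, baseline) when a reading falls below
    drop_ratio times the average of the previous window_size readings."""
    window = deque(maxlen=window_size)
    for index, value in enumerate(readings):
        if len(window) == window_size:
            baseline = sum(window) / window_size
            if value < baseline * drop_ratio:
                yield index, value, baseline
        # Only a fixed-size window is kept, so memory use stays bounded.
        window.append(value)
```

Because the function consumes any iterable and keeps only the last few values, it works equally well on a list or on a generator that never finishes, which is the defining property of an unbounded dataset.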

Batch

Unlike stream processing, which is done in real time as the data arrives, batch processing is generally performed on very large bounded sets of data as part of exploring a data science hypothesis, or at specific intervals to derive business insights. Batch processing is able to process all or most of the data and can take minutes or hours to complete, whereas stream processing is completed in a matter of seconds or less. Batch processing works well with very large volumes of data, which might have been stored over a long period of time. This could be data from legacy systems or simply data for which you’re looking for patterns over many months or years.

Data analytics systems typically use a combination of batch and stream processing. The approaches to processing streams and batches have been captured as some well-known architecture patterns. The Lambda architecture is an approach in which applications write data to an immutable stream. Multiple consumers read data from the stream independent of one another. One consumer is concerned with processing data very quickly, in near real time, whereas the other consumer is concerned with processing in batch at a lower velocity across a larger set of data, or with archiving the data to object storage.

Data Lakes on Object Storage

Data lakes are large, scalable, and generally centralized datastores that allow you to store structured and unstructured data. They are commonly used to run map-and-reduce jobs for analyzing vast amounts of data. The analytics jobs are highly parallelizable so the analysis of the data can easily be distributed across the store. Hadoop has become the popular tool for data lakes and big data analysis. Data is commonly stored on a cluster of computers in the Hadoop Distributed File System (HDFS), and various tools in the Hadoop ecosystem are used to analyze the data. All of the major public cloud vendors provide managed Hadoop clusters for storing and analyzing the data. The clusters can become expensive, requiring a large number of very big machines. These machines might be running even when there are no jobs to run on the cluster. It is possible to shut down these clusters and maintain state for cost savings when they are not in use and resume the clusters during periods of data loading or analysis.

It’s becoming increasingly common to use fully managed services that allow you to pay for the data loaded in the service and pay-per-job execution. These services not only can reduce operational costs related to managing these services, but also can result in big savings when running the occasional analytics jobs. Cloud vendors have started providing services that align with a serverless cost model for provisioning data lakes. Azure Data Lake and Amazon S3–based AWS Lake Formation are some examples of this.

Data Lakes and Data Warehouses

Data lakes are often compared and contrasted with data warehouses because they are similar, although in large organizations it’s not uncommon to see both used. Data lakes are generally used to store raw and unstructured data, whereas the data in a data warehouse has been processed and organized into a well-defined schema. It’s common to write data into a data lake and then process it from the data lake into a data warehouse. Data scientists are able to explore and analyze the data to discover trends that can help define what is processed into a data warehouse for business professionals.

Distributed Query Engines

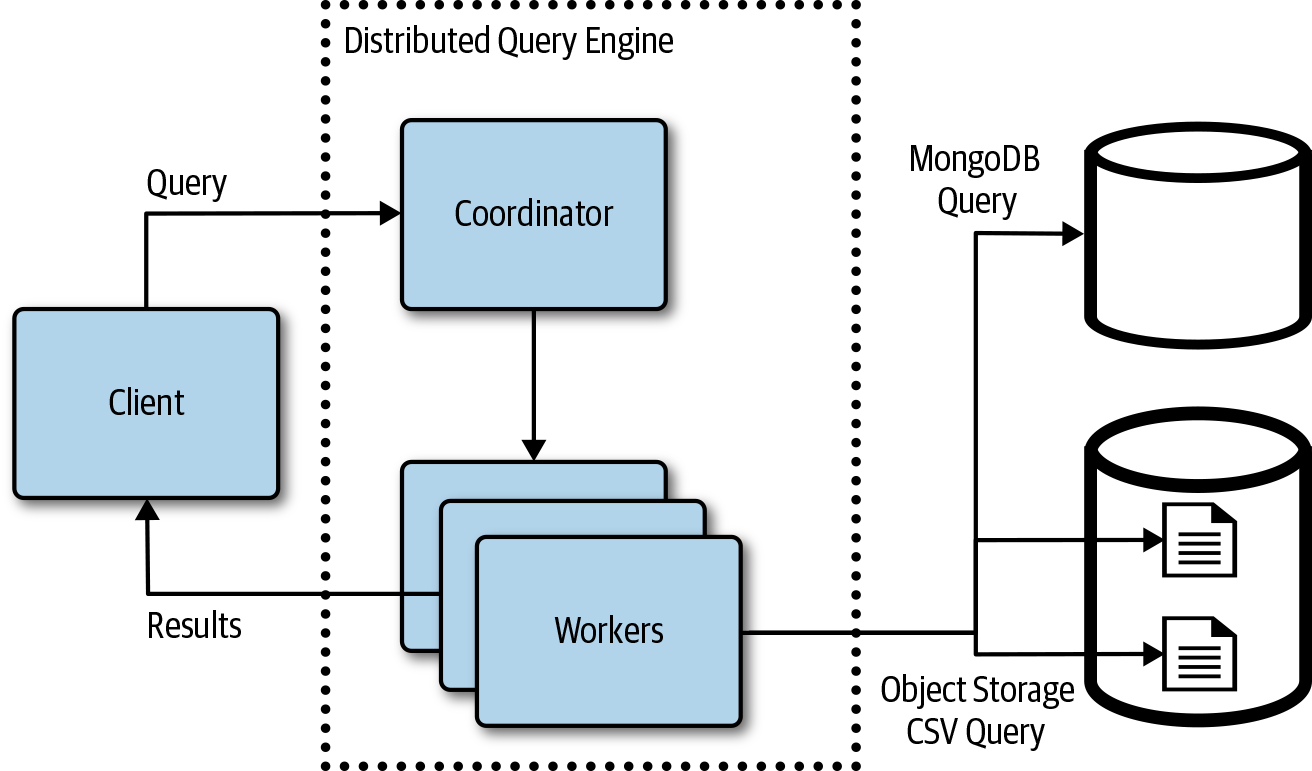

Distributed query engines are becoming increasingly popular, supporting the need to quickly analyze data stored across multiple data systems. Distributed query engines separate the query engine from the storage engine and use techniques to distribute the query across a pool of workers. A number of open source query engines have become popular in the market: Presto, Spark SQL, Drill, and Impala, to name a few. These query engines utilize a provider model to access various data storage systems and partitions.

Hadoop jobs were designed for processing large amounts of data through jobs that would run for minutes or even hours crunching through the vast amounts of data. Although a structured query language (SQL)–like interface exists in tools such as Hive, the queries are translated to jobs submitted to a job queue and scheduled. A client would not expect that the results from a job would return in minutes or seconds. It is, however, expected that distributed query engines like Facebook’s Presto would return results from a query in a matter of minutes or even seconds.

At a high level, a client submits a query to the distributed query engine. A coordinator is responsible for parsing the query and scheduling work to a pool of workers. The pool of workers then connects to the datastores needed to satisfy the query and fetches the results, and the results from each of the workers are merged. The query can run against a combination of datastores: relational, document, object, file, and so on. Figure 4-21 depicts a query that fetches information from a MongoDB database and some comma-separated values (CSV) files stored in an object store like Amazon S3, Azure Blob Storage, or Google Cloud Storage.

The cloud makes it possible to quickly and easily scale workers, allowing the distributed query engine to handle query demands.
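The coordinator-and-workers flow can be sketched with a thread pool. This is a toy model rather than how Presto or any other engine is implemented: the two data sources, the filter, and all of the names below are made up, with an in-memory list standing in for a document database and a string standing in for a CSV file in object storage.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data sources: a document collection and a CSV file body.
DOCUMENTS = [{"customer": "alice", "total": 30}, {"customer": "bob", "total": 5}]
CSV_BODY = "customer,total\ncarol,12\ndave,50\n"


def scan_documents(min_total):
    """Worker task: scan the document source and apply the filter locally."""
    return [d for d in DOCUMENTS if d["total"] >= min_total]


def scan_csv(min_total):
    """Worker task: parse the CSV source and apply the same filter."""
    rows = csv.DictReader(io.StringIO(CSV_BODY))
    return [
        {"customer": r["customer"], "total": int(r["total"])}
        for r in rows
        if int(r["total"]) >= min_total
    ]


def run_query(min_total):
    """Coordinator: schedule one scan per source on a worker pool and merge results."""
    scans = [scan_documents, scan_csv]
    with ThreadPoolExecutor(max_workers=len(scans)) as pool:
        partials = list(pool.map(lambda scan: scan(min_total), scans))
    merged = [row for partial in partials for row in partial]
    return sorted(merged, key=lambda row: row["total"], reverse=True)
```

Each worker filters its own source before returning rows, so only matching data travels back to the coordinator. Adding a source means adding another scan function, which loosely mirrors the provider model these engines use.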

Figure 4-21. Overview of a distributed query engine

Databases on Kubernetes

The dynamic environment of Kubernetes can make it challenging to run data storage systems in a Kubernetes cluster. Kubernetes pods are created and destroyed, and cluster nodes can be added or removed, forcing pods to move to new nodes. Running a stateful workload like a database is much different from running stateless services. Kubernetes has features like StatefulSets and support for persistent volumes to help with deploying and operating databases in a Kubernetes cluster. Most of the durable data storage systems require a disk volume as the underlying persistent storage mechanism, so understanding how to attach storage to pods and how volumes work is important when deploying databases on Kubernetes.

In addition to providing the underlying storage volumes, data storage systems have different routing and connectivity needs as well as hardware, scheduling, and operational requirements. Some of the newer cloud native databases have been built for these more dynamic environments and can take advantage of the environments to scale out and tolerate transient errors.

Note

There are a growing number of operators available to help simplify the deployment and management of data systems on Kubernetes. Operator Hub is a directory listing of operators (https://www.operatorhub.io).

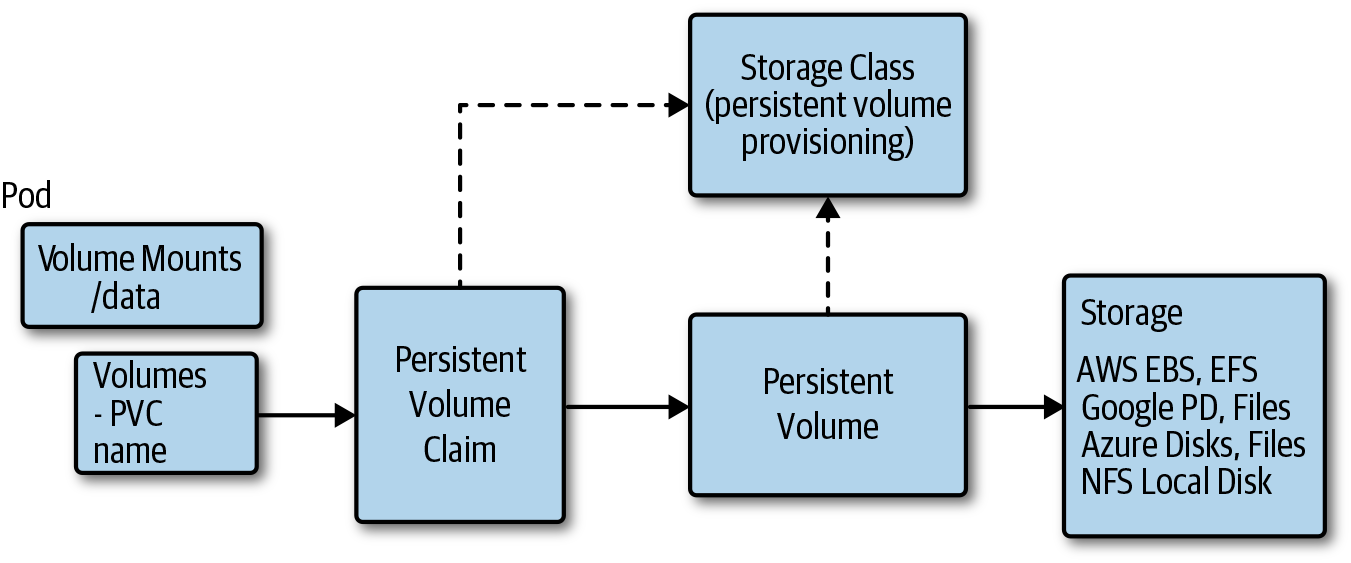

Storage Volumes

A database system like MongoDB runs in a container on Kubernetes and often needs a durable volume with a life cycle different from the container. Managing storage is much different than managing compute. Kubernetes volumes are mounted into pods using persistent volumes, persistent volume claims, and underlying storage providers. Following are some fundamental storage volume terms and concepts:

- Persistent volume: A persistent volume is the Kubernetes resource that represents the actual physical storage service, like a cloud provider storage disk.

- Persistent volume claim: A persistent volume claim is a request for storage, and Kubernetes will assign and associate a persistent volume to it.

- Storage class: A storage class defines storage properties for the dynamic provisioning of a persistent volume.

A cluster administrator will provision persistent volumes that capture the underlying implementation of the storage. This could be a persistent volume to a network-attached file share or cloud provider durable disks. When using cloud provider disks, it’s more likely one or more storage classes will be defined and dynamic provisioning will be used. The storage class will be created with a name that can be used to reference the resource, and the storage class will define a provisioner as well as the parameters to pass to the provisioner. Cloud providers offer multiple disk options with different price and performance characteristics. Different storage classes are often created with the different options that should be available in the cluster.

A pod is going to be created that requires a persistent storage volume so that data is still there when the pod is removed and comes back up on another node. Before creating the pod, a persistent volume claim is created, specifying the storage requirements for the workload. When a persistent volume claim is created and references a specific storage class, the provisioner and parameters defined in that storage class will be used to create a persistent volume that satisfies the persistent volume claim’s request. The pod that references the persistent volume claim is created and the volume is mounted at the path specified by the pod. Figure 4-22 shows a pod with a reference to a persistent volume claim that references a persistent volume. The persistent volume resource and plug-in contain the configuration and implementation necessary to attach the underlying storage implementation.
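The relationship between the three resources can be seen by writing them out. Kubernetes accepts manifests as JSON as well as YAML, so the sketch below builds them as Python dictionaries. The resource names, the `example.com/disk-provisioner` provisioner, its `type` parameter, the 10Gi size, and the use of the `mongo` image with a `/data/db` mount path are all placeholders chosen for illustration; a real cluster would use the provisioner and parameters documented by its storage provider.

```python
import json

# Storage class: names a provisioner and the parameters passed to it.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-disk"},
    "provisioner": "example.com/disk-provisioner",  # placeholder provisioner
    "parameters": {"type": "ssd"},                   # provisioner-specific placeholder
}

# Persistent volume claim: requests storage from the storage class by name.
volume_claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "mongo-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "fast-disk",
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

# Pod: references the claim as a volume and mounts it at a path in the container.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "mongo"},
    "spec": {
        "containers": [
            {
                "name": "mongo",
                "image": "mongo",
                "volumeMounts": [{"name": "data", "mountPath": "/data/db"}],
            }
        ],
        "volumes": [
            {"name": "data", "persistentVolumeClaim": {"claimName": "mongo-data"}}
        ],
    },
}


def to_manifest(*resources):
    """Serialize the resources as a single JSON list document."""
    document = {"apiVersion": "v1", "kind": "List", "items": list(resources)}
    return json.dumps(document, indent=2)
```

The links are all by name: the claim points at the storage class through `storageClassName`, the pod points at the claim through `claimName`, and the container points at the pod volume through the `volumeMounts` name. No persistent volume appears here because, with dynamic provisioning, it is created when the claim is processed.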

Figure 4-22. A Kubernetes pod persistent volume relationship

Note

Some data systems might be deployed in a cluster using ephemeral storage. Do not configure these systems to store data in the container; instead, use a persistent volume mapped to a node’s ephemeral disks.

StatefulSets

StatefulSets were designed to address the problem of running stateful services like data storage systems on Kubernetes. StatefulSets manage the deployment and scaling of a set of pods based on a container specification. StatefulSets provide a guarantee about the order and uniqueness of the pods. The pods created from the specification each have a persistent identifier that is maintained across any rescheduling. The unique pod identity comprises the StatefulSet name and an ordinal starting with zero. So, a StatefulSet named “mongo” and a replica setting of “3” would create three pods named “mongo-0,” “mongo-1,” and “mongo-2,” each of which could be addressed using this stable pod name. This is important because clients often need to be able to address a specific replica in a storage system and the replicas often need to communicate with one another. StatefulSets also create a persistent volume and persistent volume claim for each individual pod, and they are configured such that the disk created for the “mongo-0” pod is bound to the “mongo-0” pod when it’s rescheduled.
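The naming scheme is regular enough to compute. The helper below is a hypothetical illustration that derives, for each replica, the pod name, the DNS name the pod receives through its headless service, and the name of the claim created from a volume claim template. The `default` namespace, the `cluster.local` cluster domain, and the `data` template name are assumed values.

```python
def stateful_pod_identities(
    statefulset_name,
    replicas,
    service_name,
    claim_template="data",
    namespace="default",
    cluster_domain="cluster.local",
):
    """List the stable names associated with each replica of a StatefulSet."""
    identities = []
    for ordinal in range(replicas):
        pod_name = f"{statefulset_name}-{ordinal}"
        identities.append(
            {
                "pod": pod_name,
                # Stable DNS name provided through the headless service.
                "dns": f"{pod_name}.{service_name}.{namespace}.svc.{cluster_domain}",
                # Claim created from the volume claim template for this pod.
                "claim": f"{claim_template}-{pod_name}",
            }
        )
    return identities
```

For the “mongo” example with three replicas and a headless service also named `mongo`, the first entry would be the pod `mongo-0`, reachable at `mongo-0.mongo.default.svc.cluster.local`, with a claim named `data-mongo-0`. Because these names do not change when a pod is rescheduled, replicas can be listed in a database’s cluster configuration ahead of time.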

Note

StatefulSets currently require a headless service, which is responsible for the network identity of the pods and must be created in addition to the StatefulSet.

Affinity and anti-affinity is a feature of Kubernetes that allows you to constrain which nodes pods will run on. Pod anti-affinity can be used to improve the availability of a data storage system running on Kubernetes by ensuring replicas are not running on the same node. If a primary and secondary were running on the same node and that node happened to go down, the database would be unavailable until the pods were rescheduled and started on another node.

Cloud providers offer many different compute instance types that are suited to different types of workloads. Data storage systems will often run better on compute instances that are optimized for disk access, although some might require higher memory instances. The stateless services running in the cluster, however, do not require these specialized instances, which often cost more, and are fine running on general commodity instances. You can add a pool of storage-optimized nodes to a Kubernetes cluster to run the storage workloads that can benefit from these resources. You can use Kubernetes node selection along with taints and tolerations to ensure the data storage systems are scheduled on the pool of storage-optimized nodes and that other services are not.
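The scheduling controls described above all live in the pod specification. The sketch below builds that fragment as a Python dictionary; the function name is hypothetical, and it assumes the storage node pool has been both labeled and tainted with the same key and value (for example, `workload=storage` with the `NoSchedule` effect) and that the database pods carry an `app` label.

```python
def storage_pod_scheduling(app_label, pool_key, pool_value):
    """Build the scheduling-related fields of a pod spec for a storage workload."""
    return {
        # Node selection: only run on nodes labeled as part of the storage pool.
        "nodeSelector": {pool_key: pool_value},
        # Toleration: allow scheduling onto nodes tainted to repel other pods.
        "tolerations": [
            {
                "key": pool_key,
                "operator": "Equal",
                "value": pool_value,
                "effect": "NoSchedule",
            }
        ],
        # Anti-affinity: never place two replicas of this app on the same node.
        "affinity": {
            "podAntiAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": [
                    {
                        "labelSelector": {"matchLabels": {"app": app_label}},
                        "topologyKey": "kubernetes.io/hostname",
                    }
                ]
            }
        },
    }
```

The three settings do different jobs: the node selector pulls the database pods onto the storage pool, the taint on those nodes keeps other workloads off, the toleration lets the database pods through, and the anti-affinity rule spreads replicas across nodes. A call such as `storage_pod_scheduling("mongo", "workload", "storage")` returns a dictionary that could be merged into the pod template of a StatefulSet.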

Given that most data storage systems are not Kubernetes aware, it’s often necessary to create an adapter service that runs alongside the data storage system as a sidecar in its pod. These services are often responsible for injecting configuration or cluster environment settings into the data storage system. For example, if we deployed a MongoDB cluster and needed to scale the cluster with another node, the MongoDB sidecar service would be responsible for adding the new MongoDB pod to the MongoDB cluster.
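
To make the sidecar's job more concrete, the sketch below shows one way the membership decision might be computed. It is a hypothetical pure function, not the code of any actual MongoDB sidecar: it takes a replica set configuration document (with its `members` list of `_id` and `host` entries and an incrementing `version`) plus the hosts discovered in the cluster, and returns the configuration the sidecar would then submit. Discovering the pods and sending the reconfiguration to MongoDB are left out.

```python
import copy


def plan_reconfig(current: dict, discovered_hosts: list) -> dict:
    """Return a new config that adds any discovered host that is not yet a member."""
    new_config = copy.deepcopy(current)  # never mutate the caller's document
    known = {member["host"] for member in new_config["members"]}
    next_id = max((member["_id"] for member in new_config["members"]), default=-1) + 1

    added = False
    for host in discovered_hosts:
        if host not in known:
            new_config["members"].append({"_id": next_id, "host": host})
            known.add(host)
            next_id += 1
            added = True

    if added:
        # Assumption: the sidecar bumps the version itself when it changes the config.
        new_config["version"] = new_config.get("version", 1) + 1
    return new_config


current = {
    "_id": "rs0",
    "version": 1,
    "members": [
        {"_id": 0, "host": "mongo-0.mongo:27017"},
        {"_id": 1, "host": "mongo-1.mongo:27017"},
    ],
}
hosts = ["mongo-0.mongo:27017", "mongo-1.mongo:27017", "mongo-2.mongo:27017"]
planned = plan_reconfig(current, hosts)
print(planned["members"][-1], planned["version"])
```

The function is idempotent: running it again with the same hosts returns the configuration unchanged, which suits a sidecar that reconciles on a timer.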

DaemonSets

A DaemonSet ensures that each node in a group of nodes runs a single copy of a pod. This can be a useful approach to running data storage systems when the system needs to be part of the cluster and use nodes dedicated to the storage system. A pool of nodes would be created in the cluster for the purpose of running the data storage system. A node selector would be used to ensure that the data storage system is scheduled only to these dedicated nodes. Taints and tolerations would be used to ensure that other processes are not scheduled on these nodes. Here are some trade-offs and considerations when deciding between DaemonSets and StatefulSets:

- Pods in a StatefulSet work like any other Kubernetes pods, so they can be scheduled anywhere in the cluster as needed, using available cluster resources.
- StatefulSets generally rely on remote network-attached storage devices.
- DaemonSets offer a more natural abstraction for running a database on a pool of dedicated nodes.
- Discovery and communications will add some challenges that need to be addressed.
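
The DaemonSet arrangement described before the list, a dedicated pool targeted by a node selector and protected by a taint, might be sketched as follows. The manifest is built as a Python dictionary and printed as JSON. The image name, the label `pool=storage`, and the taint `dedicated=storage:NoSchedule` are hypothetical values chosen for the example.

```python
import json


def storage_daemon_set(name: str, image: str, pool_labels: dict,
                       taint_key: str, taint_value: str) -> dict:
    """DaemonSet that runs one pod on every node carrying the pool labels."""
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name},
        "spec": {
            # No replica count: the number of pods follows the number of matching nodes.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "nodeSelector": pool_labels,  # only the dedicated pool
                    "tolerations": [{             # allowed past the pool's taint
                        "key": taint_key,
                        "operator": "Equal",
                        "value": taint_value,
                        "effect": "NoSchedule",
                    }],
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


daemon_set = storage_daemon_set(
    "storage-engine",
    "example.com/storage-engine:1.0",  # hypothetical image
    {"pool": "storage"},
    "dedicated",
    "storage",
)
print(json.dumps(daemon_set, indent=2))
```

Adding a node to the pool is then enough to grow the storage system by one pod, although the new member still has to be discovered and joined to the data system's own cluster.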

Summary

Migrating and building applications in the cloud requires a different approach to the architecture and design of an application’s data-related requirements. Cloud providers offer a rich set of managed data storage and analytics services, reducing the operating costs for data systems. This makes it much easier to consider running multiple and different types of data systems, using storage technologies that might be better suited for the task. The cost and scale of datastores have changed, making it easier to store large amounts of data at a price point that keeps going down as cloud providers continue to innovate and compete in these areas.
