Chapter 1. Continuous Delivery Basics

“Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.”

First principle of the Agile Manifesto

Continuous delivery (CD) is fundamentally a set of practices and disciplines in which software delivery teams produce valuable and robust software in short cycles. Care is taken to ensure that functionality is added in small increments and that the software can be reliably released at any time. This maximizes the opportunity for rapid feedback and learning. In 2010, Jez Humble and Dave Farley published their seminal book Continuous Delivery (Addison-Wesley), which collated their experiences working on software delivery projects around the world, and this publication is still the go-to reference for CD. The book contains a very valuable collection of techniques, methodologies, and advice from the perspective of both technology and organizations. One of the core recommended technical practices of CD is the creation of a build pipeline, through which any candidate change to the software being delivered is built, integrated, tested, and validated before it is determined to be ready for deployment to a production environment.

Continuous Delivery with a Java Build Pipeline

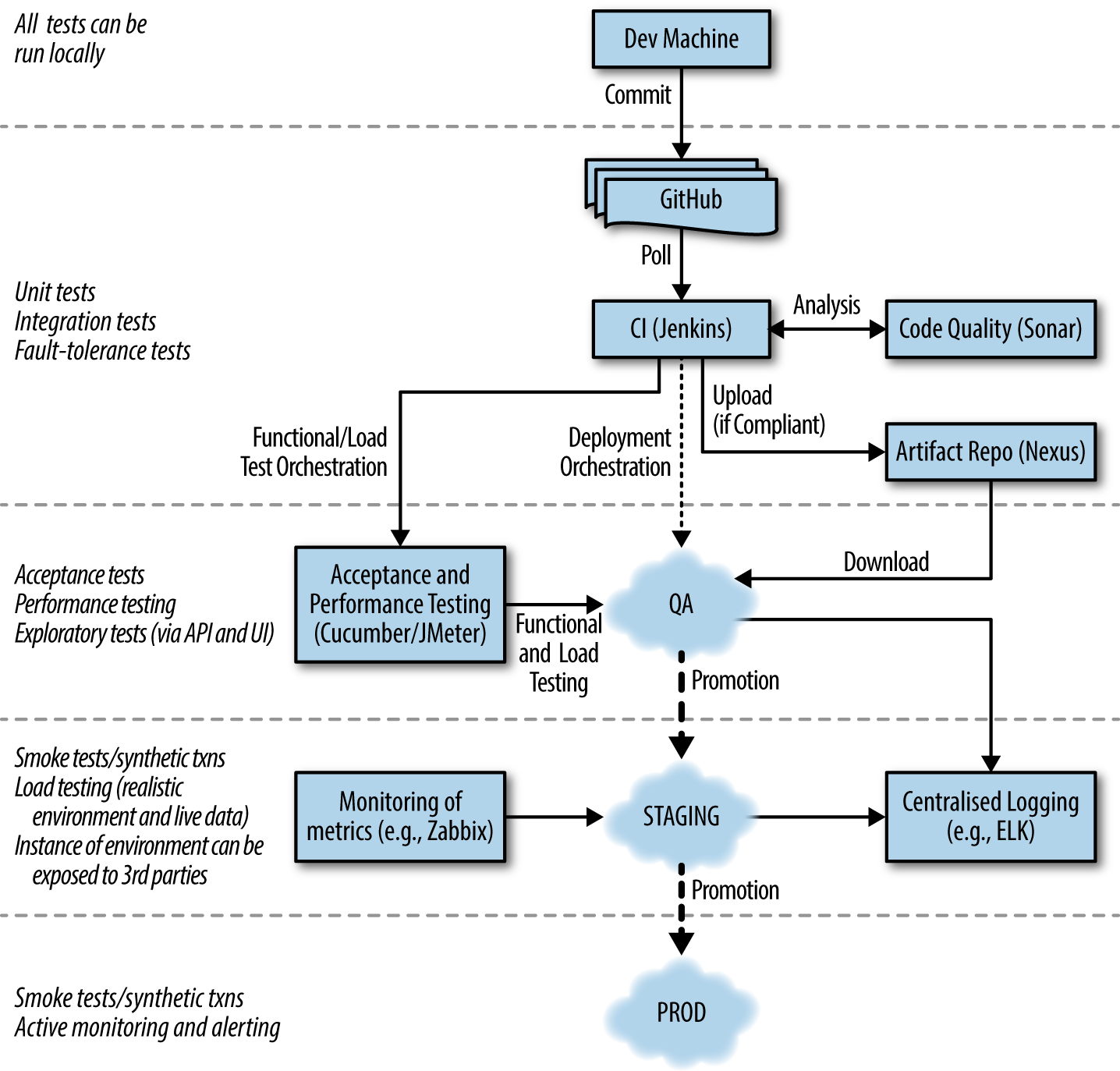

Figure 1-1 demonstrates a typical continuous delivery build pipeline for a Java-based monolithic application. The first step of the process of CD is continuous integration (CI). Code that is created on a developer’s laptop is continually committed (integrated) into a shared version control repository, and automatically begins its journey through the entire pipeline.

Figure 1-1. A typical Java application continuous delivery pipeline

The primary goal of the build pipeline is to prove that changes are production-ready. A code or configuration modification can fail at any stage of the pipeline, and this change will accordingly be rejected and not marked as ready for deployment to production.

Initially, the software application to which a code change is being applied is built and tested in isolation, and some form of code quality analysis may (should) also be applied, perhaps using a tool like SonarQube. Code that successfully passes the initial unit and component tests and the code-quality metrics moves to the right in the pipeline, and is exercised within a larger integrated context. Ultimately, code that has been fully validated emerges from the pipeline and is marked as ready for deployment into production. Some organizations automatically deploy applications that have successfully navigated the build pipeline and passed all quality checks; this is known as continuous deployment.
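The way a change moves through the pipeline and is rejected at the first failing stage can be sketched in a few lines of Java. This is only an illustrative model, not the API of any real CI tool: the class `BuildPipelineSketch`, the `Change` type, its boolean fields, and the stage names are all hypothetical stand-ins for the results that real build, test, and analysis tooling would report.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;

/** Illustrative model of a build pipeline; every name here is hypothetical. */
final class BuildPipelineSketch {

    /** A candidate change, reduced to the outcome of each check (stand-ins for real tooling). */
    static final class Change {
        final boolean builds;
        final boolean unitAndComponentTestsPass;
        final boolean meetsQualityMetrics;
        final boolean integrationTestsPass;

        Change(boolean builds, boolean unitAndComponentTestsPass,
               boolean meetsQualityMetrics, boolean integrationTestsPass) {
            this.builds = builds;
            this.unitAndComponentTestsPass = unitAndComponentTestsPass;
            this.meetsQualityMetrics = meetsQualityMetrics;
            this.integrationTestsPass = integrationTestsPass;
        }
    }

    /** Stages in the order a change moves "to the right"; LinkedHashMap keeps insertion order. */
    private static final Map<String, Predicate<Change>> STAGES = new LinkedHashMap<>();

    static {
        STAGES.put("build", c -> c.builds);
        STAGES.put("unit-and-component-tests", c -> c.unitAndComponentTestsPass);
        STAGES.put("code-quality-analysis", c -> c.meetsQualityMetrics);
        STAGES.put("integration-tests", c -> c.integrationTestsPass);
    }

    /** Returns the first stage that rejects the change, or empty if every stage passes. */
    static Optional<String> firstFailingStage(Change change) {
        for (Map.Entry<String, Predicate<Change>> stage : STAGES.entrySet()) {
            if (!stage.getValue().test(change)) {
                return Optional.of(stage.getKey());
            }
        }
        return Optional.empty();
    }

    /** A change is marked ready for deployment only if no stage rejected it. */
    static boolean isReadyForDeployment(Change change) {
        return !firstFailingStage(change).isPresent();
    }

    public static void main(String[] args) {
        Change validated = new Change(true, true, true, true);
        Change poorQuality = new Change(true, true, false, true);

        System.out.println("validated ready: " + isReadyForDeployment(validated));
        System.out.println("poorQuality rejected at: "
                + firstFailingStage(poorQuality).orElse("none"));
    }
}
```

Because evaluation stops at the first rejecting stage, a change that fails code-quality analysis never reaches the more expensive integrated tests, which is one reason the fast, isolated checks sit at the left of the pipeline.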

Maximize Feedback: Automate All the Things

The build pipeline must provide rapid feedback for the development team in order to be useful within their daily work cycles. Accordingly, automation is used extensively (with the goal of 100% automation), as this provides a reliable, repeatable, and error-free approach to processes. The following items should be automated:

- Software compilation and code-quality static analysis

- Functional testing, including unit, component, integration, and end-to-end

- Provisioning of all environments, including the integration of logging, monitoring, and alerting hooks

- Deployment of software artifacts to all environments, including production

- Data store migrations

- System testing, including nonfunctional requirements like fault tolerance, performance, and security

- Tracking and auditing of change history

The Impact of Containers on Continuous Delivery

Java developers will look at Figure 1-1 and recognize that in a typical Java build pipeline, two things are consistent regardless of the application framework (like Java EE or Spring) being used. The first is that Java applications are often packaged as Java archive (JAR) or web application archive (WAR) deployment artifacts, and the second is that these artifacts are typically stored in an artifact repository such as Nexus, Apache Archiva, or Artifactory. This changes when developing Java applications that will ultimately be deployed within container technology like Docker.

Saying that a JAR file is similar to a Docker image is not a fair comparison. Indeed, it is somewhat analogous to comparing an engine and a car; following this analogy, Java developers are much more familiar with the skills required for building engines. Due to the scope of this book, an explanation of the basics of Docker is not included, but the reader new to containerization and Docker is strongly encouraged to read Arun Gupta’s Docker for Java Developers to understand the fundamentals, and Adrian Mouat’s Using Docker for a deeper dive into the technology.

Because Docker images are run as containers within a host Linux operating system, they typically include some form of operating system (OS), from lightweight ones like Alpine Linux or Debian Jessie, to a fully functional Linux distribution like Ubuntu, CentOS, or RHEL. The exception to running an OS within a Docker container is the “scratch” base image, which can be used when deploying statically compiled binaries (e.g., when developing applications in Golang). Regardless of the OS chosen, many languages, including Java, also require the packaging of a platform runtime that must be considered (e.g., when deploying Java applications, a JVM must also be installed and running within the container).

The packaging of a Java application within a container that includes a full OS often brings more flexibility, allowing, for example, the installation of OS utilities for debugging and diagnostics. However, with great power comes great responsibility (and increased risk). Packaging an OS within our build artifacts increases a developer’s area of responsibility, such as the security attack surface. Introducing Docker into the technology stack also means that additional build artifacts now have to be managed within version control, such as the Dockerfile, which specifies how a container image should be built.

One of the core tenets of CD is testing in a production-like environment as soon as is practical, and container technology can make this easier in comparison with more traditional deployment fabrics like bare metal, OpenStack, or even cloud platforms. Docker-based orchestration frameworks that are used as a deployment fabric within a production environment, such as Docker Swarm, Kubernetes (nanokube), and Mesos (minimesos), can typically be spun up on a developer’s laptop or an inexpensive local or cloud instance.

The increase in complexity of packaging and running Java applications within containers can largely be mitigated with an improved build pipeline (which we will be looking at within this book) and with increased collaboration between developers and operators. This collaboration is one of the founding principles of the DevOps movement.
