May 2017

Beginner to intermediate

596 pages

15h 2m

English

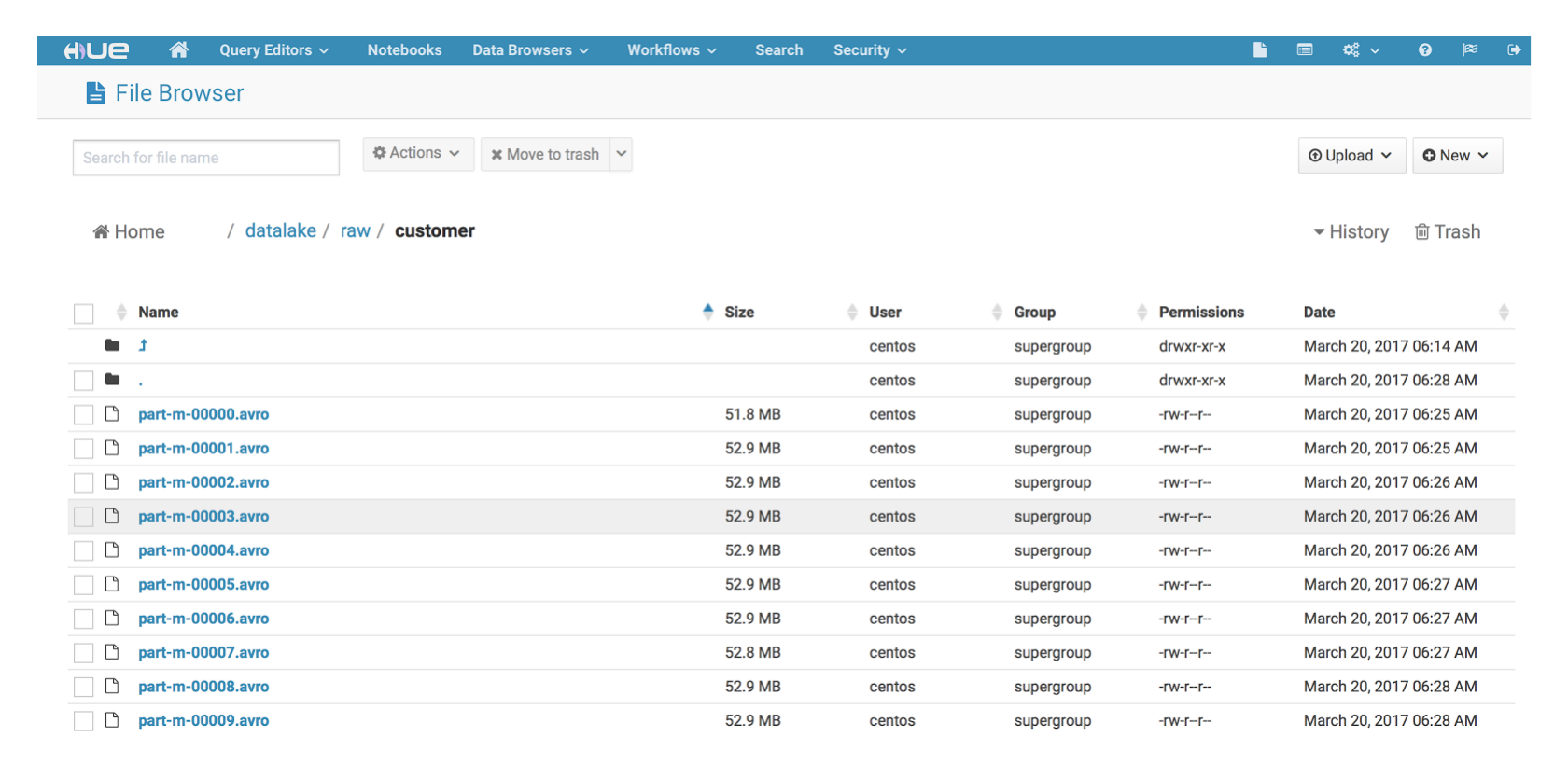

Now, let's load data from our database, one table at a time, so that we can store it in different RAW areas:

${SQOOP_HOME}/bin/sqoop import --connect "jdbc:postgresql://<DB-SERVER-ADDRESS>/sourcedb?schema=public" --table customer --num-mappers 10 --username postgres --password <DB-PASSWORD> --as-avrodatafile --append --target-dir /datalake/raw/customer
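
Since the load runs one table at a time, the same command can be generated per table with a small loop. The following is only a minimal dry-run sketch: it prints the commands instead of executing them. The `address` table, the `DB_HOST` variable, and the default values for `SQOOP_HOME` and `DB_HOST` are hypothetical placeholders rather than names taken from the source database.

```shell
#!/bin/sh
# Dry-run sketch: print one Sqoop import command per table; nothing is executed.
# Assumed placeholders: the default paths/hosts below and the "address" table.
SQOOP_HOME="${SQOOP_HOME:-/opt/sqoop}"   # assumed install location
DB_HOST="${DB_HOST:-db.example.com}"     # stands in for <DB-SERVER-ADDRESS>
RAW_BASE="/datalake/raw"                 # each table gets its own RAW directory

# Print the import command for a single table name passed as $1.
build_import_cmd() {
  table="$1"
  printf '%s\n' "${SQOOP_HOME}/bin/sqoop import --connect \"jdbc:postgresql://${DB_HOST}/sourcedb?schema=public\" --table ${table} --num-mappers 10 --username postgres --password <DB-PASSWORD> --as-avrodatafile --append --target-dir ${RAW_BASE}/${table}"
}

# "customer" comes from the text; "address" is a hypothetical second table.
for table in customer address; do
  build_import_cmd "$table"
done
```

Each printed line can be reviewed and then run by hand. Keeping the target directory derived from the table name means every table lands in its own RAW area.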