Kullback-Leibler divergence (KL divergence), also known as relative entropy, is a measure of how dissimilar two probability distributions are. It quantifies how one probability distribution p diverges from a second, expected probability distribution q.

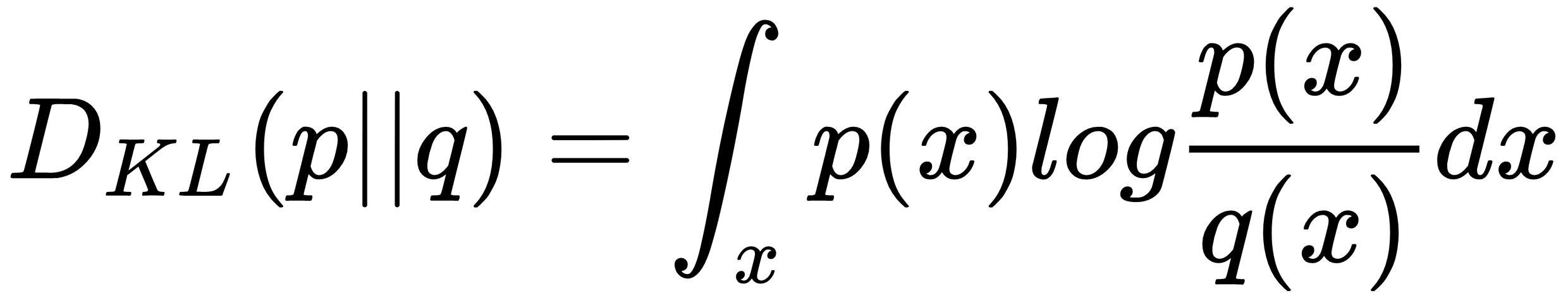

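The equation used to calculate the KL divergence between two probability distributions p(x) and q(x) is as follows:

$$D_{KL}\big(p(x) \,\|\, q(x)\big) = \int p(x) \, \log \frac{p(x)}{q(x)} \, dx$$

For discrete distributions, the integral is replaced by a sum over all values of x.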

The KL divergence is never negative. It reaches its minimum value, zero, when p(x) is equal to q(x) at every point.
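To make the definition concrete, here is a minimal sketch for the discrete case, where the divergence is the sum of p(x) log(p(x) / q(x)) over all outcomes x. The function name `kl_divergence` and the example distributions `p` and `q` are illustrative assumptions, not part of the original text. The result is in nats because the natural logarithm is used.

```python
import math


def kl_divergence(p, q):
    """Discrete KL divergence D_KL(p || q), in nats.

    p and q are sequences of probabilities over the same outcomes.
    (Hypothetical helper written for illustration.)
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")

    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            # By convention, a term with p(x) = 0 contributes nothing.
            continue
        if q_i == 0.0:
            # p assigns mass where q assigns none: divergence is infinite.
            return math.inf
        total += p_i * math.log(p_i / q_i)
    return total


# Example distributions over two outcomes (assumed values).
p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl_divergence(p, p))  # 0.0, since the distributions are identical
print(kl_divergence(p, q))  # positive, since p and q differ
print(kl_divergence(q, p))  # also positive, but a different value
```

Swapping the arguments changes the result, so the order in which the two distributions are passed matters.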

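Because KL divergence is asymmetric (the divergence of p from q generally differs from the divergence of q from p), it is not a true distance metric, and we shouldn't use it to measure the distance between two probability distributions. It is therefore ...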