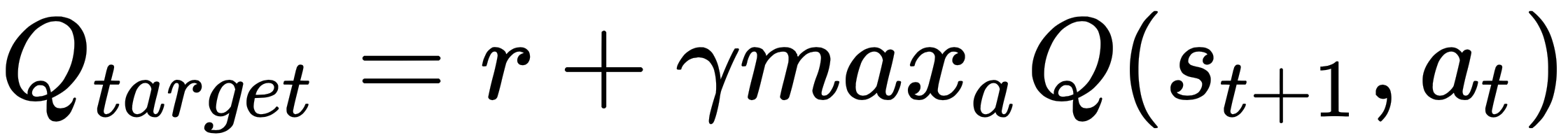

If we consider the preceding Taxi drop-off example, our neural network will consist of 500 input neurons (the state represented as a 1×500 one-hot vector) and 6 output neurons, each representing the Q-value of one action in the given state. The network thus approximates the Q-value of every action, and it should be trained so that its approximated Q-value matches the target Q-value. The target Q-value, as obtained from the Bellman equation, is as follows:
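In its standard Q-learning form (the notation is assumed here rather than taken from the text), the target for taking action $a$ in state $s$, receiving reward $r$, and arriving in state $s'$ is

$$Q_{target}(s, a) = r + \gamma \max_{a'} Q(s', a')$$

where $\gamma$ is the discount factor. The following minimal sketch shows how the pieces described above might fit together: a 500-element one-hot input, 6 Q-value outputs, the Bellman target, and the squared error between target and prediction. The single linear layer, the value of `GAMMA`, the `done` flag for terminal states, and all function names are illustrative assumptions, not the architecture or code used in this chapter.

```python
import random

N_STATES, N_ACTIONS = 500, 6   # Taxi: 500 discrete states, 6 actions
GAMMA = 0.99                   # assumed discount factor

random.seed(0)
# Hypothetical stand-in for the network: one linear layer without bias.
# weights[i][j] connects input neuron i to output neuron j.
weights = [[random.gauss(0.0, 0.01) for _ in range(N_ACTIONS)]
           for _ in range(N_STATES)]


def one_hot(state):
    """Encode a state index as a 500-element one-hot vector."""
    vec = [0.0] * N_STATES
    vec[state] = 1.0
    return vec


def q_values(state):
    """Forward pass: one predicted Q-value per action."""
    x = one_hot(state)
    return [sum(x[i] * weights[i][j] for i in range(N_STATES))
            for j in range(N_ACTIONS)]


def td_target(reward, next_state, done):
    """Bellman target: r + gamma * max over a' of Q(s', a').

    Assumption: at a terminal state (done=True) there is no future
    return, so the target is just the reward.
    """
    if done:
        return reward
    return reward + GAMMA * max(q_values(next_state))


def squared_error(state, action, reward, next_state, done):
    """Squared difference between the target Q and the predicted Q."""
    predicted = q_values(state)[action]
    target = td_target(reward, next_state, done)
    return (target - predicted) ** 2
```

Because the input is one-hot, the forward pass of this linear stand-in simply selects row `state` of `weights`; a deeper network would replace `q_values` while the target and loss computations stay the same.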

We train the neural network so that the squared error between the target Q and the predicted Q is minimized, that ...