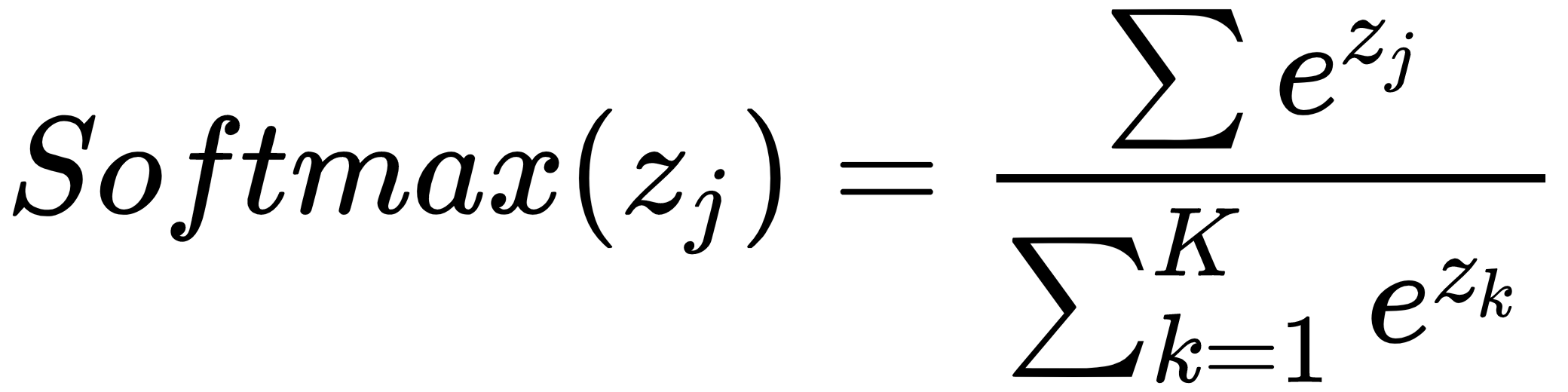

Softmax normalizes (or "squashes") a vector of arbitrary real values into a probability distribution: every output lies between 0 and 1, and the outputs sum to 1. For this reason it is commonly used in the last layer of a neural network to predict the probabilities of the possible output classes. The following is the mathematical expression for the softmax function for a vector with K values:

$$\sigma(z)_j = \frac{e^{z_j}}{\sum_{k=1}^{K} e^{z_k}}, \quad j = 1, \dots, K$$

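Here $z_j$ represents the $j$th value of the input vector and $K$ represents the number of classes. The exponential function maps every value to a positive number and widens the gap between larger and smaller values, while the denominator rescales the results so that each one lies between 0 and 1 and together they sum to 1.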
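To make the formula concrete, here is a minimal sketch in plain Python. The function name `softmax` and the example scores are illustrative assumptions, not taken from any particular library. Subtracting the maximum before exponentiating is a common numerical-stability trick that is not part of the definition above; it leaves the result unchanged because the common factor cancels between numerator and denominator.

```python
import math

def softmax(z):
    """Return the softmax of a list of K real-valued scores."""
    # Shifting by the maximum does not change the result,
    # but it keeps math.exp() from overflowing on large inputs.
    z_max = max(z)
    exps = [math.exp(z_j - z_max) for z_j in z]
    # The denominator: the sum of the exponentials over all K values.
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]  # illustrative scores for K = 3 classes
probs = softmax(scores)
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The largest score receives the largest probability, and the three outputs add up to 1 (up to floating-point rounding).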