Key words: automatic speech, recognition, robust speech recognition, speech en-

hancement, robust speech feature, stochastic matching, model combination, speaker

adaptation, microphone array.

6.1 INTRODUCTION

Ambient intelligence is the vision of a technology that will become invisibly embed-

ded in our surroundings, enabled by simple and effortless interactions, context sen-

sitive, and adaptive to users [1]. Automatic speech recognition is a core component

that allows high-quality information access for ambient intelligence. However, it is a

difficult problem and one with a long history that began with initial papers appear-

ing in the 1950s [2, 3]. Thanks to the significant progress made in recent years in

this area [4, 5], speech recognitio n technology, once confined to research labora-

tories, is now applied to some real-world applications, and a number of commercial

speech recognition products (from Nuance, IBM, Microsoft, Nokia, etc.) are on the

market. For example, with automatic voice mail transcription by speech recognition,

a user can have a quick view of her voice mail without having to listen to it. Other

applications include voice dialing on embedded speech recognition systems.

The main factors that have made speech recognition possible are advance s in dig-

ital signal processing (DSP) and stochastic modeling algorithms. Signal processing

techniques are important for extracting reliable acoustic features from the speech

signal, and stochastic modeling algorithms are useful for representing speech utter-

ances in the form of efficient models, such as hidden Markov models (HMMs), which

simplify the speech recognition task. Other factors responsible for the commercial

success of speech recognition technology include the availability of fast processor s

(in the form of DSP chips) and high-density memories at relatively low cost.

With the current state of the art in speech recognition technology, it is relatively

easy to accomplish complex speech recognition tasks reasonably well in controlled

laboratory environments. For example, it is now possible to achieve less than a 0.4%

string error rate in a speaker-independent digit recognition task [6]. Even continuous

speech from many speakers and from a vocabulary of 5000 words can be recognized

with a word error rate below 4% [7]. This high level of performance is achievable

only when the training and the test data match. When there is a mismatch between

training and test data, performance degrades drastically.

Mismatch between training and test sets may occur because of changes in acoustic

environments (background, channel mismatch, etc.), speakers, task domains, speaking

styles, and the like [8]. Each of these sources of mismatch can cause severe distortion

in recognition performance for ambient intelligence. For example, a continuous speech

recognition system with a 5000-word vocabulary raised its word error rate from 15%

in clean conditions to 69% in 10-dB to 20-dB signal-to-noise ratio (SNR) conditions

[9, 10]. Similar degradations in recognition performance due to channel mismatch

are observed. The recognition accuracy of the SPHINX speech recognition system

on a speaker-independent alphanumeric task dropped from 85% to 20% correct

when the close-talking Sennheiser microphone used in training was replaced by the

136 CHAPTER 6 Robust Speech Recognition Under Noisy Ambient Conditions

omnidirectional Crown desktop microphone [11]. Similarly, when a digital recogni-

tion system is trained for a particular speaker, its accuracy can be easily 100%, but its

performance degrades to as low as 50% when it is tested on a new speaker.

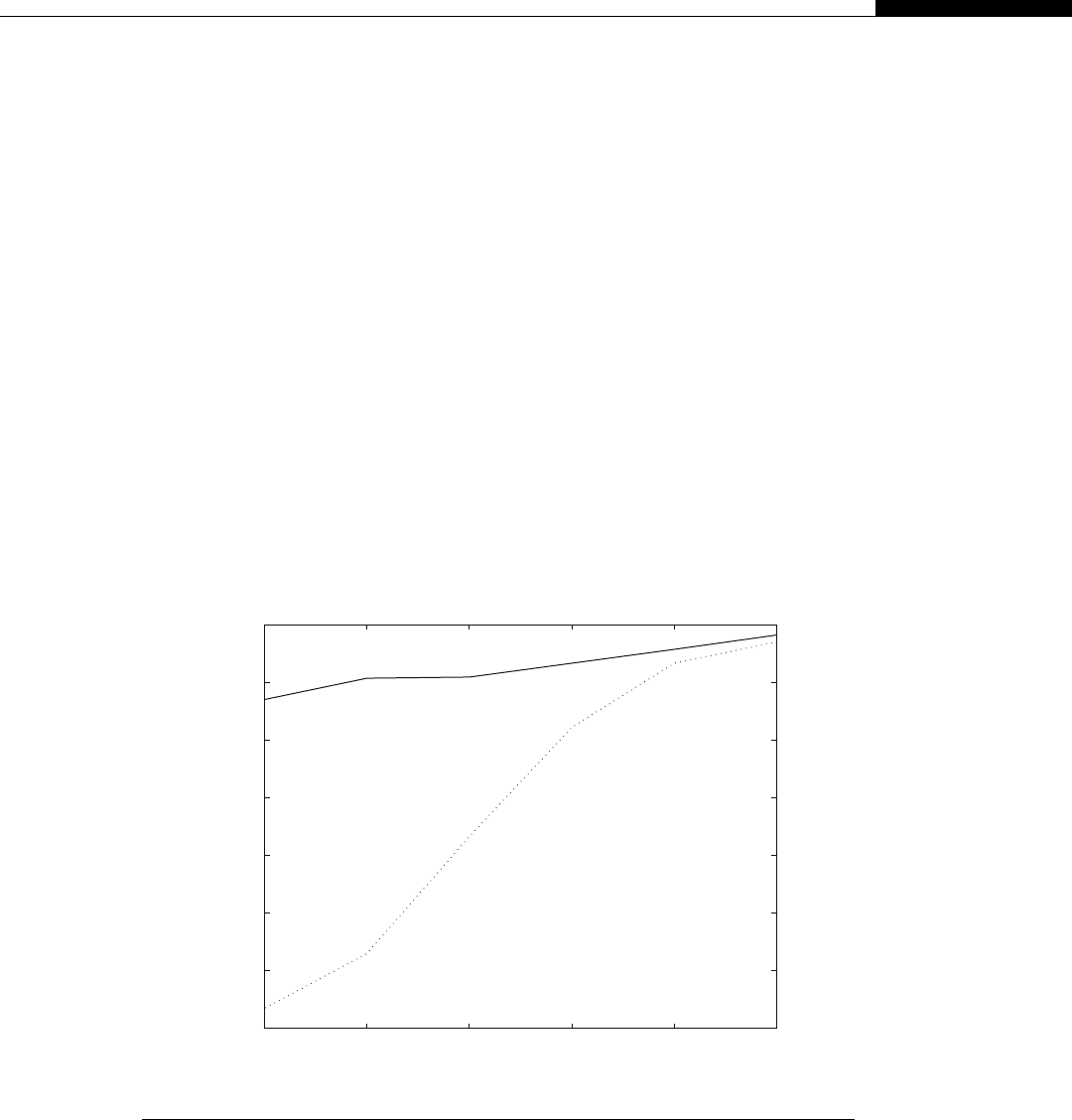

To understand the effect of mismatch between training and test conditions, we

show in Figure 6.1 the performance of a speaker-dependent, isolated-word recogni-

tion system on speech corrupted by additive white noise. The recognition system

uses a nine-word English e-set alphabet vocabulary where each word is represented

by a single-mixture continuous Gaussian density HMM with five states. The figure

shows recognition accuracy as a function of the SNR of the test speech under

(1) mismatched conditions where the recognition system is trained on clean speech

and tested on noisy speech, and (2) matched conditions where the training and the

test speech data have the same SNR.

It can be seen from Figure 6.1 that the additive noise causes a drastic degradation

in recognition performance under the mismatched conditions; with the matched

conditions, however, the degradation is moderate and graceful. It may be noted here

that if the SNR becomes too low (such as 10 dB), the result is very poor recogni-

tion performance even when the system operates under matched noise conditions.

This is because the signal is completely swamped by noise and no useful information

can be extracted from it during training or in testing.

10 15 20 25 30 35

30

40

50

60

70

80

90

100

Recognition Accuracy (%)

SNR (dB) of Test Speech

FIGURE 6.1

Effect of additive white noise on speech recognition performance under matched and mismatched

conditions: training with clean speech (dotted line); training and testing with same-SNR speech

(solid line).

6.1 Introduction 137

Get Human-Centric Interfaces for Ambient Intelligence now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.