Chapter 4. Data Management, Data Engineering, and Data Science Overview

Data Management

Data management refers to the process by which data is effectively acquired, stored, processed, and applied so that it delivers its full value. In terms of business, data management includes metadata management, data quality management, and data security management.

Metadata Management

Metadata can help us to find and use data, and it constitutes the basis of data management.

Normally, metadata is divided into the following three types:

- Technical metadata refers to a description of a dataset from a technical perspective, mainly form and structure, including data type (such as text, JSON, and Avro) and data structure (such as field and field type).

- Operational metadata refers to a description of a dataset from the operation perspective, mainly data lineage and data summaries, including data sources, number of data records, and statistical distribution of numerical values for each field.

- Business metadata refers to a description of a dataset from the business point of view, mainly the significance of a dataset for business users, including business names, business descriptions, business labels, and data-masking strategies.

Metadata management, as a whole, refers to the generation, monitoring, enrichment, deletion, and query of metadata.
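
As a rough illustration of the three metadata types, the following Python sketch keeps them in one record and derives the operational part from the data itself. The class name `DatasetMetadata`, the `summarize` helper, and all field names and sample values are assumptions made for this example; they do not come from any particular metadata tool.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class DatasetMetadata:
    # Technical metadata: form and structure of the dataset
    data_format: str
    fields: dict  # field name -> field type
    # Operational metadata: lineage and data summaries
    source: str = ""
    record_count: int = 0
    field_stats: dict = field(default_factory=dict)
    # Business metadata: meaning of the dataset for business users
    business_name: str = ""
    labels: list = field(default_factory=list)
    masking_strategy: dict = field(default_factory=dict)


def summarize(records, numeric_fields):
    """Generate operational metadata: record count and basic per-field statistics."""
    stats = {}
    for name in numeric_fields:
        values = [r[name] for r in records if r.get(name) is not None]
        if values:
            stats[name] = {"min": min(values), "max": max(values), "mean": mean(values)}
    return len(records), stats


# Hypothetical sample data
records = [
    {"user_id": "u1", "age": 31},
    {"user_id": "u2", "age": 40},
    {"user_id": "u3", "age": None},
]
count, stats = summarize(records, ["age"])
meta = DatasetMetadata(
    data_format="JSON",
    fields={"user_id": "string", "age": "int"},
    source="crm_export",  # hypothetical source name
    record_count=count,
    field_stats=stats,
    business_name="Registered users",
    labels=["customer"],
    masking_strategy={"user_id": "tokenize"},
)
print(meta.record_count, meta.field_stats)
```

Generating the operational part automatically, as `summarize` does here, is one way to keep metadata in step with the data; business metadata usually still has to be entered by people who know the dataset.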

Data Quality Management

Data quality is a description of whether the dataset is good or bad. Generally, data quality should be assessed for the following characteristics:

- Integrity refers to the completeness of data or metadata, including whether any field or any field content is missing (e.g., the home address contains only the street name, or the landline number has no area code).

- Timeliness, or “freshness,” refers to whether the delay between data generation and data availability is acceptable and whether updates are sufficiently frequent. For example, server status monitoring needs real-time, high-frequency data updates so that an alarm can be raised and handled promptly when a problem occurs, before it becomes more serious. By contrast, to track the number of new mobile app users, Daily Active Users (DAUs) generally need to be updated only once a day; the increase in new users over shorter intervals is rarely studied.

- Accuracy refers to whether data is erroneous or abnormal, for example, an incorrect phone number, an ID number with the wrong number of digits, or an email address in the wrong format.

- Consistency involves both format (e.g., whether a telephone number conforms to the MSISDN specification) and logic across datasets. A dataset may look fine on its own, yet problems appear once two datasets are linked. For example, the internal systems of an enterprise may hold inconsistent gender data: the same customer may be recorded as male in the CRM system but female in the marketing system. In such cases, the data should be investigated further and its descriptions adjusted so that data users are not confused.

Data quality management involves not only defining and monitoring indicators for data integrity, timeliness, accuracy, and consistency but also improving data quality by means of data organization.
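
The four characteristics above can each be turned into a simple indicator. The sketch below is one possible way to do so, assuming records are Python dictionaries; the function names, field names, the email pattern, and the sample data are all assumptions for illustration, and real checks would be considerably stricter.

```python
import re
from datetime import datetime, timedelta

# Deliberately loose email pattern, used only for this illustration
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def integrity(records, required):
    """Share of records in which every required field is present and non-empty."""
    ok = sum(all(r.get(f) not in (None, "") for f in required) for r in records)
    return ok / len(records)


def timeliness(records, now, max_delay):
    """Share of records generated no more than max_delay before `now`."""
    ok = sum(now - r["generated_at"] <= max_delay for r in records)
    return ok / len(records)


def accuracy(records):
    """Share of records whose email field has a plausible format."""
    ok = sum(bool(EMAIL_RE.match(r.get("email") or "")) for r in records)
    return ok / len(records)


def consistency(left, right, key, field):
    """Share of records present in both datasets whose field values agree."""
    other = {r[key]: r[field] for r in right}
    shared = [r for r in left if r[key] in other]
    ok = sum(r[field] == other[r[key]] for r in shared)
    return ok / len(shared) if shared else 1.0


# Hypothetical sample data
now = datetime(2024, 1, 1, 12, 0)
crm = [
    {"id": 1, "email": "a@example.com", "gender": "male", "generated_at": now - timedelta(minutes=5)},
    {"id": 2, "email": "not-an-email", "gender": "female", "generated_at": now - timedelta(hours=3)},
    {"id": 3, "email": "", "gender": "male", "generated_at": now - timedelta(minutes=30)},
    {"id": 4, "email": "d@example.com", "gender": "female", "generated_at": now - timedelta(minutes=1)},
]
marketing = [{"id": 1, "gender": "female"}, {"id": 2, "gender": "female"}]

print("integrity  ", integrity(crm, ["email", "gender"]))
print("timeliness ", timeliness(crm, now, timedelta(hours=1)))
print("accuracy   ", accuracy(crm))
print("consistency", consistency(crm, marketing, "id", "gender"))
```

Expressing each indicator as a ratio between 0 and 1 makes it easy to monitor over time and to set alert thresholds per dataset.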

Sometimes data quality problems are not conspicuous and cannot be judged from statistical figures alone; in these cases, domain knowledge is required. For example, when the Tencent data team performed a statistical analysis of SVIP QQ users, it discovered that 40-year-olds formed the largest age group, far larger than the groups aged 39 and 41. One guess was that this group had more occasion to communicate online with their children, or more free time. However, this explanation did not align with domain knowledge and was not convincing. Further analysis revealed an inordinate number of users with a birthdate of January 1, 1970, the default birthdate set by the system, and this accounted for the high number of 40-year-old users (the study was conducted in 2010). Therefore, data operators should have a deep understanding of their data and acquire domain knowledge that others lack.
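
A check of the following kind might have surfaced the default-birthdate problem earlier. It is only a sketch under simple assumptions: the function name `suspicious_defaults`, the ratio of 5, and the sample birthdates are invented for illustration, and a flagged value still needs domain knowledge to interpret.

```python
from collections import Counter
from datetime import date


def suspicious_defaults(values, ratio=5.0):
    """Return values whose frequency exceeds `ratio` times a middle (median-like) frequency."""
    counts = Counter(values)
    ordered = sorted(counts.values())
    middle = ordered[len(ordered) // 2]
    return {value: n for value, n in counts.items() if n > ratio * middle}


# Hypothetical sample: one birthdate is heavily over-represented
birthdates = [date(1970, 1, 1)] * 50
birthdates += [date(1969, 6, 15)] * 3 + [date(1970, 3, 2)] * 2 + [date(1971, 11, 30)] * 3

flagged = suspicious_defaults(birthdates)
print(flagged)
```

Counting exact values rather than ages is what exposes the issue here: grouped by age, the spike looks like a real demographic effect, while grouped by birthdate it points to a single system default.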

Data Security Management

Data security mainly refers to the protection of data access, use, and release processes, which includes the following:

- Data access control refers to the control of data access authority so that data can be accessed only by personnel with proper authorization.

- Data audit refers to the recording of all data operations in logs or reports so that they can be traced if needed.

- Data masking refers to the deletion of some data according to preset rules (especially the parts concerning privacy, such as personally identifiable data, personal private data, and sensitive business data) so as to protect data.

- Data tokenization refers to the substitution of some data content according to preset rules (especially sensitive data content) so as to protect data.

Data security management, therefore, entails the addition, deletion, modification, and monitoring of data, which aims to enable users to access data in a convenient and efficient manner while ensuring data security.
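
To make the difference between masking and tokenization concrete, here is a minimal sketch. Masking removes part of the value for good, while tokenization substitutes a stable stand-in that still lets records be matched. The function names, the rule of keeping the last four characters, and the demo key are assumptions for illustration only; a production system would use managed keys or a token vault.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical key for the example


def mask_phone(phone, visible=4):
    """Masking: blank out everything except the last `visible` characters."""
    return "*" * (len(phone) - visible) + phone[-visible:]


def tokenize(value, key=SECRET_KEY):
    """Tokenization: substitute the value with a deterministic keyed token (HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


record = {"name": "Alice", "phone": "13800001234"}
protected = {
    "name": tokenize(record["name"]),
    "phone": mask_phone(record["phone"]),
}
print(protected)
```

Because the token is deterministic for a given key, two datasets tokenized with the same key can still be joined on the tokenized field without exposing the original value.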

Data Engineering

Most traditional enterprises are challenged by poor implementation of data acquisition, organization, analytics, and action procedures when they transform themselves for the smart era. It is therefore urgent that enterprises build end-to-end data engineering capacity across these four procedures, so as to ensure a data- and procedure-driven business structure, rational data, and a closed-loop approach, and to turn deeper insight into the commercial value of data. The search engine is the simplest example. Once a search engine captures users’ interactive behavior as data, it can optimize the presentation of search results according to how long users stay, how many times they click, and other conditions, improving the search experience and attracting more users. Those users in turn generate more data for optimization. This is a closed loop of data, which brings about continuous business optimization.

In the smart data era, due to the complexity of data and data application contexts, data engineering needs to integrate both AI and human wisdom to maximize its effectiveness. For example, a search engine aims to solve the issue of information ingestion after the surge in the volume of information on the internet. As tens of millions of web pages cannot be handled with manually classified URL directories, algorithms must be used to index information and sort search results according to users’ characteristics. To adapt to the increasingly complex web environment, Google has gradually improved its search ranking intelligence, from the earliest PageRank algorithm, to Hummingbird in 2013, to the addition in 2015 of the machine learning algorithm RankBrain as the third-most important sorting signal. The Google search engine has over 200 sorting signals, and the variant signals or subsignals may number in the tens of thousands and change continuously. Normally, new sorting signals need to be discovered, analyzed, and evaluated by humans in order to determine their effects on the sorting results. Thus, even with powerful algorithms and massive data, human wisdom remains necessary and plays a key role in efficient data engineering.

Implementation Flow of Data Engineering

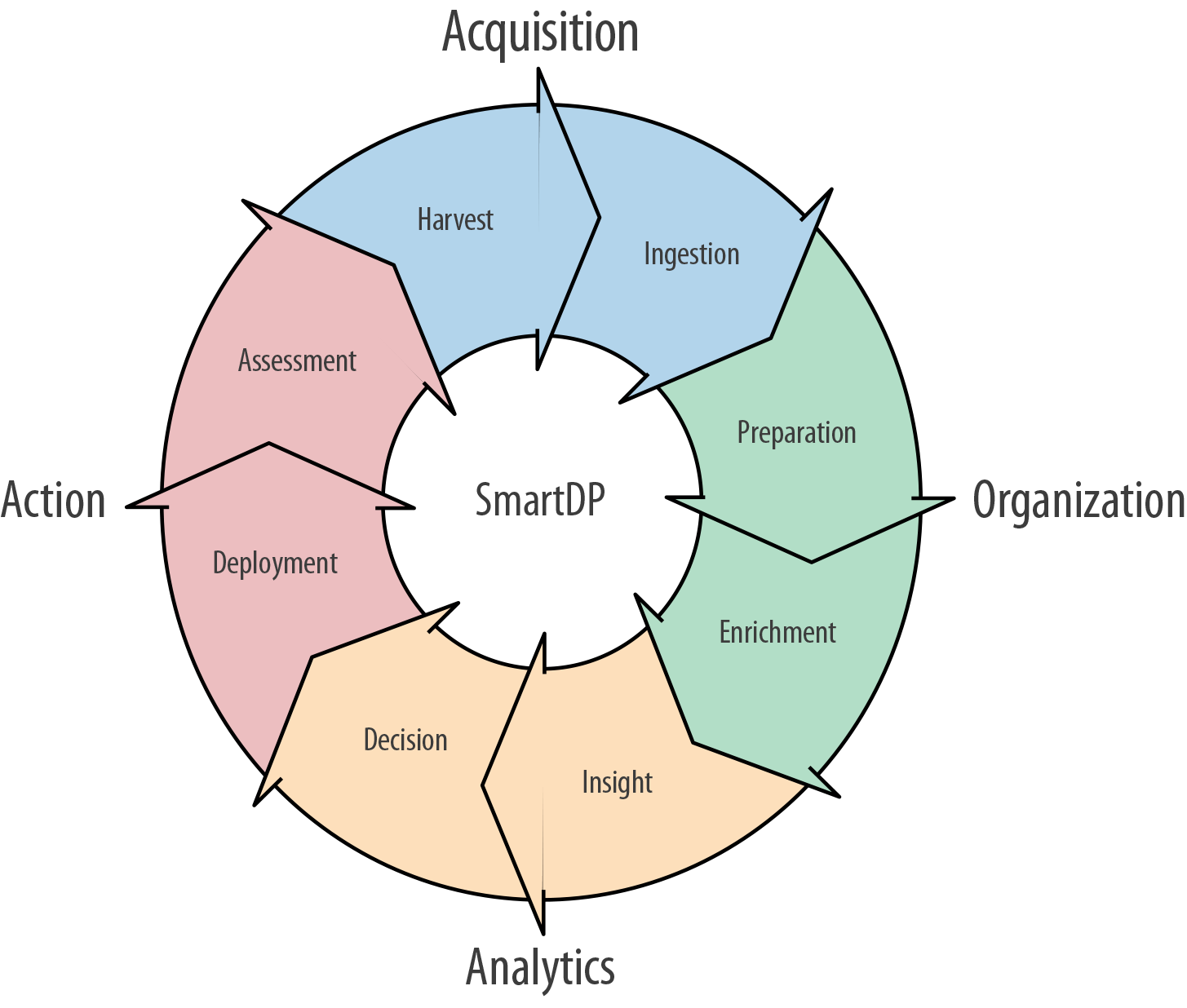

In terms of implementation, data engineering normally includes data acquisition, organization, analytics, and action, which form a closed loop of data (see Figure 4-1).

Figure 4-1. Closed loop of data processing (figure courtesy of Wenfeng Xiao)

Data Acquisition

Data acquisition focuses on generated data and captures data into the system for processing. It is divided into two stages—data harvest and data ingestion.

Different data application contexts have different demands for the latency of the data acquisition process. There are three main modes:

- Real time

  Data should be processed in real time, with as little delay as possible. Demand for real-time processing normally arises in trading-related contexts. For example:

  - For online trade fraud prevention, the data of trading parties should be evaluated by an anti-fraud model as fast as possible, so as to judge whether there is any fraud and promptly report any deviant behavior to the authorities.

  - The commodities of an ecommerce website should be recommended in real time according to the historical data of clients and their current web page browsing behavior.

  - Computer manufacturers should, according to their sales conditions, make real-time adjustments to inventories, production plans, and parts supply orders.

  - The manufacturing industry should, based on sensor data, make real-time judgments of production line risks, promptly conduct troubleshooting, and guarantee production.

- Micro batch

  Data should be processed periodically, at intervals of minutes. Real-time processing is not necessary, and some delay is allowed. For example, the effect of an advertisement should be monitored every five minutes so as to determine a future release strategy. The data therefore needs to be aggregated and processed together every five minutes.

- Mega batch

  Data should be processed periodically at intervals of several hours or more. There is no high volume of data to ingest in real time, and a long processing delay is acceptable. For example, some web pages are not frequently updated, so their content may be crawled and updated once a day.
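
The micro-batch mode, using the five-minute advertisement monitoring example, could be sketched as follows. Events are grouped by the start of the five-minute window they fall into, and each window is then processed as one unit. The function names and sample events are assumptions for illustration; a real pipeline would typically rely on a stream or batch processing framework for this.

```python
from collections import defaultdict
from datetime import datetime


def window_start(ts, minutes=5):
    """Round a timestamp down to the start of its window (minutes must divide 60)."""
    return ts.replace(minute=ts.minute - ts.minute % minutes, second=0, microsecond=0)


def micro_batches(events, minutes=5):
    """Group (timestamp, payload) events into fixed windows, ordered by window start."""
    batches = defaultdict(list)
    for ts, payload in events:
        batches[window_start(ts, minutes)].append(payload)
    return dict(sorted(batches.items()))


# Hypothetical advertisement events
events = [
    (datetime(2024, 1, 1, 10, 1), "click"),
    (datetime(2024, 1, 1, 10, 4), "impression"),
    (datetime(2024, 1, 1, 10, 5), "click"),
    (datetime(2024, 1, 1, 10, 9, 59), "impression"),
    (datetime(2024, 1, 1, 10, 12), "click"),
]
batches = micro_batches(events)
for start, payloads in batches.items():
    print(start.strftime("%H:%M"), "events:", len(payloads), "clicks:", payloads.count("click"))
```

Changing the `minutes` argument moves the same code along the latency scale: a smaller window approaches real-time processing, and a much larger one behaves like a mega batch.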

Streaming data is not necessarily acquired in real time. It may also be acquired in batches, depending on the application context. For example, the click event stream of a mobile app is uploaded continuously. However, if we only wish to count the new or retained users for the current day, we only need to collect all of that day’s click-stream logs in one file and upload it to the system as a mega batch for analytics.
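
A daily count of this kind needs nothing more than the day's collected events and two sets of user IDs. The sketch below assumes a simplified definition (a "new" user has never been seen before, and a "retained" user was also active the previous day); the function name, these definitions, and the sample data are assumptions for illustration.

```python
def daily_stats(events_today, active_yesterday, known_users):
    """Compute daily active, new, and retained user counts from one day's events."""
    active = {user for user, _ in events_today}
    new = active - known_users            # never seen before today
    retained = active & active_yesterday  # active on both days
    return {"active": len(active), "new": len(new), "retained": len(retained)}


# Hypothetical click-stream log for one day: (user_id, event)
events_today = [("u1", "open"), ("u2", "click"), ("u1", "click"), ("u4", "open")]
stats_today = daily_stats(
    events_today,
    active_yesterday={"u1", "u3"},
    known_users={"u1", "u2", "u3"},
)
print(stats_today)
```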

Data harvest

Data harvest refers to a process by which a source generates data. It concerns what data is acquired. For instance, the native SDK of the iOS platform or an offline WiFi probe harvests data through data sensing units.

Normally, data is acquired from two types of data sources:

- Stream

  Streaming data is generated continuously and has no boundary. Common streams include video streams, click event streams on web pages, mobile phone sensor data streams, and so on.

- Batch

  Batch data is generated periodically at a certain time interval and has a boundary. Common batch data includes server log files, video files, and so on.

Data ingestion

Data ingestion refers to a process by which the data acquired from data sources is brought into your system, so the system can start acting upon it. It concerns how to acquire data.

Data ingestion typically involves three operations, namely discover, connect, and sync. Generally, values are not modified in any way during ingestion, to avoid information loss.

Discover refers to a process by which accessible data sources are searched for in the corporate environment. Active scanning, connection, and metadata ingestion help to automate the process and reduce the workload of data ingestion.

Connect refers to a process by which the data sources that are confirmed to exist are connected. Once connected, the system may directly access data from a data source. For example, building a connection to a MySQL database actually involves configuring the connection string of the data source, including IP address, username and password, database name, and so on.

Sync refers to a process by which data is copied to a controllable system. Sync is not always necessary once a connection is established. For example, in an environment with strict data security requirements, some data sources may only be connected to; copying their data is not allowed.
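
The connect and sync operations might be modeled as in the sketch below. The `DataSource` class, the `allow_sync` flag, the URL-style connection string layout, and the stand-in reader function are all assumptions for illustration; the exact connection string format depends on the database driver actually used.

```python
from dataclasses import dataclass
from urllib.parse import quote


@dataclass
class DataSource:
    host: str
    port: int
    username: str
    password: str
    database: str
    allow_sync: bool = True  # False: the source may be queried but not copied

    def connection_string(self):
        """Build an illustrative URL-style connection string with escaped credentials."""
        user = quote(self.username, safe="")
        pwd = quote(self.password, safe="")
        return f"mysql://{user}:{pwd}@{self.host}:{self.port}/{self.database}"


def sync(source, read_rows, store):
    """Copy rows into a local store, but only if the source's policy permits it."""
    if not source.allow_sync:
        return 0
    rows = list(read_rows(source))
    store.extend(rows)
    return len(rows)


def fake_reader(source):
    """Stand-in for a real database query; returns fixed rows."""
    return [{"id": 1}, {"id": 2}]


source = DataSource("10.0.0.5", 3306, "etl", "p@ss/word", "sales")
restricted = DataSource("10.0.0.9", 3306, "audit", "secret", "payroll", allow_sync=False)

local_store = []
copied = sync(source, fake_reader, local_store)
skipped = sync(restricted, fake_reader, local_store)
print(source.connection_string(), copied, skipped)
```

Keeping the sync permission on the data source itself means the security policy travels with the connection details, so a restricted source cannot be copied by accident.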

Data Organization

Data organization refers to a process to make data more available through various operations. It is divided into two stages, namely data preparation and data enrichment.

Data preparation

Data preparation refers to a process by which data quality is improved using tools. In general cases, data integrity, timeliness, accuracy, and consistency are regarded as indicators for improvement so as to make preparations for further analytics.

Common data preparation operations include:

- Supplement and update of metadata

- Building and presentation of data catalogs

- Data munging, such as replacement, duplicate removal, partition, and combination

- Data correlation

- Checking of consistency in terms of format and meaning

- Application of data security strategies
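
The four munging operations named in the list (replacement, duplicate removal, partition, and combination) can be sketched on plain lists of dictionaries. The function names and sample records are assumptions for illustration; in practice a data-frame library or SQL would usually be used.

```python
def replace_values(rows, field, mapping):
    """Replacement: normalize the values of one field using a lookup table."""
    return [{**r, field: mapping.get(r[field], r[field])} for r in rows]


def deduplicate(rows, key):
    """Duplicate removal: keep the first record seen for each key."""
    seen, out = set(), []
    for r in rows:
        if r[key] not in seen:
            seen.add(r[key])
            out.append(r)
    return out


def partition(rows, field):
    """Partition: split records into groups by the value of one field."""
    parts = {}
    for r in rows:
        parts.setdefault(r[field], []).append(r)
    return parts


def combine(left, right, key):
    """Combination: join two datasets on a shared key (inner join)."""
    lookup = {r[key]: r for r in right}
    return [{**l, **lookup[l[key]]} for l in left if l[key] in lookup]


# Hypothetical sample data
users = [
    {"id": 1, "gender": "M", "city": "Beijing"},
    {"id": 2, "gender": "female", "city": "Shanghai"},
    {"id": 1, "gender": "M", "city": "Beijing"},
]
orders = [{"id": 1, "total": 30}, {"id": 2, "total": 12}]

clean = deduplicate(replace_values(users, "gender", {"M": "male", "F": "female"}), "id")
by_city = partition(clean, "city")
joined = combine(clean, orders, "id")
print(clean, list(by_city), joined, sep="\n")
```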

Data enrichment

In contrast to data preparation, data enrichment is more context-dependent. It can be understood as a higher-level data preparation process based on context.

Common data enrichment operations include:

- Data labels

  Labels are highly contextual. They may have different meanings in different contexts, so they should be discussed in a specific context. For example, gender labels have different meanings in contexts such as ecommerce, fundamental demography, and social networking.

- Data modeling

  This targets the algorithm models of a business, for example, a graph model built in order to screen the age group of econnoisseurs in the internet finance field.

Data Analytics

Data analytics refers to a process by which data is searched, explored, or displayed in a visualized manner based on specific problems so as to form insight and finally make decisions. Data analytics is the key step in converting data into action and is also the most complicated part of data engineering.

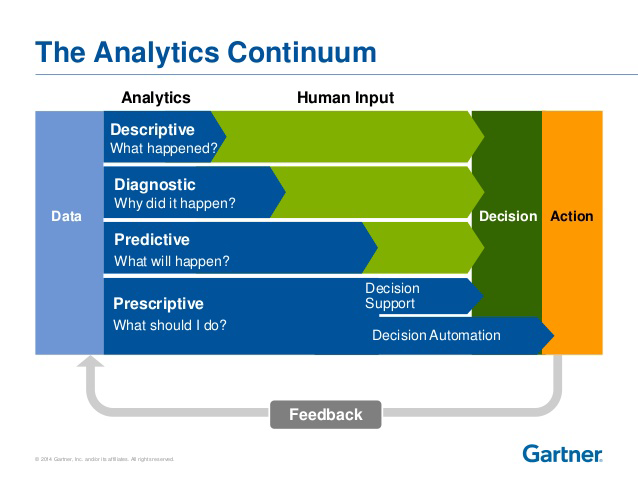

Data analytics is usually completed by data analysts with specialized knowledge. Figure 4-2 highlights some key aspects of analytics that are utilized to obtain decision-making support.

Figure 4-2. Data analytics maturity model (source: Gartner)

Each process from insight to decision is based on the results of this analysis. Nevertheless, each level of analytics means greater challenges than that of the previous one. If the system fails to complete such analytics on its own, the intervention of human wisdom is required. A data analytics system should continuously learn from human wisdom and enrich its data dimensions and AI so as to solve these problems and reduce the cost of human involvement to the largest extent. For example, in the currently popular internet finance field, big data and AI algorithms can be used to evaluate user credit quickly and determine the limit of a personal loan, almost without human intervention. And the cost of such solutions is far lower than that of traditional banks.

Data analytics is divided into two stages, namely data insight and data decisions.

Data insight

Data insight refers to a process by which data is understood through data analytics. Data insights are usually presented in the form of documents, figures, charts, or other visualizations.

Data insight can be divided into the following types depending on the time delay from data ingestion to data insight:

- Real time

  Applicable to contexts where data insight needs to be obtained in real time. Server system monitoring is a simple example: an alarm and a response plan should be triggered immediately when key indicators (including disk and network) exceed the designated thresholds. In complicated contexts such as P2P fraud prevention, the possibility of fraud should be judged from contextual data (the borrower’s data and characteristics) and third-party data (the borrower’s credit data), and an alarm should be triggered based on that judgment.

- Interactive

  Applicable to contexts where insight needs to be obtained interactively. For example, a business expert studying the reason for a recent fall in the sales volume of a particular product cannot get the answer from a single query. Each query yields a clue that determines the target of the next query. Interactive insight therefore requires query responses in near real time.

- Batch

  Applicable to contexts where insight is produced once per time interval. For example, behavior statistics for mobile app users (such as new, daily active, and retained users) generally have no real-time requirements.
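
The simple real-time case, server monitoring with threshold alarms, might look like the sketch below. The metric names and threshold values are hypothetical, and a real monitoring system would also handle alert routing, deduplication, and recovery.

```python
# Hypothetical thresholds for key indicators
THRESHOLDS = {"disk_used_pct": 90.0, "network_mbps": 800.0}


def check_metrics(sample, thresholds=THRESHOLDS):
    """Return one alarm message for each indicator that exceeds its threshold."""
    return [
        f"{name}={value} exceeds {thresholds[name]}"
        for name, value in sample.items()
        if name in thresholds and value > thresholds[name]
    ]


alarms = check_metrics({"disk_used_pct": 95.0, "network_mbps": 120.0})
for message in alarms:
    print("ALARM:", message)
```

The check itself is trivial; what makes the context real time is that it must run on every incoming sample rather than on a periodic batch.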

The depth and completeness of data insight results greatly affect the quality of decisions.

Data decisions

A decision is a process by which an action plan is formulated based on the result of data insight. In the case of sufficient and deep data insight, it is much easier to make a decision.

Action

An action is a process by which the decision generated in the analytics stage is put into use and the effect is assessed. It includes two stages, namely deployment and assessment.

Deployment

Deployment is a process by which action strategies are implemented. Simple deployment includes presenting the visualized result or reaching users during the marketing process. Typical deployment, however, is more complicated. Usually, it relates to shifting the data strategy from natural accumulation to active acquisition. The data acquisition stage involves deployment through construction, including offline construction of IoT devices (especially beacon devices, including iBeacon and Eddystone) and WiFi probe devices as well as improvement of business operation flow so as to obtain specific data points (such as capture of Shake, QR code scanning, and WiFi connection events).

Assessment

Assessment is a process by which the action result is measured; it aims to provide a basis for optimization of all data engineering.

In practice, although problems with the action result appear to derive from the decision, they more often reflect data quality. Data quality may relate to every stage of data engineering, including harvest, ingestion, preparation, enrichment, insight, decision, and action. Thus, it is necessary to track the processing in each link, which helps to locate the root causes of problems.

Sometimes, for the sake of fairness and objectivity, enterprises employ third-party service providers to make the assessment; in this situation, all participants in the action should reach a consensus on the assessment criteria. For example, an app advertiser finds through analytics that users in a particular region have large potential value and thus hopes to advertise to this region in a targeted way. In the marketing campaign, the advertiser employs a third-party monitoring service to follow up on the marketing effect. The results indicate that quite a lot of activated users are not within the region. Does this mean the release channel took an erroneous action? Further analysis shows that the app advertiser, the channel, and the third-party monitoring service provider use inconsistent standards for judging the location of the audience. In the mobile field, because the network environment and mobile phone structure are complex (applications and sensors may both be affected), enterprises should pay particular attention to the calibration of location data, especially when looking at deviations in assessments.

Roles of the Data Engineering Team

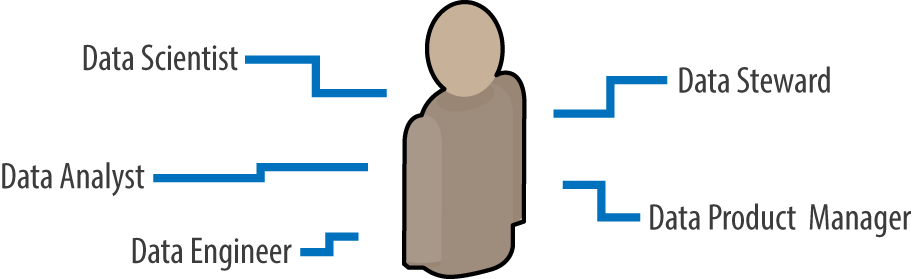

Unlike traditional enterprises, data-driven enterprises should have their own specialized data engineering teams (see Figure 4-3).

Figure 4-3. Roles of the data engineering team (figure courtesy of Wenfeng Xiao)

A data engineering team involves multiple data-related roles. All members of the team realize data value through effective organizational collaboration.

- Data stewards

  As the core of the basic architecture of a data engineering team, data stewards conduct design and technical planning for the overall architecture of the base platform for smart data, ensuring the satisfaction of the entire system’s requirements for continuous improvement of data storage and computing when an enterprise transforms itself toward intelligence and continuous development.

- Data engineers

  Data engineers are a core technical team that supports data platform building, operation, and maintenance, as well as data processing and mining, ensuring the stable operation of the smart data platform and high-quality data.

- Data analysts

  Data analysts are the core staff responsible for technology, data, and business; they mine and feed back problems based on analysis of historical data and provide decision-making support for problem solving and continuous optimization in terms of business development.

- Data scientists

  Data scientists further mine the relationship between data endogeneity and exogeneity and support data analysts’ in-depth analysis based on algorithms and models; they also create models based on data and provide decisions for the future development of an enterprise.

- Data product managers

  Data product managers analyze and mine user demands, create visualized data presentations on different functions of an enterprise, such as management, sales, data analysis, and development, and support their decision making, operation and analysis, representing the procedure- and information-oriented commercial value of data.

An enterprise may maintain a unified data engineering team across its organization depending on the complexity of the data and business, or it may establish a separate data engineering team for each business line, or use both styles. For example, the Growth Team directly led by the Facebook CEO is divided into two sub-teams, namely “data analysis” and “data infrastructure.” All data of the enterprise should be acquired, organized, analyzed, and acted on so as to facilitate continuous business optimization. Similarly, at Airbnb, the Department of Data Fundamentals manages all corporate data and designs and maintains a unified data acquisition, labeling, unification, and modeling platform. Data scientists are distributed within each business line and analyze business value based on the unified data platform.

Data steward

A data steward is responsible for planning and managing the data assets of an enterprise, including data purchases, utilization, and maintenance, so as to provide stable, easily accessible, and high-quality data.

A data steward should have the following capabilities:

- A deep understanding of the data managed, beyond that of all other personnel in the enterprise

- An understanding of the correlation between data and business flow, such as how data is generated and used in the business flow

- Ability to guarantee the stability and availability of data

- Ability to formulate operating specifications and security strategies concerning data

A data steward should understand business and the correlation between data and business, which can help the data steward reasonably plan data—for example, whether more data should be purchased so as to increase coverage, whether data quality should be optimized so as to increase the matching rate, and whether the data access strategy should be adjusted so as to satisfy compliance requirements.

Data engineer

A data engineer is responsible for the architecture and the technical platform and tools needed in data engineering, including data connectors, the data storage and computing engine, data visualization, the workflow engine, and so on. A data engineer should ensure data is processed in a stable and reliable way and provide support for the smooth operation of the work of the data steward, data scientist, and data analyst.

The capabilities of a data engineer should include, but are not limited to, the following aspects:

- Programming languages, including Java, Scala, and Python

- Storage techniques, including column-oriented storage, row-oriented storage, KV, document, filesystem, and graph

- Computing techniques, including stream, batch, ad-hoc analysis, search, pre-convergence, and graph calculation

- Data acquisition techniques, including ETL, Flume, and Sqoop

- Data visualization techniques, including D3.js, Gephi, and Tableau

Generally, data engineers come from the existing software engineering team but should have capabilities relating to data scalability.

Data scientist

Some enterprises would classify data scientists as data analysts, as they undertake similar tasks (i.e., acquiring insight from data to guide decisions).

In fact, the roles do not require completely identical skills. Data scientists should cross even higher thresholds and should be able to deal with more complex data contexts. A data scientist should have a deep background in computer science, statistics, mathematics, and software engineering as well as industry knowledge and should have the capacity to undertake algorithm research (such as algorithm optimization or new algorithm modeling). Thus, they are able to solve some more complex data issues, such as how to optimize websites to increase user retention rate or how to promote game apps so as to better realize users’ life cycle value.

If we say data analysts show a preference for summaries and analytics (descriptive and diagnostic analytics), data scientists highlight future strategic analytics (predictive analytics and independent decision analytics). In order to continuously create profits for enterprises, data scientists should have a deep understanding of business.

The capabilities of a data scientist should include, but are not limited to, the following aspects:

- Programming languages, including Java, Scala, and Python

- Storage techniques, including column-oriented storage, row-oriented storage, KV, document, filesystem, and graph

- Computing techniques, including stream, batch, ad-hoc analysis, search, pre-convergence, and graph calculation

- Data acquisition techniques, including ETL, Flume, and Sqoop

- Machine learning techniques, including TensorFlow, Petuum, and OpenMPI

- Traditional data science tools, including SPSS, MATLAB, and SAS

In terms of engineering skills, data scientists and data engineers have a similar breadth; data engineers, however, have greater depth. Though high-quality engineering is not strictly required of data scientists, proper engineering skills can help improve the efficiency of some data exploration tests.

As a consequence, the cost of acquiring these engineering skills can be lower than the cost of communicating and collaborating with data engineering teams.

Additionally, data scientists should have a deep understanding of machine learning and the data science platform. However, this does not mean data engineers do not need to understand algorithms: in some enterprises, data scientists are responsible for algorithm design while data engineers are responsible for implementing the algorithms.

Data analyst

Data analysts are responsible for exploring data based on data platforms, tools, and algorithm models and gaining business insight so as to satisfy the demands of business users. They should not only have an understanding of data but also master the specialized knowledge regarding business such as accounting, financial risk control, weather, and game app operation.

To some extent, data analysts may be regarded as data scientists at the primary level but do not need to have a solid mathematical foundation and algorithm research skills. Nevertheless, it is necessary for them to master Excel, SQL, basic statistics, and statistical tools, as well as data visualization.

A data analyst should have the following core capabilities:

- Programming

  A general understanding of a programming language, such as Python or Java, or of a database language can effectively help data analysts to process data in a scaled and characteristic manner and improve the efficiency of analytics.

- Statistics

  Statistics can enable analysts to interpret both raw data and business problems.

- Machine learning

  Regular machine learning algorithms can help data analysts to mine the potential interaction among groups in the dataset and improve the depth of their business analysis and breadth of perspectives.

- Data munging

  In view of the many nonendogenetic controllable factors that may cause data deviation, such as system stability and manual operation, data analysts are required to munge data in a professional manner and guarantee the quality of the data used for analysis.

- Data visualization

  Data visualization supports data interpretability for management decision making and salespeople on the one hand and enables data analysts to conduct a second mining of business problems from the perspective of charts on the other hand.

Generally, data analysts are experienced data experts in teams. They should have a strong curiosity about data and stay aware of the continuously emerging techniques and best practices.

Data analysts should also be skillful at communication. They should not only be able to satisfy the demand for exchanging business with business users but also maintain a smooth collaboration with the other roles of data teams so as to obtain resource support.

Data product manager

A data product manager focuses on data value. Data product managers should understand business, data, and their correlation. Thus, it is required that a data product manager have the capabilities of both product manager and data analyst.

Data product managers are not data analysts but should collaborate with data analysts and make data engineering product-oriented. Data analysts focus more on projects as well as the efficiency and speed of insight. They use the most convenient and agile tools to deal with business problems as soon as possible. Data product managers focus more on products and the stability and reliability of insight. They should select validated techniques and consider data security. Data product managers should also consider business demand more completely and develop data products that can be recognized in abnormal environments.

Data Science

As required by the smart data era, data science spans across computer science, statistics, mathematics, software engineering, industry knowledge, and other fields. It studies how to analyze data and to gain insight.

With the emergence of big data, smart enterprises must deal with a greater data scale and more complex data types on smart data platforms through data science. Some traditional fields and data science share similar concepts, including advanced analytics, data mining, and predictive analytics.

Data science continues some ideas of statistics, for example, statistical search, comparison, clustering, classification, and other analytics and summarization of large volumes of data. Its conclusions are correlations rather than necessary cause–effect relationships. Although data science relies heavily on computation, it is not based on a known mathematical model, which distinguishes it from computer simulation. Instead, it replaces cause–effect relationships and rigorous theories and models with large numbers of data correlations and acquires new “knowledge” based on such correlations.
