Chapter 1. The Reliability Stack

We live in a world of services. There are vendors that will sell you Network as a Service or Infrastructure as a Service. You can pay for Monitoring as a Service or Database as a Service. And that doesn’t even touch upon the most widely used acronym, SaaS, which in and of itself can stand for Software, Security, Search, or Storage as a Service.

Furthermore, many organizations have turned to a microservice approach, one where you split functionality out into many small services that all talk to each other and depend on each other. Our systems are becoming more complex and distributed and deeper with every passing year.

It seems like everything is a service now, to the point that it can be difficult to define exactly what a service even is. If you run a retail website, you might have microservices that handle things like user authentication, payment systems, and keeping track of what a user has put in their shopping cart. But clearly, the entire retail website is itself also a service, albeit one that is composed of many smaller components.

We want to make sure our services are reliable, and to do this with (and within) complex systems, we need to start thinking about things in a different way than we may have in the past. You might be responsible for a globally distributed service with thousands of moving parts, or you might just be responsible for keeping a handful of VMs running. In either case, you almost certainly have human users who depend on those things at some point, even if they’re many layers removed. And once you take human users into account, you need to think about things from their perspective.

Our services may be small or incredibly deep and complex, but almost without fail these services can no longer be properly understood via the logs or stack traces we have depended on in the past. With this shift, we need not just new types of telemetry, but also new approaches for using that telemetry.

This opening chapter establishes some truths about services and their users, outlines the various components of how service level objectives work, provides some examples of what services might look like in the first place, and finally gives you a few additional points of guidance to keep in mind as you read the rest of this book.

Service Truths

Many things may be true about a particular service, but three things are always true.

First, a proper level of reliability is the most important operational requirement of a service. A service exists to perform reliably enough for its users, where reliability encompasses not only availability but also many other measures, such as quality, dependability, and responsiveness. The question “Is my service reliable?” is pretty much analogous to the question “Is my service doing what its users need it to do?” As we discuss at great length in this book, you don’t want your target to be perfect reliability, but you’ll need your service to do what it is supposed to do well enough to be useful to your users. We’ll discuss what reliability actually means in much more detail in Chapter 2, but it always comes back to doing what your users need.

Because of this, the second truth is that how your service appears to be operating to your users is what determines whether you’re being reliable; what things look like from your end does not. It doesn’t matter if you can point to zero errors in your logs, or perfect availability metrics, or incredible uptime; if your users don’t think you’re being reliable, you’re not.

Note

What do we mean when we say “user”? Simply put, a user is anything or anyone that relies on your service. It could be an actual human, the software of a paying customer, another service belonging to an internal team, a robot, and so on. For brevity this book refers to whatever might need to communicate with your service as a user, even if it’s many levels removed from an actual human.

Finally, the third thing that is always true is that nothing is perfect all the time, so your service doesn’t have to be either. Not only is it impossible to be perfect, but the financial and human costs of creeping ever closer to perfection grow much faster than linearly. Luckily, it turns out that software doesn’t have to be 100% perfect all the time, either.

The Reliability Stack

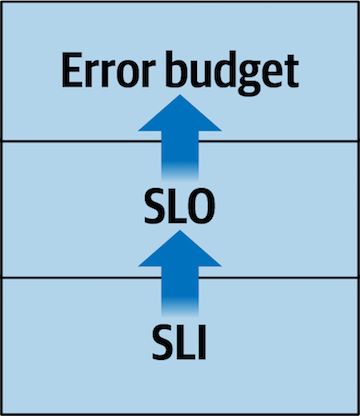

If we accept that reliability is the most important requirement of a service, that users determine this reliability, and that it’s okay to not be perfect all the time, then we need a way of thinking that can encompass these three truths. You have limited resources to spend, be they financial, human, or political, and one of the best ways to account for these resources is via what I’ve taken to calling the Reliability Stack. This stack is what the majority of this book is about. Figure 1-1 shows the building blocks; we’ll begin with a brief overview of the key terms so we’re all on the same page.

Figure 1-1. The basic building blocks of the Reliability Stack

At the base of the Reliability Stack you have service level indicators, or SLIs. An SLI is a measurement that is determined over a metric, or a piece of data representing some property of a service. A good SLI measures your service from the perspective of your users. It might represent something like whether someone can load a web page quickly enough. People like websites that load quickly, but they also don’t need things to be instantaneous. An SLI is most often useful if it can result in a binary “good” or “bad” outcome for each event: that is, either “Yes, this service did what its users needed it to” or “No, this service did not do what its users needed it to.” For example, your research could determine that users are happy as long as web pages load within 2 seconds. Now you can say that any page load time that is equal to or less than 2 seconds is a “good” value, and any page load time that is greater than 2 seconds is a “bad” value.

Once you have a system that can turn an SLI into a “good” or “bad” value, you can easily determine the percentage of “good” events by dividing the number of good events by the total number of events. For example, let’s say you have 60,000 visitors to your site in a given day, and you’re able to measure that 59,982 of those visits resulted in pages loading within 2 seconds. You can divide your good visits by your total visits to return a percentage indicating how often your users’ requests resulted in them receiving a web page quickly enough:

$$\frac{59{,}982}{60{,}000} = 0.9997 = 99.97\%$$

At the next level of the stack we find service level objectives, or SLOs, which are informed by SLIs. You’ve seen how to convert the number of good events among total events to a percentage. Your SLO is a target for what that percentage should be. The SLO is the “proper level of reliability” targeted by the service. To continue with our example, you didn’t hear any complaints about the day when 99.97% of page loads were “good,” so you could infer that users are happy enough as long as 99.97% of pages load quickly enough. There is much more at play than that, however. Chapter 4 covers how to choose good SLOs.
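The classification and percentage steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: it assumes page load times are already available as durations in seconds, and the names `is_good`, `sli_percentage`, and `THRESHOLD_SECONDS` are hypothetical. The 2-second threshold and the 60,000/59,982 counts come from the running example; the short list of load times is made up.

```python
# Hypothetical threshold taken from the running example:
# a page load at or under 2 seconds counts as "good".
THRESHOLD_SECONDS = 2.0


def is_good(load_time_seconds: float, threshold: float = THRESHOLD_SECONDS) -> bool:
    """Classify one page load as good (True) or bad (False)."""
    return load_time_seconds <= threshold


def sli_percentage(good_events: int, total_events: int) -> float:
    """Return the percentage of events that were good."""
    if total_events == 0:
        raise ValueError("no events to measure")
    return 100.0 * good_events / total_events


load_times = [0.8, 1.9, 2.0, 2.4, 1.1]  # made-up sample durations in seconds
good = sum(is_good(t) for t in load_times)
print(good, sli_percentage(good, len(load_times)))  # 4 80.0

# The chapter's daily numbers: 59,982 good page loads out of 60,000.
print(round(sli_percentage(59_982, 60_000), 2))  # 99.97
```

Note that a load of exactly 2.0 seconds counts as good, matching the “equal to or less than 2 seconds” rule in the text.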

Note

In Site Reliability Engineering (O’Reilly), service level indicators were defined fairly broadly as “a carefully defined quantitative measure of some aspect of the level of service that is provided.” In The Site Reliability Workbook (O’Reilly), they were more commonly defined as the ratio of good events to total events. In this book we take a more nuanced approach. The entire system starts with measurements, applies some thresholds and/or aggregates them, categorizes things into “good” values and “bad” values, converts these values into a percentage, and then compares this percentage against a target. Exactly how you go about this won’t be the same for every organization; it will heavily depend on the kinds of metrics or measurements available to you, how you are able to store and analyze them, their quantity and quality, and where in your systems you can perform the necessary math. There is no universal answer to the question of where to draw the line between SLI and SLO, but it turns out this doesn’t really matter as long as you’re using the same definitions throughout your own organization. We’ll discuss these nuances in more detail later in the book.
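One possible arrangement of that measure, aggregate, classify, convert, and compare sequence is sketched below, purely as an assumption about where the lines might be drawn. Raw `(timestamp, latency)` samples are grouped into one-minute buckets by keeping the worst latency in each, every bucket is classified as good or bad against a threshold, the good buckets are turned into a percentage, and that percentage is compared with a target. The function names, the sample data, and the choice of a per-minute maximum are all illustrative; your own organization might aggregate differently or not at all.

```python
from collections import defaultdict


def worst_latency_per_minute(samples):
    """Group (timestamp_seconds, latency_seconds) samples into one-minute
    buckets and keep the maximum (worst) latency observed in each bucket."""
    worst = defaultdict(float)
    for timestamp, latency in samples:
        minute = int(timestamp // 60)
        worst[minute] = max(worst[minute], latency)
    return dict(worst)


def evaluate(samples, threshold_seconds, target_percent):
    """Aggregate, classify each minute, convert to a percentage, compare with target."""
    buckets = worst_latency_per_minute(samples)
    if not buckets:
        raise ValueError("no samples")
    good = sum(1 for latency in buckets.values() if latency <= threshold_seconds)
    percent = 100.0 * good / len(buckets)
    return percent, percent >= target_percent


# Made-up samples spanning three minutes; the second minute has a 2.5 s outlier.
samples = [(0, 0.5), (30, 1.8), (65, 2.5), (90, 0.7), (130, 1.0)]
percent, meets_target = evaluate(samples, threshold_seconds=2.0, target_percent=99.0)
print(round(percent, 1), meets_target)  # 66.7 False
```

Where the “SLI” ends and the “SLO” begins in this chain is exactly the kind of boundary the note says each organization can draw for itself.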

Finally, at the top of the stack we have error budgets, which are informed by SLOs. An error budget is a way of measuring how your SLI has performed against your SLO over a period of time. It defines how unreliable your service is permitted to be within that period and serves as a signal of when you need to take corrective action. It’s one thing to be able to find out if you’re currently achieving your SLO, and a whole other thing to be able to report on how you’ve performed against that target over a day, a week, a month, a quarter, or even a year. You can then use that information to have discussions and make decisions about how to prioritize your work; for example, if you’ve exhausted your error budget over a month, you might dedicate a single engineer to reliability improvements for a sprint cycle, but if you’ve exhausted your error budget over an entire quarter, perhaps it’s time for an all-hands-on-deck situation where everyone focuses on making the service more reliable. Having these discussions is covered in depth in Chapter 5.
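Reporting performance over several windows can be sketched as follows. This is a minimal example under stated assumptions: daily good and total event counts are already stored as a list of `(good, total)` tuples, oldest first; the SLO is passed as a decimal string so Python’s `fractions.Fraction` can represent it exactly; and the function name `window_report` and the sample counts are hypothetical. It shows how a single bad day can miss the target while the week as a whole still meets it.

```python
from fractions import Fraction


def window_report(daily_counts, slo_percent, windows=(1, 7, 30)):
    """For each window length in days that the data covers, return the SLI
    percentage over the most recent days and whether it meets the SLO.

    daily_counts: list of (good_events, total_events) tuples, oldest first.
    slo_percent: target as a decimal string, e.g. "99.97".
    """
    target = Fraction(slo_percent) / 100
    report = {}
    for days in windows:
        if days > len(daily_counts):
            continue  # not enough history for this window yet
        recent = daily_counts[-days:]
        good = sum(g for g, _ in recent)
        total = sum(t for _, t in recent)
        sli = Fraction(good, total)
        report[days] = (float(sli * 100), sli >= target)
    return report


# Six clean days followed by one day with 100 bad events (made-up numbers).
daily = [(60_000, 60_000)] * 6 + [(59_900, 60_000)]
for days, (percent, ok) in window_report(daily, "99.97").items():
    print(f"last {days} day(s): {percent:.3f}% meets SLO: {ok}")
```

Using exact fractions avoids floating-point surprises when an SLI lands exactly on its target.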

You may have also heard of service level agreements, or SLAs (Figure 1-2). They’re often fueled by the same (or at least similar) SLIs as your SLOs are, and often have their own error budgets as well. An SLA is very similar to an SLO, but differs in a few important ways.

Figure 1-2. Service level agreements are also informed by service level indicators

SLAs are business decisions that are written into contracts with paying customers. You may also sometimes see them internally at companies that are large enough to have charge-back systems between organizations or teams. They’re explicit promises that involve payment.

An SLO is a target percentage you use to help you drive decision making, while an SLA is usually a promise to a customer that includes compensation of some sort if you don’t hit your targets. If you violate your SLO, you generate a piece of data you use to think about the reliability of your service. If you violate an SLO over time, you have a choice about doing something about it. If you violate your SLA, you owe someone something. If you violate an SLA over time, you generally don’t have much of a choice about how and when to act upon it; you need to be reactive, or you likely won’t remain in business for very long.

SLAs aren’t covered here in much depth. Developing and managing SLAs for an effective business is a topic for another book and another time. This book focuses on the elements of the Reliability Stack that surround service level objectives and how you can use this data to have better discussions and make better decisions within your own organization or company.

Service Level Indicators

SLIs are the single most important part of the Reliability Stack, and may well be the most important concept in this entire book. You may never get to the point of having reasonable SLO targets or calculated error budgets that you can use to trigger decision making. But taking a step back and thinking about your service from your users’ perspective can be a watershed moment for how your team, your organization, or your company thinks about reliability. SLIs are powerful datasets that can help you with everything from alerting on things that actually matter to driving your debugging efforts during incident response, and all of that is simply because they’re measurements that take actual users into account.

A meaningful SLI is, at its most basic, just a metric that tells you how your service is operating from the perspective of your users. Although adequate starting points might include things like API availability or error rates, better SLIs measure things like whether it is possible for a user to authenticate against your service and retrieve data from it in a timely manner. This is because the entire user journey needs to be taken into account. Chapter 3 covers this in much more depth.

A good SLI can also be expressed meaningfully in a sentence that all stakeholders can understand. As we’ll cover later, the math that might inform the status of an SLI can become quite complicated for complex services; however, a proper SLI definition has to be understandable by a wide audience. Chapter 15 talks much more about the importance of definitions, understandability, and discoverability.

Service Level Objectives

As has been mentioned, and will be mentioned many more times, trying to ensure anything is reliable 100% of the time is a fool’s errand. Things break, failures occur, unforeseen incidents take place, and mistakes are made. Everything depends on something else, so even if you’re able to ensure that you’re reliable 99.999% of the time, if something else you depend on is any less than that, your users may not even know that your specific part of the system was operating correctly in the first place. That “something” doesn’t even have to be a hard dependency your service has; it can be anything in the critical path of your users. For example, if the majority of your users rely on consumer-grade internet connections, nothing you can do will ever make your services appear to be more reliable than what those connections allow a user to experience. Nothing runs in a complete vacuum; there are always many factors at play in complex systems.
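The arithmetic behind that dependency argument can be illustrated with a common simplification: if every request must pass through several components in series, and their failures are assumed to be independent, the availability a user experiences is the product of the individual availabilities. Real systems rarely satisfy that independence assumption, so treat this as a rough model; the function name is hypothetical, the 99.999% figure comes from the text, and the 99.9% dependency is an illustrative value.

```python
from fractions import Fraction
from math import prod


def serial_availability(percents):
    """Composite availability, in percent, of components that must all succeed,
    assuming independent failures. Percentages are given as decimal strings."""
    return prod(Fraction(p) / 100 for p in percents) * 100


# Your service at 99.999%, behind a dependency at (hypothetically) 99.9%.
composite = serial_availability(["99.999", "99.9"])
print(float(composite))  # 99.899001
```

Under this model the user-visible result sits just below the weakest link, no matter how many nines your own component achieves.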

The good news is that computer systems don’t actually need to be perfect. Computer systems even employ error detection and correction techniques at the hardware level. From their inception, the networking protocols we use today have had built-in systems to handle errors and failures, because it’s understood that those errors and failures are going to happen no matter what you try to do about them.

Even at the highest levels, such as a human browsing to a web page and expecting it to load, failures of some sort are expected and known to occur. A web page may load slowly, or parts of it won’t load at all, or the login button won’t register a click. These are all things humans have come to anticipate and are usually okay with, as long as they don’t happen too often or at a particularly important moment.

And that’s all SLOs are. They’re targets for how often you can fail or otherwise not operate properly and still ensure that your users aren’t meaningfully upset. If a visitor to a website has a page that loads very slowly (or even not at all) once, they’ll probably shrug their shoulders and try to refresh. If this happens every single time they visit the site, however, the user will eventually abandon it and find another one that suits their needs.

You want to give your users a good experience, but you’ll run out of resources in a variety of ways if you try to make sure this good experience happens literally 100% of the time. SLOs let you pick a target that lives between those two worlds.

Note

It’s important to reiterate that SLOs are objectives; they are not in any way contractual agreements. You should feel free to change or update your targets as needed. Things in the world will change, and those changes may affect how your service operates. It’s also possible that those changes will alter the expectations of your users. Sometimes you’ll need to loosen your SLO because what was once a reasonably reachable target no longer is; other times you’ll need to tighten your target because the demands or needs of your users have evolved. This is all fine, and the discussions that take place during these periods are some of the most important aspects of an SLO-based approach to reliability. Chapter 4 and Chapter 14 explore a wide variety of strategies for how to pick SLO targets and when you should change these targets.

Error Budgets

Error budgets are, in a way, the most advanced part of the Reliability Stack. This is not only because they rely on two other parts of the stack both existing and being meaningful, but also because they’re the most difficult to implement and use in an effective manner. Additionally, while error budgets are incredibly useful in explaining the reliability status of your service to other people, they can sometimes be much more complicated to calculate than you might expect.

There are two very different approaches you can take in terms of calculating error budgets: events-based and time-based. The approach that’s right for you will largely depend on the fidelity of the data available, how your systems work, and even personal preference. With the first approach, you think about good events and bad events. The aim is to figure out how many bad events you might be able to incur during a defined error budget time window without your user base becoming dissatisfied.
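For the events-based approach, the budget for a window is the share of events the SLO allows to be bad. The sketch below is illustrative only: the function names are hypothetical, and it assumes you already know (or are willing to estimate) the total number of events in the window. It reuses the chapter’s 60,000-visit, 99.97% example, where exactly 18 slow page loads are allowed.

```python
from fractions import Fraction


def allowed_bad_events(total_events, slo_percent):
    """How many bad events the window can absorb while still meeting the SLO.
    slo_percent is a decimal string such as "99.97"."""
    return (1 - Fraction(slo_percent) / 100) * total_events


def remaining_event_budget(good_events, total_events, slo_percent):
    """Allowed bad events minus the bad events already observed.
    A negative result means the error budget is exhausted."""
    bad_events = total_events - good_events
    return allowed_bad_events(total_events, slo_percent) - bad_events


print(allowed_bad_events(60_000, "99.97"))              # 18
print(remaining_event_budget(59_982, 60_000, "99.97"))  # 0
```

With 59,982 good loads out of 60,000, the day used its budget exactly; one more slow load would have pushed it negative.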

The second approach focuses on the concept of “bad time intervals” (often referred to as “bad minutes,” even if your resolution of measurement is well below a minute). This gives you yet another way of explaining the current status of your service. For example, let’s say you have a 30-day window, and your SLO says your target reliability is 99.9%. This means you can have 0.1% failures or bad events over those 30 days before you’ve exceeded your error budget. However, you can also frame this as “We can have 43 bad minutes every month and meet our target,” since 30 days contain 43,200 minutes and 0.1% of that is approximately 43 minutes. Either way, you’re saying the same thing; you’re just using different implementations of the same philosophy.
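The time-based framing can be checked with the same kind of arithmetic: a 30-day window has 30 × 24 × 60 = 43,200 minutes, and 0.1% of that is 43.2. The sketch below assumes you already classify each minute as good or bad (how to do that is a separate decision), and the function names and the sample of observed minutes are hypothetical.

```python
from fractions import Fraction


def bad_minute_budget(window_days, slo_percent):
    """Minutes in the window that may be bad while still meeting the SLO."""
    window_minutes = window_days * 24 * 60
    return (1 - Fraction(slo_percent) / 100) * window_minutes


def remaining_bad_minutes(minute_is_good, window_days, slo_percent):
    """Budget left after subtracting the bad minutes observed so far.
    minute_is_good holds one boolean per minute already evaluated."""
    spent = sum(1 for ok in minute_is_good if not ok)
    return bad_minute_budget(window_days, slo_percent) - spent


print(float(bad_minute_budget(30, "99.9")))  # 43.2

observed = [True] * 1_000 + [False] * 10  # 10 bad minutes so far (made up)
print(float(remaining_bad_minutes(observed, 30, "99.9")))  # 33.2
```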

Error budget calculation, and the decisions about which approach to use, can get pretty complicated. Chapter 5 covers strategies for handling this in great detail.

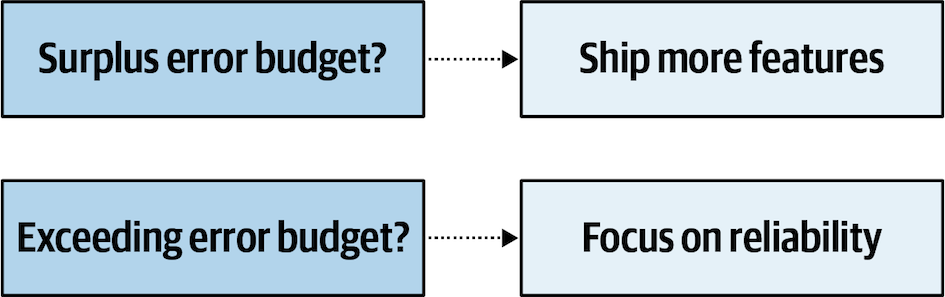

At their most basic level, error budgets are just representations of how much a service can fail over some period of time. They allow you to say things like, “We have 30 minutes of error budget remaining this month” or “We can incur 5,000 more errors every day before we run out of error budget this month.” However, error budgets are useful for much more than communication. They are, at their root, very important decision-making tools. While there is much more nuance involved in how this works in practice, Figure 1-3 illustrates the fundamental role they play in decision making.

Figure 1-3. A very basic representation of how you can use error budgets to drive decisions

The classic example is that if you have error budget remaining, you can feel free to deploy new changes, ship new features, experiment, perform chaos engineering, etc. However, if you’re out of error budget, you should take a step back and focus on making your service more reliable. Of course, this basic example is just that: an example. To inform your decisions, it’s important to have the right discussions. We’ll look at the role of error budgets in those discussions in much more detail in Chapter 5.
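A deliberately simplified policy along those lines might look like this. It is a hypothetical sketch: it combines the basic rule above with the month-versus-quarter escalation example given earlier in the chapter, the function and parameter names are invented for illustration, and a real error budget policy should come out of the discussions described in Chapter 5 rather than from code.

```python
def error_budget_decision(month_budget_remaining: float,
                          quarter_budget_remaining: float) -> str:
    """Map remaining error budget to a suggested course of action.
    Budgets may be in any consistent unit (bad events or bad minutes)."""
    if quarter_budget_remaining <= 0:
        # Exhausted over a whole quarter: everyone works on reliability.
        return "all hands: everyone focuses on reliability"
    if month_budget_remaining <= 0:
        # Exhausted over a month: a smaller, targeted response.
        return "dedicate an engineer to reliability work for a sprint"
    return "budget remains: ship features, experiment, run chaos engineering"


print(error_budget_decision(month_budget_remaining=12.5, quarter_budget_remaining=40))
```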

What Is a Service?

The word service is used a lot in this book, and that probably isn’t too much of a surprise since it’s in the title. But what do we mean when we talk about a service? Just as we defined a user as anything or anyone that relies on a service (be it a paying customer, an internal user, or another service), a service is any system that has users! Now that we’ve outlined the basic ideas around how to think about measuring the reliability of a service, let’s discuss some common examples of what services are.

Note

This book sometimes uses the word system in place of service. This is because computer services are almost always complex systems as well.

Example Services

This section briefly outlines a number of common service types. This list is by no means exhaustive, since complex systems are generally unique in one way or another. This is just a starting point for thinking about what constitutes a service, and hopefully you’ll see something that resembles your service in one of the following. Example SLIs and SLOs are presented for various service types throughout this book, and in particular in Chapter 12.

Web services

The most accessible way to describe a service is to think about a web-based service that humans interact with directly: a website, a video streaming service, a web-based email provider, and so on. These kinds of systems serve as great examples to base discussions around, for a few reasons. First, they are often much more complicated and have many more uses than you might first suspect, which means that they’re useful for considering multiple SLIs and SLOs for a single service. Second, just about everyone has interacted with a service like this and likely does so many times every day. This means that it’s easy for anyone to follow along without having to think critically about a type of service they may not be familiar with. For these reasons, we’ll frequently return to using services that fall into this category as examples throughout the book. However, that is not meant to imply in any sense that these are the only types of services out there, or the only types of services that can be made more reliable via SLO-based approaches.

Request and response APIs

Beyond web-based services, the next most common services you’ll hear discussed when talking about SLOs are request and response APIs. This includes any service that provides an API that takes requests and then sends a response. In a way, almost every service fits this definition, if only loosely, since essentially every service takes requests and responds to them in some way. However, the term “request and response API” is most commonly used to describe services in a distributed environment that accept a form of remote procedure call (RPC) with a set of data, perform some sort of processing, and respond to that call with a different set of data.

There are two primary reasons why this kind of service is commonly discussed when talking about SLOs. First, they’re incredibly common, especially in a world of microservices. Second, they’re often incredibly easy to measure in comparison with some of the service types we’ll discuss. Establishing meaningful SLIs for a request and response API is frequently much simpler than doing so for a data processing pipeline, a database, a storage platform, etc.

If you’re not responsible for a web service or a request and response API, don’t fret. Some additional service types are coming right up, and we discuss a whole variety of services in Chapter 12. We’ll also consider data reliability in depth in Chapter 11.

Data processing pipelines

Data processing pipelines are similar to request and response APIs in the sense that something sends a request with data and processing of this data occurs. The big difference is that the “response” after the processing part of a pipeline isn’t normally sent directly back to the original client, but is instead output to a different system entirely.

Data processing pipelines can be difficult to develop SLIs and SLOs for. SLIs for pipelines are complicated because you generally have to measure multiple things in order to inform a single SLO. For example, for a log processing pipeline you’d have to measure log ingestion rate and log indexing rate in order to get a good idea of the end-to-end latency of your pipeline; even better would be to measure how much time elapses after inserting a particular record into the pipeline before you can retrieve that exact record at the other end. Additionally, when thinking about SLIs for a pipeline, you should consider measuring the correctness of the data that reaches the other end. For lengthy pipelines or those that don’t see high volumes of requests per second, measuring data correctness and freshness may be much more interesting and useful than measuring end-to-end latency. Just knowing that the data at the end of the pipeline is being updated frequently enough and is the correct data may be all you need.
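Measuring how long a particular record takes to travel through a pipeline is often done with synthetic probe records. The sketch below assumes you can write a record into the pipeline’s input and inspect records at its output; the `ProbeTracker` class, the `probe_id` field, and the injected clock are hypothetical, and the pipeline itself is stood in for by a plain list so the example runs on its own. Probes that have not shown up at the output count as bad, which also folds a crude freshness check into the same SLI.

```python
import time
import uuid


class ProbeTracker:
    """Tracks synthetic probe records written into a pipeline and how long
    each one took to appear at the other end."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.sent = {}       # probe_id -> time written
        self.latencies = {}  # probe_id -> seconds until seen at the output

    def send(self, write):
        """Write a new probe record using the supplied write function."""
        probe_id = str(uuid.uuid4())
        self.sent[probe_id] = self.clock()
        write({"probe_id": probe_id})
        return probe_id

    def observe(self, record):
        """Call for each record read at the pipeline's output."""
        probe_id = record.get("probe_id")
        if probe_id in self.sent and probe_id not in self.latencies:
            self.latencies[probe_id] = self.clock() - self.sent[probe_id]

    def good_fraction(self, threshold_seconds):
        """Fraction of probes seen at the output within the threshold.
        Probes not yet seen are treated as bad."""
        if not self.sent:
            raise ValueError("no probes sent")
        good = sum(
            1 for probe_id in self.sent
            if self.latencies.get(probe_id, float("inf")) <= threshold_seconds
        )
        return good / len(self.sent)


# Simulated clock and pipeline so the example is deterministic.
now = [0.0]
tracker = ProbeTracker(clock=lambda: now[0])
pipeline = []
first = tracker.send(pipeline.append)    # written at t=0
now[0] = 5.0
second = tracker.send(pipeline.append)   # written at t=5
now[0] = 30.0
tracker.observe(pipeline[0])             # first probe arrives after 30 s
now[0] = 200.0
tracker.observe(pipeline[1])             # second probe arrives after 195 s
print(tracker.good_fraction(threshold_seconds=60))  # 0.5
```

Treating every in-flight probe as bad is conservative; a production version might only count a missing probe once it has been outstanding for longer than the threshold.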

SLOs are often more complicated with data processing pipelines because there are just so many more factors at play. A pipeline necessarily requires more than a single component, and these components may not always operate with similar reliability. Data processing pipelines receive much more attention in Chapter 11.

Batch jobs

Even more complicated than data processing pipelines are batch jobs. Batch jobs are a series of processes that are started only when certain conditions are met. These conditions could include reaching a certain date or time, a queue filling up, or resources being available when they weren’t before. Some good things to measure for batch jobs include what percentage of the time jobs start correctly, how often they complete successfully, and whether the resulting data produced is both fresh and correct. You can apply many of the same tactics we discussed for data processing pipelines to many batch jobs.
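The start, completion, and freshness measurements can be computed from per-run records. The `JobRun` fields, the `batch_slis` function, the sample runs, and the 24-hour freshness threshold below are hypothetical choices made for illustration; correctness checks are left out because what counts as correct output depends entirely on the job.

```python
from dataclasses import dataclass


@dataclass
class JobRun:
    started_on_time: bool    # did the job start when its conditions were met?
    completed: bool          # did it finish successfully?
    output_age_hours: float  # age of the newest output data when checked


def batch_slis(runs, max_output_age_hours=24.0):
    """Return start, completion, and freshness SLIs as fractions of all runs."""
    if not runs:
        raise ValueError("no job runs to measure")
    n = len(runs)
    return {
        "start": sum(r.started_on_time for r in runs) / n,
        "completion": sum(r.completed for r in runs) / n,
        "freshness": sum(r.output_age_hours <= max_output_age_hours for r in runs) / n,
    }


runs = [
    JobRun(True, True, 2.0),
    JobRun(True, False, 30.0),
    JobRun(False, False, 50.0),
    JobRun(True, True, 5.0),
]
print(batch_slis(runs))  # {'start': 0.75, 'completion': 0.5, 'freshness': 0.5}
```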

Databases and storage systems

At one level, databases and storage systems are just request and response APIs: you send them some form of data, they do some kind of processing on that data, and then they send you a response. In the case of a database, the incoming data is likely very structured, the processing might be intense, and the response might be large. On the other hand, for storage system writes, the data may be less structured, the processing might mostly involve flipping bits on an underlying hardware layer, and the response might simply be a true or false telling you whether the operation completed successfully. The point is that for both of these service types, measuring things like latency and error rates, just as you might for a request and response API, can be very important.

However, databases and storage systems also need to do other, more specialized things. Like processing pipelines and batch jobs, for example, they also need to provide correct and fresh data. But one thing that sets these service types apart is that you also need to measure data availability and durability. Developing SLIs for these types of systems can be difficult, and picking SLO targets that make sense even more so. Chapter 11 will help you tackle these topics in the best possible way.

Compute platforms

All software has to run on something, and no matter what that platform is, it’s also a service. Compute platforms can take many forms:

Operating systems running on bare-metal hardware

Virtual machine (VM) infrastructures

Container orchestration platforms

Serverless computing

Infrastructure as a Service

Compute platforms are not overly difficult to measure, but they’re often overlooked when organizations adopt SLO-based approaches to reliability. The simple fact of the matter is that the services that run on top of these platforms will have a more difficult time picking their own SLO targets if the platforms themselves don’t have any. SLIs for services like these should measure things like how quickly a new VM can be provisioned or how often container pods are terminating. Availability is of the utmost importance for these service types, but you also shouldn’t discount measuring persistent disk performance, virtual network throughput, or control plane response latencies.

Hardware and the network

At the bottom of every stack you will find physical hardware and the network that allows various hardware components to communicate with each other. With the advent of cloud computing, not everyone needs to care about things at this level anymore, but for those who do, you can use SLO-based approaches to reliability here too.

Computing proper SLIs and setting appropriate SLOs generally requires a lot of data, so if you’re responsible for just a few racks in a data center somewhere, it might not make a lot of sense to expend a lot of effort trying to set reasonable targets. But if you have a large platform, you should be able to gather enough data to measure things such as hard drive or memory failure rates, general network performance, or even power availability and data center temperature targets.

Physical hardware, network components, power circuits, and HVAC systems are all services, too. While services of these types might have the biggest excuse to aim for very high reliability, they, too, cannot ever be perfect, so you can try to pick more reasonable targets for their reliability and operations as well.

Things to Keep in Mind

SLO-based approaches to reliability have become incredibly popular in recent years, which has actually been detrimental in some ways. Service level objective has become a buzzword: something many leadership teams think they need just because it is an important part of Site Reliability Engineering (SRE), which itself has unfortunately too often devolved into a term that only means what its users want it to mean. Try to avoid falling into this trap. Here are some things about successful SLO-based approaches that you should keep in mind as you progress through this book.

Note

The key question to consider when adopting an SLO-based approach to reliability is, “Are you thinking about your users?” Using the Reliability Stack is mostly just math that helps you do this more efficiently.

SLOs Are Just Data

The absolute most important thing to keep in mind if you adopt the principles of an SLO-based approach is that the ultimate goal is to provide you with a new dataset based on which you can have better discussions and make better decisions. There are no hard-and-fast rules: using SLOs is an approach that helps you think about your service from a new perspective, not a strict ideology. Nothing is ever perfect, and that includes the data SLOs provide you; it’s better than raw telemetry, but don’t expect it to be flawless. SLOs guide you; they don’t demand anything from you.

SLOs Are a Process, Not a Project

A common misconception is that you can just make SLOs an Objective and Key Result (OKR) for your quarterly roadmap and somehow end up at the other end being “done” in some sense. This is not at all how SLO-based approaches to reliability work. The approaches and systems described in this book are different ways of thinking about your service, not merely tasks you can complete. They’re philosophies, not tickets you can distribute for a sprint or two.

Iterate Over Everything

There are no contracts in place with SLOs or any of the things that surround them. This means that you can, and should, change them when needed. Start with just trying to develop some SLIs. Then, once you’ve observed those for a while, figure out if you can pick a meaningful SLO target. Once you have one of those, maybe you can pick a window for your error budget. Except maybe you’ve also realized your SLI isn’t as representative of the view of your users as you had hoped, so now you need to update that. And now that you’ve changed your SLI, you realize you need to pick a different SLO target, which completely negates the error budget window you had picked. And all of this has to be done working hand-in-hand with not just the developers of the service but those that maintain it, those responsible for the product roadmap, the leadership chain, and so forth. This is all fine. It’s a journey, not a destination. The map is not the territory.

The World Will Change

The world is always changing, and you should be prepared to update and change your SLIs and SLOs to take account of new developments. Maybe your users now have different needs; maybe your dependencies have made your service more robust; maybe your dependencies have made your service more brittle. All of these things, and many more, will happen during the lifecycle of a service. Your SLIs and SLOs should evolve in response to such changes.

It’s All About Humans

If you ever find when trying to implement the approaches outlined in this book that the humans involved are frustrated or upset with things, take a step back and reflect on the choices you’ve made so far. Service level objectives are ultimately about happier users, happier engineers, happier product teams, and a happier business. This should always be the goal, not reaching new heights in the number of nines you can append to the end of your SLO target.

Summary

Many of our services today are complex, distributed, and deep. This can make them more difficult to understand, which in turn can make it more difficult to figure out if they’re doing what they’re supposed to be doing. But by taking a step back and putting yourself into your users’ shoes, you can develop systems and adopt approaches that let you focus on things from their perspective. At the same time, you can make sure you’re not overexerting yourself and spending resources on things that don’t actually matter to your users.

An SLO-based approach to service management gives you the great benefit of being able to pick a reasonable target for a service, write that down in a relatively easily consumable way, and run that service toward that target. This might not sound like much, but if you’ve ever tried to do it any other way you’ll know the incredible virtue that underpins simplicity, in service-, organization-, and user-based terms. These are the things an SLO-based approach to reliability can give you.

Measure things from your users’ perspective using SLIs, be reasonable about how often you need to be reliable using SLOs, and use the results of these measurements to help you figure out how and when to become more reliable using error budgets. Those are the basic tenets of the Reliability Stack.
